ECONOMICS 762: 2SLS Stata Example

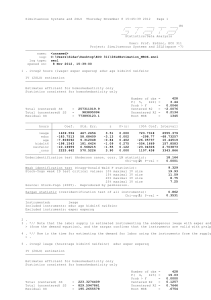

L. Magee
March, 2008

This example uses data in the file 2slseg.dta. It contains 2932 observations from a sample of young adult males in the U.S. in 1976. The variables are:

1. nearc2: =1 if lived near a 2 yr college in 1966
2. nearc4: =1 if lived near a 4 yr college in 1966
3. educ: years of schooling, 1976
4. age: age in years, 1976
5. smsa: =1 if lived in an SMSA, 1976 (SMSA = “Standard Metropolitan Statistical Area”, which basically indicates living in an urban area)
6. south: =1 if lived in the southern U.S., 1976
7. wage: hourly wage in cents, 1976
8. married: =1 if married, 1976

This data set is used in the article “Using Geographic Variation in College Proximity to Estimate the Return to Schooling,” by D. Card (1995), in L.N. Christofides et al. (eds.), Aspects of Labour Market Behaviour: Essays in Honour of John Vanderkamp, and in the textbook Introductory Econometrics: A Modern Approach, second edition, by Jeffrey M. Wooldridge.

The goal is to estimate the percentage effect on the wage of getting an extra year of education, by estimating the coefficient on the EDUC variable in a regression equation with the log of WAGE as the dependent variable, controlling for other factors as follows:

LHS variable: log of WAGE
RHS variables: EDUC, AGE, MARRIED, SMSA

This will be referred to as the wage equation. It is commonly thought that EDUC is correlated with the error term in the wage equation, because that error term includes “unobserved ability”. If ability raises both schooling and wages, OLS will over-estimate the effect of EDUC on the log wage. Instruments are hard to find, though. They need to be uncorrelated with the error term, yet help to predict years of schooling. In this example, information on whether these young men lived near two types of colleges 10 years earlier is used as instruments.
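As a compact reference, the wage equation described above, together with the first-stage regression of educ on all of the exogenous variables that the do file runs, might be written as follows. The coefficient symbols $\beta_j$, $\pi_j$ and the error terms $u_i$, $v_i$ are notation assumed here for illustration; they do not appear in the original handout.

```latex
\begin{align*}
\log(\text{wage}_i) &= \beta_0 + \beta_1\,\text{educ}_i + \beta_2\,\text{age}_i
                       + \beta_3\,\text{married}_i + \beta_4\,\text{smsa}_i + u_i \\
\text{educ}_i       &= \pi_0 + \pi_1\,\text{age}_i + \pi_2\,\text{married}_i
                       + \pi_3\,\text{smsa}_i + \pi_4\,\text{nearc2}_i
                       + \pi_5\,\text{nearc4}_i + v_i
\end{align*}
```

In this notation, the endogeneity concern is that $\operatorname{Cov}(\text{educ}_i, u_i) \neq 0$. The instruments are valid if nearc2 and nearc4 are uncorrelated with $u_i$, and they are relevant if $\pi_4$ and $\pi_5$ are not both zero.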
Here is the do file without comments:

    ******************************************************************************
    ** 2SLS.do : March 2007
    ******************************************************************************
    clear
    capture log using "C:\Documents and Settings\courses\761 and 762\w07\2SLS\2SLS.log", replace
    use "C:\Documents and Settings\courses\761 and 762\w07\2SLS\2SLSeg.dta"
    summarize
    gen lwage=log(wage)
    ** IV regression (2SLS) **
    ivreg lwage age married smsa (educ = nearc2 nearc4)
    ** general version of Hausman test **
    predict ivresid,residuals
    est store ivreg
    reg lwage educ age married smsa
    hausman ivreg .,constant sigmamore df(1)
    ** Wu version of Hausman test **
    quietly reg educ age married smsa nearc2 nearc4
    predict educhat,xb
    reg lwage educ age married smsa educhat
    ** overidentification test **
    quietly reg ivresid age married smsa nearc2 nearc4
    predict explresid,xb
    matrix accum rssmat = explresid,noconstant
    matrix accum tssmat = ivresid,noconstant
    scalar nobs=e(N)
    scalar x2=nobs*rssmat[1,1]/tssmat[1,1]
    scalar pval=1-chi2(1,x2)
    scalar list x2 pval
    log close

Here is the same do file with comments about some of the commands inserted below them:

    ******************************************************************************
    ** 2SLS.do : March 2007
    ******************************************************************************
    clear
    capture log using "C:\Documents and Settings\courses\761 and 762\w07\2SLS\2SLS.log", replace
    use "C:\Documents and Settings\courses\761 and 762\w07\2SLS\2SLSeg.dta"
    summarize
    gen lwage=log(wage)
    ** IV regression (2SLS) **
    ivreg lwage age married smsa (educ = nearc2 nearc4)

This ivreg command computes the 2SLS estimates. The dependent variable is lwage. The regressors that are assumed exogenous are left outside of the parentheses: age married smsa. The regressors that are assumed endogenous are in the parentheses to the left of the equals sign. There’s just one in this example: educ.
In the parentheses to the right of the equals sign are the instrumental variables, which are assumed exogenous and do not appear as regressors in the equation. Here they are nearc2 and nearc4. The key assumption is that proximity to 2 yr and 4 yr colleges in 1966 is not correlated with the error in the wage equation, but does help to explain years of schooling in 1976.

    ** general version of Hausman test **
    predict ivresid,residuals

This post-estimation command stores the 2SLS residuals in a variable that I called ivresid.

    est store ivreg

This post-estimation command stores some of the 2SLS results for later use in a Hausman test.

    reg lwage educ age married smsa

This command estimates the same equation by OLS in order to compute the Hausman test statistic.

    hausman ivreg .,constant sigmamore df(1)

This command computes the Hausman test statistic. The null hypothesis is that the OLS estimator is consistent. If it is not rejected, we probably would prefer to use OLS instead of 2SLS. The option constant is necessary to tell Stata to include the constant term in the comparison of both estimates. The sigmamore option tells Stata to use the same estimate of the variance of the error term for both models. This is desirable here since the error term has the same interpretation in both models. The df(1) option tells Stata that the null distribution has one degree of freedom. Stata was able to figure this out when I left this option out, even though the Hausman test is comparing values of two 5-element (not one-element) vectors. It probably knew this by finding only one non-zero eigenvalue of the 5-by-5 covariance matrix estimate that it calls (V_b-V_B) in the output. It’s safer to impose the d.f. in the hausman command as above.

    ** Wu version of Hausman test **
    quietly reg educ age married smsa nearc2 nearc4

The above OLS regression is done only to get the predicted value of educ to perform the Wu version of the Hausman test as described on p.82 of the Greene text, 5th edition.
To reduce the amount of output in the log file, its output is suppressed by preceding the command with quietly.

    predict educhat,xb
    reg lwage educ age married smsa educhat

This OLS regression takes the original wage equation and adds the OLS predicted values of all of the (suspected) endogenous variables. Here there is only one such variable, educ, whose predicted value is educhat. It was predicted using the full set of exogenous variables. The Wu version of the Hausman test is the standard significance test for the coefficient(s) on these added variables. Since there’s just one here, use a two-sided t-test.

    ** overidentification test **
    quietly reg ivresid age married smsa nearc2 nearc4

The uncentred R-squared of the above regression will be computed below to produce the overidentification test statistic, also known as the Sargan statistic. The dependent variable ivresid is the 2SLS residual vector, saved earlier.

    predict explresid,xb

The predicted values from the regression are saved in order to calculate the uncentred R-squared.

    matrix accum rssmat = explresid,noconstant
    matrix accum tssmat = ivresid,noconstant

There’s probably a neater way to do this, but I used these matrix accum commands with a noconstant option in order to compute two 1-by-1 matrices, rssmat (which holds the sum of squares of explresid) and tssmat (which holds the sum of squares of ivresid).

    scalar nobs=e(N)

e(N) is the sample size, which was automatically stored by the most recent regression. This command stores that value in a scalar variable nobs.

    scalar x2=nobs*rssmat[1,1]/tssmat[1,1]

This command computes the overidentification test statistic, called x2.

    scalar pval=1-chi2(1,x2)

This command computes the P-value using the Stata function chi2(n,x), which computes the area to the left of x under a chi-square distribution with n d.f.

    scalar list x2 pval

This prints out the values of x2 and pval.

    log close

Now the log file:

    . use "C:\Documents and Settings\courses\761 and 762\w07\2SLS\2SLSeg.dta"
    .
    . summarize

        Variable |       Obs        Mean    Std. Dev.       Min        Max
    -------------+--------------------------------------------------------
          nearc2 |      2932     .430764    .4952676          0          1
          nearc4 |      2932    .6828104    .4654613          0          1
            educ |      2932    13.25887    2.682475          1         18
             age |      2932    28.11937    3.134548         24         34
            smsa |      2932    .7060027    .4556684          0          1
    -------------+--------------------------------------------------------
           south |      2932    .3915416    .4881783          0          1
            wage |      2932    577.1872    264.5756        100       2404
         married |      2932    .7141883    .4518772          0          1

    .
    . gen lwage=log(wage)
    .
    . ** IV regression (2SLS) **
    . ivreg lwage age married smsa (educ = nearc2 nearc4)

    Instrumental variables (2SLS) regression

          Source |       SS       df       MS              Number of obs =    2932
    -------------+------------------------------           F(  4,  2927) =  122.71
           Model | -19.3235809     4 -4.83089521           Prob > F      =  0.0000
        Residual |  601.657409  2927  .205554291           R-squared     =       .
    -------------+------------------------------           Adj R-squared =       .
           Total |  582.333829  2931  .198680938           Root MSE      =  .45338

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            educ |   .1386543   .0342091     4.05   0.000     .0715779    .2057307
             age |   .0366522   .0027297    13.43   0.000     .0312999    .0420044
         married |   .1937981   .0201602     9.61   0.000     .1542685    .2333277
            smsa |   .0976942   .0417188     2.34   0.019     .0158931    .1794953
           _cons |   3.184304   .4405519     7.23   0.000     2.320481    4.048127
    ------------------------------------------------------------------------------
    Instrumented:  educ
    Instruments:   age married smsa nearc2 nearc4
    ------------------------------------------------------------------------------

    .
    . ** general version of Hausman test **
    . predict ivresid,residuals
    . est store ivreg
    . reg lwage educ age married smsa

          Source |       SS       df       MS              Number of obs =    2932
    -------------+------------------------------           F(  4,  2927) =  243.70
           Model |  145.487691     4  36.3719228           Prob > F      =  0.0000
        Residual |  436.846137  2927  .149247057           R-squared     =  0.2498
    -------------+------------------------------           Adj R-squared =  0.2488
           Total |  582.333829  2931  .198680938           Root MSE      =  .38633

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            educ |   .0485886   .0027103    17.93   0.000     .0432742    .0539029
             age |   .0364856   .0023253    15.69   0.000     .0319262     .041045
         married |   .1759239   .0161841    10.87   0.000     .1441906    .2076572
            smsa |   .1962286   .0159841    12.28   0.000     .1648874    .2275698
           _cons |   4.326357    .074032    58.44   0.000     4.181197    4.471517
    ------------------------------------------------------------------------------

    . hausman ivreg .,constant sigmamore df(1)

    Note: the rank of the differenced variance matrix (1) does not equal the number of
          coefficients being tested (5); be sure this is what you expect, or there may be
          problems computing the test. Examine the output of your estimators for anything
          unexpected and possibly consider scaling your variables so that the coefficients
          are on a similar scale.

                     ---- Coefficients ----
                 |      (b)          (B)            (b-B)     sqrt(diag(V_b-V_B))
                 |     ivreg          .          Difference          S.E.
    -------------+----------------------------------------------------------------
            educ |    .1386543     .0485886        .0900657        .0290232
             age |    .0366522     .0364856        .0001666        .0000537
         married |    .1937981     .1759239        .0178742        .0057599
            smsa |    .0976942     .1962286       -.0985344        .0317522
           _cons |    3.184304     4.326357       -1.142053        .3680211
    ------------------------------------------------------------------------------
                    b = consistent under Ho and Ha; obtained from ivreg
                    B = inconsistent under Ha, efficient under Ho; obtained from regress

        Test:  Ho:  difference in coefficients not systematic

                      chi2(1) = (b-B)'[(V_b-V_B)^(-1)](b-B)
                              =        9.63
                    Prob>chi2 =      0.0019
                    (V_b-V_B is not positive definite)

    .
    . ** Wu version of Hausman test **
    . quietly reg educ age married smsa nearc2 nearc4
    . predict educhat,xb
    . reg lwage educ age married smsa educhat

          Source |       SS       df       MS              Number of obs =    2932
    -------------+------------------------------           F(  5,  2926) =  197.47
           Model |  146.924944     5  29.3849888           Prob > F      =  0.0000
        Residual |  435.408884  2926  .148806864           R-squared     =  0.2523
    -------------+------------------------------           Adj R-squared =  0.2510
           Total |  582.333829  2931  .198680938           Root MSE      =  .38575

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            educ |   .0478031   .0027181    17.59   0.000     .0424736    .0531327
             age |   .0366522   .0023225    15.78   0.000     .0320982    .0412061
         married |   .1937981   .0171531    11.30   0.000     .1601647    .2274315
            smsa |   .0976942    .035496     2.75   0.006     .0280945    .1672939
         educhat |   .0908512   .0292331     3.11   0.002     .0335316    .1481708
           _cons |   3.184304   .3748395     8.50   0.000     2.449328     3.91928
    ------------------------------------------------------------------------------

    .
    . ** overidentification test **
    . quietly reg ivresid age married smsa nearc2 nearc4
    . predict explresid,xb
    . matrix accum rssmat = explresid,noconstant
    (obs=2932)
    . matrix accum tssmat = ivresid,noconstant
    (obs=2932)
    . scalar nobs=e(N)
    . scalar x2=nobs*rssmat[1,1]/tssmat[1,1]
    . scalar pval=1-chi2(1,x2)
    . scalar list x2 pval
            x2 =  5.9600396
          pval =  .01463371
    .
    . log close
           log:  C:\Documents and Settings\courses\761 and 762\w07\2SLS\2SLS.log
      log type:  text
     closed on:  13 Mar 2007, 16:28:25
    ------------------------------------------------------------------------------

The two Hausman tests give essentially the same information. The general version is in chi-square form, and equals 9.63, while the Wu version is a t-statistic, t = 3.11, which is approximately the square root of 9.63. They have the same P-value of .002, indicating rejection of the consistency of OLS, providing support for using 2SLS.

The overidentification test has a P-value of .014, which is significant at 5% but not 1%. So at the 5% level we would reject the hypothesis that the instrumental variables nearc2 and nearc4 are exogenous. If no other instrumental variables are available, it is hard to know what to do about this. We could drop one of the two instruments, but we would not know if that solves the problem because we then have no overidentification restrictions left to test.
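For readers who want the overidentification statistic in formula form, the calculation in the do file can be summarized as below. This is a sketch in notation assumed here, not taken from the handout: $\hat{e}$ stands for the 2SLS residual vector (ivresid), $Z$ for the matrix of all exogenous variables (constant, age, married, smsa, nearc2, nearc4), $N$ for the sample size, $q$ for the number of excluded instruments and $k$ for the number of endogenous regressors.

```latex
\[
x2 \;=\; N\,R_u^2 \;=\; N\,\frac{\hat{e}'P_Z\,\hat{e}}{\hat{e}'\hat{e}}
\;\overset{a}{\sim}\; \chi^2(q-k),
\qquad P_Z = Z(Z'Z)^{-1}Z'
\]
```

The numerator $\hat{e}'P_Z\hat{e}$ is the sum of squares of explresid (stored in rssmat) and the denominator $\hat{e}'\hat{e}$ is the sum of squares of ivresid (stored in tssmat). With $q = 2$ instruments (nearc2, nearc4) and $k = 1$ endogenous regressor (educ), there is $q - k = 1$ overidentifying restriction, which is why the P-value is computed from a chi-square distribution with one degree of freedom.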