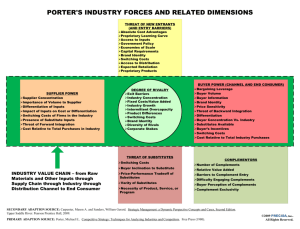

1 IDENTIFICATION AND REVIEW OF SENSITIVITY ANALYSIS
