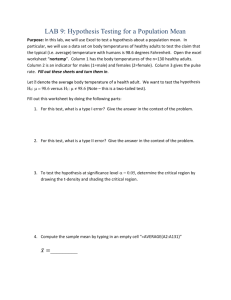

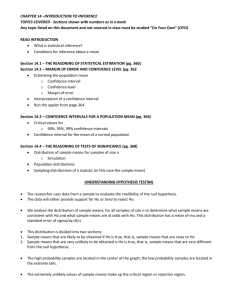

This chapter introduces the fundamental statistical ideas that
