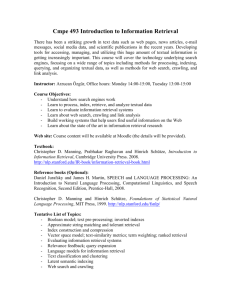

LIBR 558: Information Retrieval Systems: Structures and Algorithms

Course Syllabus

Program: Master of Library and Information Studies
Year: Fall session 2013-2014, Term 1
Course Schedule: Wednesday, 2:00 p.m. – 4:50 p.m.
Location: IBLC 158
Instructor: Edie Rasmussen
Office location: Room 478, IBLC
Office phone: (604) 827-5486
Office hours: Monday, 10:30 a.m. – 11:30 a.m.; Wednesday, 10:30 a.m. – 11:30 a.m.
Email address: edie.rasmussen@ubc.ca
Course website: See your Connect account.

Course Goal:
To provide an introduction to the methods used in the storage and retrieval of textual, pictorial, graphic, and voice data.

Course Objectives:
- understand the complexity of information retrieval;
- understand the functions of an information retrieval system;
- be able to understand and measure the contribution of the components of an information retrieval system to its performance;
- be able to isolate the factors which optimize the information retrieval process;
- be aware of current issues in information retrieval, including search engines.

Course Topics:
- Documents and queries
- Information retrieval models
- Evaluating information retrieval systems
- Implementing information retrieval systems
- Improving effectiveness of information retrieval systems
- Multimedia information retrieval systems
- Information retrieval on the WWW
- Users and information retrieval

Prerequisites:
- MLIS and Dual MAS/MLIS: completion of the MLIS core
- MAS: completion of MAS core and permission of the SLAIS Graduate Adviser
- ARST/LIBR 500, LIBR 501

Format of the course:
Lectures, journal club, presentations, guest speakers, lab sessions

Recommended and Required Readings:
Weekly readings will draw heavily on several recently published monographs:
- R. Baeza-Yates and B. Ribeiro-Neto (2011). Modern Information Retrieval: the Concepts and Technology Behind Search. 2nd ed. Harlow, England: Addison-Wesley. (MIR)
- S. Büttcher, C.L.A. Clarke, and G.V. Cormack (2010). Information Retrieval: Implementing and Evaluating Search Engines.
Cambridge, MA: MIT Press.
- W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010. (SEIRIP)
- C.D. Manning, P. Raghavan, and H. Schütze, Introduction to Information Retrieval. Cambridge: Cambridge University Press, 2008. (IIR)

Manning et al., and several chapters of Croft et al. and Büttcher et al., are available on the publishers' web sites as noted in the readings. Copies are also available on reserve.

Readings by Week:

Week 1:
- J. Allan, B. Croft, A. Moffat and M. Sanderson (2012). Frontiers, challenges and opportunities for information retrieval. ACM SIGIR Forum 46(1): 2-32 (June 2012). Available at http://dl.acm.org/citation.cfm?id=2215678
- N.J. Belkin (2008). Some(what) grand challenges for information retrieval. Keynote lecture, European Conference on Information Retrieval, Glasgow, Scotland, 31 March 2008. Available at http://www.sigir.org/forum/2008J/2008j-sigirforum-belkin.pdf
- Chapter 1, Search engines and information retrieval. In W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010. Pp. 1-12.
- N. Fuhr (2012). Salton Award Lecture: Information Retrieval as Engineering Science. ACM SIGIR Forum 46(2): 19-28. Available at http://dl.acm.org/citation.cfm?id=2422259
- J.-M. Griffiths and D.W. King (2002). US information retrieval system evolution and evaluation (1945-1975). IEEE Annals of the History of Computing 24(3): 35-55. Available at http://ieeexplore.ieee.org/xpl/articleDetails.jsp?arnumber=1024761

Week 2:
- Chapter 2: The term vocabulary and postings lists. In C.D. Manning, P. Raghavan, and H. Schütze, Introduction to Information Retrieval. Cambridge: Cambridge University Press, 2008. Pp. 18-44. Available at http://nlp.stanford.edu/IR-book/pdf/02voc.pdf
- Chapter 4, Processing Text. In W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010.
Available at http://www.pearsonhighered.com/croft1epreview/pdf/chap4.pdf

Week 3:
- Chapter 1: Boolean retrieval. In C.D. Manning, P. Raghavan, and H. Schütze, Introduction to Information Retrieval. Cambridge: Cambridge University Press, 2008. Pp. 1-17. Available at http://nlp.stanford.edu/IR-book/pdf/01bool.pdf
- Chapter 2, Architecture of a search engine. In W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010. Pp. 13-29.
- Chapter 7, Retrieval models. In W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010. Pp. 233-296.

Week 4:
- F. Crestani, M. Lalmas, C.J. Van Rijsbergen, and I. Campbell (1998). "Is This Document Relevant? . . . Probably": A Survey of Probabilistic Models in Information Retrieval. ACM Computing Surveys 30(4): 528-552. Available at http://tinediey.cc/i7el1
- Lemur Project Tutorials: Starting Out: Overview: Language Models and Information Retrieval. Available at http://tinyurl.com/2a5gqte
- X. Liu and W.B. Croft (2005). Statistical language modeling for information retrieval. Annual Review of Information Science and Technology 39(1): 1-31.

Week 5:
- P. Borlund (2003). The concept of relevance in IR. Journal of the American Society for Information Science and Technology 54(10): 913-925.
- Chapter 8, Evaluating search engines. In W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010. Available at http://www.pearsonhighered.com/croft1epreview/pdf/chap8.pdf
- Chapter 8, Evaluation in information retrieval. In C.D. Manning, P. Raghavan and H. Schütze, Introduction to Information Retrieval. Cambridge: Cambridge University Press, 2008. Available at http://nlp.stanford.edu/IR-book/pdf/irbookonlinereading.pdf
- D. Harman (2011). Information Retrieval Evaluation. Synthesis Lectures on Information Concepts, Retrieval, and Services #19. Morgan & Claypool Publishers. (107 p.) (Browse)
- S.
Robertson (2008). On the history of evaluation in IR. Journal of Information Science 34(4): 439-456. Available at http://jis.sagepub.com/cgi/content/abstract/34/4/439
- M. Sanderson (2010). Test collection based evaluation of information retrieval systems. Foundations and Trends in Information Retrieval 4(4): 247-375. (Browse)
- E. Voorhees (2007). TREC: Continuing information retrieval's tradition of experimentation. Communications of the ACM 50(11): 51-54. Available at http://portal.acm.org/citation.cfm?doid=1297797.1297822

Week 6:
- Chapter 5, Relevance feedback and query expansion. In R. Baeza-Yates and B. Ribeiro-Neto, Modern Information Retrieval. Harlow, England: Addison-Wesley, 2011. Pp. 177-202.
- Chapter 9, Relevance feedback and query expansion. In C.D. Manning, P. Raghavan and H. Schütze, Introduction to Information Retrieval. Cambridge: Cambridge University Press, 2008. Pp. 162-177. Available at http://nlp.stanford.edu/IR-book/pdf/09expand.pdf
- G. Salton and C. Buckley (1990). Improving retrieval performance by relevance feedback. Journal of the American Society for Information Science 41: 288-297.

Week 7:
- Chapter 2, User Interfaces for Search (M. Hearst). In R. Baeza-Yates and B. Ribeiro-Neto, Modern Information Retrieval. Harlow, England: Addison-Wesley, 2011. Pp. 21-55.
- Chapter 5: The User-oriented IR research approach. In P. Ingwersen (1992). Information Retrieval Interaction. London: Taylor Graham. Full text of book is available at http://vip.db.dk/pi/iri/index.htm
- B.J. Jansen (2009). Understanding User-Web Interactions via Web Analytics. Synthesis Lectures on Information Concepts, Retrieval, and Services #6. Morgan & Claypool.
- D. Kelly (2009). Methods for Evaluating Interactive Information Retrieval Systems with Users. Foundations and Trends in Information Retrieval 3(1-2): 1-224. (Browse)

Week 8:
- Chapter 19, Web search basics; Chapter 20, Web crawling and indexes. In C.D. Manning, P. Raghavan and H. Schütze, Introduction to Information Retrieval.
Cambridge: Cambridge University Press, 2008. Available at http://nlp.stanford.edu/IR-book/pdf/irbookonlinereading.pdf
- M. Franceschet (2011). PageRank: Standing on the shoulders of giants. Communications of the ACM 54(6): 92-101. Available at http://cacm.acm.org/magazines/2011/6/108660-pagerank-standing-on-the-shoulders-of-giants/fulltext
- P. Vogl and M. Barrett (2010). Regulating the information gatekeepers. Communications of the ACM 53(11): 67-72. Available at http://cacm.acm.org/magazines/2010/11/100623-regulating-the-information-gatekeepers/fulltext
- I.H. Witten (2008). Searching… in a Web. Journal of Universal Computer Science 14(10): 1739-1762. Available at http://www.cs.waikato.ac.nz/~ihw/papers/08-IHWSearchinginWeb.pdf

Week 9:
- Chapter 14, Multimedia Information Retrieval (D. Ponceléon and M. Slaney). In R. Baeza-Yates and B. Ribeiro-Neto, Modern Information Retrieval. Harlow, England: Addison-Wesley, 2011. Pp. 587-639.
- R. Datta, D. Joshi, J. Li and J.Z. Wang (2008). Image retrieval: ideas, influences and trends of the new age. ACM Computing Surveys 40(2): 5.1-5.60.
- P. Enser (2008). The evolution of visual information retrieval. Journal of Information Science 34: 531-546. Available at http://jis.sagepub.com/cgi/content/abstract/34/4/531
- M.S. Lew, N. Sebe, C. Djeraba, and R. Jain (2006). Content-based multimedia information retrieval: state of the art and challenges. ACM Transactions on Multimedia Computing, Communications, and Applications 2(1): 1-19. Available at http://www.liacs.nl/~mlew/mir.survey16b.pdf
- S. Rüger (2010). Multimedia Information Retrieval. Synthesis Lectures on Information Concepts, Retrieval, and Services #10. Morgan & Claypool Publishers. (157 pp.) (Chapters 1 and 2)

Week 10:
- Mid-term Examination (Take-Home)

Week 11:
- Chapter 10, Social search. In W.B. Croft, D. Metzler, T. Strohman, Search Engines: Information Retrieval in Practice. Boston: Addison Wesley, 2010. Pp. 397-450.
- D. Das and A.F.T. Martins (2007). A survey on automatic text summarization.
Language Technologies Institute, Carnegie Mellon University. Available at www.cs.cmu.edu/~nasmith/LS2/dasmartins.07.pdf
- W. Fan, L. Wallace, S. Rich, and Z. Zhang (2006). Tapping the power of text mining. Communications of the ACM 49(9): 76-82. Available at http://dl.acm.org/citation.cfm?id=1151032
- K.L. Kroeker (2011). Weighing Watson's impact. Communications of the ACM 54(7): 13-15. Available at http://cacm.acm.org/magazines/2011/7/109887-weighing-watsons-impact/fulltext
- D. Roussinov, F. Weiguo and J. Robles-Flores (2008). Beyond keywords: automated question answering on the Web. Communications of the ACM 51(9): 60-65. Available at http://portal.acm.org/citation.cfm?id=1378743

Week 12:
- J. Zobel and A. Moffat (2006). Inverted files for text search engines. ACM Computing Surveys 38(2): 1-56.

Week 13:
- Wrap-up

Recommended:
A few more basic textbooks, older but possibly useful for explanations of some topics:
- G.G. Chowdhury (1999). Introduction to Modern Information Retrieval. London: Library Association.
- W.B. Frakes and R. Baeza-Yates (eds.) (1992). Information Retrieval: Data Structures & Algorithms. Englewood Cliffs, NJ: Prentice-Hall.
- R.R. Korfhage (1997). Information Storage and Retrieval. New York: John Wiley.
- I.H. Witten, A. Moffat, and T.C. Bell (1999). Managing Gigabytes: Compressing and Indexing Documents and Images. 2nd ed. San Francisco, CA: Morgan Kaufmann.

And two series which have excellent chapter-length (or longer) discussions of specific topics within information retrieval:
- Foundations and Trends in Information Retrieval (D. Oard and M. Sanderson, Editors-in-Chief). NOW Publishing. http://www.nowpublishers.com/journals/Foundations%20and%20Trends%C2%AE%20in%20Information%20Retrieval/4#volumes
- Synthesis Lectures on Information Concepts, Retrieval and Services. (G.
Marchionini, Editor). Morgan-Claypool. http://www.morganclaypool.com/toc/icr/1/1

Course Assignments and Weight in relation to final course mark:
- A short paper providing the context for a journal club discussion; leading the discussion: 20%
- Midterm Exam (Take-Home): 30%
- Project (Group optional): Implement an information retrieval software package and evaluate it using a standard text collection and metrics; present results: 40%
- Participation: 10%

Course Schedule:

Week 1, September 4
Topic: Introduction to course; Introduction to information retrieval; Goals of IR; History of IR; Recent developments
Readings/Assignment: Allan (2012); Belkin (2008); Fuhr (2012); Griffiths (2002); SEIRIP, Ch. 1

Week 2, September 11
Topic: Representing document content: Document processing; Statistics of text; Text processing (tokenizing, stopwords, stemming); Index term weighting; Weights and metrics
Readings/Assignment: IIR, Ch. 2; SEIRIP, Ch. 4

Week 3, TBD
Topic: Information retrieval models I: Classical models; Boolean models; Ranked output models
Readings/Assignment: IIR, Ch. 1; SEIRIP, Chs. 2, 7

Week 4, TBD
Topic: Information retrieval models II: Newer models; Language models; Latent semantic indexing; Learning to rank
Readings/Assignment: Crestani (1998); Lemur; Liu (2005)

Week 5, October 2
Topic: Measuring effectiveness of IR systems: Laboratory models; Evaluation measures; Role of TREC; Interactive evaluation; User analytics
Readings/Assignment: Borlund (2003); IIR, Ch. 8; SEIRIP, Ch. 8; Harman (2011); Robertson (2008); Sanderson (2010); Voorhees (2007)

Week 6, October 9
Topic: Improving effectiveness of IR systems: Query expansion; Relevance feedback; Augmented search (e.g. Wikification); Wisdom of crowds; Recommender systems; Tagging and folksonomies
Readings/Assignment: IIR, Ch. 9; MIR, Ch. 5; Salton (1990)

Week 7, October 16
Topic: Users and information systems: Interactive IR; Studying users; User interfaces; Information visualization
Readings/Assignment: IIIR, Ch. 5; Ingwersen (1992); Jansen (2009); Kelly (2009); MIR, Ch. 2

Week 8, October 23
Topic: IR Applications I: Web search engines: Web IR; Crawlers and indexers; Search engines; Webometrics; Adversarial search; Computational advertising
Readings/Assignment: IIR, Chs. 19, 20; Franceschet (2011); Vogl (2010); Witten (2008)

Week 9, October 30
Topic: IR Applications II: Multimedia IR: Image retrieval; Video retrieval; Audio and music retrieval
Readings/Assignment: Datta (2008); Enser (2008); Lew (2006); MIR, Ch. 14; Rüger (2010)

Week 10, November 6
Topic: Mid-term Examination (Take-Home)

Week 11, November 13
Topic: IR Applications III: Working with Text: Text mining; Text summarization; Text categorization; Question-answering systems
Readings/Assignment: Das (2007); Fan (2006); Kroeker (2011); Roussinov (2008); SEIRIP, Ch. 10

Week 12, November 20
Topic: Implementing IR systems: History; Technical issues; Reliability; Scalability
Readings/Assignment: Zobel (2006)

Week 13, November 27
Topic: Course summary; Project/paper presentations
Readings/Assignment: Final papers/projects

Course Policies:

Attendance:
The calendar states: "Regular attendance is expected of students in all their classes (including lectures, laboratories, tutorials, seminars, etc.). Students who neglect their academic work and assignments may be excluded from the final examinations. Students who are unavoidably absent because of illness or disability should report to their instructors on return to classes."

Regular on-time attendance in class is an important and required part of this course. It is your responsibility to obtain from one of the other class members any handouts distributed and notes taken during sessions you miss. Sudden unexpected problems arise for everyone (including myself), but I expect you to attend and be on time for class. Absences or repeated tardiness will result in a lower course mark or in a request from me that you drop the course. The size of an attendance-related course mark penalty will be determined by the instructor. If you ARE late for class (for whatever reason), please come into the classroom rather than waiting for the break.

Evaluation:
All assignments will be marked using the evaluative criteria given on the SLAIS web site.
The grades are based on submission of the assignment in accordance with the due date. Decisions on extensions will be made on a case-by-case basis and extensions may result in a grading penalty at the discretion of the instructor. Please note that within these guidelines, a B+ mark is given for "Work demonstrating diligence and effort above basic requirements."

Written & Spoken English Requirement:
Written and spoken work may receive a lower mark if it is, in the opinion of the instructor, deficient in English.

Access & Diversity:
Access & Diversity works with the University to create an inclusive living and learning environment in which all students can thrive. The University accommodates students with disabilities who have registered with the Access and Diversity unit: [http://www.students.ubc.ca/access/drc.cfm]. You must register with the Disability Resource Centre to be granted special accommodations for any on-going conditions.

Religious Accommodation:
The University accommodates students whose religious obligations conflict with attendance, submitting assignments, or completing scheduled tests and examinations. Please let your instructor know in advance, preferably in the first week of class, if you will require any accommodation on these grounds. Students who plan to be absent for varsity athletics, family obligations, or other similar commitments cannot assume they will be accommodated, and should discuss their commitments with the instructor before the course drop date. UBC policy on Religious Holidays: http://www.universitycounsel.ubc.ca/policies/policy65.pdf

Academic Integrity:

Plagiarism
The Faculty of Arts considers plagiarism to be the most serious academic offence that a student can commit. Regardless of whether or not it was committed intentionally, plagiarism has serious academic consequences and can result in expulsion from the university. Plagiarism involves the improper use of somebody else's words or ideas in one's work.
It is your responsibility to make sure you fully understand what plagiarism is. Many students who think they understand plagiarism do in fact commit what UBC calls "reckless plagiarism." Below is an excerpt on reckless plagiarism from the UBC Faculty of Arts leaflet, "Plagiarism Avoided: Taking Responsibility for Your Work" (http://www.arts.ubc.ca/artsstudents/plagiarism-avoided.html).

"The bulk of plagiarism falls into this category. Reckless plagiarism is often the result of careless research, poor time management, and a lack of confidence in your own ability to think critically. Examples of reckless plagiarism include:
- Taking phrases, sentences, paragraphs, or statistical findings from a variety of sources and piecing them together into an essay (piecemeal plagiarism);
- Taking the words of another author and failing to note clearly that they are not your own. In other words, you have not put a direct quotation within quotation marks;
- Using statistical findings without acknowledging your source;
- Taking another author's idea, without your own critical analysis, and failing to acknowledge that this idea is not yours;
- Paraphrasing (i.e. rewording or rearranging words so that your work resembles, but does not copy, the original) without acknowledging your source;
- Using footnotes or material quoted in other sources as if they were the results of your own research; and
- Submitting a piece of work with inaccurate text references, sloppy footnotes, or incomplete source (bibliographic) information."

Bear in mind that this is only one example of the different forms of plagiarism. Before preparing their written assignments, students are strongly encouraged to familiarize themselves with the following source on plagiarism: the Academic Integrity Resource Centre, http://help.library.ubc.ca/researching/academic-integrity. Additional information is available on the SLAIS Student Portal, http://connect.ubc.ca.
If after reading these materials you still are unsure about how to properly use sources in your work, please ask me for clarification.

Students are held responsible for knowing and following all University regulations regarding academic dishonesty. If a student does not know how to properly cite a source or what constitutes proper use of a source, it is the student's personal responsibility to obtain the needed information and to apply it within University guidelines and policies. If evidence of academic dishonesty is found in a course assignment, previously submitted work in this course may be reviewed for possible academic dishonesty and grades modified as appropriate. UBC policy requires that all suspected cases of academic dishonesty must be forwarded to the Dean for possible action.

Additional course information:

Use of Connect:
Connect will be used for discussion of the readings and to communicate any special announcements, clarifications on assignments, etc. If you have general comments or queries, use the discussion list; if you have individual concerns, please email me at edie.rasmussen@ubc.ca, call or visit during office hours. Note that if your query is of general concern, I may address it on the course list.

Use of Dedicated Computer:
The computer closest to the door in the Kitimat Lab is available for class projects related to IR evaluation; it has the appropriate software and datasets for our use. Note that the software used is Open Source and you can load it on your own machines, but our license for the data does not allow me to distribute it freely.