CPU & Memory

I think there is a world market for maybe five computers!

To err is human, but in order to mess up big time you need a computer!


Dr Alan Hind

School of Computing

You can never have too much memory!

GIGO – Garbage In, Garbage Out

There is no reason that anyone in the right state of mind will want a computer in their home.

1

What is a Computer?

• It wasn’t until the early 1940s that electrical devices were first referred to as computers.

• Over the years, a rough definition of a computer has evolved to this:

– It must take some sort of input.

– It must produce some sort of output.

– It must process information/data.

– It must have some sort of memory/storage.

– It must control what it does.

2

The Stored Program Computer

• This is the universal model for conventional computing, developed independently by John von Neumann and Alan Turing.

• It separates the hardware from the program and the data it works on, both of which are held in memory.

(Diagram: Input Devices, CPU, Output Devices and Memory)

3

A Typical Desktop Computer

The main variations over the last few years have been in control devices (mouse/trackball/gamepad) and memory storage (USB sticks/CD/DVD/Blu-ray).

4

These ALL contain Computers

5

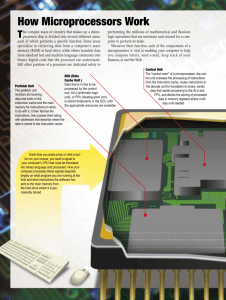

The CPU

• At the heart of any computer you will find a component called a processor.

• More formally, it is described as a Central Processing Unit, or CPU.

• Today’s processors are constructed as a single electronic circuit – called an Integrated Circuit (IC) – on a single chip of silicon.

• Hence the names computer chip or microprocessor.

• What we often call a computer these days is more accurately a microprocessor-based computer system or microcomputer.

6

The Simplest Computer

• What is the smallest number of components we need to build a simple computer?

– Processor

• to process information and to control the whole system.

– Memory

• for storing data and instructions.

– Input device(s)

• to enter data into the system: a keyboard, say.

– Output device(s)

• usually a monitor so we can see what our computer is up to.

• Together, input and output devices are known as I/O.

7

Even Simpler

• In fact, a computer can happily work without the I/O devices (though not very usefully), but it does need three other vital components.

– Power

• From a power supply which converts mains AC at 240 Volts to 5 Volts DC or less.

– Clock signal

• To coordinate everything – over the years we moved from kHz through MHz to GHz (clock speed f = 1/T, where T is the period of one clock cycle).

– Bus (plural buses)

• A set of parallel communication connections.

8

A Simple Computer

• What does it do?

• Fetch an instruction from memory and execute it.

• … then do it again, and again, and again … ad infinitum …

• This is the Fetch-execute cycle.

• When it’s first switched on/powered up, the processor fetches the instruction from the first location in the memory, unless designed and configured to do otherwise.

• N.B. This is location zero, not location one.

9

Fetch-Execute Cycle

• How can the cycle be sped up?

– Note that there are at least two limiting factors influencing its performance.

• CPU execution rate of instructions.

• Read/write access to the system memory.

• The so-called von Neumann bottleneck.

10

Going Faster!

• Increase the Clock Speed.

– Decrease the time period (T) for each cycle (f = 1/T).

– 1 MHz in 1980, 1 GHz in 2000, 7.03 GHz in 2013.

• This has been achieved through:

– An increase in VLSI* density using ever smaller electronic devices.

– Advanced multi-chip modular packaging.

– Faster memory systems.

(*VLSI – Very Large-Scale Integrated circuit)

11

Going Even Faster!

• Increase the instruction size/complexity

– I.e. increase the amount of work done per cycle

• More data at a time.

• More complex instructions.

• More specialised instructions.

• This hasn’t been entirely successful, as the added complexity limited the clock speed, hence the move to RISC (Reduced Instruction Set Computer architecture).

• The approach above is then termed CISC (Complex Instruction Set Computer architecture).

12

Going Even More Faster!

• Parallelism!

– Have more than one fetch-execute cycle running at a time.

– Turns out to be tricky, as most problems have parts that are inherently sequential*.

– This has led eventually to multicore processors though.

(*Look up Amdahl’s Law)

13

Going Even More Faster Still!

• Pipelining & Superscalar

– Pipelining

• involves using special circuitry to allow the fetch-execute cycle to be streamlined into something like an assembly line.

• E.g. Instruction Fetch (IF), Instruction Decode (ID), Data Fetch (DF), Instruction Execution (IE), Store Result (SR).

(Pipeline diagram: instructions 0 to 3 each pass through the five stages, with a new instruction entering the pipeline every clock cycle.)

Cycle 1: IF 0

Cycle 2: ID 0, IF 1

Cycle 3: DF 0, ID 1, IF 2

Cycle 4: IE 0, DF 1, ID 2, IF 3

Cycle 5: SR 0, IE 1, DF 2, ID 3

Cycle 6: SR 1, IE 2, DF 3

Cycle 7: SR 2, IE 3

Cycle 8: SR 3

– The Superscalar approach

• uses separate specialised functional units for different instructions.

• E.g. Mathematics, graphics, communications.

14

Success?

• These techniques have been used concurrently, along with advances in VLSI technology, to speed up processor operation.

– Smaller transistors → Increased clock speed.

– Increasing instruction complexity (CISC) → More power.

– Decreasing instruction complexity (RISC) → Faster clock speed.

– Parallelism → Distributed and multicore processors.

– Pipelining/Superscalar → Greater integration → More power.

• Along with this, the advances have given us another opportunity.

– Smaller transistors → Reduced power consumption.

– Greater integration → More power on one chip.

– Hence, mobile, hand-held, battery-powered computing devices.

15

Multicores

• Parallelism is hard to do within programs or within an operating system – Trust me, I’m a doctor and did my PhD in this area!

• But, whole programs can run on separate processors (cores) quite nicely as long as they don’t need to talk to each other – much.

• The problems relate to Amdahl’s law – again.

Cores | Name

1 | single-core

2 | dual-core

3 | tri-core

4 | quad-core

5 | penta-core

6 | hexa-core

7 | hepta-core

8 | octa-core

9 | nona-core

10 | deca-core

16

Octa-core Task Manager

17

Moore’s Law

• An observation was made in 1965 by Gordon Moore, co-founder of Intel.

– “The number of transistors per square inch on ICs has doubled every year since the integrated circuit was invented.”

• Moore predicted that this trend would continue for the foreseeable future.

• In subsequent years, the pace slowed down a bit, but chip density/complexity has doubled approximately every two years, and this is the current definition of Moore's Law.

• Most experts expect Moore's Law to hold for at least another two decades.

18

Moore’s Law in action

19

Gordon Moore on Moore’s Law

20

Tech Joke Time

21

Memory Hierarchy

• Memory devices are distinguished by:

– Access time

– Capacity

– Volatility

– Locality

– Cost

• Fast memory is expensive, even in small amounts.

• Large-capacity memory is relatively slow.

22

Speed Vs. Volatility

• A memory that is fast enough not to keep the CPU waiting during the fetch-execute cycle:

– has to be on the same chip (i.e. very close) …

– but the memory circuitry takes up lots of silicon real estate …

– so it is relatively expensive …

– and not very big! (kilobytes, or a few megabytes at most)

• Modern processors have registers and cache memory on-chip with the processor.

• However, this memory is also volatile – the contents are lost at shutdown.

23

Immediate Vs. Secondary

• So, we have two categories of memory device:

– Immediate Access Storage (fast, expensive, volatile)

• Registers and cache based on SRAM.

• Main memory based on DRAM.

– Secondary Access Storage (slower, cheaper, non-volatile)

• Internal and external hard disks based on magnetic disks.

• USB memory sticks based on EEPROM (flash memory).

• Optical disks: CD, DVD and Blu-ray.

• SRAM – Static Random Access Memory – uses a flip-flop circuit of 6 transistors per bit – fast to read/write – large silicon area.

• DRAM – Dynamic Random Access Memory – uses one capacitor and one transistor per bit – slower to read/write – needs refreshing – smaller silicon area.

24

Effect on Computer Operation

• Booting up a computer takes time, partly because the program code and data are moved (loaded) from slower secondary storage (disk) to faster immediate access storage (main memory).

• Programs being executed and data currently being processed are stored in immediate access storage.

– If it’s not in a register (<1 ns) then it’s in the cache (<5 ns); if it’s not in the cache it’s in main memory (<50 ns); if it’s not there then it’s out on the hard disk (several ms) etc.

• Everything else is in secondary storage.

25

Virtual Memory

• Modern operating systems (OS) are too big to fit completely in the computer’s main memory (even if you have 4 GB installed).

• So the computer pretends it has more than it does by creating a Virtual Memory space on the hard disk.

– If you want to use an OS function not loaded in main memory, then the computer has to get it from the virtual memory on the hard disk.

• Modern applications have also become steadily larger and more sophisticated.

– The more applications you run, the more memory (real and virtual) you use, and the more likely the computer is to spend a great deal of time swapping things between main memory and the hard disk.

– A major culprit is opening lots of different web pages in separate windows (or tabs), plus all that browser history you haven’t deleted for a while.

– The perfect storm is when you load lots of applications on a computer whose hard disk is almost full – things start well and then … slowly … grind … to … a … … …

26

Cache Memory

• Cache memory is all about making the whole system go faster!

• Access is faster than main store memory (DRAM) as it is local and uses SRAM. It is limited in size partly due to cost.

• Most processors have part of the cache memory on-chip: this is called on-die cache. Off-die cache is located in other SRAM chips.

• Cache is also often organised as two levels for speed considerations. Level-1 cache is smaller in capacity but faster than Level-2.

• Cache memory is used to pre-fetch program instructions and data before the processor needs them. Sophisticated algorithms are needed to "guess" which instructions or data will be needed next.

• It works independently of all the other memory systems and sits between the processor and main store memory.

27

Memory cost Vs. seismic activity

28

Tech Joke Time

29

Inside a Simple CPU

• If we look inside the simplest CPU, we start to get an insight into its fetch cycle using Register Transfer Language (RTL).

– [MAR] ← [PC]

– [PC] ← [PC] + 1

– [MBR] ← [MS([MAR])]

– [IR] ← [MBR]

– CU ← [IR(op-code)]

• Key

– [ ] indicates "the contents of".

– The arrow (←) indicates the direction of the transfer.

– ( ) indicates “given by the”.

– Copies are non-destructive to the source.

– PC = Program Counter, MAR = Memory Address Register, MBR = Memory Buffer Register, IR = Instruction Register, MS = Main Store, CU = Control Unit.

– The first line reads as:

• Copy the contents of the Program Counter into the Memory Address Register.

30

The Execute Cycle

• This depends on the instruction - obviously.

• Let’s use the simplest single address instruction format.

– E.g. ADD X ; add the contents of memory

; location X to the accumulator.

– Here’s the format: op-code | operand

– where the op-code is a binary number indicating the operation to be carried out (e.g. ADD) and the operand is another binary number with the data or the address of the data.

31

Execute the ADD

• The overall effect of the execution of the instruction ADD X in RTL is:

– [A] ← [A] + [MS(X)]

• The execute sequence for ADD X is:

– [MAR] ← [IR(address)]

– [MBR] ← [MS([MAR])]

– ALU ← [MBR] + [A]

– [A] ← ALU

32

The Full ADD X

• Each line of RTL takes one clock cycle (or tick).

• So, this instruction takes 9 clock cycles on our simple processor.

• At the beginning of each cycle the Control Unit (CU) has to output the right binary signals to each part of the CPU.

• N.B. All fetch cycles will be identical, and execute cycles will vary in length with complexity.

• Fetch

– [MAR] ← [PC]

– [PC] ← [PC] + 1

– [MBR] ← [MS([MAR])]

– [IR] ← [MBR]

– CU ← [IR(op-code)]

• Execute

– [MAR] ← [IR(address)]

– [MBR] ← [MS([MAR])]

– ALU ← [MBR] + [A]

– [A] ← ALU

33

A Simple Instruction Set

Op-code | Assembly Instruction | RTL Operation | Comment

000 | LDA X | [A] ← [MS(X)] | Load A with contents of location X

001 | STA X | [MS(X)] ← [A] | Store contents of A in location X

010 | ADD X | [A] ← [A] + [MS(X)] | Add the contents of location X to A

011 | SUB X | [A] ← [A] - [MS(X)] | Subtract contents of location X from A

100 | INC X | [MS(X)] ← [MS(X)] + 1 | Increment value in location X

101 | DEC X | [MS(X)] ← [MS(X)] - 1 | Decrement value in location X

110 | JMP X | [PC] ← X | Jump to the instruction at location X

111 | BRA X | IF Z = 1 THEN [PC] ← X | Conditional branch to location X if Z is true*

(* Z is the Zero flag, which is set to true if the result of the last instruction was zero)

34

Computer Code

• A simple assembly language program.

– LDA 20

– ADD 21

– STA 22

• Which does the following.

– Load the accumulator with contents of location 20.

– Add contents of location 21 to the accumulator.

– Store the result in location 22.

• The binary executable machine code in main store at locations 0, 1 & 2 will be:

– 000 10100₂ (14H)

– 010 10101₂ (55H)

– 001 10110₂ (36H)

• N.B. The numbers we want to add must be pre-loaded into locations 20 and 21.

• N.B. The 3-bit op-code and the 5-bit operand together give an 8-bit instruction.

35

Low-level Programming

• We started by inputting instructions and data into computers in binary using switches! (I kid you not!)

• We then wrote programs to read hexadecimal shorthand – a Hex loader – one instruction at a time.

• We then wrote programs to read holes in paper tape or cards to input programs of instructions and data.

• We could then move on to low-level programming written in Assembly Language.

– We typed the instructions into a card/tape punch machine which produced the right holes to give the machine code.

36

A University Computer Centre in 1980

37

Writing Low-level Programs

• Once computers became interactive with a monitor and keyboard, we could use a text editor program to write an Assembly Language text file.

• An Assembler program would check this for syntax errors and then, if there weren’t any, would produce an object file of machine code with all the memory locations calculated.

• A Linker program would then be used to create a binary executable file, linking with any other necessary or requested files at the same time.

38

High-Level Programming

• High-level programming allows us to write more sophisticated programs that are processor-independent.

• Assembly language is specific to the processor.

• We don’t need to worry about how many registers it has, how big they are or where they are!

• Our simple program to add two numbers together gets much simpler and interactive!

39

C Program to add two numbers

40

High-Level Language Tools

• A Compiler is a program which takes a text-based high-level language program file and converts it into a binary executable file.

• It does this in three “passes”.

– Lexical analyser – breaks the text into tokens and takes out the irrelevant stuff (spaces, comments).

– Syntax analyser – makes sure the language makes sense (no speeling errods!)

– Semantic analyser – makes sure that the program means something valid.

– Finally, it produces binary executable code but can also produce Assembly Language text.

41

More High-level language Tools

• An Interpreter …

• A Cross-compiler …

• An Emulator …

• An IDE …

42

More High-level language Tools

• An Interpreter translates and executes a program line-by-line rather than all-in-one-go like a compiler.

– This is much slower but useful for interactive development, debugging as-you-go-along and learning programming.

• A Cross-compiler allows a PC to produce binary executable code for another device or platform.

– E.g. A mobile phone, tablet computer, games console etc.

• An Emulator is a program which allows you to run an application compiled for another device.

– E.g. Running an iPhone app on a Mac, running a PS2 game on a PC.

• An IDE (Integrated Development Environment) combines an intelligent editor, compiler and runtime debugging environment.

– E.g. Microsoft’s Visual Studio

43

Thank you and …

44

Contact

• Dr Alan Hind

– School of Computing

– Teesside University

– Borough Road, Middlesbrough, TS1 3BA.

• Email: a.hind@tees.ac.uk

• Telephone: (01642) 342641

45
