Math 263 - Spring 2016
Solution for Homework 2


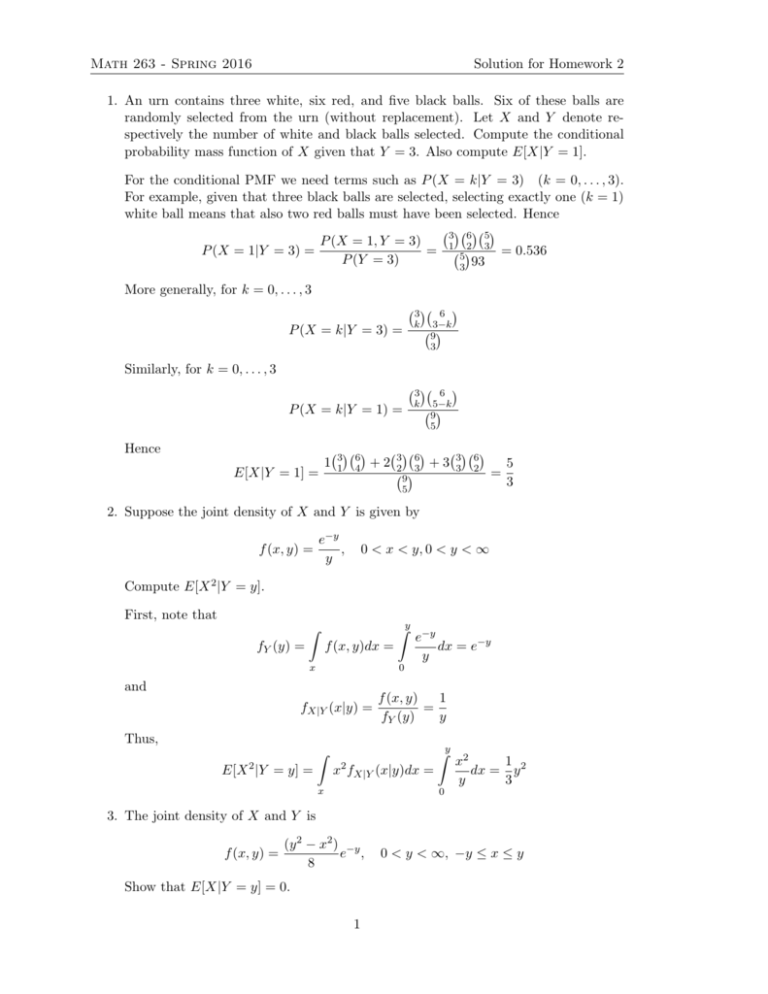

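1. An urn contains three white, six red, and five black balls. Six of these balls are randomly selected from the urn (without replacement). Let $X$ and $Y$ denote respectively the number of white and black balls selected. Compute the conditional probability mass function of $X$ given that $Y = 3$. Also compute $E[X \mid Y = 1]$.

For the conditional PMF we need the probabilities $P(X = k \mid Y = 3)$ for $k = 0, \dots, 3$. For example, given that three black balls are selected, selecting exactly one ($k = 1$) white ball means that two red balls must also have been selected. Hence, after the common factor $\binom{14}{6}$ cancels,

$$P(X = 1 \mid Y = 3) = \frac{P(X = 1, Y = 3)}{P(Y = 3)} = \frac{\binom{3}{1}\binom{6}{2}\binom{5}{3}}{\binom{5}{3}\binom{9}{3}} = \frac{45}{84} \approx 0.536.$$

More generally, for $k = 0, \dots, 3$,

$$P(X = k \mid Y = 3) = \frac{\binom{3}{k}\binom{6}{3-k}}{\binom{9}{3}},$$

since, given $Y = 3$, the remaining three balls are drawn from the nine non-black balls. Numerically, $P(X = k \mid Y = 3) = 20/84,\ 45/84,\ 18/84,\ 1/84$ for $k = 0, 1, 2, 3$.

Similarly, for $k = 0, \dots, 3$,

$$P(X = k \mid Y = 1) = \frac{\binom{3}{k}\binom{6}{5-k}}{\binom{9}{5}}.$$

Hence

$$E[X \mid Y = 1] = \frac{1 \cdot \binom{3}{1}\binom{6}{4} + 2 \cdot \binom{3}{2}\binom{6}{3} + 3 \cdot \binom{3}{3}\binom{6}{2}}{\binom{9}{5}} = \frac{45 + 120 + 45}{126} = \frac{5}{3}.$$

2. Suppose the joint density of $X$ and $Y$ is given by

$$f(x, y) = \frac{e^{-y}}{y}, \qquad 0 < x < y,\ 0 < y < \infty.$$

Compute $E[X^2 \mid Y = y]$.

First, note that

$$f_Y(y) = \int f(x, y)\,dx = \int_0^y \frac{e^{-y}}{y}\,dx = e^{-y}$$

and

$$f_{X \mid Y}(x \mid y) = \frac{f(x, y)}{f_Y(y)} = \frac{1}{y}, \qquad 0 < x < y.$$

Thus

$$E[X^2 \mid Y = y] = \int x^2 f_{X \mid Y}(x \mid y)\,dx = \int_0^y x^2 \frac{1}{y}\,dx = \frac{y^2}{3}.$$

3. The joint density of $X$ and $Y$ is

$$f(x, y) = \frac{(y^2 - x^2)e^{-y}}{8}, \qquad 0 < y < \infty,\ -y \le x \le y.$$

Show that $E[X \mid Y = y] = 0$.

First, note that

$$f_{X \mid Y}(x \mid y) = \frac{f(x, y)}{f_Y(y)} = \frac{\frac{1}{8}(y^2 - x^2)e^{-y}}{\int_{-y}^{y} \frac{1}{8}(y^2 - x^2)e^{-y}\,dx} = \frac{y^2 - x^2}{\left[y^2 x - \frac{1}{3}x^3\right]_{-y}^{y}} = \frac{y^2 - x^2}{\frac{4}{3}y^3} = \frac{3(y^2 - x^2)}{4y^3}.$$

Then

$$E[X \mid Y = y] = \int_{-y}^{y} x \cdot \frac{3(y^2 - x^2)}{4y^3}\,dx = \frac{3}{4y^3}\int_{-y}^{y} \left(x y^2 - x^3\right)dx = 0,$$

since, for fixed $y$, the integrand $x y^2 - x^3$ is an odd function of $x$ integrated over the symmetric interval $[-y, y]$ (the factors involving $y$ are constants).

4. Suppose $Y$ is uniformly distributed on $(0, 1)$, and that the conditional distribution of $X$ given that $Y = y$ is uniform on $(0, y)$. Find $E(X)$ and $\mathrm{Var}(X)$.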
We know that

$$f_Y(y) = \begin{cases} 1 & 0 < y < 1 \\ 0 & \text{otherwise} \end{cases} \qquad \text{and} \qquad f_{X \mid Y}(x \mid y) = \begin{cases} \frac{1}{y} & 0 < x < y \\ 0 & \text{otherwise.} \end{cases}$$

Thus

$$E[X] = E[E(X \mid Y)] = \int E(X \mid Y = y) f_Y(y)\,dy = \int \left(\int x f_{X \mid Y}(x \mid y)\,dx\right) f_Y(y)\,dy = \int_0^1 \int_0^y x \frac{1}{y}\,dx \cdot 1\,dy = \int_0^1 \frac{1}{y}\left[\frac{x^2}{2}\right]_0^y dy = \int_0^1 \frac{1}{2}y\,dy = \frac{1}{4}.$$

Also,

$$E[X^2] = E[E(X^2 \mid Y)] = \int E(X^2 \mid Y = y) f_Y(y)\,dy = \int \left(\int x^2 f_{X \mid Y}(x \mid y)\,dx\right) f_Y(y)\,dy = \int_0^1 \int_0^y x^2 \frac{1}{y}\,dx \cdot 1\,dy = \int_0^1 \frac{1}{3}y^2\,dy = \frac{1}{9}.$$

Hence

$$\mathrm{Var}(X) = E(X^2) - E(X)^2 = \frac{1}{9} - \frac{1}{16} = \frac{7}{144}.$$

5. A coin, having probability $p$ of landing heads, is continually flipped until at least one head and one tail have been flipped.

(a) Find the expected number of flips needed.

Let $X$ denote the number of flips needed, and let $Y = 1$ if the first flip is heads ($Y = 0$ otherwise). Suppose the first flip is heads. Then $X$ is one (for the first flip) plus the number of additional flips needed to get the first tail. The latter is a geometric random variable with parameter $q = 1 - p$, whose mean is $1/q$. Similarly, if the first flip is tails, we must wait for the first head, which takes a geometric number of flips with parameter $p$ and mean $1/p$. Thus

$$E[X] = E[E[X \mid Y]] = E[X \mid Y = 1]P(Y = 1) + E[X \mid Y = 0]P(Y = 0) = \left(1 + \frac{1}{q}\right)p + \left(1 + \frac{1}{p}\right)q = 1 + \frac{p}{q} + \frac{q}{p}.$$

(b) Find the expected number of flips that land on heads.

There are only two possibilities. If the first flip is heads, the sequence consists of one or more heads followed by a single tail. If the first flip is tails, the sequence consists of one or more tails followed by a single head. Let $Z$ denote the number of heads flipped. Then

$$E[Z] = E[E[Z \mid Y]] = \bigl(1 + E[\text{number of additional heads before the first tail}]\bigr)p + 1 \cdot (1 - p).$$

Given that the first flip is heads, the number of further tosses up to and including the first tail is geometric with parameter $q = 1 - p$, with mean $1/q$. Every one of these tosses except the last is a head, so the expected number of additional heads before the first tail is one less than the mean of that geometric, namely $1/q - 1$. Therefore

$$E[Z] = \left(1 + \frac{1}{q} - 1\right)p + (1 - p) = 1 + p\left(\frac{1}{q} - 1\right) = 1 + \frac{p^2}{q}.$$
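As an informal cross-check of the closed-form answers to Problems 1, 4, and 5, the following Python sketch (not part of the original solutions) computes the Problem 1 conditional PMF exactly with `math.comb` and `fractions.Fraction`, and estimates the Problem 4 and Problem 5 expectations by Monte Carlo simulation. The helper names, the seed, the sample sizes, and the value p = 0.3 are arbitrary illustrative choices, so the simulated numbers should only be expected to agree with the formulas up to sampling error.

```python
from fractions import Fraction
from math import comb
import random

# Problem 1 urn: 3 white, 6 red, 5 black balls; 6 drawn without replacement.
WHITE, RED, BLACK, DRAWN = 3, 6, 5, 6


def pmf_white_given_black(b):
    """Exact P(X = k | Y = b) as Fractions (hypothetical helper).

    Given b black balls, the other DRAWN - b balls are a uniform sample
    from the WHITE + RED non-black balls, so X is hypergeometric.
    """
    m = DRAWN - b
    total = comb(WHITE + RED, m)
    ks = range(max(0, m - RED), min(WHITE, m) + 1)  # feasible white counts
    return {k: Fraction(comb(WHITE, k) * comb(RED, m - k), total) for k in ks}


def cond_mean(pmf):
    """Mean of a PMF given as {value: probability}."""
    return sum(k * prob for k, prob in pmf.items())


def simulate_problem4(n, rng):
    """Monte Carlo estimates of E[X] and Var(X) for Y ~ U(0,1), X | Y=y ~ U(0,y)."""
    xs = []
    for _ in range(n):
        y = rng.random()               # Y ~ U(0, 1)
        xs.append(rng.uniform(0, y))   # X | Y = y ~ U(0, y)
    mean = sum(xs) / n
    var = sum(x * x for x in xs) / n - mean ** 2
    return mean, var


def flips_until_both(p, rng):
    """Flip a coin with P(heads) = p until both faces have appeared.

    Returns (number of flips, number of heads).
    """
    first = rng.random() < p           # True means heads
    flips, heads = 1, int(first)
    while True:
        toss = rng.random() < p
        flips += 1
        heads += int(toss)
        if toss != first:              # first flip of the opposite face ends the run
            return flips, heads


def simulate_problem5(p, n, rng):
    """Average number of flips and average number of heads over n runs."""
    total_flips = total_heads = 0
    for _ in range(n):
        f, h = flips_until_both(p, rng)
        total_flips += f
        total_heads += h
    return total_flips / n, total_heads / n


if __name__ == "__main__":
    rng = random.Random(263)           # arbitrary fixed seed for reproducibility
    pmf3 = pmf_white_given_black(3)
    print("P(X=k | Y=3):", {k: str(v) for k, v in pmf3.items()})
    print("E[X | Y=1] =", cond_mean(pmf_white_given_black(1)))

    mean4, var4 = simulate_problem4(100_000, rng)
    print(f"Problem 4: E[X] ~ {mean4:.4f} (exact 0.25), "
          f"Var(X) ~ {var4:.4f} (exact {7 / 144:.4f})")

    p = 0.3                            # illustrative value; any 0 < p < 1 works
    q = 1 - p
    flips, heads = simulate_problem5(p, 100_000, rng)
    print(f"Problem 5(a): {flips:.4f} vs 1 + p/q + q/p = {1 + p / q + q / p:.4f}")
    print(f"Problem 5(b): {heads:.4f} vs 1 + p^2/q = {1 + p * p / q:.4f}")
```

Exact rational arithmetic is used for Problem 1 because its sample space is small enough to enumerate; Problems 4 and 5 involve continuous or unbounded outcomes, so simulation is the simpler sanity check there.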