In sight, out of mind: When object


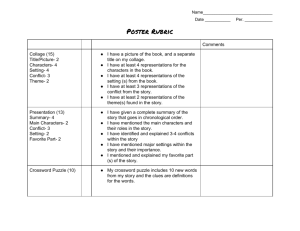

PSYCHOLOGICAL SCIENCE

Research Report

IN SIGHT, OUT OF MIND: When Object Representations Fail

Daniel J. Simons
Cornell University

Abstract: Models of human visual memory often presuppose an extraordinary ability to recognize and identify objects, based on evidence for nearly flawless recognition of hundreds or even thousands of pictures after a single presentation (Nickerson, 1965; Shepard, 1967; Standing, Conezio, & Haber, 1970) and for storage of tens of thousands of object representations over the course of a lifetime (Biederman, 1987). However, recent evidence suggests that observers often fail to notice dramatic changes to scenes, especially changes occurring during eye movements (e.g., Grimes, 1996). The experiments presented here show that immediate memory for object identity is surprisingly poor, especially when verbal labeling is prevented. However, memory for the spatial configuration of objects remains excellent even with verbal interference, suggesting a fundamental difference between representations of spatial configuration and object properties.

Humans demonstrate an extraordinary ability to discriminate previously viewed objects and scenes from hundreds of distractors (Nickerson, 1965; Shepard, 1967; Standing, Conezio, & Haber, 1970; but see Nickerson & Adams, 1979). Models of these recognition abilities typically rely on internal visual representations that are matched to current perceptions (e.g., Biederman, 1987; Tarr & Kriegman, 1992). Such representations are thought to be essential because without them, the world would appear totally new with each fixation. The notion of visual representations conforms to our phenomenal belief that we can form a rich, detailed image of a scene without effortful processing.

Although object representations are essential for object identification (e.g., determining if something is a chair), a number of recent findings suggest the fallibility of explicit memory for specific objects across successive views.
People often fail to notice dramatic changes (e.g., objects switching places or changing color) occurring across two presentations of a single scene (Currie, McConkie, Carlson-Radvansky, & Irwin, 1995; Grimes, 1996; Pashler, in press), especially when the changes occur during an eye movement. How can we reconcile our extraordinary ability to identify objects and recognize scenes (e.g., Intraub, 1981) with our inability to notice dramatic changes to scenes?

Recently, several researchers have argued that we do not have a precise, metric representation that is preserved across views of a scene (O'Regan, 1992; Pollatsek & Rayner, 1992). That is, little if any visual information survives from one view of a scene to the next. We do not need to store visual images because object properties remain stable in most natural events. If our perceptual system could assume such stability over time, the world itself would serve as a memory store that could be accessed when necessary (O'Regan, 1992). Therefore, we may fail to notice changes to objects because we lack internal visual representations.¹

However, if we cannot compare a current view of a scene with a visual representation of the past, how can we discriminate novel and familiar objects successfully? How can we ever notice property changes or changes in the layout of objects? Although visual representations of object properties may not survive from one view to the next, we may be able to form longer lasting, abstract representations through effortful encoding. Such effortful encoding is constrained by attention, thus precluding complete processing of all object properties simultaneously. As a result, recognition of details of a scene will sometimes fail; observers may miss changes to object properties that were not encoded effortfully (e.g., Pashler, 1988).

This report examines changes in object identity and location to assess the effectiveness of visual memory.
If visual representations capture information about the properties and arrangements of objects, then subjects should be sensitive to such changes.

GENERAL METHOD

Subjects

A total of 62 undergraduates at Cornell University participated (22 in the first experiment, 10 in each of the others) and were tested individually. Some subjects received course credit for participating, and all were informed of their rights as experimental participants.

Materials and Procedure

Each experiment consisted of 120 randomly ordered trials in which subjects viewed arrays of five objects on a 16-in. computer monitor. In each array, objects were randomly assigned to five of nine possible locations in a 15-cm × 13-cm rectangular box. On each trial, an array appeared for 2 s, followed by a 4.3-s interstimulus interval (ISI) and then by another array (see Fig. 1).² This second array remained visible until subjects responded and was either identical to the first array (60 trials) or different in one of the following ways (20 trials each): (a) In the identity change (I) condition, one randomly chosen object was replaced by an object not in the initial array; (b) in the switch (S) condition, two randomly selected objects switched positions; and (c) in the configuration change (C) condition, one randomly selected object moved to a previously empty location, changing the spatial configuration of the array. Participants were asked to indicate whether the two arrays were the "same" or "different" by pressing one of two keys on a keyboard. Except where noted, this general procedure was followed for all of the experiments. Results of the experiments are presented in Figure 2 and are described individually in the following section.

1. For the remainder of this report, I use "visual representations" to refer to representations that preserve the metric properties of the environment without substantial abstraction. Such representations are akin to photographic images.

2. The positions of the arrays on the screen were randomized to prevent subjects from fixating a single screen location throughout each trial.

Address correspondence to Daniel J. Simons, Department of Psychology, Uris Hall, Cornell University, Ithaca, NY 14853; e-mail: djs5@cornell.edu.

VOL. 7, NO. 5, SEPTEMBER 1996. Copyright 1996 American Psychological Society.

Fig. 1. Schematic illustration of potential changes in the experimental arrays (objects represented by letters).

Fig. 2. Mean percentage correct (with standard error bar) for each condition in Experiments 1 through 5. Asterisks indicate a significant difference (p < .05) from chance performance of 50% correct. ISI = interstimulus interval.

RESULTS

In Experiment 1, object arrays were generated from a set of 67 photographs of common objects (e.g., cap, keys, stapler).³ Accuracy in the configuration change condition was nearly perfect, and significantly greater than in the identity change and the switch conditions (C vs. S: t[21] = 7.68, p < .0001; C vs. I: t[21] = 6.29, p < .0001; S vs. I: t[21] = 0.556, n.s.; p values were Bonferroni adjusted). Subjects noticed substantially fewer changes in the identity change and switch conditions than in the configuration condition (see Fig. 2).
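The trial structure described under Materials and Procedure can be sketched in code. This is only an illustration and not the software used in the experiments, which the report does not describe: the names `make_trial` and `make_session`, the dictionary representation of an array, and the seeding are assumptions made for the example. Only the counts (120 trials, five objects, nine locations, 60 same trials and 20 of each change type) and the three change types come from the text.

```python
import random

N_LOCATIONS = 9  # possible positions in the box (from the text)
N_OBJECTS = 5    # objects per array (from the text)


def make_trial(condition, object_pool, rng):
    """Build one trial; each array is a dict mapping location index -> object."""
    locations = rng.sample(range(N_LOCATIONS), N_OBJECTS)
    objects = rng.sample(object_pool, N_OBJECTS)
    first = dict(zip(locations, objects))
    second = dict(first)  # "same" trials leave this copy untouched

    if condition == "I":
        # Identity change: one object is replaced by one not in the first array.
        unused = [obj for obj in object_pool if obj not in objects]
        second[rng.choice(locations)] = rng.choice(unused)
    elif condition == "S":
        # Switch: two objects trade places; the occupied locations are unchanged.
        a, b = rng.sample(locations, 2)
        second[a], second[b] = first[b], first[a]
    elif condition == "C":
        # Configuration change: one object moves to a previously empty location.
        empty = [loc for loc in range(N_LOCATIONS) if loc not in first]
        moved = second.pop(rng.choice(locations))
        second[rng.choice(empty)] = moved
    return first, second


def make_session(object_pool, seed=None):
    """Return 120 randomly ordered (condition, first, second) trials."""
    rng = random.Random(seed)
    conditions = ["same"] * 60 + ["I"] * 20 + ["S"] * 20 + ["C"] * 20
    rng.shuffle(conditions)
    return [(cond, *make_trial(cond, object_pool, rng)) for cond in conditions]
```

The sketch makes one property of the design explicit: in the switch condition the set of occupied locations is identical in both arrays, whereas in the configuration change condition it differs, even though fewer objects move.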
Responses to configuration changes were also nearly 1 s faster than responses to the other changes, a trend that was consistent across all five experiments.

All subjects in the first experiment reported verbally encoding objects in the first array, a strategy that could improve accuracy in the identity condition because one of the labeled objects would be absent on each trial. Additionally, if observers labeled the objects in a particular order, the configuration change and switch conditions could benefit because the labels would not coincide with the new arrangement in the second array. However, verbal labeling cannot completely account for accurate responding because performance in the configuration change condition was substantially more accurate than in the switch condition. Although twice as many objects changed location in the switch condition as in the configuration change condition, configuration changes were far more noticeable than switches. This disparity suggests a separate encoding process for configuration that does not depend on verbal labeling. Perhaps objects are represented as physical bodies without retention of properties or category information; switching the positions of two objects changes their locations, but does not change the overall configuration. If verbal labeling improves accuracy only in the identity condition, reducing labeling should affect only identity accuracy. Experiment 2 asked whether all three conditions would be equally influenced by eliminating explicit encoding of labels.

Experiment 2 was identical to Experiment 1 except that photographed objects were replaced by novel black geometric shapes that were designed to be difficult to label.⁴ The pattern of results in Experiment 1 was replicated (C vs. S: t[9] = 8.20, p < .0001; C vs. I: t[9] = 5.77, p = .0008; S vs. I: t[9] = 1.65, n.s.), but with relatively reduced accuracy in the identity change and switch conditions (see Fig. 2).
However, because each object was repeated on approximately nine trials, subjects still reported assigning labels to the novel shapes.

Experiment 3 reduced this repetition by generating arrays from a set of 275 shapes; no shape was repeated on more than three trials. Accuracy in the configuration change condition was again superior (C vs. S: t[9] = 8.301, p < .0001; C vs. I: t[9] = 10.82, p < .0001; S vs. I: t[9] = 2.20, p = .166). However, accuracy in the identity change condition was not significantly greater than chance (see Fig. 2).

As expected, verbal labeling seemed to have a strong effect on performance in the identity change condition, with accuracy declining as labeling became more difficult; accuracy was greater in Experiment 1 (photographs) than in Experiment 2 (novel shapes with repetition), and accuracy was greater in Experiment 2 than in Experiment 3 (novel shapes with less repetition). However, only the difference between Experiments 1 and 3 was significant, t(30) = 4.56, p < .0005.

3. A second experiment using the same materials demonstrated that varying the delay between arrays (from 1 to 7 s) had no effect on the outcome (the main effect and interactions involving delay all had Fs < 1). For each condition, the mean percentages correct were nearly identical in these experiments (C: 96.7, I: 73.3, and S: 60.8 in the experiment with a 4.3-s delay; C: 96.7, I: 72.5, and S: 65.8 across delays of 1 s to 7 s in the second experiment). Therefore, accuracy results from the two experiments were combined.

4. These shapes have been used in a recent series of negative priming studies conducted by Anne Treisman, who graciously allowed me to use them.
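The within-experiment comparisons reported above appear to be paired t tests on each subject's accuracy in two conditions (the degrees of freedom equal the number of subjects minus one), with Bonferroni-adjusted p values. The sketch below illustrates those two steps under stated assumptions: the helper names `paired_t` and `bonferroni` and the sample accuracies are hypothetical, and the adjustment is assumed to multiply each p value by the number of pairwise comparisons (three per experiment), which the report does not state explicitly.

```python
import math
import statistics


def paired_t(xs, ys):
    """Return (t, df) for a paired t test on two equal-length score lists."""
    diffs = [x - y for x, y in zip(xs, ys)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def bonferroni(p, n_comparisons):
    """Adjust a p value for multiple comparisons, capped at 1."""
    return min(1.0, p * n_comparisons)


if __name__ == "__main__":
    # Hypothetical per-subject percent correct, for illustration only.
    configuration = [95.0, 100.0, 90.0, 95.0, 100.0]
    identity = [70.0, 65.0, 60.0, 75.0, 55.0]
    t, df = paired_t(configuration, identity)
    print(f"t[{df}] = {t:.2f}")
```

Under the assumed three-comparison adjustment, the reported p = .166 for S vs. I in Experiment 3 would correspond to an unadjusted value of about .055 (.166 / 3).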
Experiment 4 controlled for the possibility that relatively poor performance in the identity change and switch conditions of the first three experiments was due to the relatively long delay between the two arrays by precisely replicating the procedure of Experiment 3 but reducing the ISI from 4.3 s to 250 ms. This ISI is comparable to those used in other change detection tasks (e.g., Pashler, 1988). Again, the pattern of results was nearly identical (C vs. S: t[9] = 8.486, p < .0001; C vs. I: t[9] = 17.471, p < .0001; S vs. I: t[9] = 3.025, p = .0432). Accuracy in all three conditions was reduced relative to Experiment 3 (see Fig. 2), but none of the differences between the two experiments were significant. Decreasing the ISI did not improve accuracy.

All three experiments with novel shapes suggest a fundamental difference between coding of spatial configuration and coding of object identity (Mishkin, Ungerleider, & Macko, 1983; Pollatsek, Rayner, & Henderson, 1990). Memory for layout remained excellent despite reduced verbal labeling. However, memory for object identity dropped to chance without verbal encoding.

A fifth experiment examined whether a similar memory reduction could be obtained using the photographic stimuli of Experiment 1 by interfering with verbal encoding. Subjects viewed these arrays while engaging in an interference task designed to eliminate verbal encoding. They shadowed a recorded story, repeating each word aloud immediately after hearing it (see Cherry, 1953; Treisman, 1964). The pattern of results was similar to the pattern in Experiments 2, 3, and 4 (C vs. S: t[9] = 8.072, p < .0001; C vs. I: t[9] = 9.192, p < .0001; S vs. I: t[9] = 0.699, n.s.).
Accuracy in the configuration change condition was unaffected by this difficult interference task, but performance in the identity change condition was only slightly more accurate than chance and was significantly reduced relative to Experiment 1, which used the same stimuli without shadowing, t(30) = 2.915, p = .020.

GENERAL DISCUSSION

Despite our phenomenal experience of a world filled with objects and their properties, we seem to retain little more than layout information in the absence of further, effortful processes such as labeling.⁵ Memory for the spatial configuration of objects remained nearly perfect across all of the experimental manipulations, with no significant differences across experiments. Within each experiment, accuracy was significantly greater in the configuration change condition than in either of the other two conditions. Immediate memory for layout appears to be directly visual and impervious to verbal interference (see Gibson, 1979). In contrast, without effortful verbal encoding, we appear to retain little information about object properties. The important link between verbal labeling and performance in the identity change condition suggests that no visual information about object properties is preserved across views of a scene.

5. These experiments used an explicit memory task to examine object representations. An implicit memory task (e.g., testing for priming across displays) might reveal more accurate memory for object identity without effortful encoding.

However, all of the experiments presented here (as well as those reviewed in the introduction) used static images. Although the resulting findings point to a general failure to notice changes to scenes, the results may be an artifact of processing static events.
Therefore, an additional experiment was conducted to determine whether object substitutions (similar in character and timing to those of the identity change condition described earlier) would be noticed during a dynamic event. In this experiment, 10 observers watched a color video of a brief conversation. Figure 3 shows a sequence of four frames taken from the video. Frame 1 shows the first person pouring a 2-L bottle of cola. Subjects saw her pick up the bottle, pour the cola, and set the bottle down in its original location (Frame 2). The bottle was in view for a total of 6.5 s. The camera then panned to reveal the approach of another person (Frame 3). During the pan, the table was out of view for approximately 4 s. The final frame shows the scene when the approaching person reached the table (Frame 4). Notice that the bottle of cola (the central object at the beginning of the scene) was replaced by a cardboard box while the camera was directed toward the arriving person. This view of the scene was on the screen for the remainder of the film (approximately 30 s), and then the monitor was turned off.

None of the subjects reported noticing either the presence of a new object or the absence of the original cola bottle, suggesting that storing information about object identity is not automatic in processing natural scenes and events. When subjects were asked if they noticed anything unusual in the film, one noted that "the older lady poured only about a drop of diet soda into her cup," and another stated, "One person was drinking Diet Pepsi, while the other had Diet Coke." Subjects clearly perceived an action involving the bottle, but failed to see that it had been replaced. Visual memory for object identity and properties can be poor even in a relatively natural event in which the object in question is central to the event.

Fig. 3. Four frames from a video showing a change in object identity.

How can we reconcile this inability to notice dramatic changes to scenes with our incredible ability to recognize more than 1,000 pictures (e.g., Shepard, 1967)? The results presented here suggest that our robust recognition memory does not result from the formation of an accurate image of each picture; our representations of object properties in each picture would be transitory at best without explicit encoding. Perhaps subjects in such picture recognition tasks have been able to encode enough object properties to discriminate all of the pictures sufficiently. The pictures in these tasks have been chosen to be as distinctive as possible, so a minimal amount of encoding might allow discrimination based on object properties. Interestingly, observers often fail to recognize a single familiar target (e.g., a penny) when the distractors are similar to the target (e.g., altered pennies; see Nickerson & Adams, 1979).

Another possible explanation for this discrepancy relies on the notion of configuration or layout. If our representations of layout are as rapid and precise as the current studies suggest, subjects may be able to quickly encode the layout of objects in the pictures. They could then use these representations of layout to discriminate between targets and distractors. These explanations could be empirically distinguished by testing recognition with distractors that vary in either layout or object properties.

In summary, the inability to notice changes to objects suggests that we do not maintain visual representations of object properties across views. Instead, our perceptual system assumes that object properties remain stable, allowing the world itself to serve as our memory (O'Regan, 1992). In contrast, our accurate and facile recognition of changes to the configuration of objects suggests that layout information is visually represented and does not depend on effortful abstraction (Gibson, 1979; Neisser, 1994).
Such representations may help to account for our extraordinary ability to recognize distinctive pictures. Although little if any visual information about object properties appears to survive from one view of a scene to the next, representations of layout appear to be visual and independent of verbal or other effortful encoding.

Acknowledgements: Thanks to Hal Pashler and an anonymous reviewer for their helpful suggestions and comments. Many thanks to Dan Levin for programming help and Anne Treisman for providing her set of novel shapes. Thanks also to Justin Barrett, James Cutting, Grant Gutheil, Linda Hermer, Frank Keil, Dan Levin, Katherine Richards, Carter Smith, Elizabeth Spelke, and Peter Vishton for their comments and suggestions.

REFERENCES

Biederman, I. (1987). Recognition-by-components: A theory of human image understanding. Psychological Review, 94, 115-147.

Cherry, E.C. (1953). Some experiments upon the recognition of speech with one and with two ears. Journal of the Acoustical Society of America, 25, 975-979.

Currie, C., McConkie, G.W., Carlson-Radvansky, L.A., & Irwin, D.E. (1995). Maintaining visual stability across saccades: Role of the saccade target object (Technical Report No. UIUC-BI-HPP-95-01). Champaign-Urbana: University of Illinois, Beckman Institute.

Gibson, J.J. (1979). The ecological approach to visual perception. Boston: Houghton Mifflin.

Grimes, J. (1996). On the failure to detect changes in scenes across saccades. In K. Akins (Ed.), Perception (Vancouver Studies in Cognitive Science, Vol. 5, pp. 89-110). New York: Oxford University Press.

Intraub, H. (1981). Rapid conceptual identification of sequentially presented pictures. Journal of Experimental Psychology: Human Perception and Performance, 7, 604-610.

Mishkin, M., Ungerleider, L.G., & Macko, K.A. (1983). Object vision and spatial vision: Two cortical pathways. Trends in Neurosciences, 6, 414-417.

Neisser, U. (1994, November). Hodological and categorical systems in perception: "What" and "where" from an ecological point of view. Paper presented at Psychology Department Colloquium, Cornell University, Ithaca, NY.

Nickerson, R.S. (1965). Short-term memory for complex meaningful visual configurations: A demonstration of capacity. Canadian Journal of Psychology, 19, 155-160.

Nickerson, R.S., & Adams, M.J. (1979). Long-term memory for a common object. Cognitive Psychology, 11, 287-307.

O'Regan, J.K. (1992). Solving the 'Real' mysteries of visual perception: The world as an outside memory. Canadian Journal of Psychology, 46, 461-488.

Pashler, H. (1988). Familiarity and visual change detection. Perception & Psychophysics, 44, 369-378.

Pashler, H. (in press). Attention and visual perception: Analyzing divided attention. In D. Osherson & S. Kosslyn (Eds.), An invitation to cognitive science. Cambridge, MA: MIT Press.

Pollatsek, A., & Rayner, K. (1992). What is integrated across fixations? In K. Rayner (Ed.), Eye movements and visual cognition: Scene perception and reading (pp. 166-191). New York: Springer-Verlag.

Pollatsek, A., Rayner, K., & Henderson, J.M. (1990). Role of spatial location in integration of pictorial information across saccades. Journal of Experimental Psychology: Human Perception and Performance, 16, 199-210.

Shepard, R.N. (1967). Recognition memory for words, sentences, and pictures. Journal of Verbal Learning and Verbal Behavior, 6, 156-163.

Standing, L., Conezio, J., & Haber, R.N. (1970). Perception and memory for pictures: Single-trial learning of 2500 visual stimuli. Psychonomic Science, 19, 73-74.

Tarr, M.J., & Kriegman, D.J. (1992, November). Viewpoint-dependent features in human object representation. Poster presented at the 33rd annual meeting of the Psychonomic Society, St. Louis, MO.

Treisman, A. (1964). Monitoring and storage of irrelevant messages in selective attention. Journal of Verbal Learning and Verbal Behavior, 3, 449-459.
(RECEIVED 5/15/95; ACCEPTED 7/21/95)