Logistic Regression - Penn State Department of Statistics

advertisement

Logistic Regression

Logistic Regression

Jia Li

Department of Statistics

The Pennsylvania State University

Email: jiali@stat.psu.edu

http://www.stat.psu.edu/∼jiali

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

Logistic Regression

Preserve linear classification boundaries.

I By the Bayes rule:

Ĝ (x) = arg max Pr (G = k | X = x) .

k

I

Decision boundary between class k and l is determined by the

equation:

Pr (G = k | X = x) = Pr (G = l | X = x) .

I

Divide both sides by Pr (G = l | X = x) and take log. The

above equation is equivalent to

log

Jia Li

Pr (G = k | X = x)

=0.

Pr (G = l | X = x)

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

Since we enforce linear boundary, we can assume

p

X (k,l)

Pr (G = k | X = x)

(k,l)

log

= a0 +

aj xj .

Pr (G = l | X = x)

j=1

I

Jia Li

For logistic regression, there are restrictive relations between

a(k,l) for different pairs of (k, l).

http://www.stat.psu.edu/∼jiali

Logistic Regression

Assumptions

Pr (G = 1 | X = x)

Pr (G = K | X = x)

Pr (G = 2 | X = x)

log

Pr (G = K | X = x)

log

= β10 + β1T x

= β20 + β2T x

..

.

log

Jia Li

Pr (G = K − 1 | X = x)

Pr (G = K | X = x)

http://www.stat.psu.edu/∼jiali

= β(K −1)0 + βKT −1 x

Logistic Regression

I

For any pair (k, l):

log

Pr (G = k | X = x)

= βk0 − βl0 + (βk − βl )T x .

Pr (G = l | X = x)

I

Number of parameters: (K − 1)(p + 1).

I

Denote the entire parameter set by

θ = {β10 , β1 , β20 , β2 , ..., β(K −1)0 , βK −1 } .

I

Jia Li

The log ratio of posterior probabilities are called log-odds or

logit transformations.

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

Under the assumptions, the posterior probabilities are given

by:

Pr (G = k | X = x) =

exp(βk0 + βkT x)

PK −1

1 + l=1 exp(βl0 + βlT x)

for k = 1, ..., K − 1

Pr (G = K | X = x) =

I

1+

l=1

exp(βl0 + βlT x)

.

For Pr (G = k | X = x) given above, obviously

I

I

Jia Li

1

PK −1

PK

Sum up to 1: k=1 Pr (G = k | X = x) = 1.

A simple calculation shows that the assumptions are satisfied.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Comparison with LR on Indicators

I

Similarities:

I

I

I

Difference:

I

I

Jia Li

Both attempt to estimate Pr (G = k | X = x).

Both have linear classification boundaries.

Linear regression on indicator matrix: approximate

Pr (G = k | X = x) by a linear function of x.

Pr (G = k | X = x) is not guaranteed to fall between 0 and 1

and to sum up to 1.

Logistic regression: Pr (G = k | X = x) is a nonlinear function

of x. It is guaranteed to range from 0 to 1 and to sum up to 1.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Fitting Logistic Regression Models

I

Criteria: find parameters that maximize the conditional

likelihood of G given X using the training data.

I

Denote pk (xi ; θ) = Pr (G = k | X = xi ; θ).

I

Given the first input x1 , the posterior probability of its class

being g1 is Pr (G = g1 | X = x1 ).

I

Since samples in the training data set are independent, the

posterior probability for the N samples each having class gi ,

i = 1, 2, ..., N, given their inputs x1 , x2 , ..., xN is:

N

Y

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

Pr (G = gi | X = xi ) .

Logistic Regression

I

The conditional log-likelihood of the class labels in the

training data set is

L(θ) =

=

N

X

i=1

N

X

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

log Pr (G = gi | X = xi )

log pgi (xi ; θ) .

Logistic Regression

Binary Classification

I

For binary classification, if gi = 1, denote yi = 1; if gi = 2,

denote yi = 0.

I

Let p1 (x; θ) = p(x; θ), then

p2 (x; θ) = 1 − p1 (x; θ) = 1 − p(x; θ) .

I

Jia Li

Since K = 2, the parameters θ = {β10 , β1 }.

We denote β = (β10 , β1 )T .

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

If yi = 1, i.e., gi = 1,

log pgi (x; β) = log p1 (x; β)

= 1 · log p(x; β)

= yi log p(x; β) .

If yi = 0, i.e., gi = 2,

log pgi (x; β) = log p2 (x; β)

= 1 · log(1 − p(x; β))

= (1 − yi ) log(1 − p(x; β)) .

Since either yi = 0 or 1 − yi = 0, we have

log pgi (x; β) = yi log p(x; β) + (1 − yi ) log(1 − p(x; β)) .

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

The conditional likelihood

L(β) =

=

N

X

i=1

N

X

log pgi (xi ; β)

[yi log p(xi ; β) + (1 − yi ) log(1 − p(xi ; β))]

i=1

I

I

Jia Li

There p + 1 parameters in β = (β10 , β1 )T .

Assume a column vector form for β:

β10

β11

β = β12 .

..

.

β1,p

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

Jia Li

Here we add the constant term 1 to x to accommodate the

intercept.

1

x,1

x = x,2 .

..

.

x,p

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

I

By the assumption of logistic regression model:

p(x; β) = Pr (G = 1 | X = x) =

exp(β T x)

1 + exp(β T x)

1 − p(x; β) = Pr (G = 2 | X = x) =

1

1 + exp(β T x)

Substitute the above in L(β):

L(β) =

N h

X

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

yi β T xi − log(1 + e β

Tx

i

i

) .

Logistic Regression

I

To maximize L(β), we set the first order partial derivatives of

L(β) to zero.

∂L(β)

β1j

=

=

=

N

X

i=1

N

X

i=1

N

X

i=1

for all j = 0, 1, ..., p.

Jia Li

http://www.stat.psu.edu/∼jiali

yi xij −

yi xij −

T

N

X

xij e β xi

1 + e β T xi

i=1

N

X

p(x; β)xij

i=1

xij (yi − p(xi ; β))

Logistic Regression

I

In matrix form, we write

N

∂L(β) X

=

xi (yi − p(xi ; β)) .

∂β

i=1

I

I

To solve the set of p + 1 nonlinear equations ∂L(β)

∂β1j = 0,

j = 0, 1, ..., p, use the Newton-Raphson algorithm.

The Newton-Raphson algorithm requires the

second-derivatives or Hessian matrix:

N

X

∂ 2 L(β)

=−

xi xiT p(xi ; β)(1 − p(xi ; β)) .

T

∂β∂β

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

The element on the jth row and nth column is (counting from

0):

∂L(β)

∂β1j ∂β1n

= −

= −

= −

T

T

T

N

X

(1 + e β xi )e β xi xij xin − (e β xi )2 xij xin

i=1

N

X

i=1

N

X

(1 + e β T xi )2

xij xin p(xi ; β) − xij xin p(xi ; β)2

xij xin p(xi ; β)(1 − p(xi ; β)) .

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

Starting with β old , a single Newton-Raphson update is

β

new

=β

old

−

∂ 2 L(β)

∂β∂β T

−1

∂L(β)

,

∂β

where the derivatives are evaluated at β old .

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

The iteration can be expressed compactly in matrix form.

I

I

I

I

I

Let y be the column vector of yi .

Let X be the N × (p + 1) input matrix.

Let p be the N-vector of fitted probabilities with ith element

p(xi ; β old ).

Let W be an N × N diagonal matrix of weights with ith

element p(xi ; β old )(1 − p(xi ; β old )).

Then

∂L(β)

∂β

2

∂ L(β)

∂β∂β T

Jia Li

http://www.stat.psu.edu/∼jiali

= XT (y − p)

= −XT WX .

Logistic Regression

I

The Newton-Raphson step is

β new

= β old + (XT WX)−1 XT (y − p)

= (XT WX)−1 XT W(Xβ old + W−1 (y − p))

= (XT WX)−1 XT Wz ,

I

where z , Xβ old + W−1 (y − p).

If z is viewed as a response and X is the input matrix, β new is

the solution to a weighted least square problem:

β new ← arg min(z − Xβ)T W(z − Xβ) .

β

I

Recall that linear regression by least square is to solve

arg min(z − Xβ)T (z − Xβ) .

β

I

I

Jia Li

z is referred to as the adjusted response.

The algorithm is referred to as iteratively reweighted least

squares or IRLS.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Pseudo Code

1. 0 → β

2. Compute y by setting its elements to

1 if gi = 1

yi =

0 if gi = 2

,

i = 1, 2, ..., N.

3. Compute p by setting its elements to

T

e β xi

i = 1, 2, ..., N.

1 + e β T xi

Compute the diagonal matrix W. The ith diagonal element is

p(xi ; β)(1 − p(xi ; β)), i = 1, 2, ..., N.

z ← Xβ + W−1 (y − p).

β ← (XT WX)−1 XT Wz.

If the stopping criteria is met, stop; otherwise go back to step

3.

p(xi ; β) =

4.

5.

6.

7.

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

Computational Efficiency

Jia Li

I

Since W is an N × N diagonal matrix, direct matrix

operations with it may be very inefficient.

I

A modified pseudo code is provided next.

http://www.stat.psu.edu/∼jiali

Logistic Regression

1. 0 → β

2. Compute y by setting its elements to

yi =

1

0

if gi = 1

if gi = 2

, i = 1, 2, ..., N .

3. Compute p by setting its elements to

T

p(xi ; β) =

e β xi

i = 1, 2, ..., N.

1 + e β T xi

4. Compute the N × (p + 1) matrix X̃ by multiplying the

matrix X by p(xi ; β)(1 − p(xi ; β)), i = 1, 2, ..., N:

T

x1

p(x1 ; β)(1 − p(x1 ; β))x1T

xT

p(x2 ; β)(1 − p(x2 ; β))x T

2

2

X=

· · · X̃ = · · ·

xNT

p(xN ; β)(1 − p(xN ; β))xNT

ith row of

5. β ← β + (XT X̃)−1 XT (y − p).

6. If the stopping criteria is met, stop; otherwise go back to step 3.

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

Example

Diabetes data set

I

Input X is two dimensional. X1 and X2 are the two principal

components of the original 8 variables.

I

Class 1: without diabetes; Class 2: with diabetes.

I

Applying logistic regression, we obtain

β = (0.7679, −0.6816, −0.3664)T .

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

The posterior probabilities are:

Pr (G = 1 | X = x) =

Pr (G = 2 | X = x) =

I

Jia Li

e 0.7679−0.6816X1 −0.3664X2

1 + e 0.7679−0.6816X1 −0.3664X2

1

1 + e 0.7679−0.6816X1 −0.3664X2

The classification rule is:

1 0.7679 − 0.6816X1 − 0.3664X2 ≥ 0

Ĝ (x) =

2 0.7679 − 0.6816X1 − 0.3664X2 < 0

http://www.stat.psu.edu/∼jiali

Logistic Regression

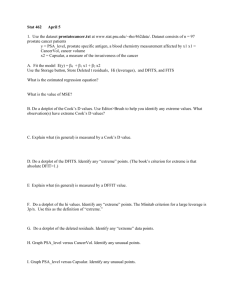

Solid line: decision boundary obtained by logistic regression. Dash

line: decision boundary obtained by LDA.

Jia Li

http://www.stat.psu.edu/∼jiali

I

Within training

data set

classification error

rate: 28.12%.

I

Sensitivity: 45.9%.

I

Specificity: 85.8%.

Logistic Regression

Multiclass Case (K ≥ 3)

I

Jia Li

When K ≥ 3, β is a (K-1)(p+1)-vector:

β10

β11

.

..

β10

β1p

β1

β20

β20

.

β2

.

β=

= .

..

.

β2p

.

β(K −1)0

..

β(K −1)0

βK −1

..

.

β(K −1)p

http://www.stat.psu.edu/∼jiali

Logistic Regression

βl0

.

Let β̄l =

βl

The likelihood function becomes

I

I

L(β) =

=

=

N

X

i=1

N

X

T

log

i=1

"

N

X

i=1

Jia Li

log pgi (xi ; β)

http://www.stat.psu.edu/∼jiali

1+

β̄gTi xi

e β̄gi xi

PK −1

l=1

!

Tx

i

e β̄l

− log 1 +

K

−1

X

l=1

!#

e

β̄lT xi

Logistic Regression

I

Note: the indicator function I (·) equals 1 when the argument

is true and 0 otherwise.

I

First order derivatives:

#

"

T

N

X

e β̄k xi xij

∂L(β)

=

I (gi = k)xij −

P −1 β̄ T x

∂βkj

l i

1+ K

l=1 e

i=1

=

N

X

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

xij (I (gi = k) − pk (xi ; β))

Logistic Regression

I

Second order derivatives:

∂ 2 L(β)

∂βkj ∂βmn

=

N

X

xij ·

i=1

(1 +

1

PK −1

l=1

Tx

i

e β̄l

"

−e

β̄kT xi

I (k = m)xin (1 +

)2

·

K

−1

X

#

e

β̄lT xi

)+e

β̄kT xi

e

Tx

β̄m

i

xin

l=1

=

N

X

xij xin (−pk (xi ; β)I (k = m) + pk (xi ; β)pm (xi ; β))

i=1

= −

N

X

xij xin pk (xi ; β)[I (k = m) − pm (xi ; β)] .

i=1

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

Matrix form.

I

y is the concatenated

N × (K − 1).

y1

y2

y= .

..

indicator vector of dimension

yk =

yK −1

I (g1 = k)

I (g2 = k)

..

.

I (gN = k)

1≤k ≤K −1

I

p is the concatenated

N × (K − 1).

p1

p2

p= .

..

pK −1

Jia Li

http://www.stat.psu.edu/∼jiali

vector of fitted probabilities of dimension

pk =

pk (x1 ; β)

pk (x2 ; β)

..

.

pk (xN ; β)

1≤k ≤K −1

Logistic Regression

I

Jia Li

X̃ is an N(K − 1) × (p + 1)(K − 1) matrix:

X 0

··· 0

0

X

··· 0

X̃ =

··· ··· ··· ···

0

0

··· X

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

Jia Li

Matrix W is an N(K − 1) × N(K − 1) square matrix:

W11

W12

· · · W1(K −1)

W21

W

· · · W2(K −1)

22

W =

···

···

··· ···

W(K −1),1 W(K −1),2 · · · W(K −1),(K −1)

I

Each submatrix Wkm , 1 ≤ k, m ≤ K − 1, is an N × N

diagonal matrix.

I

When k = m, the ith diagonal element in Wkk is

pk (xi ; β old )(1 − pk (xi ; β old )).

I

When k 6= m, the ith diagonal element in Wkm is

−pk (xi ; β old )pm (xi ; β old ).

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

I

Similarly as with binary classification

∂L(β)

∂β

= X̃T (y − p)

∂ 2 L(β)

∂β∂β T

= −X̃T WX̃ .

The formula for updating β new in the binary classification case

holds for multiclass.

β new = (X̃T WX̃)−1 X̃T Wz ,

where z , X̃β old + W−1 (y − p). Or simply:

β new = β old + (X̃T WX̃)−1 X̃T (y − p) .

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

Computation Issues

Jia Li

I

Initialization: one option is to use β = 0.

I

Convergence is not guaranteed, but usually is the case.

I

Usually, the log-likelihood increases after each iteration, but

overshooting can occur.

I

In the rare cases that the log-likelihood decreases, cut step

size by half.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Connection with LDA

I

Under the model of LDA:

Pr (G = k | X = x)

Pr (G = K | X = x)

πk

1

= log

− (µk + µK )T Σ−1 (µk − µK )

πK

2

T −1

+x Σ (µk − µK )

log

= ak0 + akT x .

Jia Li

I

The model of LDA satisfies the assumption of the linear

logistic model.

I

The linear logistic model only specifies the conditional

distribution Pr (G = k | X = x). No assumption is made

about Pr (X ).

http://www.stat.psu.edu/∼jiali

Logistic Regression

I

The LDA model specifies the joint distribution of X and G .

Pr (X ) is a mixture of Gaussians:

Pr (X ) =

K

X

πk φ(X ; µk , Σ) .

k=1

where φ is the Gaussian density function.

Jia Li

I

Linear logistic regression maximizes the conditional likelihood

of G given X : Pr (G = k | X = x).

I

LDA maximizes the joint likelihood of G and X :

Pr (X = x, G = k).

http://www.stat.psu.edu/∼jiali

Logistic Regression

Jia Li

I

If the additional assumption made by LDA is appropriate,

LDA tends to estimate the parameters more efficiently by

using more information about the data.

I

Samples without class labels can be used under the model of

LDA.

I

LDA is not robust to gross outliers.

I

As logistic regression relies on fewer assumptions, it seems to

be more robust.

I

In practice, logistic regression and LDA often give similar

results.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Simulation

I

Assume input X is 1-D.

I

Two classes have equal priors and the class-conditional

densities of X are shifted versions of each other.

Each conditional density is a mixture of two normals:

I

I

I

I

Jia Li

Class 1 (red): 0.6N(−2, 14 ) + 0.4N(0, 1).

Class 2 (blue): 0.6N(0, 14 ) + 0.4N(2, 1).

The class-conditional densities are shown below.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

LDA Result

Jia Li

I

Training data set: 2000 samples for each class.

I

Test data set: 1000 samples for each class.

I

The estimation by LDA: µ̂1 = −1.1948, µ̂2 = 0.8224,

σ̂ 2 = 1.5268. Boundary value between the two classes is

(µ̂1 + µ̂2 )/2 =−0.1862.

I

The classification error rate on the test data is 0.2315.

I

Based on the true distribution, the Bayes (optimal) boundary

value between the two classes is −0.7750 and the error rate is

0.1765.

http://www.stat.psu.edu/∼jiali

Logistic Regression

Jia Li

http://www.stat.psu.edu/∼jiali

Logistic Regression

Logistic Regression Result

I

Linear logistic regression obtains

β = (−0.3288, −1.3275)T .

The boundary value satisfies −0.3288 − 1.3275X = 0, hence

equals −0.2477.

I

The error rate on the test data set is 0.2205.

I

The estimated posterior probability is:

Pr (G = 1 | X = x) =

Jia Li

http://www.stat.psu.edu/∼jiali

e −0.3288−1.3275x

.

1 + e −0.3288−1.3275x

Logistic Regression

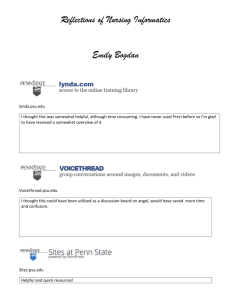

The estimated posterior probability Pr (G = 1 | X = x) and its true

value based on the true distribution are compared in the graph

below.

Jia Li

http://www.stat.psu.edu/∼jiali