Center for Statistical Ecology and Environmental Statistics

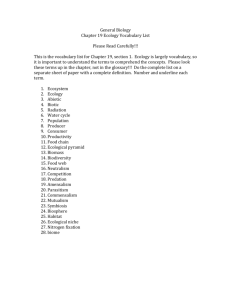

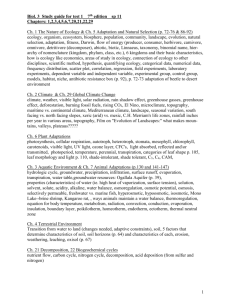
