The Role of Software in Optimization and Operations Research

©UNESCO-EOLSS

OPTIMIZATION AND OPERATIONS RESEARCH – The Role of Software in Optimization and Operations Research - Harvey J.

Greenberg

THE ROLE OF SOFTWARE IN OPTIMIZATION AND

OPERATIONS RESEARCH

Harvey J. Greenberg

University of Colorado at Denver, USA

Keywords: optimization, mathematical programming, computational economics,

mathematical programming systems

Contents

1. Introduction

2. Historical Perspectives

3. Obtaining a Solution

4. Modeling

5. Computer-Assisted Analysis

6. Intelligent Mathematical Programming Systems

7. Beyond the Horizon

Acknowledgement

Glossary

Bibliography

Biographical Sketch

Summary

This chapter presents the many roles of software in optimization. Obtaining a solution

has been the focus of mathematical programming, and now one must consider different

architectures and algorithm environments. But the role goes beyond the numerical

computation, integrating concerns for supporting optimization modeling and analysis.

Beginning with a historical perspective, this chapter describes modern developments,

including that of an intelligent mathematical programming system.

1. Introduction

The promise of operations research is to solve decision-making problems, and a large

part uses optimization. The mathematical program is given by the following:

$$\text{optimize } f(x) : x \in X,\; g(x) \le 0,\; h(x) = 0, \tag{1}$$

where $X \subseteq \mathbb{R}^n$, $f : X \to \mathbb{R}$, $g : X \to \mathbb{R}^m$, $h : X \to \mathbb{R}^M$, and optimize is either minimize or maximize.

In words, we seek to find a minimum or maximum of a real-valued function, possibly

subject to some mixture of inequality and equality constraints. There are variations of

this form, such as multiple objectives, goals, uncertainty, and logical expressions. Each

variation is approached by using the above standard form, and generally, this can be

done in more than one way. More discussion of this, and of particular mathematical

programs, is given in the Mathematical Programming Glossary.
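As a minimal sketch of how form (1) might be held in software, the following assumes that f, g and h are supplied as Python callables. The class name `MathProgram`, its methods, and the feasibility tolerance are illustrative choices, not something the chapter prescribes.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

Vector = Sequence[float]


@dataclass
class MathProgram:
    """Hypothetical container for form (1): optimize f(x) s.t. g(x) <= 0, h(x) = 0."""
    f: Callable[[Vector], float]
    g: Callable[[Vector], Sequence[float]]   # inequality constraints, g(x) <= 0
    h: Callable[[Vector], Sequence[float]]   # equality constraints, h(x) = 0
    sense: str = "minimize"                  # or "maximize"

    def is_feasible(self, x: Vector, tol: float = 1e-9) -> bool:
        """Check g(x) <= 0 and h(x) = 0 up to an assumed tolerance."""
        return (all(gi <= tol for gi in self.g(x))
                and all(abs(hi) <= tol for hi in self.h(x)))

    def as_minimization(self) -> "MathProgram":
        """Maximizing f is the same as minimizing -f over the same feasible set."""
        if self.sense == "minimize":
            return self
        return MathProgram(lambda x: -self.f(x), self.g, self.h, "minimize")


# Example: maximize x1 + x2 subject to x1^2 + x2^2 - 1 <= 0 and x1 - x2 = 0.
prog = MathProgram(
    f=lambda x: x[0] + x[1],
    g=lambda x: [x[0] ** 2 + x[1] ** 2 - 1.0],
    h=lambda x: [x[0] - x[1]],
    sense="maximize",
)
```

Keeping the sense as data, rather than hard-coding minimization, is one way to let a solver interface accept either variant of "optimize".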

©Encyclopedia of Life Support Systems (EOLSS)

The basic role of software is to enable an expression of a mathematical program, obtain

a solution and provide tools to support model management and analysis of the results. In

this chapter, we begin with some historical perspectives about software and

optimization, followed by overviews of software designed to obtain a solution. Software

for modeling has its own history, but the focus here is on current systems and needs for

future designs. Then, computer-assisted analysis is explained, drawing from two bodies

of work and pointing towards opportunities for enhanced roles of software. This leads

naturally into a description of intelligent mathematical programming systems, marking

new roles for future systems. The concluding section discusses current developments

and the role of software in the broader field of operations research.

2. Historical Perspectives

Orchard-Hays gave an excellent historical account of the early years of mathematical

programming and its inseparable ties to the computing field. (Also see his companion

papers, as well as others, in the same proceedings.) Mathematical programming was

treated as a process, rather than an object. As such, people needed a way to express their

model, often with a general language like FORTRAN or with what were then called

matrix generators, which evolved into modeling languages. Some evolved further into

modeling systems.

The term emerged in the early years, when "system" denoted software that carried out the

complete optimization, taking data as an input file and producing a solution

file, rather than being a subroutine library. Currently, both the mathematical

programming system (MPS) and the library approach (e.g., IBM OSL) are used. With

the growth of object-oriented programming, there is a third paradigm: a shell that serves

as a main program with generic classes; the programmer adds particular classes within

this framework to represent a particular problem and algorithm. Current systems that

use this paradigm are ABACUS, MINTO and PICO.

There were significant developments in the 1980s, not the least of which was the

explosive growth of affordable computers. Waren, et al., give the status of nonlinear

programming (NLP), with the emergence of PCs cited as a significant change that

affects how algorithms are implemented and what has changed in importance, e.g.,

space (disk and memory) is no longer an issue (also see Fourer's guide in

Bibliography). This is in some contrast to most of the more recent surveys, which still

emphasize the algorithms apart from their implementation.

In integer programming, a recent plenary talk by Ralph Gomory suggested that modern

computers can make some of the group-theoretic ideas, which had been abandoned in

the 1970s, more practical. Following this note, Karla Hoffman gave a perceptive insight

using dinosaurs flying jets as a metaphor to emphasize the role of computing power

running old algorithms. Her primary assessment, however, was that it is the formulation

that has the most impact on solvability, and this is the theme to consider as the modern

role for software. In fact, this was a major goal of the project to develop an Intelligent

Mathematical Programming System (IMPS).

3. Obtaining a Solution

A solution is a global optimum, or a declaration that no optimum exists, preferably with

a reason, such as infeasible or unbounded. Software cannot guarantee that a correct

conclusion is reached. One reason is computational error, causing inaccurate results,

even for linear programs. Some problems use only integer arithmetic, where there is no

computational error, but they are typically hard problems for which an accurate result

cannot be guaranteed because of how long it would take to consider the large number of

possible solutions. It is this latter source of inaccuracy that is the cutting edge of

optimization software.

Several recent surveys provide details on the current state of the art in obtaining a global

optimum, drawing from classical methods based on local search (e.g., on calculus).

Because we cannot obtain exact solutions to hard problems in a practical amount of

time, two basic approaches have been taken: metaheuristics, such as tabu search, and

approximation algorithms with guaranteed bounds. Software to implement these can

make a difference in their performance (see Global Optimization).

In discrete optimization, some exact methods exist for special situations, like dynamic

programming, but branch and bound/cut remains the primary exact method. It is

also used in continuous (global) optimization, so branch and bound is a general exact

method. These typically use linear programming to solve relaxation problems for

bounds, cut generation, and search guidance. The relaxations drop integer restrictions,

and linear functions are used to approximate nonlinear ones (typically using the Taylor

expansion). A software issue is how fast the linear programs are solved when used this

way. The linear program (LP) relaxation of a combinatorial optimization problem has

very different characteristics than a true LP, such as one that represents product

distribution. This implies, for example, that the LP algorithm control parameters need

adjustments from their default values since they are typically tuned with test problems

that are true LPs (see Linear Programming, Dynamic Programming and Bellman's

Principle, Combinatorial Optimization and Integer Programming).
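The use of LP relaxations for bounds and search guidance can be sketched on a 0-1 knapsack problem, whose LP relaxation is solved exactly by filling items in order of value-to-weight ratio with at most one fractional item. This is an illustrative toy under stated assumptions (positive weights, a depth-first node stack); the function names are hypothetical, and production codes use a general LP solver and cutting planes instead.

```python
def lp_bound(values, weights, capacity, fixed):
    """LP-relaxation bound for a 0-1 knapsack with some variables fixed.

    `fixed` maps item index -> 0 or 1. Free items are filled greedily by
    value/weight ratio, allowing one fractional item, which solves the LP
    relaxation of the knapsack. Returns (bound, fractional_item_or_None).
    Assumes all weights are positive.
    """
    cap = capacity - sum(weights[j] for j, v in fixed.items() if v == 1)
    if cap < 0:
        return float("-inf"), None              # the fixings are infeasible
    bound = sum(values[j] for j, v in fixed.items() if v == 1)
    free = [j for j in range(len(values)) if j not in fixed]
    free.sort(key=lambda j: values[j] / weights[j], reverse=True)
    for j in free:
        if cap == 0:
            break                               # nothing more fits; solution is integral
        if weights[j] <= cap:
            cap -= weights[j]
            bound += values[j]
        else:
            return bound + values[j] * cap / weights[j], j   # x_j is fractional
    return bound, None


def branch_and_bound(values, weights, capacity):
    """Return the optimal knapsack value by branching on fractional variables."""
    best = 0.0
    stack = [{}]                                # each node is a dict of fixed variables
    while stack:
        fixed = stack.pop()
        bound, frac = lp_bound(values, weights, capacity, fixed)
        if bound <= best:
            continue                            # prune: cannot beat the incumbent
        if frac is None:
            best = bound                        # integral relaxation: new incumbent
            continue
        stack.append({**fixed, frac: 0})        # branch on the fractional variable
        stack.append({**fixed, frac: 1})
    return best
```

The relaxation supplies both the pruning bound and the branching variable, which is the "search guidance" role mentioned above.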

3.1. Data Structures, Controls and Interfaces

Wirth's charming equation, Algorithms + Data Structures = Programs, is not quite

enough to be a system capable of solving optimization problems with computing

paradigms available in 2002. To it, we must add:

Programs + Controls + Interfaces = Systems

There have always been algorithm controls, but interfaces have become much more

sophisticated, especially with graphics.

Most publications in mathematical programming present algorithms, but very few

describe the data structures. Fundamentally, an algorithm is a precise sequence of steps.

We often refer to an algorithm family, or algorithm strategy, to mean some steps are not

completely defined, leaving room for tactical variations. For example, Figure 1 gives

the simplex algorithm strategy that uses the 2-phase method of getting an initial feasible

solution (or ascertaining that none exists), leaving such steps as pricing open to different

algorithm tactics.

Figure 1: Two-Phase Simplex Algorithm Strategy

A data structure is how we store the information. Even a specific algorithm, such as

Dantzig's Specific Simplex Method, does not specify the type of arithmetic or other

things that depend upon the data structure (and the computing environment). In the case

of a simplex algorithm, a typical data structure is to store the data in a sparse format.

For example, the LP matrix is stored by columns with each column having a pair of data

entries for each nonzero:

row index | value

In particular, suppose we have a transportation problem with s sources and d

destinations. The dimensions of the matrix are m = s + d rows (one per node) and n = sd

columns (one per arc). Each column of the node-arc incidence matrix has exactly two

nonzeros, $-1$ for the source and $+1$ for the destination. The total number of nonzeros,

therefore, is $2n$, and its density is $\frac{2n}{mn}$, which reduces to $\frac{2}{m}$. The greater the number of

nodes ($m$), the greater the sparsity, because the density becomes small as $m$ becomes

large. To illustrate, consider the following node-arc incidence matrix, which represents

a transportation problem that has 2 sources and 3 destinations.

$$A = \begin{bmatrix}
-1 & -1 & -1 & 0 & 0 & 0 \\
0 & 0 & 0 & -1 & -1 & -1 \\
1 & 0 & 0 & 1 & 0 & 0 \\
0 & 1 & 0 & 0 & 1 & 0 \\
0 & 0 & 1 & 0 & 0 & 1
\end{bmatrix} \tag{2}$$

There are 12 nonzero entries in this $5 \times 6$ matrix, so its density is $\frac{12}{30}$, which is 0.4. For

more realistic sizes, where $m \ge 1000$, the density is less than 1%. Many large LPs have

an embedded network structure, which is one reason they tend to be very sparse.
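The column-wise storage described above can be sketched as follows. This is a minimal illustration in which each column is a list of (row index, value) pairs; the function names and the zero-based node numbering are assumptions made for the example.

```python
def transportation_columns(s, d):
    """Column-wise sparse storage of a node-arc incidence matrix.

    Nodes 0..s-1 are sources and s..s+d-1 are destinations. Each column
    (arc) is a list of (row index, value) pairs for its nonzeros only.
    """
    columns = []
    for i in range(s):
        for j in range(d):
            columns.append([(i, -1.0), (s + j, 1.0)])  # -1 at source, +1 at destination
    return columns


def density(columns, m):
    """Fraction of the m-by-n matrix that is nonzero."""
    nonzeros = sum(len(col) for col in columns)
    return nonzeros / (m * len(columns))


def column_dot(row_vector, column):
    """Inner product of a dense row vector with one sparse column."""
    return sum(row_vector[i] * value for i, value in column)


cols = transportation_columns(2, 3)   # the 2-source, 3-destination example
```

An operation such as pricing a column touches only its two stored entries, whatever the number of rows, which is why the sparse format matters for large LPs.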

Nonlinear functions are represented by their parse tree, or something very similar.

Figure 2 shows an example.

Figure 2: Parse Tree for $f(x_1, x_2) = x_1^2 - x_1 x_2 + x_2^3$

Solvers call upon this tree for function evaluation. Modern methods use automatic

differentiation, which stores nonlinear functions in a similar data structure, designed for

rapid calculations of the function and its derivatives (if they exist).
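A toy version of such a tree, with the value and gradient computed together in one traversal (a simple forward-mode scheme), might look like the sketch below. The nested-tuple encoding and the operator names are assumptions for illustration; real automatic differentiation tools use more compact structures.

```python
def evaluate(node, x):
    """Return (value, gradient) of the expression rooted at `node` at point x."""
    op = node[0]
    n = len(x)
    if op == "var":
        grad = [0.0] * n
        grad[node[1]] = 1.0
        return x[node[1]], grad
    if op == "const":
        return node[1], [0.0] * n
    if op == "pow":                              # integer power of a subexpression
        v, g = evaluate(node[1], x)
        k = node[2]
        return v ** k, [k * v ** (k - 1) * gi for gi in g]
    (va, ga), (vb, gb) = evaluate(node[1], x), evaluate(node[2], x)
    if op == "add":
        return va + vb, [a + b for a, b in zip(ga, gb)]
    if op == "sub":
        return va - vb, [a - b for a, b in zip(ga, gb)]
    if op == "mul":                              # product rule
        return va * vb, [a * vb + va * b for a, b in zip(ga, gb)]
    raise ValueError(f"unknown operator: {op}")


# Parse tree for f(x1, x2) = x1^2 - x1*x2 + x2^3
x1, x2 = ("var", 0), ("var", 1)
f = ("add", ("sub", ("pow", x1, 2), ("mul", x1, x2)), ("pow", x2, 3))
value, gradient = evaluate(f, [2.0, 3.0])
```

At the point (2, 3) the traversal yields the function value together with the partial derivatives $2x_1 - x_2$ and $-x_1 + 3x_2^2$, without any symbolic manipulation.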

3.2. Parallel Algorithms

One of the current topics of research is how to design parallel algorithms. An early use

of parallel architecture was for Dantzig-Wolfe decomposition in LP. Many types of

architectures and algorithms are in the text by Bertsekas and Tsitsiklis, and more recent

ideas are in proceedings, notably those edited by Heath, et al. and by Pardalos, et al.

To illustrate how a parallel architecture can be used effectively, consider a

parallelization of the conjugate gradient method (see Nonlinear Programming). We are

given a quadratic form, $\frac{1}{2} x^T Q x - cx$, where $Q$ is an $n \times n$ symmetric, positive-definite

matrix. We know its (unique) minimum occurs at the solution to the equation, Qx = c,

and one way to compute this is by the following iterations:

$$x_{t+1} = x_t + \alpha_t d_t, \tag{3}$$

where $x_0$ is given, $d_0 = -g_0 = c - Qx_0$, and

$$\alpha_t = -\frac{g_t d_t}{(d_t)^T Q d_t} \tag{4}$$

$$d_t = -(g_t)^T + \beta_t d_{t-1} \tag{5}$$

$$\beta_t = \frac{g_t Q d_{t-1}}{(d_{t-1})^T Q d_{t-1}} \tag{6}$$

$$g_t = (x_t)^T Q - c = \nabla \left( \tfrac{1}{2} x^T Q x - cx \right) \Big|_{x = x_t} \tag{7}$$

Let us assess the computations at an iteration. At the beginning of iteration $t$, we have

already computed $x_t$, $d_{t-1}$, and $g_{t-1}(g_{t-1})^T$. We must compute $g_t = Qx_t - c$ and $g_t(g_t)^T$;

from these, we use equations (6) and (5) to compute $\beta_t$ and $d_t$, respectively. Finally, we

use equations (4) and (3) to compute $\alpha_t$ and $x_{t+1}$, thereby completing the iteration.

Suppose the number of processors, $p$, satisfies $p \ge n$, so we can assign a processor to

compute results pertaining to one coordinate of the vectors. Let processor $i$ be given the

$i$th row of $Q$, denoted $Q_{i\bullet}$. The vector $Qx_t - c$ is computed completely in parallel,

with processor $i$ computing $Q_{i\bullet} x_t - c_i$. These are communicated to a designated

processor, which thus obtains $g_t$. This processor could compute $g_t(g_t)^T$, or it could

broadcast $g_t$ to the other processors and have each compute the square of its own

coordinate, $(g_{t,i})^2$, and send its value back for accumulation. Which is better depends

on communication time versus the time to compute an inner product on the main

processor. Alternative schemes use hierarchies to accumulate inner products, and much

of the design depends not only upon the particular architecture, but also upon whether

the matrix and vectors are sparse.
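The iteration can be sketched in plain Python, with the row-by-row products written as separate list elements to mark the work that the text assigns to individual processors. This is a serial illustration only: nothing here runs in parallel, vectors are plain lists (so the row and column distinction is dropped), and the step that computes `beta` is the standard choice that makes successive directions Q-conjugate. All function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def gradient(Q, c, x):
    """g = Qx - c, one component per row of Q.

    Each list element is the task the text assigns to processor i
    (row Q_i dotted with x, minus c_i); here they simply run in sequence.
    """
    return [dot(Q_i, x) - c_i for Q_i, c_i in zip(Q, c)]


def conjugate_gradient(Q, c, x0, tol=1e-12):
    """Minimize (1/2) x'Qx - cx for symmetric positive-definite Q."""
    x = list(x0)
    g = gradient(Q, c, x)
    d = [-gi for gi in g]                        # d_0 = -g_0
    for _ in range(len(c)):                      # at most n steps in exact arithmetic
        if dot(g, g) <= tol:
            break
        Qd = [dot(Q_i, d) for Q_i in Q]          # also one row per processor
        alpha = -dot(g, d) / dot(d, Qd)          # step size along d
        x = [xi + alpha * di for xi, di in zip(x, d)]
        g = gradient(Q, c, x)                    # gradient at the new point
        beta = dot(g, Qd) / dot(d, Qd)           # keeps the new d conjugate to the old d
        d = [-gi + beta * di for gi, di in zip(g, d)]
    return x
```

The inner products `dot(g, g)` and `dot(d, Qd)` are the accumulation steps whose placement (on one processor or distributed) is the design question raised above.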

This illustrates the general notion that one way to design a parallel algorithm is to use

parallel linear algebra. Many optimization algorithms can be parallelized this way, but

some do not use linear algebra centrally. In particular, combinatorial optimization

algorithms can take other advantage of parallel computation.

Consider the dynamic programming recursion for the 0-1 knapsack problem:

$$f_j(\beta) = \max\{ f_{j-1}(\beta),\; c_j + f_{j-1}(\beta - a_j) \} \quad \text{for } \beta \in \{a_j, a_j + 1, \ldots, b\}, \tag{8}$$

for $j = 1, \ldots, n$, where $f_0 \equiv 0$ (see Discrete Optimization, Dynamic Programming and

Bellman's Principle). Suppose we decompose each iteration by having each processor

compute fj for an equal number of state values. For notational convenience, let b = kp

for some positive integer, k. In words, let the total size of the knapsack be an integer

multiple of the number of processors. This means that each processor has k state values

to compute, and each takes the same amount of time. This is clearly a logical

decomposition, but its merit depends on communication time, which depends upon the

particular parallel architecture.
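The state decomposition can be sketched as below. The blocks are processed one after another here, so this shows only how the work divides, not an actual parallel run; the gather step stands in for the communication a parallel machine would need. Function names are illustrative, and integer weights are assumed.

```python
def knapsack_stage(f_prev, c_j, a_j, states):
    """Compute f_j(beta) for one block of states from the previous stage."""
    return {
        beta: (max(f_prev[beta], c_j + f_prev[beta - a_j]) if beta >= a_j
               else f_prev[beta])                # item j cannot fit below a_j
        for beta in states
    }


def knapsack_by_blocks(c, a, b, p):
    """0-1 knapsack value via the recursion, with states 1..b split into p blocks.

    Assumes b = k*p. Each block is the work one processor would do in a
    stage; every block reads only the previous stage, so blocks are
    independent of one another within a stage.
    """
    assert b % p == 0, "the text assumes b is a multiple of p"
    k = b // p
    blocks = [range(q * k + 1, (q + 1) * k + 1) for q in range(p)]
    f_prev = [0] * (b + 1)                       # f_0 = 0 for every state
    for c_j, a_j in zip(c, a):
        f_next = list(f_prev)
        for states in blocks:                    # independent tasks within a stage
            for beta, value in knapsack_stage(f_prev, c_j, a_j, states).items():
                f_next[beta] = value             # gather results ("communication")
        f_prev = f_next
    return f_prev[b]
```

Because each stage depends on all of the previous stage's values, every processor needs results computed by others before the next item can be handled, which is where the communication cost enters.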

Scheduling tasks to processors is the key problem in designing a parallel algorithm.

Load balancing is a proxy goal for maximizing the speedup, whose reciprocal is the

fraction of the best possible serial computation time. Let T*(s) be the best time it takes

to solve a problem in some class of size s on a serial computer. (The "size" is frequently

taken to mean the number of variables, but it could account for other data values.) Let

Tp(s) be the time it takes a parallel algorithm to solve the same problem with p

processors. Then,

$$\text{Speedup} = S_p(s) = \frac{T^*(s)}{T_p(s)}. \tag{9}$$

The best one can hope for is $S_p(s) = p$ or, equivalently, $T_p(s) = T^*(s)/p$. This occurs if the

best serial algorithm can have its computations decomposed perfectly into $p$ parts, each

taking the same amount of time. This could never be fully achieved, even with perfect

load balancing, because of the message passing that is needed: the information

produced by a processor must be communicated to at least one other processor.

The speedup of the dynamic programming parallelization is nearly linear. We have

$$S_p(n, b) = \frac{T^*(n, b)}{T^*(n, k) + M(n, k)}, \tag{10}$$

where $M(n, k)$ is the communication time for $n$ variables and $k$ states. The size is given

as the number of variables ($n$) and the number of states ($b$), but we can consider the cost

per iteration, that is, divide by $n$, since both algorithms perform $n$ iterations. Then, with

$\tau$ and $\mu$ denoting the per-iteration computation and communication times,

$$S_p(n, kp) = \frac{\tau(kp)}{\tau(k) + \mu(k)}. \tag{11}$$

Further, each state requires a simple comparison of two values plus a storage of the

result, so $\tau(kp) = p\,\tau(k)$. Substituting this into the above expression simplifies the

speedup, which is now independent of the number of variables:

$$S_p(n, b) = \frac{p}{1 + \dfrac{\mu(k)}{\tau(k)}}. \tag{12}$$

The speedup depends on the communication time relative to the computation time,

which is the ratio $\mu(k)/\tau(k)$. We can see that $\tau(k) \approx \alpha k$ for some constant $\alpha$, but $\mu(k)$

could be $O(k)$ or $O(\log k)$, depending upon the architecture. A worst case, $\mu(k) = O(k)$, has

$S_p(n, kp) \approx \gamma p$ for some constant, $\gamma$, which is still very good for large state values.

Doubling the number of processors doubles the speedup. The more favorable case is

when $\mu(k) = O(\log k)$, for then $\lim_{k \to \infty} S_p(n, kp) = p$. Currently, one of the most active

places for such research is Sandia National Laboratories, with the ASCI Red machine

that consists of more than 9000 tightly-coupled Intel processors.
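The two communication regimes in the speedup expression $p / (1 + \mu(k)/\tau(k))$ can be compared numerically. The cost constants below (1.0 per state for computation, 0.25 for communication) are arbitrary assumptions chosen only to make the two cases visible.

```python
import math


def speedup(p, k, tau, mu):
    """Speedup p / (1 + mu(k)/tau(k)) for p processors with k states each."""
    return p / (1.0 + mu(k) / tau(k))


alpha = 1.0                                    # assumed computation cost per state
tau = lambda k: alpha * k                      # computation time, tau(k) ~ alpha*k
mu_linear = lambda k: 0.25 * k                 # assumed O(k) communication
mu_log = lambda k: 0.25 * math.log(k)          # assumed O(log k) communication

for k in (10, 1000, 100000):
    print(k, speedup(8, k, tau, mu_linear), speedup(8, k, tau, mu_log))
```

With linear communication the speedup stays at a fixed fraction of $p$ (here $\gamma = 0.8$) however large $k$ becomes, while with logarithmic communication it approaches $p$ as $k$ grows.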

Sandia has an object-oriented Parallel Integer and Combinatorial Optimization code,

called PICO. Despite its title, PICO is a framework designed for general parallel branch

and bound, including nonlinear programs with continuous-valued variables. While there

have been some efforts to use parallel algorithms for hard problems, this is still a

frontier with many design decisions and experiments ahead. One class of algorithms

that have a natural decomposition to balance the load among the processors is the class

of stochastic, population-based, evolutionary algorithms.

Some classes of hard nonlinear problems, such as in design optimization, involve

expensive functional evaluations. Often, f (x) is the solution to a differential equation or

a simulation, where x is a vector of design parameters. Although x is not large, obtaining

f (x) can take hours. Parallel algorithms are being developed for such problems, some

with a speedup on the order of $p$.

4. Modeling

Many modeling languages and systems have appeared over the past four decades; some

have disappeared. Each current language requires the modeler to adhere to a

predetermined way of expressing the model, and we shall discuss some of them in the

next section. An ideal system is one where the adaptation is the burden of the computer,

not the human. As we have different cognitive skills, some algebraic, some process-oriented,

some graphic, and various mixtures, the modeling

system ought to change its mode to suit the modeler, not the other way around.

Fundamentally, a model can be divided into its parts, as shown in Figure 3, where a

model is composed of objects and relations among those objects. In an optimization

model, there are two fundamental types of objects: data and decision. Data objects

include not only numerical values, like parameters, but also symbolic objects, like sets

of regions or a network topology, which can vary from one scenario to another. The

decision objects include not only variables, but also functionals, which could have other

objects in their domains.

There are three types of model relations: constraints, conditions and learning.

Constraints can be of the canonical form, g(x) ≤ 0, where x is a vector of decision

objects. This form hides the dependence of g on data objects, especially on "primary

data" objects used to compute what the solver sees. For example, the cost of capital

might be one of the primary data values, from which the modeling system is directed to

compute cost coefficients that appear in g. Logical constraints are prevalent, such as

"Select at most 1 from this set…". Most languages do not permit the expression to be in

English, and the modeler must bear the burden of how to express this in a way that is

eventually understood by the solver. An advantage of constraint logic programming

(CLP) is that it allows us to express logical relations more naturally than mathematical

programming languages. The conditions pertain to when certain objects or constraint

relations are in effect. For example, one might build a general network to accommodate

any scenario, then use data to determine whether a supply constraint or a transportation

variable should be generated. Admissibility conditions pertain to data values that are

checked to prevent erroneous scenarios.

Figure 3: Anatomy of a Model

Finally, learning is another level of relations that can be part of an artificially intelligent

environment for model formulation and management. It includes learning relations

among objects from experience with some scenarios, even without human feedback.

One example is when it detects an infeasibility and diagnoses its cause. This could lead

to an automatic check for those conditions in future scenarios.

The diagram in Figure 3 is simplified. It does not show, for example, whether an object

or relation is primitive or implied; it does not show attributes or other elements that

require discussion if we were to consider implementation. Its purpose is simply to

highlight an anatomy in tune with the role of software in optimization, starting with

model formulation. Also, the modeling process contains more than models; it also

contains guidance for solvers, model management aids, and rules to support analysis of

instances.

4.1. Expressive Power

The expressive power of a language is its ability to represent different kinds of objects

and relations. Theoretically, the languages in use today have the same expressive power,

but practically, they do differ. Some languages emphasize that they are algebraic, but

what does this mean? What languages are not algebraic? Essentially, an algebraic

language is one that allows you to express the optimization model the way you would

on a blackboard or in a textbook. It is some variant of the following:

Let $x(i, j, k)$ denote the level of transportation of the $k$th material from $i$ to $j$ … There is

limited supply: $\sum_{j,k} x(i, j, k) \le S(i)$ …

Languages like AMPL and GAMS are like this. Older languages, like MAGEN/OMNI

and RPMS/GAMMA, are process oriented. They would express activities, like the

following:

Let x(i, k) denote the level of operation of a distillation unit in region i at mode k … It

uses materials M(i, k) and has product yields Y (i, k); capacity is limited by C(i, k).

These two paradigms have been unnecessarily contentious, and now there is another paradigm, based

on logical expressions, called logic programming. We commonly refer to constraint

logic programming to mean logical expressions of what to do, subject to constraints

like:

Choose 1 from the set...

4.2. Logic Programming and Optimization

There has been a gradual merger of operations research methodology with logic

programming. The role of the software is to provide a different paradigm for expressing

combinatorial optimization problems, like scheduling. Rather than require the modeler

to specify some system of inequalities, constraints such as "job $i$ must precede job $j$" can

be expressed more naturally.

To illustrate, consider the traveling salesman problem. There are many integer

programming formulations, but constraint logic programming can represent it

differently. Let $y_i$ be from the set of cities, $\{1, \ldots, n\}$. To express the constraint that

every city be visited exactly once, the logical constraint is all-different $\{y_i\}$, and that is

precisely all one must specify! This is beyond the expressive power of integer

programming, adding new capabilities to optimization modeling, as well as new

opportunities to express bad formulations.
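To make the all-different idea concrete, the sketch below enumerates assignments of cities to positions and keeps only those that satisfy the constraint. It assumes $y_i$ is read as the city visited in position $i$ (with cities numbered from 0), and it uses brute-force enumeration purely for illustration; a constraint logic programming system would instead propagate the constraint to prune the search.

```python
from itertools import product


def all_different(values):
    """True when no value is repeated."""
    return len(set(values)) == len(values)


def shortest_tour(dist):
    """Enumerate y in {0..n-1}^n, keep those satisfying all_different, return the best."""
    n = len(dist)
    best_len, best_tour = float("inf"), None
    for y in product(range(n), repeat=n):
        if y[0] != 0 or not all_different(y):    # fix the start city to break symmetry
            continue
        length = sum(dist[y[i]][y[(i + 1) % n]] for i in range(n))
        if length < best_len:
            best_len, best_tour = length, y
    return best_len, best_tour
```

The model itself is just the domain of each $y_i$ plus one constraint; everything else in the function is search, which is the part a solver would supply.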

5. Computer-Assisted Analysis

Obtaining a solution is not enough, and we have just described the role of software in

formulating and managing models. In addition, optimization software must

support analysis. At least in linear programming, we often solve larger problems than

we can understand. It is not only post-optimal analysis that is important, but also

debugging tools to deal with anomalies and infeasibility diagnosis. This is an underdeveloped

area, which began to emerge in the late 1970s. The only general-purpose

software systems currently available are ANALYZE, GMSCHK and MProbe. Some modeling

systems, like ASCEND, MIMI and XPRESS-LP, have some analysis aids.

In this section, this function is divided into some overlapping parts and elaboration is

given along the lines that are in a recent survey by Chinneck and Greenberg.

5.1. Query and Reporting

A fundamental part of optimization software is to be able to browse a model instance

and its solution obtained by the optimizer. (The solution is not necessarily optimal, as in

debugging an infeasible instance.) Modeling languages provide only very basic report

writing capabilities, with little or no debugging aids. ANALYZE enables multiple-view

query and syntax-directed report writing for mixed-integer linear programs, but it does

not have an interface with all modeling languages. Rutherford provides extensive report

writing tools for GAMS.

To appreciate the need for query and reporting, consider the LP:

$$\min\; cx : x \ge 0,\; Ax = b, \tag{13}$$

where A is m × n and the vectors conform to those dimensions. When m and n are small

enough to see A on one screen (or a sheet of paper), we have no problem. When,

realistically, the LP has many thousands of rows and columns, we face problems of

what to see and how to see it.

The functions of query and reporting extend to verification and validation. The former

pertains to knowing that what is in the computer-resident model is what is believed to

be there. The latter pertains to how well the model represents the world for which it is

intended. Both are fundamental to model management.

5.2. Debugging

Debugging is finding out what went wrong when the result is infeasible or anomalous.

The only software systems designed to assist with this are ANALYZE, GMSCHK,

MProbe, and a suite of Chinneck's IIS codes. The approaches to infeasibility diagnosis

are as follows:

Navigation: This is simply interactive query with a system like ANALYZE, generally by someone who is expert in knowing the model.

Parametric Programming: This begins with a feasible system for a parameter, $\theta$, such as $Ax = \theta b$, $x \ge 0$. This is feasible for $\theta = 0$ (possibly for $\theta > 0$) and parametric programming is used to drive $\theta$ to 1. It will reach a feasibility threshold in $(0, 1)$, showing blockage, such as insufficient supply or capacity.

Phase I Aggregation: This applies only to linear programs. It rests on the fact that the dual variables associated with a phase I solution comprise a solution to the alternative system. If $\{Ax = b,\ L \le x \le U\}$ is infeasible and $\pi$ is a Phase I dual solution, the single equation, $\pi Ax = \pi b$, is inconsistent with the bounds, $L \le x \le U$. This reveals which activities are involved (those with nonzero coefficients, $\pi A_j \ne 0$) and a blocking bound.

Logical Testing: This applies when there are binary variables that represent logical truth-values. Topological sorting is used to reveal inconsistencies, such as a cycle of precedence relations. These tests extend to Horn clauses.

Exploiting Structures: Special structures include block and link (which includes netforms) and inventory equations.

Partitioning: This is a powerful approach, which can be fully automated. The idea is to find a partition of the original system of constraints and explore at least one of those subsystems for probable cause. One type of subsystem is the irreducible infeasible subsystem (IIS). This is infeasible but the removal of any one constraint renders the subsystem feasible. A necessary condition to become feasible is that at least one constraint in each IIS be deleted, or adjusted in a way that makes the subsystem feasible. A sufficient condition to become feasible is that at least one constraint from every IIS be deleted. (The IISs can overlap, so the removal of one constraint that is in every IIS would render a feasible subsystem.)

There are tactical variations within these approaches, and the IIS approach extends to

general mathematical programs, where variables can be integer-valued and functions

can be nonlinear. Of course, the subproblems to solve in order to obtain IISs can then be

NP-hard. The role of software is to support all of these approaches because how they

behave depends upon the problem, and how experienced the analyst is in mathematical

programming. A rule-based intelligence is a level of ANALYZE that can automate the

diagnostic process and use heuristics to guide it.
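Two of these ideas can be combined in a small sketch: topological sorting serves as the feasibility test for a set of precedence relations, and a deletion filter (a standard way to isolate one IIS, named here as an assumption since the text does not specify a method) removes constraints that are not needed for infeasibility. The code relies on Python's `graphlib` module (Python 3.9 or later), assumes the constraints are distinct, and the function names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def feasible(precedences):
    """A set of (before, after) pairs is feasible iff it admits a topological order."""
    sorter = TopologicalSorter()
    for before, after in precedences:
        sorter.add(after, before)                # `after` depends on `before`
    try:
        sorter.prepare()                         # raises CycleError if a cycle exists
        return True
    except CycleError:
        return False


def deletion_filter(constraints, is_feasible):
    """Reduce an infeasible constraint list to one irreducible infeasible subset."""
    assert not is_feasible(constraints), "the full system must be infeasible"
    kept = list(constraints)
    for c in list(constraints):
        trial = [k for k in kept if k != c]
        if not is_feasible(trial):
            kept = trial                         # c is not needed for infeasibility
    return kept


jobs = [("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"), ("d", "e")]
iis = deletion_filter(jobs, feasible)
```

Here the filter isolates the three relations that form the cycle a, b, c and discards the two that play no part in the inconsistency. The same filter works with any feasibility test, such as an LP Phase I, in place of `feasible`.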

To illustrate, Figure 4 shows a network with a maximum flow of 32 units, but the total

demand is 60 units. One could tell this to the analyst, but there are much smaller

isolations that are causal. In a realistic network, the cut set could have many thousands

of arcs, far too many for a person to visualize (see Graph and Network Optimization).

Figure 4: An Infeasible Network Flow
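The maximum flow of 32 units can be checked with a short augmenting-path computation. The arc capacities, supplies and demands below are those that appear in the Phase I formulation (14) of this example; the artificial source "s" and sink "t" nodes, and the choice of the Edmonds-Karp method, are assumptions added for the sketch.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Edmonds-Karp: repeatedly augment along a shortest residual path."""
    residual = {}
    for (u, v), cap in capacity.items():
        residual.setdefault(u, {})
        residual.setdefault(v, {})
        residual[u][v] = residual[u].get(v, 0) + cap
        residual[v].setdefault(u, 0)             # reverse arc for cancelling flow
    total = 0
    while True:
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:      # breadth-first search
            u = queue.popleft()
            for v, r in residual[u].items():
                if r > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return total                         # no augmenting path remains
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(residual[u][w] for u, w in path)   # bottleneck on the path
        for u, w in path:
            residual[u][w] -= push
            residual[w][u] += push
        total += push


capacity = {
    ("s", 1): 20, ("s", 2): 20, ("s", 3): 20,         # supplies
    (1, 4): 30, (2, 4): 20, (2, 5): 10, (3, 5): 10,   # arc bounds
    (4, 6): 10, (4, 7): 2, (5, 7): 20, (5, 8): 30,
    (6, "t"): 10, (7, "t"): 20, (8, "t"): 30,         # demands
}
flow = max_flow(capacity, "s", "t")
```

The bottleneck is the cut formed by arcs (2,5), (3,5), (4,6) and (4,7), whose capacities sum to 32, well short of the 60 units demanded.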

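Optimization software begins to process this problem by solving its Phase I:

$$\min \sum_{i=1}^{16} v_i : v \ge 0,$$

$$\begin{array}{ll}
\text{Supply at node 1:} & x_{14} + v_1 \le 20 \\
\text{Supply at node 2:} & x_{24} + x_{25} + v_2 \le 20 \\
\text{Supply at node 3:} & x_{35} + v_3 \le 20 \\
\text{Balance at node 4:} & x_{14} + x_{24} - x_{46} - x_{47} + v_4 = 0 \\
\text{Balance at node 5:} & x_{25} + x_{35} - x_{57} - x_{58} + v_5 = 0 \\
\text{Demand at node 6:} & x_{46} + v_6 \ge 10 \\
\text{Demand at node 7:} & x_{47} + x_{57} + v_7 \ge 20 \\
\text{Demand at node 8:} & x_{58} + v_8 \ge 30 \\
\text{Flow bounds:} & 0 \le x_{14} + v_9 \le 30 \\
 & 0 \le x_{24} + v_{10} \le 20 \\
 & 0 \le x_{25} + v_{11} \le 10 \\
 & 0 \le x_{35} + v_{12} \le 10 \\
 & 0 \le x_{46} + v_{13} \le 10 \\
 & 0 \le x_{47} + v_{14} \le 2 \\
 & 0 \le x_{57} + v_{15} \le 20 \\
 & 0 \le x_{58} + v_{16} \le 30
\end{array} \tag{14}$$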

The analyst must now debug this infeasible LP by diagnosing its cause. Conventional

solvers and most modeling languages provide only displays of values, such as what you

see in Figure 5. In a realistic problem this table would be far too large to be useful, so

the analyst needs some screening aids.

Figure 5: Display of Solution Values for Network in Figure 4

There are several approaches to help analysts diagnose an infeasibility. One is to use the

Phase I dual prices to form one infeasible aggregate constraint:

$$x_{25} + 2x_{35} + x_{47} \ge 70. \tag{15}$$

This inequality is implied by the original system by taking a weighted sum. Letting pi

denote the dual price of the i-th supply constraint, and noting that p ≤ 0, we first obtain

the following:

p1 ( x14 + p2 ( x24 + x25 ) + p3 x35 ≥ ( p1 + p2 + p3 )20.

(16)

Since p = (−1,−1, 0), this becomes the aggregate supply limit: − x14 − x24 − x25 ≥ −40 .

Combining the balance equations in the same manner, and using their price vector (1, 2)

(shown in Figure 5: Display of Solution Values for Network in Figure 4), we obtain the

aggregate balance equation:

$$
x_{14} + x_{24} - x_{46} - x_{47} + 2(x_{25} + x_{35} - x_{57} - x_{58}) = 0.
$$

The demand requirements use the price vector (1, 2, 2) to obtain the following aggregate

demand requirement:

$$
x_{46} + 2(x_{47} + x_{57}) + 2x_{58} \ge 1 \times 10 + 2 \times 20 + 2 \times 30 = 110.
$$

Adding these three aggregates produces the overall aggregate constraint, and this

approach notes that the greatest aggregate value on the left-hand side, for the given

bounds, is less than the aggregate requirement:

$$
\max\{x_{25} + 2x_{35} + x_{47} : 0 \le x_{25} \le 10,\ 0 \le x_{35} \le 10,\ 0 \le x_{47} \le 2\} = 32 < 70. \tag{17}
$$

The ANALYZE system does this and can provide an English response, such as what is

shown in Figure 6. The system can continue to help the analyst by explaining the

aggregate coefficients and right-hand side.

Figure 6: ANALYZE Diagnosis Using Phase I Price Aggregation
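
The price aggregation leading to (15) through (17) can be checked with a few lines of arithmetic. The following is a minimal sketch, not the ANALYZE implementation: the data layout and the helper names `aggregate` and `max_lhs` are assumptions made for illustration, while the rows, bounds, and prices are those quoted in the text.

```python
# Rows of the network LP: (coefficients, sense, right-hand side).
SUPPLY = [
    ({"x14": 1}, "<=", 20),
    ({"x24": 1, "x25": 1}, "<=", 20),
    ({"x35": 1}, "<=", 20),
]
BALANCE = [
    ({"x14": 1, "x24": 1, "x46": -1, "x47": -1}, "==", 0),
    ({"x25": 1, "x35": 1, "x57": -1, "x58": -1}, "==", 0),
]
DEMAND = [
    ({"x46": 1}, ">=", 10),
    ({"x47": 1, "x57": 1}, ">=", 20),
    ({"x58": 1}, ">=", 30),
]
# Upper flow bounds; every lower bound is 0.
UPPER = {"x14": 30, "x24": 20, "x25": 10, "x35": 10,
         "x46": 10, "x47": 2, "x57": 20, "x58": 30}
# Phase I prices quoted in the text: supply, balance, demand.
PRICES = [-1, -1, 0, 1, 2, 1, 2, 2]


def aggregate(rows, prices):
    """Return (coeffs, rhs) of the implied row: sum_i p_i * row_i >= sum_i p_i * rhs_i."""
    coeffs, rhs = {}, 0
    for (row, sense, b), p in zip(rows, prices):
        # The weighted row is a valid ">=" only if the price has the right sign:
        # p <= 0 on "<=" rows, p >= 0 on ">=" rows, any sign on "==" rows.
        if (sense == "<=" and p > 0) or (sense == ">=" and p < 0):
            raise ValueError("price has the wrong sign for this row")
        for var, a in row.items():
            coeffs[var] = coeffs.get(var, 0) + p * a
        rhs += p * b
    # Drop the variables whose coefficients cancel.
    return {v: c for v, c in coeffs.items() if c != 0}, rhs


def max_lhs(coeffs, upper):
    """Largest value of the aggregate left-hand side subject to 0 <= x <= upper."""
    return sum(c * upper[v] for v, c in coeffs.items() if c > 0)


coeffs, rhs = aggregate(SUPPLY + BALANCE + DEMAND, PRICES)
print(coeffs, rhs, max_lhs(coeffs, UPPER))
# prints: {'x25': 1, 'x35': 2, 'x47': 1} 70 32
```

Because the largest attainable left-hand side (32) falls short of the aggregate requirement (70), no flow within the bounds can satisfy the aggregate constraint, which is the infeasibility certificate described above.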

An IIS approach could produce the subnetworks shown in Figure 7. In 7(a), the only

constraints included are the flow bounds on x25, x35 and x47. The sources of those arcs

are not shown because it does not matter where those flows come from, only that they

are limited to the values shown. Nodes 5, 7 and 8 are shown because the constraints

associated with them are in this IIS. Only the demand arcs are oriented; the others are

undirected, which means that the non-negativity constraints on those flows are not in the


IIS. The demands at nodes 7 and 8 dominate the direction of flow. By its definition, the

IIS is an infeasible subsystem such that dropping any one constraint renders the proper

subsystem feasible. If, for example, we drop the demand requirement at node 7, we can

ship 10 units from 7 to 5 (i.e., x57 = −10), then send it to node 8, thus rendering the

proper subsystem feasible. In 7(b), the demand at node 7 is dropped, but non-negativity

is added to the flow variable, x57.

Figure 7: Two IISs for the Infeasible Network in Figure 4

ANALYZE can import IISs created by Chinneck's code, which allows separate

treatment of logical constraints, such as non-negativity. If we retain x ≥ 0 in our

subsystem, we obtain a smaller subnetwork, shown in Figure 8. Node 7 plays no role,

and this subnetwork presents the analyst with the two relevant flow bounds and the one

demand requirement. Those constraints, themselves, with flow balance at node 5,

comprise an infeasible subsystem.

Figure 8: Good Diagnosis = Small Isolation + Succinct Explanation
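
The defining property of an IIS (infeasible, yet feasible once any one constraint is dropped) can be checked directly for the small subsystem of Figure 8. The sketch below is illustrative only and is not Chinneck's code: non-negativity is treated as a retained logical constraint, the witness points are hand-picked assumptions, and the names `satisfies`, `is_infeasible`, and `is_irreducible` are hypothetical.

```python
UPPER = {"x25": 10, "x35": 10}   # the two relevant flow bounds
DEMAND_8 = 30                    # demand requirement at node 8

# Structural constraints of the Figure 8 subsystem.
CONSTRAINTS = {
    "bound x25": lambda x: x["x25"] <= UPPER["x25"],
    "bound x35": lambda x: x["x35"] <= UPPER["x35"],
    "balance at node 5": lambda x: x["x25"] + x["x35"] - x["x57"] - x["x58"] == 0,
    "demand at node 8": lambda x: x["x58"] >= DEMAND_8,
}


def satisfies(x, skip=None):
    """True if x >= 0 and x meets every structural constraint except `skip`."""
    if any(value < 0 for value in x.values()):
        return False
    return all(check(x) for name, check in CONSTRAINTS.items() if name != skip)


def is_infeasible():
    """Balance and x57 >= 0 give x58 = x25 + x35 - x57 <= 10 + 10, short of the demand."""
    return sum(UPPER.values()) < DEMAND_8


# One hand-picked feasible point for each relaxed subsystem (assumed for this sketch).
WITNESSES = {
    "bound x25": {"x25": 20, "x35": 10, "x57": 0, "x58": 30},
    "bound x35": {"x25": 10, "x35": 20, "x57": 0, "x58": 30},
    "balance at node 5": {"x25": 0, "x35": 0, "x57": 0, "x58": 30},
    "demand at node 8": {"x25": 0, "x35": 0, "x57": 0, "x58": 0},
}


def is_irreducible():
    """Dropping any single structural constraint leaves a feasible subsystem."""
    return all(satisfies(WITNESSES[name], skip=name) for name in CONSTRAINTS)


print(is_infeasible(), is_irreducible())
```

A general-purpose IIS finder would locate such subsystems by solving linear programs rather than by supplying witnesses by hand; the point here is only to make the definition concrete.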

6. Intelligent Mathematical Programming Systems

What makes an MPS intelligent? In artificial intelligence, we would say the computer

performs reasoning, arriving at conclusions that we normally attribute to human

problem-solving. Here, this is broadened to include discourse models, such as

visualization aids. The main focal points, analysis, formulation, and discourse, comprise

building blocks for the IMPS. The concepts on which they are built, the functions they

serve, and the techniques employed comprise the cornerstone of its foundation.

6.1. Formulation


Very few optimization modeling languages or systems provide intelligent aids for

formulation. One exception is the XPRESS-LP system. There have been approaches

taken, and the general idea is illustrated in Figure 9.

Figure 9: Formulation Component of IMPS

There have been experiments with the form of the model assistant, ranging from model

libraries to jigsaw puzzle reasoning. In all cases the role of the software was to help

someone who is not an expert in mathematical programming formulate a model. This

has so far not succeeded, and if it is to be developed, the formulation phase must be

viewed more broadly. In addition to some expression of the model objects and relations,

there needs to be support generated for model management, database auditing, solver

selection and subsequent analysis support.

6.2. Model and Scenario Management

Here we expand the model assistant functions with two components: solver selector and

scenario manager. These are two fundamental functions, shown in Figure 10 in

conjunction with the formulation assistants. The figure is already rather busy, so other

functions and files are omitted. Model management aids include verification and

validation tasks. Verification is confirming that what we think is in the computer is

indeed there. Validation is determining the extent to which the model, or some scenario,

is sufficiently consistent with what it is supposed to model that results are not

misleading.

One element of verification shown in Figure 10 is the set of data checks. These checks are

produced by the model assistant as a file, and this file is read by the verifier. For example, the

model assistant determines that a necessary condition for feasibility is that some

function of the data must satisfy a relation, f (D) ≥ 0 (viz., total supply - total demand ≥

0). Whenever the data objects in D change, the verifier checks that the relation holds. Its

role is to prevent infeasible scenarios, giving causal information whenever it happens.


Figure 10: Formulation Component of IMPS
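
A data check of the form f(D) ≥ 0 can be sketched as follows, using total supply minus total demand as the relation. This is a hedged illustration rather than the interface of any actual verifier: the function names and the message wording are assumptions, and the data are the supplies and demands of the network in Figure 4.

```python
def supply_demand_margin(supply, demand):
    """f(D) = total supply - total demand; feasibility requires f(D) >= 0."""
    return sum(supply.values()) - sum(demand.values())


def verify(supply, demand):
    """Return (ok, message); the message carries the causal information on failure."""
    margin = supply_demand_margin(supply, demand)
    if margin >= 0:
        return True, f"necessary condition holds (surplus {margin})"
    return False, f"total demand exceeds total supply by {-margin}"


# Data of the network in Figure 4, keyed by node number.
SUPPLY = {1: 20, 2: 20, 3: 20}
DEMAND = {6: 10, 7: 20, 8: 30}

print(verify(SUPPLY, DEMAND))
# A changed scenario: raising the demand at node 8 to 40 violates the relation.
print(verify(SUPPLY, {**DEMAND, 8: 40}))
```

The Figure 4 data pass this check even though that network is infeasible, which shows that the relation is a necessary condition only: the verifier screens out some infeasible scenarios before a solver is run, but not all of them.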

6.3. Discourse

The objective of a discourse model is to communicate with a user, to which we add: in a

manner that is most natural for him or her. Natural language includes both text and

graphics. Algebraic and functional forms are what we are used to seeing in textbooks,

but constrained logic programming (CLP) is changing that. We now enlarge our

expressive powers to include many mathematical and logical forms.

The idea is to have one fundamental structure from which many different views can be

constructed at the will of the analyst. Some of the early ideas from neural networks and

concept graphs have not quite fulfilled their potential in discourse for optimization. We

primarily rely on syntax, but there is a growing development of visualization,

particularly for analysis support.

6.4. Analysis

So far, intelligent analysis support has been rule-driven. To illustrate, we shall show

some substructures found by ANALYZE, followed by a hypothetical discourse. Figure

11 shows a portion of a linear program that traces the flow of activities that generate

baseload electricity in a particular region. There are 26 activities that could generate this

electricity. Of these, 13 have zero reduced cost, including 9 basic activities. Each of the

13 activities is a candidate for being in a margin-setting path, so other paths were traced.

Each of the 12 paths must be blocked by some activity at bound; otherwise, a less


expensive path would have produced more, displacing the margin-setting flows in

Figure 11.

Figure 11: A Blocked Path in a Linear Program
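
The screening described above (keep the activities with zero reduced cost, then find where a path is blocked) might look like the following sketch. Everything in it is hypothetical: the three-activity path, its numbers, and the names `margin_candidates` and `blocking_activity` are invented for illustration and are not taken from ANALYZE or from the model in Figure 11. It also assumes that a path is blocked only by an activity at its upper bound.

```python
from dataclasses import dataclass

TOL = 1e-9  # tolerance for "zero" reduced cost and "at bound"


@dataclass(frozen=True)
class Activity:
    name: str
    level: float
    upper: float
    reduced_cost: float
    basic: bool


def margin_candidates(activities):
    """Names of zero-reduced-cost activities, split into (basic, nonbasic)."""
    zero = [a for a in activities if abs(a.reduced_cost) <= TOL]
    return ([a.name for a in zero if a.basic],
            [a.name for a in zero if not a.basic])


def blocking_activity(path):
    """Name of the first activity at its upper bound, or None if the path is open."""
    for a in path:
        if a.upper - a.level <= TOL:
            return a.name
    return None


# Hypothetical path: mine fuel, ship it, generate baseload electricity.
PATH = [
    Activity("mine", level=30.0, upper=100.0, reduced_cost=0.0, basic=True),
    Activity("ship", level=30.0, upper=30.0, reduced_cost=-0.4, basic=False),
    Activity("generate", level=30.0, upper=80.0, reduced_cost=0.0, basic=True),
]

print(margin_candidates(PATH))
print(blocking_activity(PATH))
```

In this made-up path the shipping activity is at capacity, so it is the one that blocks a cheaper path from producing more.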

Figure 12 shows a possible discourse with an analyst after the blocking path is found.

The English translations are made possible by the syntax maps recognized by

ANALYZE.

Figure 12: Discourse Explaining Blocked Path in Figure 11

Figure 13 gives an overview of an entire IMPS, with three places for intelligent support:

during model formulation (or revision), selecting a solver, and analyzing the results.

The level of detail is minimal, as one notes upon looking at Figure 10. The knowledge

bases could be distributed or united, and the model manager maintains all components.

7. Beyond the Horizon

Many of the underlying principles that connect software and optimization apply to other

techniques in operations research. Simulation is notable because the old concept of

languages evolved into systems (or platforms), and intelligent modeling aids began to

emerge in the 1980s. Animation was introduced to help debug simulation models, and

Jones showed how animation can be used for related insights into sensitivity analysis.


Figure 13: IMPS Components

More visualization has been a key to modern software systems, and modelers have

come to expect graphic user interfaces (GUI). Algorithm animation has not fully

penetrated optimization software, but this is also coming.

As visualization increases in importance, we can expect to see more on the web.

Websim is the current term that refers to web-based simulation, and it is one of the

current research areas. There are Java Applets presently on the web to allow people to

model and solve optimization problems. These are small, designed for pedagogical

purposes, but we can expect this to develop further into remote capabilities for

production.

Acknowledgement

The author is grateful to Professor Dr. U. Derigs for the opportunity to contribute this

chapter to the EOLSS and for his patience while I learned the formatting requirements.


Glossary

Algorithm: A sequence of precisely specified computations to reach a solution.

Algorithm Controls: Parameters that affect tactics within an algorithm class, such as whether to conduct some test.

Approximation Algorithm: For an NP-hard problem, this is a procedure that takes a polynomial amount of time to obtain a solution that is guaranteed to be within some percent of optimal.

CLP: Constrained Logic Programming.

Data structure: How we store information in a computer.

GUI: Graphic user interface.

IMPS: Intelligent Mathematical Programming System.

Infeasibility: An instance of a mathematical program for which there is no feasible solution.

Load Balancing: The goal of having each of many processors perform the same amount of computation.

Message Passing: The transfer of information from one processor to another in a parallel computing environment.

Metaheuristics: A general framework for searching a space to solve hard problems (meta is a prefix, as in a meta-language being a language to describe languages).

Model Assistant: A component of an IMPS designed to assist model formulation.

MPS: Mathematical Programming System.

NP-Hard: A problem class for which there is no known algorithm that can solve any member in polynomial time.

Speedup: The fraction of how close to perfect distribution of computation an algorithm implementation comes.

Bibliography

Ashford R.W. and Daniel R.C. (1986). LP-MODEL: XPRESS-LP's model builder. IMA Journal of

Mathematics in Management 1, 163-176. [Downloads are available at <http://www.dash.co.uk>.]

Bentley P.J. ed. (1992, 1999). Evolutionary Design by Computers, 446 pp. San Francisco, CA: Morgan

Kaufmann. [This is a basic reference for evolutionary algorithms/programming.]

Bertsekas D.P. and Tsitsiklis J.N. (1989). Parallel and Distributed Computation, 715 pp. Englewood

Cliffs, NJ: Prentice-Hall. [This presents the fundamentals of parallel algorithms, with special attention to

optimization.]

Chandru V. and Hooker J.N. (1953, 1999). Optimization Methods for Logical Inference, 365 pp. New

York: John Wiley & Sons. [This organizes the developments in applying integer programming to the sort

of logical inference done in artificial intelligence. Emphasis is given to the authors' work during the past

decade.]

Chinneck J.W. (1997). Computer codes for the analysis of infeasible linear programs. Journal of the

Operational Research Society 47(1), 61-72. [This describes the author's development of computing IISs

in various optimization programs.]

Chinneck J.W. (1997). Feasibility and viability. Advances in Sensitivity Analysis and Parametric

Programming (ed. T. Gal and H.J. Greenberg), pp. 14-1-14-41. Boston, MA: Kluwer Academic

Publishers. [This surveys the state-of-the-art in practical methods for helping an analyst understand why a


problem is infeasible, including non-linear programs. This addresses the related question of whether some

variables must be zero in every feasible solution.]

Chinneck J.W. Analyzing mathematical programs using MProbe. Annals of Operations Research, To

appear. Downloads are available at <http://www.sce.carleton.ca/faculty/chinneck/mprobe.html>. [This

applies to any mathematical program, but its main use is to provide shape information for nonlinear

functions. It probes AMPL source code and uses a sampling technique.]

Chinneck J.W. and Greenberg H.J. (1999). Intelligent mathematical programming software: Past, present,

and future. Canadian Operations Research Society Bulletin 33(2), 14-28. Also appeared in INFORMS

Computing Society Newsletter 20(1), 1-9, 1999. [This surveys optimization software that is intelligent in

its ability to help users formulate and analyze mathematical programs and generally manage the process

of doing so.]

Crescenzi P. and Kann V., ed. (2000). A compendium of NP optimization problems.

<http://www.nada.kth.se/~viggo/problemlist/compendium.html>. [The editors maintain this site, giving

latest bounds and time complexity of approximation algorithms for NP-hard problems.]

Dutta A., Siegel H.J., and Whinston A.B. (1983). On the application of parallel architectures to a class of

operations research problems. R.A.I.R.O. Recherche opérationnelle/Operations Research 17(4), 317-341.

[This is one of the first parallel algorithms for linear programming. It applies to Dantzig-Wolfe

decomposition.]

Eckstein J., Hart W.E., and Phillips C.A. (2000). PICO: An object-oriented framework for parallel

branch and bound. Research report, Albuquerque, NM: Sandia National Laboratories. Also available as a

RUTCOR technical report. [This is a primer for the entitled object-oriented system where the user

supplies the classes for a particular problem and algorithm.]

Fourer R. (1983). Modeling languages versus matrix generators for linear programming. ACM

Transactions On Mathematical Software 9, 143-183. [This was an early description that clarified the

entitled distinction.]

Fourer R. (1996). Software for optimization: A buyer's guide, Parts 1 & 2. INFORMS Computing Society

Newsletter 17(1, 2). [This gives a broad view of what is available in all areas of optimization software.]

Glover F. and Laguna M. (1997). Tabu Search, 382 pp. Boston, MA: Kluwer Academic Publishers. [This

is the basic book about Tabu Search, written by its pioneers.]

Greenberg H.J. (1993). A Computer-Assisted Analysis System for Mathematical Programming Models and

Solutions: A User's Guide for ANALYZE. Boston, MA: Kluwer Academic Publishers. Downloads are

available at <http://www.cudenver.edu/~hgreenbe/imps/software.html>. [This describes and illustrates the

ANALYZE software system, which is part of the author's intelligent mathematical programming system.]

Greenberg H.J. (1994). Syntax-directed report writing in linear programming. European Journal of

Operational Research 72(2), 300-311. [This shows how the rules of composition (the syntax of a model)

can be used to drive the report writing process, including interactive query.]

Greenberg H.J. (1996). A bibliography for the development of an intelligent mathematical programming

system. Annals of Operations Research 65, 55-90. Online versions are available at

<http://www.cudenver.edu/~hgreenbe/imps/impsbib/impsbib.html> and <http://orcs.bus.okstate.edu/itorms>.

[This gives hundreds of citations from early works through 1996.]

Greenberg H.J. (1996-2000). Mathematical Programming Glossary.

<http://www.cudenver.edu/~hgreenbe/glossary/>. [This is an ongoing glossary with about 700 entries and

supplements.]

Greenberg H.J. and Maybee J.S. ed. (1981). Computer-Assisted Analysis and Model Simplification, 522

pp. New York: Academic Press. [This introduced computer-assisted analysis as a formal foundation for

what evolved into an intelligent aid.]

Greenberg H.J. and Murphy F.H. (1991). Approaches to diagnosing infeasible linear programs. ORSA

Journal on Computing 3(3), 253-261. Note: the journal has been renamed INFORMS Journal on

Computing. [This describes the main approaches to the entitled problem, pointing out that Diagnosis =

Isolation + Explanation.]


Greenberg H.J. and Murphy F.H. (1992). A comparison of mathematical programming modeling systems.

Annals of Operations Research 38, 177-238. [This uses specific examples to compare and contrast three

types of systems: process, algebraic and schematic.]

Greenberg H.J. and Murphy F.H. (1995). Views of mathematical programming models and their

instances. Decision Support Systems 13(1), 3-34. [This shows many ways to view optimization models,

with focus on LP. A central data structure is proposed to enable the computer to produce any view that

best meets the cognitive skill of the user.]

Heath M.T., Ranade A., and Schreiber R.S. ed. (1999). Algorithms for Parallel Processing. New York:

Springer. [This is a modern account, with a variety of subjects by different authors.]

Hochbaum D.S., ed. (1997). Approximation Algorithms for NP-Hard Problems. Boston, MA: PWS

Publishing Co. [This is the fundamental reference to learn about approximation algorithms and their

application to optimization problems.]

Hoffman K.L. Combinatorial optimization: Current successes and directions for the future. Journal of

Computational and Applied Mathematics, (In Press). [This gives perceptive insights about the importance

of modeling combinatorial optimization problems. It was also a keynote talk at the 1999 SIOPT meeting.]

Hooker J. (2000). Logic-Based Methods for Optimization: Combining Optimization and Constraint

Satisfaction. New York: John Wiley & Sons. [This combines the strengths of the entitled topics into a

coherent theory of problem expression and algorithm design.]

Jones C.V. (1995). Visualization and Optimization. Boston, MA: Kluwer Academic Publishers. An

online version is at <http://www.chesapeake2.com/cvj/itorms/>. [This is a complete compendium of the

many visualizations that have been developed, including many by the author.]

McAloon K. and Tretko C. (1995). Logic and Optimization. New York: John Wiley & Sons. [This is an

important introduction, taking problem descriptions from the view of artificial intelligence, and solution

methodology from integer programming.]

McCarl B. (1996). GMSCHK User Documentation. <http://agrinet.tamu.edu/mccarl/gamssoft.htm>.

Lubbock, TX: Texas A&M University. [This is one-of-a-kind software that applies directly to GAMS

source code.]

Nemhauser G.L., Savelsbergh M.W.P., and Sigismondi G.C. (1994). MINTO, A Mixed INTeger

Optimizer. Operations Research Letters 15, 47-58. Downloads available at

<http://akula.isye.gatech.edu/~mwps/projects/minto.html>. [This is an object-oriented system where the user

supplies the classes for a particular problem and algorithm.]

Orchard-Hays W. (1978). History of mathematical programming systems. Design and Implementation of

Optimization Software (ed. H.J. Greenberg), 551 pp. Alphen aan den Rijn, The Netherlands: Sijthoff &

Noordhoff. [This gives the early history of mathematical programming and how it was intertwined with

the history of computer science during the years 1950-75.]

Pardalos P.M., Resende M.G.C., and Ramakrishnan K.G. ed. (1995). Parallel Processing of Discrete

Optimization Problems, DIMACS Series in Discrete Mathematics and Theoretical Computer Science 22.

Providence, RI: American Mathematical Society. [This has an important collection of papers and an

insightful introduction.]

Robbins T. (1970). Antenna Design. Doctoral Thesis, Southern Methodist University, Dallas, TX. [This

was an early example of applying optimization to a very difficult objective, requiring significant

computer time for each evaluation.]

Rumelhart D.E. and McClelland J.L. ed. (1986). Parallel Distributed Processing, Explorations in the

Microstructure of Cognition, Vol. 1: Foundations. Cambridge, MA: MIT Press. [This was a very inspiring

book that helped to create major research efforts in neural network computers.]

Rutherford T. (1999). Some GAMS Programming Utilities. <http://nash.colorado.edu/tomruth/inclib/tools.htm>.

Boulder, CO: University of Colorado. [This is a useful suite of tools, including interfaces with building

output tables.]


Sowa J.F. (1984). Conceptual Information Processing, 374 pp. Amsterdam; New York: American

Elsevier. [This presented new concepts connecting language and intelligence. It led to new

implementations of natural language processing.]

Spedicato E. ed. (1994). Algorithms for Continuous Optimization: The State of the Art. NATO ASI

Series, 565 pp. Dordrecht, The Netherlands: Kluwer Academic Publishers. [This has an excellent

collection of papers, focused on numerical methods to solve nonlinear programs.]

Stasko J.T., Domingue J.B., Brown M.H., and Price B.A., ed. (1998). Software Visualization:

Programming as a Multimedia Experience, 562 pp. Cambridge, MA: MIT Press. [This is a general book

on visualization that includes algorithm animation.]

Thienel S. (1995). ABACUS A Branch-And-CUt System. Doctoral Thesis, Universität zu Köln, Germany.

More information is available at <http://www.informatik.uni-koeln.de/ls_juenger/projects/abacus/>.

[This is an object-oriented system where the user supplies the classes for a particular problem and

algorithm.]

Vanderplaats G. (1995). DOT Users Manual. Colorado Springs, CO: Vanderplaats Research and

Development, Inc. Downloads available at <http://www.vrand.com>. [This describes a Design

Optimization System, which addresses problems that are hard because the objective function requires a

significant amount of time for each evaluation. Generally, f (x) is the numerical solution to a PDE, where

x is a vector of design parameters.]

Waren A.D., Hung M.S., and Lasdon L.S. (1987). The status of nonlinear programming software: An

update. Operations Research 35(4), 489-503. [One of the significant advances addressed in this survey is

the emergence of PCs, making computer storage space no longer an issue, as it had been.]

Wirth N. (1976). Algorithms + Data Structures = Programs, 366 pp. Englewood Cliffs, N.J.: Prentice-Hall. [This is a classic book about Algorithms + Data Structures = Programs.]

Biographical Sketch

Harvey Greenberg is Professor of Mathematics at the University of Colorado at Denver. After receiving

his Ph.D. in Operations Research from The Johns Hopkins University in 1968, he joined the Faculty of

Computer Science and Operations Research at Southern Methodist University. Dr. Greenberg has

pioneered the interfaces between operations research and computer science since that time. He was a

founder of what is now the INFORMS Computing Society, having served as its Chairman twice, and he

was the founding Editor of the INFORMS Journal on Computing. While at the U.S. Department of

Energy, 1976-83, Dr. Greenberg developed Computer Assisted Analysis (CAA), dealing with advanced

analysis techniques, including debugging scenarios that might be infeasible or anomalous. Shortly after

joining CU-Denver in 1983, he formed a Consortium to develop an Intelligent Mathematical

Programming System, which extended CAA in breadth and depth. During that time, the project generated

several software systems and about 50 papers. One of the systems, ANALYZE, was recognized with the

first “ORSA/CSTS Award for Excellence in the Interfaces Between Operations Research and Computer

Science.” The system is still used today, and it was a major part of Dr. Greenberg’s plenary talk at the

Canadian Operational Research Society, when he accepted their Harold Larnder Prize for having

“achieved international distinction in Operational Research.” Since 1996, Dr. Greenberg has been an

active developer of web materials to support teaching. In addition to his own site, which contains the

Mathematical Programming Glossary, he developed a site as a service to the Mathematics Department,

called “Using the Web to Teach Mathematics.” Most recently, Dr. Greenberg established a collaborative

relationship with Sandia National Laboratories, helping to develop parallel algorithms for NP-hard

problems, notably in computational biology, such as protein folding. Dr. Greenberg has published 104

papers and four books; he has (co)edited seven additional books or proceedings. He serves on six editorial

boards, and has been Guest Editor for the Annals of Mathematics and Artificial Intelligence on such topics

as “Reasoning about Mathematical Models” and “Representations of Uncertainty”.

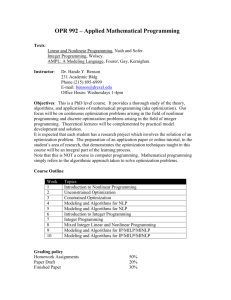

©Encyclopedia of Life Support Systems (EOLSS)