A Codeword Proof of the Binomial Theorem Column Integration and


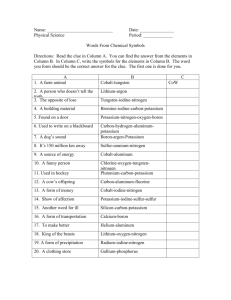

A Codeword Proof of the Binomial Theorem

Mark Ramras (ramras@neu.edu), Northeastern University, Boston, MA 02115

The standard proof of the Binomial Theorem for positive integer exponent $n$ treats the $x$ in $(1 + x)^n$ as a variable and the $n$th power as a product of $n$ factors of $(1 + x)$. (See, for example, [1], [2], [3], [5].) The proof below relies more on counting and less on polynomial algebra. (See [4] for other identities involving binomial coefficients that use counting arguments.)

Theorem 1. For any positive integers $n$ and $r$,

$$(1 + r)^n = \sum_{k=0}^{n} \binom{n}{k} r^k.$$

Proof. Consider an alphabet consisting of $r$ ordinary letters and one special letter, say ∗. We count the number of possible codewords of length $n$ in two ways. First, since there are a total of $1 + r$ symbols available and there are $n$ slots to fill, the Multiplication Principle shows that the number of codewords is $(1 + r)^n$.

On the other hand, we can partition the codewords according to the number of non-∗'s that appear. Let this number be $k$, so that $0 \le k \le n$. Constructing a codeword with $k$ non-∗'s consists of selecting $n - k$ of the $n$ slots for the ∗'s, and then selecting a codeword of length $k$ containing no ∗'s. The Multiplication Rule says that the number of ways of constructing such codewords is the product of the number of ways of making each of the two selections. The first selection is made in $\binom{n}{n-k} = \binom{n}{k}$ ways. The second, using the Multiplication Rule again, is made in $r^k$ ways. Summing on $k$ from $k = 0$ to $k = n$ by the Sum Rule completes the calculation of the number of codewords of length $n$. Since both sides of the Binomial Theorem count the same quantity, we therefore have equality and have proved the theorem.

We can now proceed to extend the result to all real numbers $r$ by using the fact that a nonzero polynomial over $\mathbb{R}$ has only finitely many zeros. Let

$$F(x) = (1 + x)^n - \sum_{k=0}^{n} \binom{n}{k} x^k.$$

By the theorem above, $F(r) = 0$ for all positive integers $r$.
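As a sanity check on the two-way count, and hence on the claim that $F(r) = 0$ at positive integers, the following Python sketch enumerates every codeword for a few small $n$ and $r$, tallies the codewords by the number $k$ of non-∗ letters, and compares each class with $\binom{n}{k} r^k$. The function names `tally_codewords` and `check` are illustrative and do not come from the original note.

```python
from collections import Counter
from itertools import product
from math import comb


def tally_codewords(n, r):
    """Enumerate all length-n codewords over r ordinary letters plus '*',
    and count how many codewords contain exactly k non-'*' letters."""
    alphabet = ["*"] + list(range(r))  # ordinary letters are labeled 0..r-1
    tally = Counter()
    for word in product(alphabet, repeat=n):
        k = sum(1 for symbol in word if symbol != "*")
        tally[k] += 1
    return tally


def check(n, r):
    """Compare the brute-force tally with both sides of the identity."""
    tally = tally_codewords(n, r)
    # Each class of codewords with k non-'*' letters should have C(n, k) * r^k members.
    classes_ok = all(tally[k] == comb(n, k) * r**k for k in range(n + 1))
    # The total number of codewords should be (1 + r)^n.
    total_ok = sum(tally.values()) == (1 + r) ** n
    return classes_ok and total_ok


all_ok = all(check(n, r) for n in range(1, 6) for r in range(1, 4))
print(all_ok)
```

A brute-force check of this kind covers only the finitely many cases tried; the counting argument is what establishes the identity for all positive integers $n$ and $r$.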
Now $F$ is a polynomial of degree at most $n$, and has more than $n$ zeros (in fact, it has infinitely many zeros). Hence, $F$ is identically zero. Therefore,

$$(1 + x)^n = \sum_{k=0}^{n} \binom{n}{k} x^k \quad \text{for all } x \in \mathbb{R}.$$

References

1. Kenneth P. Bogart, Introductory Combinatorics, 3rd ed., Harcourt/Academic Press, 2000.
2. Richard A. Brualdi, Introductory Combinatorics, 3rd ed., Prentice-Hall, 1999.
3. Fred S. Roberts, Applied Combinatorics, Prentice-Hall, 1984.
4. Ken Rosen, Discrete Mathematics and its Applications, 4th ed., McGraw-Hill, 1999.
5. Alan Tucker, Applied Combinatorics, 4th ed., Wiley, 2002.

◦

Column Integration and Series Representations

Thomas P. Dence (tdence@ashland.edu), Ashland University, Ashland, OH 44805
Joseph B. Dence (dencec@mir.wustl.edu), University of Missouri, St. Louis, MO 63121

In the movie Stand and Deliver, which premiered in the 1980s, the calculus teacher Jaime Escalante demonstrated a short-cut scheme for integration. This method, sometimes known as column integration [3] or tabular integration by parts [1, 2, 4], calls for two columns of functions, labeled D and I. If we wish to determine an integral of the form $\int f(x) g'(x)\,dx$, we begin with $f(x)$ under the D column, and then successively differentiate it, while $g'(x)$ is placed under the I column and is then followed by successive antiderivatives. Then, in many cases, a series of cross-multiplications with alternating signs converges to the integral $\int f(x) g'(x)\,dx$.

144 © THE MATHEMATICAL ASSOCIATION OF AMERICA

To explain why, let $f^{(k)}(x)$ denote the $k$th derivative of $f(x)$ and let $g^{(-k)}(x)$ denote the $k$th integral of $g(x)$. Then, as shown below, if the series $\sum_{k \ge 0} (-1)^k f^{(k)}(x) g^{(-k)}(x)$ converges (and may be differentiated term by term), it represents an antiderivative of $f(x) g'(x)$. The two columns are laid out as follows; each entry of D is multiplied by the entry of I one row below it, with the indicated sign.

| D | Sign | I |
|---|---|---|
| $f^{(0)}(x) = f(x)$ | $+$ | $g^{(1)}(x) = g'(x)$ |
| $f^{(1)}(x)$ | $-$ | $g^{(0)}(x) = g(x)$ |
| $f^{(2)}(x)$ | $+$ | $g^{(-1)}(x)$ |
| $\vdots$ | $\vdots$ | $\vdots$ |
| $f^{(k)}(x)$ | $(-1)^k$ | $g^{(-k+1)}(x)$ |
| $\vdots$ | $\vdots$ | $g^{(-k)}(x)$ |

Let $F = \sum_{k \ge 0} (-1)^k f^{(k)} g^{(-k)}$. Then

$$
\begin{aligned}
F' &= \sum_{k \ge 0} (-1)^k f^{(k+1)} g^{(-k)} + \sum_{k \ge 0} (-1)^k f^{(k)} g^{(-k+1)} \\
   &= \sum_{k \ge 0} (-1)^k f^{(k+1)} g^{(-k)} + f^{(0)} g^{(1)} + \sum_{k \ge 1} (-1)^k f^{(k)} g^{(-k+1)} \\
   &= f g',
\end{aligned}
$$

since replacing $k$ by $k + 1$ in the last sum gives the negative of the first sum.

In most applications $f(x)$ is a polynomial, so the D column ends after finitely many steps and column integration yields $\int f(x) g'(x)\,dx$. If $f(x)$ is not a polynomial, one can always truncate the process at any level and obtain a remainder term [2] defined as the integral of the product of the two terms directly across from each other, namely $f^{(k)}(x)$ and $g^{(-k+1)}(x)$. The infinite series then converges if the remainder tends to zero.

Example 1. Integrating $\int x e^x\,dx$ by parts using $u = x$ and $dv = e^x\,dx$, we get

$$\int x e^x\,dx = x e^x - e^x = e^x (x - 1).$$

By column integration with $f(x) = e^x$ and $g'(x) = x$, we compute

| D | Sign | I |
|---|---|---|
| $e^x$ | $+$ | $x$ |
| $e^x$ | $-$ | $x^2/2$ |
| $e^x$ | $+$ | $x^3/6$ |
| $e^x$ | $-$ | $x^4/24$ |
| $\vdots$ | $\vdots$ | $\vdots$ |

$$
\begin{aligned}
\int x e^x\,dx &= e^x x^2/2 - e^x x^3/6 + e^x x^4/24 - \cdots + (-1)^k e^x x^k/k! + \cdots \\
&= e^x \left[ x^2/2 - x^3/6 + x^4/24 - \cdots + (-1)^k x^k/k! + \cdots \right] \\
&= e^x v(x),
\end{aligned}
$$

where $v(x) = \sum_{k=2}^{\infty} (-1)^k x^k/k!$ is well-defined. Since $e^x (x - 1)$ and $e^x v(x)$ are antiderivatives of $x e^x$, it follows that $e^x v(x) = e^x (x - 1) + C$. And since $v(0) = 0$, we have $C = 1$. Therefore, $v(x) = x - 1 + e^{-x}$ and

$$e^{-x} = 1 - x + v(x) = \sum_{k=0}^{\infty} (-1)^k x^k/k!.$$

VOL. 34, NO. 2, MARCH 2003 THE COLLEGE MATHEMATICS JOURNAL 145

Example 2. Integrating $\int x^2 e^x\,dx$ by parts using $u = x^2$ and $dv = e^x\,dx$, we get

$$\int x^2 e^x\,dx = x^2 e^x - 2\left[ x e^x - e^x \right] = (x^2 - 2x + 2) e^x.$$

By column integration using $f(x) = e^x$, we obtain

$$\int x^2 e^x\,dx = e^x \left[ \frac{x^3}{3} - \frac{x^4}{4 \cdot 3} + \frac{x^5}{5 \cdot 4 \cdot 3} - \cdots \right] = e^x w(x).$$

Since $e^x w(x)$ and $(x^2 - 2x + 2) e^x$ are antiderivatives of $x^2 e^x$, $e^x w(x) = (x^2 - 2x + 2) e^x + C$. And since $w(0) = 0$, it follows that $C = -2$. Therefore, $w(x) = x^2 - 2x + 2 - 2e^{-x}$ and

$$e^{-x} = \frac{1}{2} \left[ 2 - 2x + x^2 - w(x) \right] = \sum_{k=0}^{\infty} (-1)^k x^k/k!.$$

Interestingly, this series results from using column integration on any integral of the form $\int x^n e^x\,dx$. The next example produces some less familiar series of interest.

Example 3. For column integration of $\int x \ln(x)\,dx$, set $f(x) = \ln(x)$ and $g'(x) = x$. Then

| D | Sign | I |
|---|---|---|
| $\ln(x)$ | $+$ | $x$ |
| $1/x$ | $-$ | $x^2/2$ |
| $-1/x^2$ | $+$ | $x^3/6 = x^3/3!$ |
| $\vdots$ | $\vdots$ | $\vdots$ |
| $\dfrac{(-1)^{k-1} (k-1)!}{x^k}$ | $(-1)^k$ | $\dfrac{x^{k+1}}{(k+1)!}$ |
| $\vdots$ | $\vdots$ | $\dfrac{x^{k+2}}{(k+2)!}$ |

and we obtain

$$
\begin{aligned}
\int x \ln(x)\,dx &= \frac{x^2}{2} \ln(x) - \frac{x^2}{3!} - \frac{x^2}{4!} - \frac{2x^2}{5!} - \frac{3!\,x^2}{6!} - \cdots - \frac{(k-1)!\,x^2}{(k+2)!} - \cdots \\
&= \frac{x^2}{2} \ln(x) - x^2 \left[ \frac{1}{3!} + \frac{1}{4!} + \frac{2}{5!} + \frac{3!}{6!} + \cdots + \frac{k!}{(k+3)!} + \cdots \right] \\
&= \frac{x^2}{2} \ln(x) - x^2 v(x).
\end{aligned}
\tag{1}
$$

On the other hand, using integration by parts with $u = \ln(x)$ and $dv = x\,dx$, we find that $\int x \ln(x)\,dx$ has the value

$$\frac{x^2 \ln(x)}{2} - \frac{x^2}{4}. \tag{2}$$

Since (1) and (2) are both antiderivatives of $x \ln(x)$, they agree except for at most a constant, and hence the coefficients of their variable terms must agree. Therefore, $v(x) = 1/4$, and we obtain the series

$$\frac{1}{4} = \sum_{k=0}^{\infty} \frac{k!}{(k+3)!}. \tag{3}$$

This equality can be verified by writing the right-hand summation as a telescoping series

$$\frac{1}{2} \sum_{k=0}^{\infty} \left[ \frac{1}{(k+2)(k+1)} - \frac{1}{(k+3)(k+2)} \right].$$

If we look at the more general integral $\int x^n \ln(x)\,dx$, integration by parts yields

$$\frac{x^{n+1}}{n+1} \ln(x) - \frac{x^{n+1}}{(n+1)^2},$$

whereas column integration gives

$$\frac{x^{n+1}}{n+1} \ln(x) - x^{n+1} p(x),$$

where

$$p(x) = \frac{1}{(n+2)(n+1)} + \frac{1}{(n+3)(n+2)(n+1)} + \frac{2}{(n+4)(n+3)(n+2)(n+1)} + \cdots.$$

Equating the coefficients of $x^{n+1}$, we obtain for each integer $n \ge 1$ the family of representations

$$\frac{1}{(n+1)^2} = n! \sum_{k=0}^{\infty} \frac{k!}{(k+n+2)!}. \tag{4}$$

This series likewise can be verified by writing the right-hand side as a telescoping series

$$
\begin{aligned}
n! \sum_{k=0}^{\infty} \frac{k!}{(k+n+2)!} &= n! \sum_{k=0}^{\infty} \frac{1}{(k+n+2)(k+n+1) \cdots (k+2)(k+1)} \\
&= \frac{n!}{n+1} \sum_{k=0}^{\infty} \left[ \frac{1}{(k+n+1) \cdots (k+2)(k+1)} - \frac{1}{(k+n+2)(k+n+1) \cdots (k+2)} \right].
\end{aligned}
$$

The series (3) and (4) also follow from the more general results for $\int x^{p/q} \ln(x)\,dx$, where $p$ and $q$ are positive integers. Using both methods for integration, we obtain

$$\left( \frac{q}{p+q} \right)^2 = \sum_{k=0}^{\infty} \frac{k!\, q^{k+2}}{\prod_{i=1}^{k+2} (p + iq)}.$$

A word of caution should be mentioned regarding column integration.
Selection of the initial functions to be placed under the D and I columns is critical, for convergence may occur with one choice and not the other (although this is not too surprising, since students face the same dilemma with integration by parts). Sometimes convergence may not occur with either choice, as shown by $\int \sin(x) \cos(x)\,dx$, for which column integration formally yields $\pm(\sin^2 x + \cos^2 x + \sin^2 x + \cos^2 x + \cdots)$, with the sign depending on the choice, and this series diverges.

References

1. Leonard Gillman, More on tabular integration by parts, The College Math. Journal 22(5) (1991) 407–410.
2. David Horowitz, Tabular integration by parts, The College Math. Journal 21(4) (1990) 307–311.
3. Margaret Lial, Raymond Greenwell, Charles Miller, Calculus with Applications, 6th ed., Addison-Wesley, 1998, pp. 430–431.
4. V. N. Murty, Integration by parts, The College Math. Journal 11 (1980) 90–94.

◦

Constrained Optimization with Implicit Differentiation

Gary W. De Young (gdeyoung@kingsu.ab.ca), The King's University College, Edmonton, Alberta, Canada T6B 2H3

One of my favorite topics of first semester calculus is optimization using derivatives. Here students can really begin to see derivatives as powerful tools to solve many practical problems that occur in a wide variety of areas. This capsule presents an approach to constrained optimization problems that avoids many of the algebraic difficulties associated with the standard method of solving such problems. This approach often leads to relations, not immediately available from the standard method, that give deeper insight into solutions. Hopefully the students will remember the insights even after they have forgotten how to take derivatives.