Georges Bossert - Security Evaluation of Communication Protocols


Security Evaluation of Communication Protocols in Common Criteria

Georges Bossert¹,² and Frédéric Guihéry²

¹ CIDre team, Supelec, Cesson Sévigné, France
² AMOSSYS ITSEF, Rennes, France

name.surname@{amossys,supelec}.fr

Abstract. During CC evaluations, experts may face proprietary hardware or software interfaces. Understanding, validating and testing these interfaces requires significant time and resources. To limit the associated costs, a dynamic analysis approach appears more suitable, but it currently lacks efficient tools.

We introduce Netzob³, a tool for the dynamic analysis of proprietary protocols. It first spares the expert the manual learning of a protocol thanks to a semi-automated modeling approach: it applies passive and active algorithms to observed communications to build a model. This model can afterwards be used to measure the conformity of an implementation against its provided specification. For instance, Netzob can learn the message formats and the state machine of a protocol, and thus identify deviations from the documentation. Moreover, Netzob can combine any learned model with its traffic generator to apply targeted variations to message formats and sequences. In the context of AVA_VAN, Netzob therefore permits efficient robustness analysis of protocol implementations.

Netzob handles different types of protocols: text protocols (like HTTP and IRC), fixed-field protocols (like IP and TCP) and variable-field protocols (like ASN.1-based formats). Netzob can model network protocols, structured files and inter-process communication. It leverages algorithms from the fields of bioinformatics (Needleman-Wunsch, UPGMA) and automata theory (semi-stochastic stateful model, Angluin's L*).

Keywords: Protocol inference, Traffic generation, Vulnerability analysis, Common Criteria

1 Introduction

In recent years, the field of security evaluation of systems and software has been extended with new approaches and tools based on fuzzing. Compared to more traditional techniques (static and dynamic binary analysis, potentially combined with source code analysis), which require specialized skills, resources and time, these new tools offer many advantages: relative simplicity of implementation, a semi-automated approach, rapid acquisition of results, etc. However, experience shows that, to be truly effective, security analysis by fuzzing requires good knowledge of the target, and in particular of the communication protocols used to interact with it. This limits the effectiveness and completeness of the results obtained when analyzing products that implement proprietary or undocumented protocols.

³ See project page: http://www.netzob.org

On the other hand, when analyzing security products such as firewalls or network intrusion detection systems (NIDS), the evaluator usually needs to generate realistic traffic in order to assess the relevance and reliability of the tested product (that is, its ability to limit false positives and false negatives). This operation is complex to implement because it requires a complete characterization and control of the generated traffic, which in turn requires a thorough knowledge of the protocol.

Netzob was developed to fill these needs. This tool is a framework for protocol inference. It supports the expert in reverse engineering work by offering a set of semi-automated features that reduce the analysis time and ultimately improve the understanding of the targeted protocol. We organize the remainder of the article as follows. After presenting the terminology and our assumptions in Section 2, we detail Netzob's design and functionalities in Section 3 and its implementation in Section 4. We discuss its application in a Common Criteria evaluation context in Section 5 and conclude in Section 6.

2 Terminology and design assumptions

A few days after beginning the project, we concluded our literature review with the following observation: there is a huge gap between the academic and the applied worlds in the field of reverse engineering. The former has produced many works and papers, whereas in the applied world experts can be compared to fighters specialized in one-line commands and able to compute any CRC or format transcoding by heart.

On the academic side, most researchers consider M. A. Beddoe to be the initiator of automated reverse engineering (RE) with his tool PI⁴, presented in a paper [2] published in 2004. The following years saw multiple published papers, including those of W. Cui et al. [5, 4] and C. Leita [8]. Each of them introduces new algorithms and more sophisticated approaches, but none of them effectively brought a usable tool to the applied world. This resulted in a significant gap between researchers and security experts, which we try to address with this article.

2.1 Definition of a Communication Protocol

A communication protocol can be defined as the set of rules allowing one or more entities (or actors) to communicate. Applied to the field of computer networking, protocols have been the subject of many standardization activities, particularly through the OSI model, which establishes, among other things, the principle of protocol layers. However, few studies have attempted to give a formal and generic definition of a protocol. We refer here to the definition provided by Gerard Holzmann in his reference book “Design and Validation of Computer Protocols” [7]. According to the author, a protocol specification consists of five distinct parts:

1. The service provided by the protocol.
2. The assumptions about the environment in which the protocol is executed.
3. The vocabulary of messages used to implement the protocol.
4. The encoding (format) of each message in the vocabulary.
5. The procedure rules guarding the consistency of message exchanges.

⁴ See the “Protocol Informatics” Project: http://www.4tphi.net/~awalters/PI/PI.html

In our work, we seek to infer the last three elements of the specification, since they are mandatory to configure the fuzzing process and to generate valid traffic. Consequently, we consider that protocol inference requires learning both:

1. The set of messages and their formats, also called the vocabulary of a protocol.
2. All the procedure rules, which we call the grammar of a protocol.

These two parts of a protocol are detailed in the following sections.

2.2 Definition of the Vocabulary of a Protocol

Within Netzob, the vocabulary consists of a set of symbols. A symbol represents an abstraction of similar messages, where similar messages are those that have the same role from a protocol perspective. For example, the set of TCP SYN messages can be abstracted to the same symbol. An ICMP ECHO REQUEST or an SMTP EHLO command are other kinds of symbols.

A symbol structure follows a format that specifies a sequence of expected fields (e.g. TCP segments contain expected fields such as the sequence number and the checksum). Fields have either a fixed or a variable size. A field can itself be composed of sub-elements (such as a payload field). Therefore, by considering a protocol layer as a particular kind of field, it is possible to retrieve the protocol stack (e.g. TCP encapsulated in IP, itself encapsulated in Ethernet), each layer having its own vocabulary and grammar. The identification of the different fields defines the symbol format.

In our model, a field has different attributes: some of them concern every field, while others are specific to the field type and are only relevant for visualization purposes. The domain defines the authorized values of a field, in disjunctive normal form⁵. An element of the domain can be:

– A static value (e.g. a magic number in a protocol header).
– A value that depends on another field, or set of fields, within the same symbol. We call this notion intra-symbol dependency (e.g. a CRC code).
– A value that depends on another field, or set of fields, that are part of a previous symbol transmitted during the same session. We call this notion inter-symbol dependency (e.g. an acknowledgment number).
– A value that depends on the environment. We call this notion environmental dependency (e.g. a timestamp).
– A random value (e.g. the initial value of the TCP sequence number field).

⁵ A Disjunctive Normal Form (DNF) is a logical formula built as an OR of ANDs: ((a AND b) OR (c AND d)).
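The notion of a domain expressed in disjunctive normal form can be pictured with a few lines of Python. This is only a minimal sketch and not Netzob's actual data model: the `Domain` class and the predicate helpers (`static`, `length_between`, `printable_ascii`) are hypothetical names introduced for illustration, and only static values and simple constraints are covered, not the dependency kinds listed above.

```python
from dataclasses import dataclass
from typing import Callable, List

# A predicate decides whether a raw field value satisfies one constraint.
Predicate = Callable[[bytes], bool]


@dataclass
class Domain:
    """Authorized values in disjunctive normal form: an OR of AND-clauses."""
    clauses: List[List[Predicate]]

    def accepts(self, value: bytes) -> bool:
        # Valid if at least one clause has all of its predicates satisfied.
        return any(all(p(value) for p in clause) for clause in self.clauses)


def static(expected: bytes) -> Predicate:
    """Constraint for a static value, such as a magic number."""
    return lambda value: value == expected


def length_between(lo: int, hi: int) -> Predicate:
    return lambda value: lo <= len(value) <= hi


def printable_ascii() -> Predicate:
    return lambda value: all(0x20 <= b <= 0x7E for b in value)


# (value == magic) OR (printable AND 1 to 8 bytes long)
magic_or_name = Domain([
    [static(b"\x7fELF")],
    [printable_ascii(), length_between(1, 8)],
])
```

Keeping each clause as a plain list of predicates makes it easy to add or remove alternatives while the expert refines a field by hand.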

Besides, fields have some interpretation attributes, notably for visualization

and data seeking purposes. We associate a field content with a unit size (the size

of atomic elements that compose the field, such as bit, half-byte, word, etc.), an

endianness, a sign and a representation (i.e. decimal, octal, hexadecimal, ASCII,

DER, etc.). Optionally, a semantic may be associated to a field (such as an IP

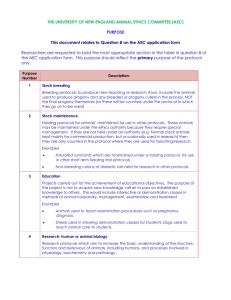

address, an URL, etc.). As an example, the Illustration 1 depicts different format

and visualization attributes of Botnet SDBot C&C messages.

Fig. 1. Format of SDBot C&C messages. The first line depicts the symbol format. The

second line corresponds to the visualization attributes. These attributes are optionally

overloaded by semantic characteristics.

2.3 Definition of the Grammar of a Protocol

The grammar represents the ordered sets of messages exchanged in a valid communication. For the ICMP protocol, the grammar includes a rule stating that an ICMP ECHO REPLY (type 0) always follows an ICMP ECHO REQUEST (type 8). Another example of a grammar is the set of rules that describe the ordered symbols exchanged between the two actors of a TCP session. These rules can be represented by an automaton that defines the states and the symbols sent and received on each transition (see the example in Figure 2).

Fig. 2. TCP State Transition Automaton (http://www.ssfnet.org/Exchange/tcp/tcpTutorialNotes.html)

Within Netzob, the grammar is defined with our own mathematical model, named Stochastic Mealy Machine with Deterministic Transitions (SMMDT). This extension of traditional Mealy machines allows multiple output symbols on the same transition, and can therefore represent multiple answers to the same command. In addition, our model also includes the reaction time for each pair of request and reply symbols. The interested reader can contact us with any question regarding the model; we have some nice equations available.
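To make the idea more concrete, here is a minimal Python sketch of a Mealy machine whose transitions are deterministic but whose output is drawn among several weighted alternatives, each carrying a reaction time. It is an illustration under our own assumptions, not the formal SMMDT definition: the class names, the `step` method and the toy HELLO/WELCOME/BUSY symbols are all hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Output:
    symbol: str           # output symbol emitted in reply
    probability: float    # weight of this reply among the alternatives
    reaction_time: float  # delay in seconds between request and reply


@dataclass
class Transition:
    next_state: str       # deterministic: one target state per (state, input)
    outputs: List[Output]


@dataclass
class StochasticMealyMachine:
    initial: str
    table: Dict[Tuple[str, str], Transition] = field(default_factory=dict)

    def step(self, state: str, symbol: str, rng: random.Random):
        """Follow the unique transition and draw one of its possible outputs."""
        transition = self.table[(state, symbol)]
        weights = [o.probability for o in transition.outputs]
        output = rng.choices(transition.outputs, weights=weights)[0]
        return transition.next_state, output


# Toy model: the same command may be answered in two different ways.
machine = StochasticMealyMachine(initial="idle")
machine.table[("idle", "HELLO")] = Transition(
    next_state="ready",
    outputs=[Output("WELCOME", 0.9, 0.05), Output("BUSY", 0.1, 0.50)],
)

state, reply = machine.step(machine.initial, "HELLO", random.Random(0))
```

The next state never depends on the drawn output, which is what keeps the transition structure deterministic while the replies remain stochastic.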

2.4 A Few Assumptions

Our objective is to infer the vocabulary and the grammar of a communication protocol in order to compare them against any provided specification and to evaluate implementations using a traffic simulator. These operations do not require prior knowledge of the protocol, but the following assumptions are made:

1. The inference process requires initial input data. Hence the expert must obtain samples from multiple communication channels carrying the targeted protocol. It can be any data flow, from a USB channel (e.g. external devices) to network flows (e.g. botnet C&C), inter-process calls (e.g. API hooking) or file formats (e.g. configuration files).
2. The input data should include the largest possible diversity of environmental values (IP addresses, nicknames and other user data, hostnames, date and time, ...).
3. The expert must have access to an implementation that uses the targeted protocol. It must be possible to automate its execution and to reset it to its initial state. We recommend the snapshot mechanisms included in VMware or VirtualBox for this.
4. Naturally, the targeted protocol should not include encrypted or compressed content. If some exists, the expert can use the provided entropy measures to identify part of it and use solutions like API hooking to retrieve the clear data.
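As an illustration of the entropy measure mentioned in the last assumption, the following sketch computes the Shannon entropy of a byte string. It is not the code shipped with Netzob; the `looks_encrypted` helper and its threshold of 7.0 bits per byte are assumptions chosen for the example, and short messages can easily mislead such a test.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte, between 0.0 and 8.0."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def looks_encrypted(data: bytes, threshold: float = 7.0) -> bool:
    """Hypothetical heuristic: near-uniform byte distributions are suspicious."""
    return shannon_entropy(data) >= threshold


clear_text = b"USER anonymous\r\n" * 10
uniform = bytes(range(256)) * 4
```

Encrypted and compressed content both yield values close to 8 bits per byte, so the measure flags a field for manual inspection rather than telling the two apart.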

3 Inferring Vocabulary and Grammar with Netzob

In this section, we describe the learning process implemented in Netzob to infer the vocabulary and the grammar of a protocol. This process, illustrated in Figure 3, is performed in three main steps:

1. Clustering messages and partitioning these messages into fields.
2. Characterizing message fields and abstracting similar messages into symbols.
3. Inferring the transition graph of the protocol.

3.1 Step 1: Clustering Messages and Partitioning in Fields

To discover the format of a symbol, Netzob supports different partitioning approaches. In this article we describe the most accurate one, which relies on sequence alignment. This technique aligns the invariants in a set of messages. The Needleman-Wunsch algorithm [10] performs this task optimally. Needleman-Wunsch is particularly effective on protocols where dynamic fields have variable lengths (as shown in Figure 4).
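The alignment of two messages can be sketched as follows. This is a textbook Needleman-Wunsch global alignment written for illustration, not Netzob's optimized implementation; the scoring values (match 1, mismatch -1, gap -1) and the use of "-" as gap marker are assumptions, so the sketch is only suitable for messages that do not themselves contain "-".

```python
def needleman_wunsch(a: str, b: str, match: int = 1, mismatch: int = -1, gap: int = -1):
    """Return (aligned_a, aligned_b, score) for the global alignment of a and b."""
    n, m = len(a), len(b)

    def sub(i: int, j: int) -> int:
        # Substitution score for aligning a[i-1] with b[j-1].
        return match if a[i - 1] == b[j - 1] else mismatch

    # score[i][j] is the best score for aligning a[:i] with b[:j].
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(score[i - 1][j - 1] + sub(i, j),
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)

    # Traceback from the bottom-right corner to rebuild one optimal alignment.
    out_a, out_b = [], []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(i, j):
            out_a.append(a[i - 1]); out_b.append(b[j - 1]); i -= 1; j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            out_a.append(a[i - 1]); out_b.append("-"); i -= 1
        else:
            out_a.append("-"); out_b.append(b[j - 1]); j -= 1
    return "".join(reversed(out_a)), "".join(reversed(out_b)), score[n][m]


aligned_a, aligned_b, total = needleman_wunsch("USER bob", "USER alice")
```

Columns where both aligned strings carry the same character are candidates for invariant fields, while gap columns reveal where a dynamic field changes length.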

Once the partitioning and clustering processes are done, we obtain a relevant first approximation of the overall message formats. The next step consists in determining the characteristics of the fields.

If the size of the fields is fixed, as in TCP and IP headers, it is preferable to apply a basic partitioning, also provided by Netzob. Such partitioning works by left-aligning the messages, and then separating runs of successive static columns from runs of successive dynamic columns.
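The basic partitioning described above can be sketched in a few lines. The function name `basic_partition` and the `(kind, start, end)` output format are hypothetical, and the rule that a column missing from a shorter message counts as dynamic is our own assumption.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def basic_partition(messages: Sequence[bytes]) -> List[Tuple[str, int, int]]:
    """Split left-aligned messages into runs of static and dynamic columns.

    Returns (kind, start, end) tuples, with end exclusive.
    """
    width = max(len(m) for m in messages)

    def is_static(col: int) -> bool:
        # Static means every message holds the same byte at this offset.
        values = {m[col:col + 1] for m in messages}
        return len(values) == 1 and b"" not in values

    fields = []
    for static, cols in groupby(range(width), key=is_static):
        cols = list(cols)
        fields.append(("static" if static else "dynamic", cols[0], cols[-1] + 1))
    return fields


samples = [
    bytes.fromhex("45000054aaaa"),
    bytes.fromhex("45000054bbbb"),
    bytes.fromhex("45000028cccc"),
]
fields = basic_partition(samples)
```

With too few samples a dynamic column may look static by coincidence, which is one reason why the input data should be as diverse as possible.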

To group aligned messages by similarity, the Needleman-Wunsch algorithm is used in conjunction with a clustering algorithm, namely UPGMA [11].
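A naive version of this clustering step is sketched below. The `upgma` function, its `max_distance` stopping threshold and the `text_distance` helper are illustrative assumptions; in particular, the distance here is derived from Python's `difflib` as a stand-in for an alignment score, which is not what Netzob does.

```python
from difflib import SequenceMatcher
from itertools import combinations


def upgma(items, distance, max_distance):
    """Merge the two closest clusters until none is closer than max_distance."""
    clusters = [[item] for item in items]

    def average_distance(c1, c2):
        # UPGMA linkage: arithmetic mean of all pairwise member distances.
        return sum(distance(x, y) for x in c1 for y in c2) / (len(c1) * len(c2))

    while len(clusters) > 1:
        (i, j), best = min(
            (((i, j), average_distance(clusters[i], clusters[j]))
             for i, j in combinations(range(len(clusters)), 2)),
            key=lambda pair: pair[1],
        )
        if best > max_distance:
            break
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return clusters


def text_distance(a: str, b: str) -> float:
    """Stand-in distance in [0, 1]; 0 means identical strings."""
    return 1.0 - SequenceMatcher(None, a, b).ratio()


messages = ["USER alice", "USER bob", "PASS secret", "PASS hunter2"]
clusters = upgma(messages, text_distance, max_distance=0.6)
```

The threshold controls how aggressively messages are grouped into the same symbol, so it is typically a value the expert tunes while inspecting the resulting clusters.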

Fig. 3. Steps of protocol inference in Netzob

Fig. 4. Example of sequence alignment on FTP messages

3.2 Step 2: Characterization of Fields

The identification of field types partially derives from the partitioning step. For fields containing only invariants, the type merely corresponds to the invariant value. For other fields, the type is automatically materialized, as a first approximation, by a regular expression, as shown in Figure 3. This form makes it easy to validate the conformity of data with a specific type. Moreover, Netzob offers the possibility to visualize the definition domain of a field, which helps to manually refine the type associated with it.
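The idea of a first-approximation type can be illustrated as follows. The `infer_field_regex` function and its three character classes are assumptions made for this sketch; the regular expressions actually produced by Netzob may differ.

```python
import re
from typing import Sequence


def infer_field_regex(values: Sequence[str]) -> str:
    """Derive a coarse regular expression from the observed values of a field."""
    if len(set(values)) == 1:
        # Invariant field: the type is the value itself.
        return re.escape(values[0])
    shortest = min(len(v) for v in values)
    longest = max(len(v) for v in values)
    if all(v.isascii() and v.isdigit() for v in values):
        char_class = "[0-9]"
    elif all(v.isascii() and v.isalnum() for v in values):
        char_class = "[A-Za-z0-9]"
    else:
        char_class = "."
    return f"{char_class}{{{shortest},{longest}}}"


reply_code = infer_field_regex(["220", "331", "230"])
user_name = infer_field_regex(["alice", "bob"])
```

A new message can then be checked against the inferred type with `re.fullmatch`, which is the kind of conformity validation mentioned above.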

Some intra-symbol dependencies are automatically identified. The size field, present in many protocol formats, is an example of intra-symbol dependency. A search algorithm has been designed to look for potential size fields and their associated payloads. By extension, this technique makes it possible to discover encapsulated protocol payloads.
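A brute-force variant of such a search is sketched below. It is not the algorithm used in Netzob: the function name is hypothetical, and the sketch assumes that a size field counts the bytes that follow it up to the end of the message, which is only one of several conventions found in practice.

```python
from typing import List, Sequence, Tuple


def find_size_fields(messages: Sequence[bytes],
                     widths: Tuple[int, ...] = (1, 2, 4)) -> List[Tuple[int, int, str]]:
    """Return (offset, width, byte order) candidates valid for every message."""
    candidates = []
    shortest = min(len(m) for m in messages)
    for width in widths:
        # Byte order is meaningless for single-byte fields.
        orders = ("big",) if width == 1 else ("big", "little")
        for offset in range(shortest - width + 1):
            for order in orders:
                if all(
                    int.from_bytes(m[offset:offset + width], order)
                    == len(m) - (offset + width)
                    for m in messages
                ):
                    candidates.append((offset, width, order))
    return candidates


captured = [b"\x01\x00\x03abc", b"\x01\x00\x05hello", b"\x01\x00\x02hi"]
size_candidates = find_size_fields(captured)
```

Several candidates may survive, as in this toy capture where a one-byte and a two-byte interpretation both fit, so the expert still has to choose between them.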

Environmental dependencies are also identified, by looking for specific values collected during message capture. Such values consist of characteristics of the underlying hardware, operating system and network configuration. During the dependency analysis, these characteristics are searched for in various encodings.
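The search for environmental values under several encodings can be sketched as follows. The set of encodings, the function names and the sample values are assumptions made for this example; the encodings actually tried by Netzob are not listed here.

```python
import socket
from typing import Dict, List, Tuple


def encodings_of(name: str, value: str) -> Dict[str, bytes]:
    """Build the byte patterns under which an environment value may appear."""
    variants = {
        "ascii": value.encode("ascii"),
        "utf-16-le": value.encode("utf-16-le"),
        "hex-ascii": value.encode("ascii").hex().encode("ascii"),
    }
    if name == "ip":
        # IPv4 addresses are often carried as four raw bytes.
        variants["packed-ipv4"] = socket.inet_aton(value)
    return variants


def find_environment_values(message: bytes,
                            environment: Dict[str, str]) -> List[Tuple[str, str, int]]:
    """Return (name, encoding, offset) for each environment value found."""
    hits = []
    for name, value in environment.items():
        for encoding, needle in encodings_of(name, value).items():
            offset = message.find(needle)
            if offset != -1:
                hits.append((name, encoding, offset))
    return hits


environment = {"ip": "192.168.1.10", "hostname": "lab-pc"}
message = b"\x02" + socket.inet_aton("192.168.1.10") + b"HELLO lab-pc\n"
hits = find_environment_values(message, environment)
```

Each hit gives the offset of a candidate field whose value depends on the capture environment rather than on the protocol state.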

3.3 Step 3: Inferring the Transition Graph of the Protocol

The third step of the learning process discovers and extracts the transition graph of the targeted protocol. This step is achieved by a set of active experiments that stimulate a real client or server implementation with successive sequences of input symbols and analyze its responses.

Each experiment consists of a sequence of symbols selected according to an adapted version of Angluin's L* algorithm [1], which originally applies to DFA⁶. This choice is justified by its ability to infer a deterministic transition graph in polynomial time. Its use on a protocol's grammar requires:

– Knowledge of the input symbols of the model (also called the vocabulary).
– That clients or servers fail in some obvious way, for instance by crashing, if the submitted sequence of symbols is not valid.
– The capability of resetting clients/servers to their initial state between experiments.
– That we can temporarily ensure that the protocol's grammar is deterministic.

The inference process involves a Learner, which initially only knows the input symbols extracted in the previous step. It tries to discover the language generated by the model by submitting queries to a Teacher and to an Oracle.

Among the possible queries, the original algorithm considers the following:

– Membership queries, which consist in asking the Teacher whether a sequence of symbols is valid. The Teacher replies yes or no.
– Equivalence queries, which consist in asking the Oracle whether the language of a hypothesized model is equal to the language of the model being inferred. If not, the Oracle supplies a counterexample.

This algorithm progressively builds a hypothesized model from the results of the submitted membership queries. Once the algorithm considers the learned model stable, an equivalence query is made. If the query is successful, the hypothesized model is considered equal to the model being inferred; otherwise, more membership queries are submitted according to the returned counterexample.
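The two kinds of queries can be illustrated against a toy, resettable implementation. The sketch below does not implement L* itself, only the Teacher and Oracle sides: `ToyServer` and its three symbols are hypothetical, and the equivalence query is approximated by exhaustively testing all sequences up to a bounded length, which is an assumption of this example rather than the method described in this article.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple


class ToyServer:
    """Hypothetical resettable implementation: LOGIN, then CMD*, then QUIT."""

    TRANSITIONS = {
        ("init", "LOGIN"): "auth",
        ("auth", "CMD"): "auth",
        ("auth", "QUIT"): "closed",
    }

    def __init__(self) -> None:
        self.reset()

    def reset(self) -> None:
        self.state = "init"

    def send(self, symbol: str) -> bool:
        """Return False when the symbol is not valid in the current state."""
        target = self.TRANSITIONS.get((self.state, symbol))
        if target is None:
            return False
        self.state = target
        return True


def membership_query(server: ToyServer, sequence: Sequence[str]) -> bool:
    """Teacher side: reset the target, replay the sequence, report validity."""
    server.reset()
    return all(server.send(symbol) for symbol in sequence)


def equivalence_query(server: ToyServer,
                      hypothesis: Callable[[Sequence[str]], bool],
                      alphabet: Sequence[str],
                      max_length: int) -> Optional[Tuple[str, ...]]:
    """Oracle side (approximation): return a counterexample or None."""
    for length in range(max_length + 1):
        for sequence in product(alphabet, repeat=length):
            if membership_query(server, sequence) != hypothesis(sequence):
                return sequence
    return None


server = ToyServer()
alphabet = ["LOGIN", "CMD", "QUIT"]
# A deliberately wrong hypothesis that accepts every sequence.
counterexample = equivalence_query(server, lambda seq: True, alphabet, 2)
```

Each membership query costs one reset of the target, which is why the ability to restore the implementation to its initial state quickly matters so much in practice.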

Finally, we consider that environmental variations may impact output symbols. Therefore, we confine the client/server in a restrictive environment to limit non-determinism in its output symbols. For example, we use network whitelist-based filtering to restrict Internet access to the websites identified as necessary.

⁶ DFA stands for “Deterministic Finite Automaton”, also known as “Deterministic Finite State Machine”.

4 Netzob Implementation

This section presents the implementation-specific aspects of Netzob, which is distributed under the GPLv3 license. At the time of writing (August 21, 2012), the source code comprises about 30,000 lines of code, mostly in Python, with some specific parts implemented in C for performance purposes. All algorithms described in this article have been reimplemented.

The source code is publicly available⁷ in a git repository, and packages for Debian, Gentoo, ArchLinux and Windows are provided. Currently, Netzob works on both x86 and x64 architectures and includes the following modules (as shown in Figure 5):

Fig. 5. Netzob architecture.

– Import module: Data can be imported in two ways: either by leveraging the channel-specific captors, or by using the XML interface format. As communication protocols are omnipresent, it is fundamental to be able to capture data in as many contexts as possible. Therefore, Netzob provides native captors and makes it easy to implement new ones. Current work focuses on data flow analysis, which would make it possible to acquire clear messages before they get encrypted. Besides, Netzob supports many input formats, such as network flows, PCAP files, structured files and inter-process communication (pipes, sockets and shared memory).
– Protocol inference modules: The vocabulary and grammar inference methods constitute the core of Netzob.

– Simulation module: One of our main goals is to generate realistic network traffic from undocumented protocols. Therefore, we have implemented a dedicated module that, given previously inferred vocabulary and grammar models, can simulate a communication protocol between multiple bots and masters. Although they use the same model, each actor is independent from the others and is organized around three main stages. The first stage is a dedicated library that reads from and writes to the network channel. It also splits the flow into messages according to the underlying protocol layers. The second stage uses the vocabulary to abstract received messages into symbols and, conversely, to specialize emitted symbols into messages. A memory buffer is also available to manage dependency relations. The last stage implements the grammar model and computes which symbols must be emitted or received according to the current state and time.
– Export module: This module exports an inferred protocol model in formats that can be understood by third-party software or by a human. Current work focuses on export formats compatible with the main traffic dissectors (Wireshark and Scapy) and fuzzers (Peach and Sulley).

⁷ See: http://www.netzob.org

5 Application to Common Criteria

In the context of a Common Criteria evaluation, Netzob covers three areas:

– attesting protocol compliance;
– testing the capability of network products (IDS, IPS, firewall, etc.) to filter proprietary and/or undocumented protocols;
– performing vulnerability analysis of a protocol implementation.

The first part of this section emphasizes the need for realistic and reproducible traffic generation. Then, we focus on the ATE test class of the CC methodology and see how Netzob helps to validate protocol compliance and assess the conformance of the security functions of network products. We finish with how one can leverage Netzob to perform vulnerability analysis (AVA_VAN class) of a protocol implementation, whether this protocol is documented or not.

5.1 A need for traffic generation

An evaluation methodology related to the CC needs to comply with several criteria that guarantee a rigorous scientific experiment. Among these criteria, our work addresses the following two:

Reproducibility refers to the ability of an independent and external expert to execute the experiments and to obtain results similar to those of the initial experiment.

Accuracy reflects the degree of similarity between the value obtained by the evaluation and a so-called “reference” value, or value considered to be true.

These two criteria lead to two conditions: realism and controllability. The realism of the experiments provides accurate results. Controllability ensures reproducibility and requires a complete characterization of the evaluation [14]. Evaluating equipment or software that uses a communication protocol requires, among other things, communication traffic representative of its operational conditions. Among the various methods used to generate traffic, three main solutions can be distinguished: the replay of captures, synthetic generation and hybrid generation.

The replay solution re-injects previously captured traffic on the communication channel. This method is often used by evaluators for its speed and ease of use. However, in order to avoid introducing out-of-scope behaviors, such as uncharacterized attacks or the presence of incompatible protocols, all the events included in the capture need to be characterized. This operation is expensive in time and expertise [3]. Moreover, the right to privacy is another reason to avoid this solution: the actors involved in the captured traces may not wish to see their virtual identities used and published [12].

The synthetic generation solution consists of the complete generation of network traffic based on generally accepted heuristics and statistics [9]. Those statistics model the evolution of an actor's behavior over time. However, the generated traffic is often too simple and lacks realism, since it requires developing all the modules involved in a communication network (IP stacks, web applications, etc.), which is complex.

The hybrid generation solution offers a way to generate traffic that respects both the criteria of realism and controllability [6]. Unlike the synthetic generation solution, the hybrid one extracts the characteristics of previously captured traffic. Therefore, the generated traffic accurately represents reality, at least up to the extracted characteristics used. The condition of controllability is also satisfied, since the method involves traffic generation and not just replay.

Because the hybrid approach satisfies the two conditions we need (realism and controllability), we decided to implement it in Netzob for traffic generation. Yet, as far as the authors know, no other public tool dedicated to the generation of traffic from undocumented protocols is available. Netzob tries to fill this gap.

5.2 ATE Test class analysis

Part of a CC evaluation is dedicated to the ATE class. This testing class provides assurance that the TOE behaves as documented in the Functional Specification (ADV_FSP). Two categories of products are especially meant to be addressed by Netzob during ATE analysis: secure protocol implementations (such as IPsec, TLS/SSL, EAP, etc.) and network products (such as IDS, IPS, firewalls, access controllers, etc.). For the former, Netzob eases the task of validating protocol compliance. Thanks to its capability to infer the message formats and state machine of a protocol from observed traffic, it is able to produce a protocol model that corresponds exactly to the implementation under evaluation. The final task consists in comparing the inferred model with the theoretical specification in order to attest compliance or to identify deviations.

In the context of testing network products (especially those that filter protocols), Netzob makes it possible to infer the model of proprietary and/or poorly documented protocols that should be supported by the evaluated products. Its traffic simulation engine is then able to generate realistic and reproducible traffic in order to assess the compliance of security functions and their coverage in terms of supported protocols and detected attacks. In the case of an IDS, Netzob could be used to generate realistic traffic of a botnet Command & Control channel.

Netzob can be used on the developer side during ATE class testing, for the functional tests (ATE_FUN), coverage (ATE_COV) and depth (ATE_DPT) families. It may also be used on the evaluator side for the independent testing family (ATE_IND). As an open-source project, Netzob can be part of the same toolset on each side.

5.3 Vulnerability analysis of a protocol implementation

In addition to test class analysis, Netzob can also be used by an evaluator in the vulnerability analysis (AVA_VAN) of the implementation of the TOE's communication protocols. It is useful to recall that, applied to the Common Criteria, a “vulnerability analysis is an assessment activity to determine the existence and exploitability of flaws or weaknesses in the target of evaluation (TOE) in the operational environment.” [13].

As part of an AVA_VAN, an evaluator may need to perform a dynamic analysis of the target. Executing such tests helps to filter out the false positives and false negatives produced by static analysis. Indeed, the latter takes little account of the environment in which the TOE is executed, which directly impacts the obtained results. In addition, dynamic analysis brings additional information on the target, including its memory status, the values of local variables, etc., which helps the evaluator in the analysis.

A typical example of dynamic vulnerability analysis is robustness testing. It can be used by CC evaluators to reveal software programming errors that can lead to software security vulnerabilities. These tests provide an efficient and almost automated solution to easily identify and study the exposed surfaces of systems. Nevertheless, to be fully efficient, fuzzing approaches must cover the complete definition domain and combination of all the variables that exist in a protocol (IP addresses, serial numbers, size fields, payloads, message identifiers, etc.). But fuzzing a typical communication interface requires too many test cases, due to the complex variation domains introduced by the semantic layer of a protocol. In addition, efficient fuzzing should also cover the state machine of a protocol, which brings another huge set of variations. The necessary time is nearly always too high and therefore limits the efficiency of this approach.

With all these constraints, achieving robustness tests on a target is feasible only if the expert has access to a tool specially designed for the targeted protocol. Hence the emergence of a large number of tools that verify the behavior of an application on one or more communication protocols. However, in the context of proprietary communication protocols for which no specification is published, fuzzers do not provide optimal results.

Netzob helps the evaluator by simplifying the creation of a dedicated fuzzer for a proprietary or undocumented protocol. It allows the expert to execute a semi-automated inference process to create a model of the targeted protocol. This model can afterwards be refined by the evaluator to include documented specificities obtained from the ADV_TDS or even from the ADV_IMP. Finally, the created model is included in the fuzzing module of Netzob, which considers the vocabulary and the grammar of the protocol to generate optimized and specific test cases. Both mutation and generation are available for fuzzing.
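The difference between the two fuzzing strategies can be sketched on a toy message format. Everything here is hypothetical: the format (a one-byte type, a two-byte big-endian size, a payload), the function names and the chosen boundary values are assumptions made for illustration and do not describe Netzob's fuzzing module. The sketch also covers message formats only, not variations of the state machine.

```python
import random
from typing import Iterator, Optional


def build(msg_type: int, payload: bytes, size: Optional[int] = None) -> bytes:
    """Serialize a message; an explicit size lets a test case lie about it."""
    size = len(payload) if size is None else size
    return bytes([msg_type]) + size.to_bytes(2, "big") + payload


def generation_cases() -> Iterator[bytes]:
    """Generation: build messages from the model using boundary values."""
    for msg_type in (0x00, 0x01, 0xFF):
        for payload in (b"", b"A", b"A" * 1024):
            yield build(msg_type, payload)
    # Size fields that contradict the actual payload length.
    for wrong_size in (0, 0xFFFF):
        yield build(0x01, b"AAAA", size=wrong_size)


def mutation_cases(sample: bytes, target: slice, count: int,
                   rng: random.Random) -> Iterator[bytes]:
    """Mutation: start from a captured message and randomize one field only."""
    for _ in range(count):
        mutated = bytearray(sample)
        for i in range(*target.indices(len(sample))):
            mutated[i] = rng.randrange(256)
        yield bytes(mutated)


captured = build(0x01, b"ping")
generated = list(generation_cases())
mutated = list(mutation_cases(captured, slice(3, 7), 5, random.Random(1)))
```

Knowing the field boundaries is what allows the mutation to stay inside the payload while leaving the type and size fields valid, so that the test case is not rejected by the first parsing check.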

6 Conclusion

In this paper, we introduced Netzob, an open source tool dedicated to the reverse engineering and simulation of communication protocols. In the context of a Common Criteria evaluation, Netzob is meant to help developers and evaluators in different areas where automation, accuracy and reproducibility are essential:

– attesting protocol compliance;
– testing the capability of network products (such as IDS, IPS and firewalls) to filter proprietary/undocumented protocols;
– performing vulnerability analysis of a protocol implementation.

The tool has been successfully applied at the AMOSSYS ITSEF and supports academic research in collaboration with the Supelec CIDre research team. This community project, which already involves other security companies and organizations, will continue to integrate up-to-date academic research while remaining usable in operational contexts.

References

[1] D. Angluin. Learning regular sets from queries and counterexamples. Inf. Comput., 75:87–106, November 1987.
[2] M. A. Beddoe. Network protocol analysis using bioinformatics algorithms. In Toorcon, 2004.
[3] M. Bishop, R. Crawford, B. Bhumiratana, L. Clark, and K. Levitt. Some problems in sanitizing network data. In 15th IEEE International Workshops on Enabling Technologies: Infrastructure for Collaborative Enterprises, pages 307–312, 2006.
[4] W. Cui. Discoverer: Automatic protocol reverse engineering from network traces. In Proceedings of the 16th USENIX Security Symposium, 2007.
[5] W. Cui, V. Paxson, N. C. Weaver, and R. H. Katz. Protocol-independent adaptive replay of application dialog. In The 13th Annual Network and Distributed System Security Symposium, 2006.
[6] S. Gorbunov and A. Rosenbloom. Autofuzz: Automated network protocol fuzzing framework. In IJCSNS International Journal of Computer Science and Network Security, volume 10, August 2010.
[7] G. J. Holzmann. Design and validation of computer protocols. Prentice-Hall, Inc., Upper Saddle River, NJ, USA, 1991.
[8] C. Leita, K. Mermoud, and M. Dacier. ScriptGen: an automated script generation tool for honeyd. In Proceedings of ACSAC, 2005.
[9] S. Luo and G. A. Marin. Modeling networking protocols to test intrusion detection systems. In Proceedings of the 29th Annual IEEE International Conference on Local Computer Networks, LCN ’04, pages 774–775, 2004.
[10] S. B. Needleman and C. D. Wunsch. A general method applicable to the search for similarities in the amino acid sequence of two proteins. Journal of Molecular Biology, 48(3):443–453, 1970.
[11] R. R. Sokal and C. D. Michener. A statistical method for evaluating systematic relationships. University of Kansas Scientific Bulletin, 28:1409–1438, 1958.
[12] J. Sommers, V. Yegneswaran, and P. Barford. Recent advances in network intrusion detection system tuning. In Information Sciences and Systems, 2006.
[13] Q. Trinh and J. Arnold. Common criteria vulnerability analysis of cryptographic security mechanisms. In ICCC’07, 2007.
[14] C. Wright, C. Connelly, T. Braje, J. Rabek, L. Rossey, and R. Cunningham. Generating client workloads and high-fidelity network traffic for controllable, repeatable experiments in computer security. In Proceedings of the 13th International Symposium on Recent Advances in Intrusion Detection (RAID 2010), 2010.