A chain rule for multivariate divided differences


Michael S. Floater∗

Abstract

In this paper we derive a formula for divided differences of composite functions of several variables with respect to rectangular grids of points. Letting the points coalesce yields a chain rule for partial derivatives of multivariate functions.

Math Subject Classification: 41A05, 65D05, 26A06

Keywords: Divided difference, chain rule, calculus of several variables, Faà di Bruno formula.

1 Introduction

The product rule for divided differences generalizes the Leibniz rule of calculus. It goes back at least to Popoviciu [16, 17] and Steffensen [22], and has played a role in the development of spline theory [1]. More recently, motivated by the error analysis of parametric curve interpolation, a chain rule was derived for divided differences: a formula that expresses divided differences of a composite function g ◦ f in terms of divided differences of the two functions f and g separately. Somewhat curiously, there are (at least) two such formulas, both of which were found in [8]. In one formula, all divided differences of the inner function f are over consecutive points, while in the other, all divided differences of the outer function g are over consecutive points, which suggests referring to the formulas as the inner and outer chain rules, respectively. The outer chain rule was found independently in [23].

∗ Centre of Mathematics for Applications, Department of Informatics, University of Oslo, PO Box 1053, Blindern, 0316 Oslo, Norway, email: michaelf@ifi.uio.no

Divided differences of composite functions appear in error formulas for parametric curve fitting based on polynomials and splines [15, 6, 7]. Compared with derivative chain rules such as Faà di Bruno's formula [5], the divided difference chain rules have the advantage that they can be applied to functions with little or no smoothness. Moreover, letting the points in these difference formulas coalesce gives a new and simple way of deriving Faà di Bruno's formula [5, 11, 18, 19, 12, 13, 20].

The inner chain rule was later used in [9] to derive a rule for divided differences of inverses of functions. Surprisingly, the inverse rule can be interpreted in terms of partitions of convex polygons, and offers a new way of counting such partitions.

Given these various applications, it seems worthwhile to look for a divided difference chain rule for multivariate functions. In this paper we show that at least the inner chain rule has a natural extension to multivariate functions when divided differences are defined over rectangular grids of points. The rule can be interpreted in terms of polygonal paths that pass through points in the grid and are monotonic with respect to each variable. Because multivariate divided differences converge to normalized partial derivatives as the grid points coalesce, the rule yields a corresponding formula for partial derivatives of multivariate composite functions; cf. [4].

2 The univariate formula

We begin by recalling the inner chain rule of [8]. Let $[x_0, x_1, \dots, x_n]f$ denote the usual divided difference of a real-valued function $f$ at the real values $x_0, \dots, x_n$; see [3]. We define $[x_i]f = f(x_i)$, and for distinct $x_i$,

$$[x_0, \dots, x_n]f = \frac{[x_1, \dots, x_n]f - [x_0, \dots, x_{n-1}]f}{x_n - x_0}.$$

We can allow any of the $x_i$ to be equal if $f$ has sufficiently many derivatives to allow it. In particular, if all the $x_i$ are equal to $x$, say, then $[x_0, x_1, \dots, x_n]f = f^{(n)}(x)/n!$. Sometimes, to simplify expressions, we will use the shorter notation

$$[x; i, j]f := [x_i, x_{i+1}, \dots, x_j]f, \qquad j \ge i. \tag{1}$$
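The recursive definition above can be sketched in Python as follows. This is a minimal illustration that assumes pairwise distinct points (the coalescent, Hermite case is not handled); the function name `divided_difference` is hypothetical, and exact rational arithmetic via `fractions.Fraction` is used so that identities can be checked without rounding error.

```python
from fractions import Fraction


def divided_difference(xs, f):
    """Return [x_0, ..., x_n]f for pairwise distinct points xs.

    Uses [x_0..x_n]f = ([x_1..x_n]f - [x_0..x_{n-1}]f) / (x_n - x_0),
    evaluated bottom-up in exact rational arithmetic.
    """
    xs = [Fraction(x) for x in xs]
    n = len(xs) - 1
    # Order 0: [x_i]f = f(x_i)
    table = [Fraction(f(x)) for x in xs]
    # After pass m, table[i] holds [x_i, ..., x_{i+m}]f
    for m in range(1, n + 1):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(n - m + 1)]
    return table[0]


# For a cubic, the third-order divided difference is its leading coefficient.
print(divided_difference([0, 1, 3, 4], lambda x: 2 * x**3 - x + 5))  # 2
```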

It was shown in [8] that for $n \ge 1$ and $f$ and $g$ smooth enough,

$$[x; 0, n](g \circ f) = \sum_{k=1}^{n} \sum_{0 = i_0 < \cdots < i_k = n} [f_{i_0}, f_{i_1}, \dots, f_{i_k}]g \prod_{j=1}^{k} [x; i_{j-1}, i_j]f, \tag{2}$$

where $f_i := f(x_i)$. The formula is a sum over all sequences of integers $(i_0, i_1, \dots, i_k)$ satisfying $0 = i_0 < i_1 < \cdots < i_k = n$, and the product term is formed by filling the gaps between $x_{i_{j-1}}$ and $x_{i_j}$: the factor $[x; i_{j-1}, i_j]f$ uses all the points $x_{i_{j-1}}, x_{i_{j-1}+1}, \dots, x_{i_j}$. For example, the case $n = 3$ is

$$\begin{aligned}
[x; 0, 3](g \circ f) &= [f_0, f_3]g\,[x; 0, 3]f \\
&\quad + [f_0, f_1, f_3]g\,[x; 0, 1]f\,[x; 1, 3]f \\
&\quad + [f_0, f_2, f_3]g\,[x; 0, 2]f\,[x; 2, 3]f \\
&\quad + [f_0, f_1, f_2, f_3]g\,[x; 0, 1]f\,[x; 1, 2]f\,[x; 2, 3]f.
\end{aligned} \tag{3}$$
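Formula (2) can be checked numerically. The sketch below is a hypothetical helper, not code from [8]: it enumerates the index sequences $0 = i_0 < \cdots < i_k = n$ by choosing the interior indices with `itertools.combinations` and compares both sides of (2) in exact arithmetic. It assumes that the points $x_i$ are distinct and that the values $f_i$ are distinct, so that only the plain recursion is needed.

```python
from fractions import Fraction
from itertools import combinations


def dd(xs, h):
    """[x_0, ..., x_n]h for distinct points, by the standard recursion."""
    xs = [Fraction(x) for x in xs]
    table = [Fraction(h(x)) for x in xs]
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def inner_chain_rule_rhs(x, f, g):
    """Right-hand side of (2) for the points x = [x_0, ..., x_n]."""
    n = len(x) - 1
    fv = [f(Fraction(t)) for t in x]                 # f_i = f(x_i)
    total = Fraction(0)
    for k in range(1, n + 1):
        # interior indices i_1 < ... < i_{k-1} chosen from {1, ..., n-1}
        for interior in combinations(range(1, n), k - 1):
            idx = (0, *interior, n)
            term = dd([fv[i] for i in idx], g)       # [f_{i_0}, ..., f_{i_k}]g
            for a, b in zip(idx, idx[1:]):
                term *= dd(x[a:b + 1], f)            # [x; i_{j-1}, i_j]f
            total += term
    return total


x = [0, 1, 2, 4]
f = lambda t: t * t + 1          # f_i = 1, 2, 5, 17 (distinct)
g = lambda y: 1 / (1 + y * y)    # need not be a polynomial
lhs = dd(x, lambda t: g(f(t)))
print(lhs == inner_chain_rule_rhs(x, f, g))  # True
```

With four points this reproduces exactly the four terms of (3).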

3 Chain rule: inner function multivariate

We begin the generalization of (2) by considering the case that the inner function $f$ is replaced by a multivariate function while the outer function $g$ remains univariate. Following the approach of [10, Sec. 6.6], if $f$ is a function of $p$ variables, $f = f(x^1, \dots, x^p)$, we denote by

$$[x^1_0, x^1_1, \dots, x^1_{n_1}; x^2_0, x^2_1, \dots, x^2_{n_2}; \dots; x^p_0, x^p_1, \dots, x^p_{n_p}]f$$

the divided difference of $f$ with respect to the real values $x^r_k$, $r = 1, \dots, p$, $0 \le k \le n_r$. This is the 'tensor-product' kind of divided difference, in the sense that it is the leading coefficient (the coefficient of $(x^1)^{n_1} \cdots (x^p)^{n_p}$) of the unique tensor-product polynomial that interpolates $f$ over the rectangular grid of points

$$(x^1_{k_1}, \dots, x^p_{k_p}), \qquad 0 \le k_r \le n_r.$$

If these points are not distinct, the interpolating polynomial is understood to be the Hermite one. The difficult but interesting issues of multivariate polynomial interpolation over other configurations of points and associated notions of divided differences have been addressed by several authors [1, 2, 14, 21].
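For distinct points on each axis, the tensor-product divided difference can be computed by applying the univariate recursion in one variable at a time, since it is the tensor product of univariate divided-difference functionals. The following Python sketch assumes distinct points in each coordinate and does not treat the Hermite case; `tensor_dd` and its argument layout (`grids[r - 1]` holding $x^r_0, \dots, x^r_{n_r}$) are hypothetical choices made for illustration.

```python
from fractions import Fraction


def dd_values(xs, vals):
    """Univariate divided difference from values vals[i] = h(xs[i])."""
    table = list(vals)
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def tensor_dd(grids, f):
    """[x^1_0..x^1_{n_1}; ...; x^p_0..x^p_{n_p}]f, where grids[r-1] lists x^r_k
    and f takes p positional arguments."""
    grids = [[Fraction(t) for t in axis] for axis in grids]
    first, rest = grids[0], grids[1:]
    if not rest:
        return dd_values(first, [Fraction(f(t)) for t in first])
    # Divided difference in x^1 of the (p-1)-variate divided differences in x^2..x^p
    inner = [tensor_dd(rest, lambda *ys, t=t: f(t, *ys)) for t in first]
    return dd_values(first, inner)


# The coefficient of (x^1)^2 (x^2)^1 in 3 x^2 y + x y + 7 is 3.
grids = [[0, 1, 3], [2, 5]]
print(tensor_dd(grids, lambda x, y: 3 * x**2 * y + x * y + 7))  # 3
```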

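In analogy with the univariate case, if $x^r_{k_r} \to x^r$ for all $r$, then

$$[x^1_0, x^1_1, \dots, x^1_{n_1}; \dots; x^p_0, x^p_1, \dots, x^p_{n_p}]f \to \frac{D^{n} f(x)}{n!},$$

where $x := (x^1, x^2, \dots, x^p)$, $n = (n_1, n_2, \dots, n_p)$, $n! := n_1!\,n_2! \cdots n_p!$, and

$$D^{n} f(x) := \left(\frac{\partial}{\partial x^1}\right)^{n_1} \left(\frac{\partial}{\partial x^2}\right)^{n_2} \cdots \left(\frac{\partial}{\partial x^p}\right)^{n_p} f(x).$$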

Using the vector notation $i = (i^1, \dots, i^p)$ and $j = (j^1, \dots, j^p)$, we can express the condition that $i^r \le j^r$ for all $r = 1, \dots, p$ as $i \le j$, in which case, in analogy with (1), we will sometimes make use of the shorthand

$$[x; i, j]f := [x^1_{i^1}, x^1_{i^1+1}, \dots, x^1_{j^1}; \dots; x^r_{i^r}, x^r_{i^r+1}, \dots, x^r_{j^r}; \dots; x^p_{i^p}, x^p_{i^p+1}, \dots, x^p_{j^p}]f.$$

As in [8] we will need the product rule. In the multivariate case this is

$$[x; 0, n](fg) = \sum_{0 \le i \le n} [x; 0, i]f\,[x; i, n]g, \tag{4}$$

which is a straightforward generalization of the univariate product rule found by Popoviciu [16, 17] and Steffensen [22]. The formula can be viewed as a sum over polygonal paths that connect the point 0 to the point $n$ in the grid of points $i$, $0 \le i \le n$. Each path has two line segments, one connecting 0 to $i$, the other connecting $i$ to $n$, either of which can degenerate to a point.
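The product rule (4) is easy to test on a small grid. The sketch below computes the tensor-product divided difference one variable at a time (assuming distinct points in each coordinate); `bracket` is a hypothetical helper implementing the shorthand $[x; i, j]f$, and the sum over $0 \le i \le n$ runs over all grid indices via `itertools.product`.

```python
from fractions import Fraction
from itertools import product


def dd_values(xs, vals):
    """Univariate divided difference from values vals[i] = h(xs[i])."""
    table = list(vals)
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def tensor_dd(grids, f):
    """Tensor-product divided difference, one variable at a time."""
    first, rest = grids[0], grids[1:]
    if not rest:
        return dd_values(first, [Fraction(f(t)) for t in first])
    return dd_values(first, [tensor_dd(rest, lambda *ys, t=t: f(t, *ys))
                             for t in first])


def bracket(grids, i, j, f):
    """[x; i, j]f: keep the points x^r_{i^r}, ..., x^r_{j^r} on each axis r."""
    return tensor_dd([axis[a:b + 1] for axis, a, b in zip(grids, i, j)], f)


def product_rule_rhs(grids, f, g):
    """Right-hand side of (4): sum over grid indices 0 <= i <= n."""
    n = tuple(len(axis) - 1 for axis in grids)
    zero = (0,) * len(n)
    return sum((bracket(grids, zero, i, f) * bracket(grids, i, n, g)
                for i in product(*(range(m + 1) for m in n))), Fraction(0))


grids = [[Fraction(t) for t in (0, 1, 3)], [Fraction(t) for t in (2, 5)]]
f = lambda x, y: x * y + 1
g = lambda x, y: 1 / (1 + x + y)
lhs = tensor_dd(grids, lambda x, y: f(x, y) * g(x, y))
print(lhs == product_rule_rhs(grids, f, g))  # True
```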

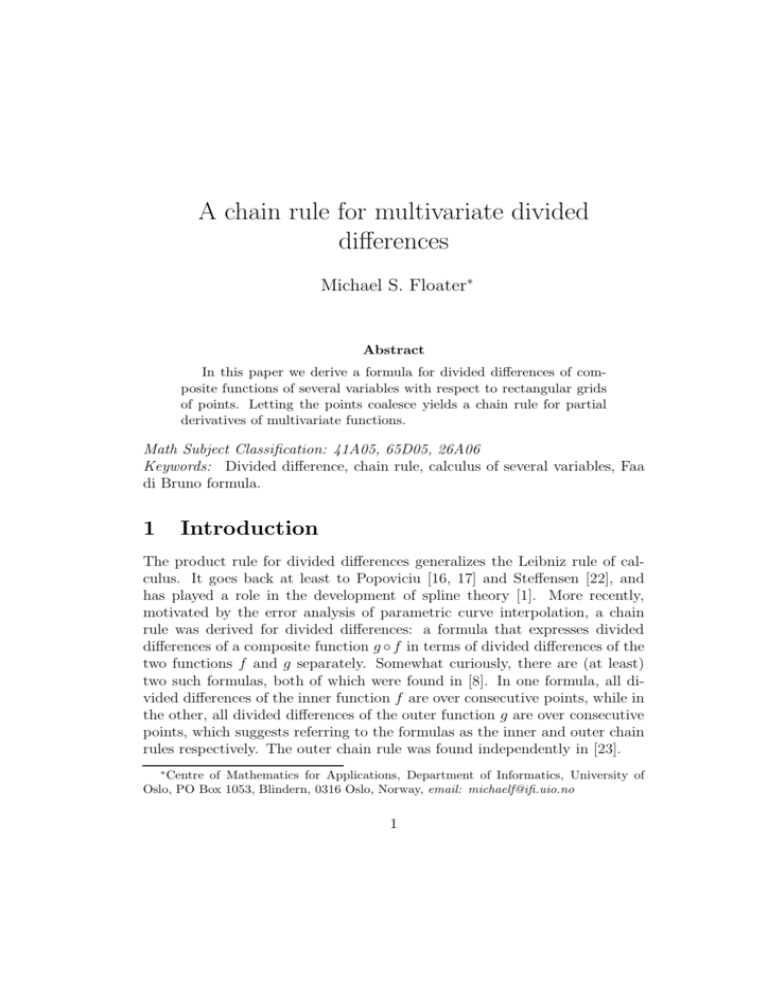

Figure 1(a) illustrates an example of a path in the bivariate case, $p = 2$. The two shaded boxes indicate the points involved in the two divided differences in the sum in (4): the bottom left box applies to $f$, while the top right box applies to $g$. The rule extends in a simple way, by induction on $r$, to a product of $r$ functions $f_1, f_2, \dots, f_r$:

$$[x; 0, n]\Big(\prod_{j=1}^{r} f_j\Big) = \sum_{0 = i_0 \le i_1 \le \cdots \le i_r = n} \prod_{j=1}^{r} [x; i_{j-1}, i_j] f_j. \tag{5}$$

Like (4), this formula is a sum over non-decreasing polygonal paths: polygonal paths that connect the point 0 to the point $n$ in such a way that the vertices form a non-decreasing sequence in each of the $p$ coordinates. The paths now have $r$ line segments (possibly degenerate). Figure 1(b) illustrates the bivariate case when there are four functions, $r = 4$.
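Formula (5) can be checked in the same way by enumerating the non-decreasing chains $0 = i_0 \le i_1 \le \cdots \le i_r = n$. In this sketch, `nondecreasing_chains` is a hypothetical generator that picks each next vertex componentwise between the current vertex and $n$; distinct points on each axis are assumed, and the three test functions are arbitrary.

```python
from fractions import Fraction
from itertools import product


def dd_values(xs, vals):
    """Univariate divided difference from values vals[i] = h(xs[i])."""
    table = list(vals)
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def tensor_dd(grids, f):
    """Tensor-product divided difference, one variable at a time."""
    first, rest = grids[0], grids[1:]
    if not rest:
        return dd_values(first, [Fraction(f(t)) for t in first])
    return dd_values(first, [tensor_dd(rest, lambda *ys, t=t: f(t, *ys))
                             for t in first])


def bracket(grids, i, j, f):
    """[x; i, j]f on the sub-grid with indices between i and j."""
    return tensor_dd([axis[a:b + 1] for axis, a, b in zip(grids, i, j)], f)


def nondecreasing_chains(start, end, r):
    """All (i_0, ..., i_r) with start = i_0 <= i_1 <= ... <= i_r = end."""
    if r == 0:
        if start == end:
            yield (start,)
        return
    for nxt in product(*(range(a, b + 1) for a, b in zip(start, end))):
        for rest in nondecreasing_chains(nxt, end, r - 1):
            yield (start, *rest)


def product_rule_rhs(grids, fs):
    """Right-hand side of (5) for the functions fs = [f_1, ..., f_r]."""
    n = tuple(len(axis) - 1 for axis in grids)
    total = Fraction(0)
    for chain in nondecreasing_chains((0,) * len(n), n, len(fs)):
        term = Fraction(1)
        for f, a, b in zip(fs, chain, chain[1:]):
            term *= bracket(grids, a, b, f)   # [x; i_{j-1}, i_j]f_j
        total += term
    return total


grids = [[Fraction(t) for t in (0, 1, 3)], [Fraction(t) for t in (2, 5)]]
fs = [lambda x, y: x + y, lambda x, y: x * y, lambda x, y: 1 / (2 + x)]
lhs = tensor_dd(grids, lambda x, y: fs[0](x, y) * fs[1](x, y) * fs[2](x, y))
print(lhs == product_rule_rhs(grids, fs))  # True
```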

We now derive a chain rule for divided differences when the inner function is multivariate. With the vector notation, the formula looks almost like the univariate one.

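Theorem 1 If $f : \mathbb{R}^p \to \mathbb{R}$ and $g : \mathbb{R} \to \mathbb{R}$ are smooth enough, and $f_i := f(x_i)$, where $x_i := (x^1_{i^1}, \dots, x^p_{i^p})$ denotes the grid point with index $i$, then

$$[x; 0, n](g \circ f) = \sum_{k=0}^{|n|} \sum_{0 = i_0 < \cdots < i_k = n} [f_{i_0}, \dots, f_{i_k}]g \prod_{j=1}^{k} [x; i_{j-1}, i_j]f. \tag{6}$$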

Figure 1: The bivariate product rule: (a) two functions, (b) four functions.

Here, $i_1 < i_2$ means $i_1 \le i_2$ and $i_1 \ne i_2$, and $|n| := n_1 + n_2 + \cdots + n_p$. Note that we allow $n = 0$ in this formula, which is why we include the case $k = 0$ in the first sum, unlike in (2). If $n = 0$, we interpret the condition

$$0 = i_0 < i_1 < \cdots < i_k = n \tag{7}$$

as having the unique solution $k = 0$, $i_0 = 0$; with the convention that an empty product has the value 1, formula (6) then reduces to the correct equation $(g \circ f)(x_0) = g(f(x_0))$. If, on the other hand, $|n| \ge 1$, all solutions to (7) require $k \ge 1$, so for $|n| \ge 1$ we could replace the first sum in (6) by $\sum_{k=1}^{|n|}$. However, we will need to allow the case $n = 0$ when we use (6) to prove Theorem 2.

Figure 2: Chain rule with f bivariate and n = (1, 1).

Proof. It is sufficient to prove (6) when $g$ is a polynomial, because if we replace $g$ by a polynomial that interpolates it (in the Hermite sense where required) at the values $f_i$, $0 \le i \le n$, neither side of (6) changes. Therefore, by the linearity of (6) in $g$, it is also sufficient to prove it for any monomial $g(y) = y^r$, $r \ge 0$. In this case, by the divided difference rule (5) for the product of $r$ functions,

$$[x; 0, n](g \circ f) = [x; 0, n](f^r) = \sum_{0 = \alpha_0 \le \cdots \le \alpha_r = n} \prod_{q=1}^{r} [x; \alpha_{q-1}, \alpha_q]f. \tag{8}$$

Now, by counting the multiplicities of the points $\alpha_j$, we have

$$(\alpha_0, \dots, \alpha_r) = (\underbrace{i_0, \dots, i_0}_{1+\mu_0}, \dots, \underbrace{i_k, \dots, i_k}_{1+\mu_k})$$

for some $k$ with $0 \le k \le r$, some $0 = i_0 < i_1 < \cdots < i_k = n$, and some $\mu_0, \dots, \mu_k \ge 0$ with $\mu_0 + \cdots + \mu_k = r - k$. The product on the right of (8) is then

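$$\prod_{q=1}^{r} [x; \alpha_{q-1}, \alpha_q]f = f(x_{i_0})^{\mu_0} f(x_{i_1})^{\mu_1} \cdots f(x_{i_k})^{\mu_k} \prod_{j=1}^{k} [x; i_{j-1}, i_j]f,$$

since each of the $\mu_j$ repeated pairs $(i_j, i_j)$ contributes a factor $[x; i_j, i_j]f = f(x_{i_j}) = f_{i_j}$,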

and we obtain

$$[x; 0, n](g \circ f) = \sum_{k=0}^{r} \sum_{0 = i_0 < \cdots < i_k = n} \sum_{\mu_0 + \cdots + \mu_k = r-k} f_{i_0}^{\mu_0} \cdots f_{i_k}^{\mu_k} \prod_{j=1}^{k} [x; i_{j-1}, i_j]f. \tag{9}$$

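Now, observe that

$$\sum_{\mu_0 + \cdots + \mu_k = r-k} f_{i_0}^{\mu_0} \cdots f_{i_k}^{\mu_k} = [f_{i_0}, \dots, f_{i_k}]g,$$

the divided difference of the monomial $g(y) = y^r$ at the points $f_{i_0}, \dots, f_{i_k}$. We substitute this identity into (9). Finally, since $[f_{i_0}, \dots, f_{i_k}]g = 0$ if $k > r$, and since every solution of (7) has $k \le |n|$ (each strict step $i_{j-1} < i_j$ increases the coordinate sum by at least one), we can replace the upper limit $r$ in the first sum in (9) by $|n|$. □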
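The identity used in the last step says that the divided difference of $y^r$ at $k + 1$ points is the sum of all monomials of degree $r - k$ in those points (a complete homogeneous symmetric polynomial), and vanishes when $k > r$. A quick exact check, assuming distinct points; `complete_homogeneous` is a hypothetical helper name:

```python
from fractions import Fraction
from itertools import product
from math import prod


def dd(xs, h):
    """[x_0, ..., x_n]h for distinct points, by the standard recursion."""
    xs = [Fraction(x) for x in xs]
    table = [Fraction(h(x)) for x in xs]
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def complete_homogeneous(points, s):
    """Sum of y_0^mu_0 ... y_k^mu_k over all mu_j >= 0 with mu_0 + ... + mu_k = s
    (an empty sum, i.e. 0, when s < 0)."""
    total = Fraction(0)
    for mus in product(range(s + 1), repeat=len(points)):
        if sum(mus) == s:
            total += prod(Fraction(y) ** mu for y, mu in zip(points, mus))
    return total


pts = [1, 2, 4, 7]          # plays the role of f_{i_0}, ..., f_{i_k}, so k = 3
for r in range(7):
    assert dd(pts, lambda y: y**r) == complete_homogeneous(pts, r - (len(pts) - 1))
print(complete_homogeneous([1, 2], 2))  # 7
```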

Similar to the product rule (5), formula (6) can be thought of as a sum over non-decreasing polygonal paths that connect 0 to $n$ in the grid of points $i$, $0 \le i \le n$. The number of line segments in the paths now varies and is given by $k$. As long as $n \ne 0$, the shortest path has one line segment ($k = 1$), and the longest paths have $k = |n|$ line segments. Unlike in the product rule, none of the line segments are degenerate, due to the strict inequality $i_{j-1} < i_j$. Figure 1(b) can also be used to illustrate the structure of the chain rule: it shows a path in (6) in the case $k = 4$. The shaded boxes indicate the divided differences of $f$ in the sum in (6).
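Theorem 1 can also be checked numerically. The following sketch, which is not taken from the paper, evaluates the right-hand side of (6) on a bivariate grid by recursively generating all strictly increasing chains $0 = i_0 < \cdots < i_k = n$ (componentwise $\le$, consecutive vertices distinct). It assumes distinct points on each axis and that $f$ takes distinct values at the grid points, so that the plain recursion suffices; all helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def dd_values(xs, vals):
    """Univariate divided difference from values vals[i] = h(xs[i])."""
    table = list(vals)
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def tensor_dd(grids, f):
    """Tensor-product divided difference, one variable at a time."""
    first, rest = grids[0], grids[1:]
    if not rest:
        return dd_values(first, [Fraction(f(t)) for t in first])
    return dd_values(first, [tensor_dd(rest, lambda *ys, t=t: f(t, *ys))
                             for t in first])


def bracket(grids, i, j, f):
    """[x; i, j]f on the sub-grid with indices between i and j."""
    return tensor_dd([axis[a:b + 1] for axis, a, b in zip(grids, i, j)], f)


def increasing_chains(start, end):
    """All chains start = i_0 < i_1 < ... < i_k = end in the componentwise order."""
    if start == end:
        yield (start,)
        return
    for nxt in product(*(range(a, b + 1) for a, b in zip(start, end))):
        if nxt != start:
            for rest in increasing_chains(nxt, end):
                yield (start, *rest)


def theorem1_rhs(grids, f, g):
    """Right-hand side of (6): sum over chains 0 = i_0 < ... < i_k = n."""
    n = tuple(len(axis) - 1 for axis in grids)

    def f_at(i):  # f_i = f(x_i), with x_i = (x^1_{i^1}, ..., x^p_{i^p})
        return Fraction(f(*(axis[c] for axis, c in zip(grids, i))))

    total = Fraction(0)
    for chain in increasing_chains((0,) * len(n), n):
        fvals = [f_at(i) for i in chain]
        term = dd_values(fvals, [Fraction(g(y)) for y in fvals])  # [f_{i_0}, ..., f_{i_k}]g
        for a, b in zip(chain, chain[1:]):
            term *= bracket(grids, a, b, f)                         # [x; i_{j-1}, i_j]f
        total += term
    return total


grids = [[Fraction(t) for t in (0, 1, 3)], [Fraction(t) for t in (2, 5)]]
f = lambda x, y: x * x + 3 * y + 2 * x * y   # distinct values on this grid
g = lambda y: 1 / (1 + y)
lhs = tensor_dd(grids, lambda x, y: g(f(x, y)))
print(lhs == theorem1_rhs(grids, f, g))  # True
```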

Figure 3: Chain rule with f bivariate and n = (2, 1).

We write out a couple of examples of (6). In the bivariate case $p = 2$ with $n = (1, 1)$, the formula has three terms (writing, e.g., 10 for the index $(1, 0)$). There is one term with $k = 1$, corresponding to the path $(i_0, i_1) = (00, 11)$ (the first row of Figure 2), and two terms with $k = 2$, corresponding to the paths $(i_0, i_1, i_2) = (00, 10, 11)$ and $(i_0, i_1, i_2) = (00, 01, 11)$ (the second row of Figure 2):

$$\begin{aligned}
[x; 00, 11](g \circ f) &= [f_{00}, f_{11}]g\,[x; 00, 11]f \\
&\quad + [f_{00}, f_{10}, f_{11}]g\,[x; 00, 10]f\,[x; 10, 11]f \\
&\quad + [f_{00}, f_{01}, f_{11}]g\,[x; 00, 01]f\,[x; 01, 11]f.
\end{aligned} \tag{10}$$

In the case $n = (2, 1)$, the formula is a sum over eight terms, one for each of the paths shown in Figure 3. The three rows in the figure show, respectively, the unique path with $k = 1$ segment, the four paths with $k = 2$ segments, and the three paths with $k = 3$ segments, and the formula is

$$\begin{aligned}
[x; 00, 21](g \circ f) &= [f_{00}, f_{21}]g\,[x; 00, 21]f \\
&\quad + [f_{00}, f_{10}, f_{21}]g\,[x; 00, 10]f\,[x; 10, 21]f \\
&\quad + [f_{00}, f_{20}, f_{21}]g\,[x; 00, 20]f\,[x; 20, 21]f \\
&\quad + [f_{00}, f_{01}, f_{21}]g\,[x; 00, 01]f\,[x; 01, 21]f \\
&\quad + [f_{00}, f_{11}, f_{21}]g\,[x; 00, 11]f\,[x; 11, 21]f \\
&\quad + [f_{00}, f_{10}, f_{20}, f_{21}]g\,[x; 00, 10]f\,[x; 10, 20]f\,[x; 20, 21]f \\
&\quad + [f_{00}, f_{10}, f_{11}, f_{21}]g\,[x; 00, 10]f\,[x; 10, 11]f\,[x; 11, 21]f \\
&\quad + [f_{00}, f_{01}, f_{11}, f_{21}]g\,[x; 00, 01]f\,[x; 01, 11]f\,[x; 11, 21]f.
\end{aligned} \tag{11}$$
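The path counts quoted above (three paths for $n = (1, 1)$; one, four and three paths with $k = 1, 2, 3$ for $n = (2, 1)$) can be confirmed by brute-force enumeration. A small sketch, with hypothetical function names:

```python
from collections import Counter
from itertools import product


def increasing_chains(start, end):
    """All chains start = i_0 < ... < i_k = end (componentwise <=, no repeats)."""
    if start == end:
        yield (start,)
        return
    for nxt in product(*(range(a, b + 1) for a, b in zip(start, end))):
        if nxt != start:
            for rest in increasing_chains(nxt, end):
                yield (start, *rest)


def paths_by_length(n):
    """Map k (number of segments) to the number of chains from 0 to n."""
    return Counter(len(c) - 1 for c in increasing_chains((0,) * len(n), n))


print(sorted(paths_by_length((1, 1)).items()))  # [(1, 1), (2, 2)]
print(sorted(paths_by_length((2, 1)).items()))  # [(1, 1), (2, 4), (3, 3)]
```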

4 Chain rule: both functions multivariate

Now we treat the full multivariate case, building on Theorem 1. We consider a composition $g \circ \mathbf{f}$ in which $\mathbf{f}$ is a multivariate, vector-valued function $\mathbf{f} : \mathbb{R}^p \to \mathbb{R}^q$ and $g$ is a multivariate scalar-valued function $g : \mathbb{R}^q \to \mathbb{R}$.

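Theorem 2 If $\mathbf{f} : \mathbb{R}^p \to \mathbb{R}^q$ and $g : \mathbb{R}^q \to \mathbb{R}$ are smooth enough and $\mathbf{f} = (f_1, \dots, f_q)$, then, defining $f_{r,i} := f_r(x_i)$,

$$\begin{aligned}
[x; 0, n](g \circ \mathbf{f}) = \sum_{k=0}^{|n|} \sum_{\substack{0 = i_0 < \cdots < i_k = n \\ 0 = j_0 \le \cdots \le j_q = k}} & [f_{1,i_{j_0}}, \dots, f_{1,i_{j_1}}; \dots; f_{q,i_{j_{q-1}}}, \dots, f_{q,i_{j_q}}]g \\
& \times \prod_{r=1}^{q} \prod_{j=j_{r-1}+1}^{j_r} [x; i_{j-1}, i_j] f_r.
\end{aligned} \tag{12}$$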

Proof. Similar to the proof of Theorem 1, it is sufficient to prove the formula when $g$ is a monomial,

$$g(y_1, \dots, y_q) = y_1^{r_1} \cdots y_q^{r_q},$$

where $r_1, \dots, r_q \ge 0$ are integers. Therefore, it is also sufficient to prove the formula in the more general case that $g$ is the product of $q$ univariate functions $g_i : \mathbb{R} \to \mathbb{R}$, $i = 1, \dots, q$,

$$g(y_1, \dots, y_q) = g_1(y_1) \cdots g_q(y_q). \tag{13}$$

To show this, observe that with $g$ in this form, the composition $g \circ \mathbf{f}$ is given by

$$g(\mathbf{f}(x)) = g_1(f_1(x)) \cdots g_q(f_q(x)). \tag{14}$$

Then, due to the product rule (5), we have

$$[x; 0, n](g \circ \mathbf{f}) = \sum_{0 = \alpha_0 \le \alpha_1 \le \cdots \le \alpha_q = n} \prod_{r=1}^{q} [x; \alpha_{r-1}, \alpha_r](g_r \circ f_r).$$

Next, since $g_r$ is univariate, we can expand the divided differences on the right using the chain rule of Theorem 1. Note that these divided differences may have order 0, due to the possibility that $\alpha_{r-1} = \alpha_r$, and this is why we allowed $n = 0$ (and consequently $k = 0$) in formula (6). With the shorthand

$$A_r(i_0, \dots, i_k) := [f_{r,i_0}, \dots, f_{r,i_k}]g_r \prod_{j=1}^{k} [x; i_{j-1}, i_j] f_r, \tag{15}$$

for grid indices $i_0, \dots, i_k$ and $r = 1, \dots, q$, we find

$$[x; 0, n](g \circ \mathbf{f}) = \sum_{0 = \alpha_0 \le \alpha_1 \le \cdots \le \alpha_q = n} \prod_{r=1}^{q} \sum_{k_r=0}^{|\alpha_r - \alpha_{r-1}|} \sum_{\alpha_{r-1} = i_{r,0} < \cdots < i_{r,k_r} = \alpha_r} A_r(i_{r,0}, \dots, i_{r,k_r}).$$

Next, observe that since the sequence $i_{r,0} < \cdots < i_{r,k_r}$ in this expression connects $\alpha_{r-1}$ to $\alpha_r$, when we put all $q$ of these sequences together we obtain a single increasing sequence that connects 0 to $n$. This means that we can rearrange the formula to be a single sum over paths connecting 0 to $n$, and over all possible choices of $q$ sub-paths, with each sub-path contributing to one of the functions $g_r$:

$$[x; 0, n](g \circ \mathbf{f}) = \sum_{k=0}^{|n|} \sum_{\substack{0 = i_0 < \cdots < i_k = n \\ 0 = j_0 \le \cdots \le j_q = k}} \prod_{r=1}^{q} A_r(i_{j_{r-1}}, \dots, i_{j_r}).$$

To complete the proof, we expand the term $A_r$ using (15), and hence we obtain equation (12) for $g$ of the form (13), because with this choice of $g$,

$$\prod_{r=1}^{q} [f_{r,i_{j_{r-1}}}, \dots, f_{r,i_{j_r}}]g_r = [f_{1,i_{j_0}}, \dots, f_{1,i_{j_1}}; \dots; f_{q,i_{j_{q-1}}}, \dots, f_{q,i_{j_q}}]g.$$

□
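As a sanity check of Theorem 2, the sketch below evaluates the right-hand side of (12) in the simplest nontrivial setting $p = 1$, $q = 2$, where $\mathbf{f} = (a, b)$ and the split $0 = j_0 \le j_1 \le j_2 = k$ is determined by $j_1$. It is a hypothetical illustration, assuming distinct points $x_i$ and distinct values of $a$ and of $b$ at those points; the bivariate divided difference of $g$ is computed axis by axis.

```python
from fractions import Fraction
from itertools import combinations


def dd_values(xs, vals):
    """Univariate divided difference from values vals[i] = h(xs[i])."""
    table = list(vals)
    for m in range(1, len(xs)):
        table = [(table[i + 1] - table[i]) / (xs[i + m] - xs[i])
                 for i in range(len(table) - 1)]
    return table[0]


def dd(xs, h):
    return dd_values(xs, [Fraction(h(t)) for t in xs])


def dd2(ys1, ys2, g):
    """[ys1; ys2]g: tensor-product divided difference of g(y1, y2)."""
    return dd_values(ys1, [dd(ys2, lambda y2, y1=y1: g(y1, y2)) for y1 in ys1])


def theorem2_rhs(x, a, b, g):
    """Right-hand side of (12) for p = 1, q = 2 and n = len(x) - 1 >= 1."""
    n = len(x) - 1
    av, bv = [a(t) for t in x], [b(t) for t in x]
    total = Fraction(0)
    for k in range(1, n + 1):
        for interior in combinations(range(1, n), k - 1):
            idx = (0, *interior, n)                      # i_0 < ... < i_k
            for j1 in range(k + 1):                      # 0 = j_0 <= j_1 <= j_2 = k
                term = dd2([av[i] for i in idx[:j1 + 1]],
                           [bv[i] for i in idx[j1:]], g)
                for j in range(1, k + 1):                # a for j <= j_1, b after
                    h = a if j <= j1 else b
                    term *= dd(x[idx[j - 1]:idx[j] + 1], h)
                total += term
    return total


x = [Fraction(t) for t in (0, 1, 2, 4)]
a = lambda t: t * t + 1          # a_i = 1, 2, 5, 17
b = lambda t: t**3 + 2 * t       # b_i = 0, 3, 12, 72
g = lambda y1, y2: y1 * y1 * y2 + 1 / (2 + y1 + y2)
lhs = dd(x, lambda t: g(a(t), b(t)))
print(lhs == theorem2_rhs(x, a, b, g))  # True
```

With only the first two points, the inner loops produce exactly the two terms of (16).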

We look at four examples. First, if $p = 1$ and $q = 2$, set $a = f_1$ and $b = f_2$, and write $a_i := a(x_i)$, $b_i := b(x_i)$. The composition has the form $g(\mathbf{f}(x)) = g(a(x), b(x))$, and since $p = 1$ the vector $n$ is just an integer $n$. The formula with $n = 1$ is

$$[x; 0, 1](g \circ \mathbf{f}) = [a_0, a_1; b_1]g\,[x; 0, 1]a + [a_0; b_0, b_1]g\,[x; 0, 1]b, \tag{16}$$

and with $n = 2$,

$$\begin{aligned}
[x; 0, 2](g \circ \mathbf{f}) &= [a_0, a_2; b_2]g\,[x; 0, 2]a \\
&\quad + [a_0; b_0, b_2]g\,[x; 0, 2]b \\
&\quad + [a_0, a_1, a_2; b_2]g\,[x; 0, 1]a\,[x; 1, 2]a \\
&\quad + [a_0, a_1; b_1, b_2]g\,[x; 0, 1]a\,[x; 1, 2]b \\
&\quad + [a_0; b_0, b_1, b_2]g\,[x; 0, 1]b\,[x; 1, 2]b.
\end{aligned} \tag{17}$$

If $p = 2$ and $q = 2$, with $a = f_1$ and $b = f_2$, the composition has the form $g(\mathbf{f}(x)) = g(a(x), b(x))$, with $x \in \mathbb{R}^2$. Specializing to $n = (1, 1)$, we then obtain a sum over the three paths of Figure 2. But each path is now split into $q = 2$ parts, and there are $k + 1$ ways of splitting a path with $k$ segments. Thus the total number of terms is $2 \times 1 + 3 \times 2 = 8$:

$$\begin{aligned}
[x; 00, 11](g \circ \mathbf{f}) &= [a_{00}, a_{11}; b_{11}]g\,[x; 00, 11]a \\
&\quad + [a_{00}; b_{00}, b_{11}]g\,[x; 00, 11]b \\
&\quad + [a_{00}, a_{10}, a_{11}; b_{11}]g\,[x; 00, 10]a\,[x; 10, 11]a \\
&\quad + [a_{00}, a_{10}; b_{10}, b_{11}]g\,[x; 00, 10]a\,[x; 10, 11]b \\
&\quad + [a_{00}; b_{00}, b_{10}, b_{11}]g\,[x; 00, 10]b\,[x; 10, 11]b \\
&\quad + [a_{00}, a_{01}, a_{11}; b_{11}]g\,[x; 00, 01]a\,[x; 01, 11]a \\
&\quad + [a_{00}, a_{01}; b_{01}, b_{11}]g\,[x; 00, 01]a\,[x; 01, 11]b \\
&\quad + [a_{00}; b_{00}, b_{01}, b_{11}]g\,[x; 00, 01]b\,[x; 01, 11]b.
\end{aligned} \tag{18}$$

If instead $n = (2, 1)$, the formula is a sum over the eight paths of Figure 3. We do not write it out, but the number of terms is $2 \times 1 + 3 \times 4 + 4 \times 3 = 26$.
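These term counts follow from multiplying, for each path with $k$ segments, the number $\binom{k+q-1}{q-1}$ of choices of $0 = j_0 \le j_1 \le \cdots \le j_q = k$ (for $q = 2$ this is $k + 1$). A brute-force sketch that enumerates the paths directly; the function names are hypothetical:

```python
from itertools import product
from math import comb


def increasing_chains(start, end):
    """All chains start = i_0 < ... < i_k = end (componentwise <=, no repeats)."""
    if start == end:
        yield (start,)
        return
    for nxt in product(*(range(a, b + 1) for a, b in zip(start, end))):
        if nxt != start:
            for rest in increasing_chains(nxt, end):
                yield (start, *rest)


def number_of_terms(n, q):
    """Number of terms in (12): a path with k segments can be split into q
    consecutive (possibly empty) sub-paths in comb(k + q - 1, q - 1) ways."""
    zero = (0,) * len(n)
    return sum(comb(len(c) - 1 + q - 1, q - 1) for c in increasing_chains(zero, n))


print(number_of_terms((1,), 2), number_of_terms((2,), 2))      # 2 5
print(number_of_terms((1, 1), 2), number_of_terms((2, 1), 2))  # 8 26
```

For $q = 1$ each path contributes a single term, which recovers the counts for (6).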

5 Chain rule for derivatives

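Letting the points $x^r_k$ in equation (12) converge to $x^r$ immediately gives a multivariate chain rule for derivatives. The points $i_j$ in the second sum in (12) are no longer specifically needed in the derivative version; the order of the partial derivative of $f_r$ arising from the factor $[x; i_{j-1}, i_j]f_r$ depends only on the vector difference $\alpha_j := i_j - i_{j-1}$. Thus, summing over the $\alpha_j$ instead of the $i_j$, the derivative formula is

$$\frac{D^{n}(g \circ \mathbf{f})(x)}{n!} = \sum_{k=0}^{|n|} \sum_{\substack{\alpha_1 + \cdots + \alpha_k = n \\ 0 = j_0 \le \cdots \le j_q = k}} \frac{D^{\mathbf{j}} g(\mathbf{f}(x))}{\mathbf{j}!} \prod_{r=1}^{q} \prod_{j=j_{r-1}+1}^{j_r} \frac{D^{\alpha_j} f_r(x)}{\alpha_j!}, \tag{19}$$

where the sum over $\alpha_1, \dots, \alpha_k$ is over vectors $\alpha_j > 0$ (that is, $\alpha_j \ge 0$ and $\alpha_j \ne 0$), and $\mathbf{j} := (j_1 - j_0, \dots, j_q - j_{q-1})$.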

This equation contains, in general, repeated terms, unlike Faà di Bruno's formula and the multivariate formula in [4]. It could be reformulated to avoid repeats by summing over partitions of vector integers, using the same approach as that of the conversion of equation (25) of [8] to equation (26) of [8]. Instead, we simply give some examples, taking, for comparison, the same cases that we looked at earlier for divided differences. Specializing (19) to the cases treated in (3), (10–11), (16–17), and (18), one obtains, after cancelling factorials and drawing together repeated terms, their respective derivative counterparts:

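$$\begin{aligned}
(g \circ f)''' &= g' f''' + 3 g'' f' f'' + g''' (f')^3, \\
D^{11}(g \circ f) &= g' D^{11} f + g'' D^{10} f\, D^{01} f, \\
D^{21}(g \circ f) &= g' D^{21} f + g'' (D^{20} f\, D^{01} f + 2 D^{10} f\, D^{11} f) + g''' (D^{10} f)^2 D^{01} f, \\
(g \circ \mathbf{f})' &= D^{10} g\, a' + D^{01} g\, b', \\
(g \circ \mathbf{f})'' &= D^{10} g\, a'' + D^{01} g\, b'' + D^{20} g\, (a')^2 + 2 D^{11} g\, a' b' + D^{02} g\, (b')^2,
\end{aligned}$$

and

$$\begin{aligned}
D^{11}(g \circ \mathbf{f}) &= D^{10} g\, D^{11} a + D^{01} g\, D^{11} b \\
&\quad + D^{20} g\, D^{10} a\, D^{01} a + D^{11} g\, D^{10} a\, D^{01} b \\
&\quad + D^{11} g\, D^{01} a\, D^{10} b + D^{02} g\, D^{01} b\, D^{10} b.
\end{aligned}$$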
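The first of these identities can be spot-checked with exact polynomial arithmetic. In the sketch below, polynomials are coefficient lists (lowest degree first), `compose` uses Horner's scheme, and the particular $f$, $g$ and evaluation point are arbitrary choices made for illustration.

```python
from fractions import Fraction


def deriv(c):
    """Derivative of the polynomial sum_i c[i] x^i."""
    return [i * c[i] for i in range(1, len(c))] or [0]


def d(c, k):
    """k-th derivative."""
    for _ in range(k):
        c = deriv(c)
    return c


def evaluate(c, t):
    result = 0
    for coef in reversed(c):
        result = result * t + coef
    return result


def mul(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out


def add(p, q):
    m = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(m)]


def compose(g, f):
    """Coefficients of g(f(x)) by Horner's scheme: (...(g_m f + g_{m-1}) f + ...) + g_0."""
    out = [0]
    for coef in reversed(g):
        out = add(mul(out, f), [coef])
    return out


f = [1, -2, 0, 3]        # f(x) = 1 - 2x + 3x^3
g = [0, 5, 1, 0, 2]      # g(y) = 5y + y^2 + 2y^4
t = Fraction(1, 2)
y = evaluate(f, t)
lhs = evaluate(d(compose(g, f), 3), t)
rhs = (evaluate(d(g, 1), y) * evaluate(d(f, 3), t)
       + 3 * evaluate(d(g, 2), y) * evaluate(d(f, 1), t) * evaluate(d(f, 2), t)
       + evaluate(d(g, 3), y) * evaluate(d(f, 1), t) ** 3)
print(lhs == rhs)  # True
```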

References

[1] C. de Boor, A Leibniz formula for multivariate divided differences, SIAM J. Numer. Anal. 41 (2003), 856–868.

[2] C. de Boor, A multivariate divided difference, in Approximation Theory VIII, Vol. 1: Approximation and Interpolation, C. K. Chui and L. L. Schumaker (eds.), World Scientific, Singapore, 1995, pp. 87–96.

[3] C. de Boor, Divided differences, Surveys in Approximation Theory 1 (2005), 46–69.

[4] G. M. Constantine and T. H. Savits, A multivariate Faà di Bruno formula with applications, Trans. Amer. Math. Soc. 348 (1996), 503–520.

[5] C. F. Faà di Bruno, Note sur une nouvelle formule de calcul différentiel, Quarterly J. Pure Appl. Math. 1 (1857), 359–360.

[6] M. S. Floater, Arc length estimation and the convergence of parametric polynomial interpolation, BIT 45 (2005), 679–694.

[7] M. S. Floater, Chordal cubic spline interpolation is fourth order accurate, IMA J. Numer. Anal. 26 (2006), 25–33.

[8] M. S. Floater and T. Lyche, Two chain rules for divided differences and Faà di Bruno's formula, Math. Comp. 76 (2007), 867–877.

[9] M. S. Floater and T. Lyche, Divided differences of inverse functions and partitions of a convex polygon, Math. Comp. 77 (2008), 2295–2308.

[10] E. Isaacson and H. B. Keller, Analysis of numerical methods, Wiley, 1966.

[11] W. P. Johnson, The curious history of Faà di Bruno's formula, Amer. Math. Monthly 109 (2002), 217–234.

[12] C. Jordan, Calculus of finite differences, Chelsea, New York, 1947.

[13] D. Knuth, The art of computer programming, Vol. I, Addison-Wesley, 1975.

[14] C. A. Micchelli, A constructive approach to Kergin interpolation in R^k: multivariate B-splines and Lagrange interpolation, Rocky Mountain J. Math. 10 (1979), 485–497.

[15] K. Mørken and K. Scherer, A general framework for high-accuracy parametric interpolation, Math. Comp. 66 (1997), 237–260.

[16] T. Popoviciu, Sur quelques propriétés des fonctions d'une ou de deux variables réelles, dissertation, presented at the Faculté des Sciences de Paris, published by Institutul de Arte Grafice "Ardealul" (Cluj, Romania), 1933.

[17] T. Popoviciu, Introduction à la théorie des différences divisées, Bull. Math. Soc. Roumaine Sciences 42 (1940), 65–78.

[18] J. Riordan, Derivatives of composite functions, Bull. Amer. Math. Soc. 52 (1946), 664–667.

[19] J. Riordan, An introduction to combinatorial analysis, John Wiley, New York, 1958.

[20] S. Roman, The formula of Faà di Bruno, Amer. Math. Monthly 87 (1980), 805–809.

[21] T. Sauer, Polynomial interpolation in several variables: lattices, differences, and ideals, in Topics in multivariate approximation and interpolation, K. Jetter et al. (eds.), Elsevier, 2006, pp. 191–230.

[22] J. F. Steffensen, Note on divided differences, Danske Vid. Selsk. Math.-Fys. Medd. 17 (1939), 1–12.

[23] X. Wang and H. Wang, On the divided difference form of Faà di Bruno's formula, J. Comp. Math. 24 (2006), 553–560.