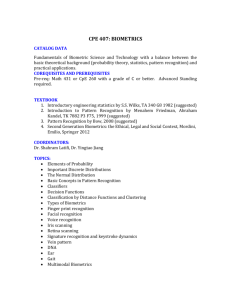

Environmental Scanning Report

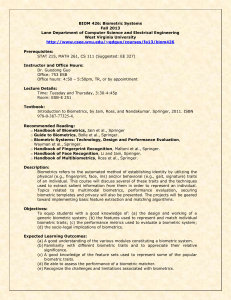

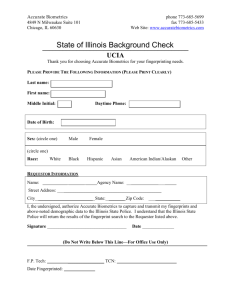
