Snapshots from Hell: Practical Issues in Data Warehousing

Tyson Lee, LEAP Consulting, Palo Alto, CA

Introduction

Data warehouse projects have a reputation for being complex, costly, and almost certain to fail. According to a recent report from Information Week, the rate of data warehouse project failures, while down from analysts’ estimates of 60% to 90% in the 1990s, still hovers around 40%. Lack of planning and poor design have also produced inflexible, "stovepipe" projects that quickly became legacy baggage in the face of an ever-changing business environment. Instead of reaping the benefits of data warehousing, many practitioners fear the nightmare of delayed timelines and overrun budgets. Some even describe their data warehousing experience as a living hell. In this paper, the author draws on practical experience to illustrate industry best practices in data warehouse design, construction, and ongoing maintenance. Topics include:

• Fundamental Concepts of Data Warehouse
• Zen of Data Warehousing
• Myths and Facts of Data Warehousing
• Validation Issues
• Examples Using SAS®

Database vs. Data Warehouse

On July 21, 2003, a Google keyword search for ‘database’ and ‘data warehouse’ yielded 48.8 million and 612 thousand hits respectively, while the most widely searched keyword, ‘sex’, yielded 184 million hits. By this measure, ‘database’ has become a fairly common term, while ‘data warehouse’ remains much less familiar to the general public.

By definition, both database and data warehouse are a

collection of data. They differ in their purposes and

structures.

satisfy certain rules (normalization rules for instance) to

guarantee data integrity and update efficiency. A database

is transaction oriented and dynamic. As a result, a

database is not friendly to end-users for queries and

decision support analyses.

A data warehouse, on the other hand, is “a subjectoriented, integrated, time-variant, nonvolatile collection of

data in support of management’s decision-making

process,” (Building the Data Warehouse, Bill Inmon). In

a repository data warehouse, data structure is defined as

such that it ensures full integration of data and application

in a new environment. Data are pre-processed and

relatively stable; tables are merged and de-normalized to

facilitate applications known as on-line analytical

processing (OLAP). Unlike in a RDB, data redundancy is

highly encouraged in a data warehouse environment.

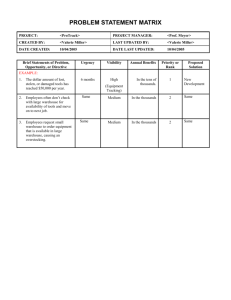

Objective

Data Structure

Database

DW

Transactional

Analytical

Normalized

Denormalized

Organization

Separated

Integrated

Update Frequency

Dynamic

Static

Data Acquisition

Decision Support

DBA

DWA

Interface System

Administrator

Over the years, data warehouses have been implemented

in a number of industries including market research,

financial risk management, investment modeling and

business intelligence.

In the pharmaceutical and

biotechnology industry, repository data warehouses are

commonly used in e-submissions to provide users with

integrated, analytical information rather than raw data.

Zen of Data Warehousing

Yin

Yang

A relational database (RDB) is typically used for data

acquisition and storage, applications known as on-line

transaction processing (OLTP). Therefore a RDB has to

In Chinese philosophy, Zen refers to the coexistence and harmony of two opposite components. Because data warehousing is so complex, Zen philosophy offers invaluable guiding principles for data warehouse design, implementation, and project management.

Slow is Fast

The book In Search of Excellence, by Thomas Peters and Robert Waterman, Jr., reveals an astounding fact: according to a university study, most managers do not regularly invest large blocks of time in planning. Worse, the time they use is fragmented into tiny segments of activity. The average time allotted to any particular task was only nine minutes. Nine minutes! You cannot even enjoy a good candy bar in nine minutes.

Data warehousing should not be hastened. With the ‘3D’ (Define, Design, Deliver) approach to data warehousing, one should expect to spend a large amount of time up front in the initial definition and planning phase. The rule of thumb is this: every hour cut from the definition and planning phase costs 10 hours in the design phase and 100 hours in the delivery phase. So the payback ratio is 1:10:100. A slow, systematic start in data warehousing can set the project on the right track and will pay off in the long run. The key is doing things right the first time.

Less is More

It is inconceivable that any data warehouse, however sophisticated, can address all business scenarios and needs. The ‘80/20’ rule tells us that you can spend 20% of the effort to build a data warehouse that will meet 80% of the needs. However, if you try to perfect it by addressing the remaining 20% of the requirements, you will end up spending the other 80% of the effort. This is where a lot of projects failed. They have underestimated the effort/payback ratio. So the key is to have a few excellent ‘quick hits’ in your data warehousing effort that target top priorities: mission-critical business issues with the greatest ROI. Don’t try to warehouse all your data.

Future is Now

“Time is the best teacher. Unfortunately, it killed all its pupils.” It is naïve to assume that data warehousing won’t bring about technological, process or organizational changes. Data warehousing, in many ways, is an exercise in chasing a moving target. You’ve probably heard the old adage that there are three types of people in the world: those who make things happen, those who watch things happen, and those who ask, “what happened?” In data warehousing, either you’re an agent of change, or you’re destined to become a victim of change. A successful practitioner not only anticipates change but also embraces it, and builds the data warehouse with change in mind from the beginning. When designing the data warehouse, one should always keep the schema flexible to accommodate future requirements. Change control procedures should also be implemented for regulatory requirements and speedy implementation.

Small is Big

Despite the fact that data redundancy is encouraged in a data warehouse for ease of analytical processing, elegant design and a small footprint are still very important. In other words, redundancy should not be created casually. In many cases, views should be used rather than permanent tables. The advantages of views are dynamic linking and a small footprint. The downside is the performance that is sacrificed at runtime.

Myths of Data Warehousing

Myth #1: We can do well without data warehousing.

A lot of companies out there judge the consequences of data warehousing as a greater risk than the risk of not doing it. This is particularly true in today’s stalled economy. However, history has taught us many lessons on doing nothing. Montgomery Ward did nothing or very little to ensure its survival. Xerox did nothing as the future of traditional copying became grimmer and grimmer, and Xerox’s own inventions (the mouse, the graphical user interface) were grabbed by Apple. The airlines did nothing, even as they saw Southwest achieving the supposedly unthinkable. Sears did nothing as two guys who got fired from their last job, Bernie Marcus and Arthur Blank, built Home Depot into a chain of more than 1,400 stores. Procter and Gamble and Kraft General Foods did nothing as Starbucks redefined coffee. I can go on and on. But the key point is that doing nothing bears a greater risk when your competitors are gaining a better understanding of the business through data warehousing and business intelligence. As the poet Charles Wright has said, “What we refuse defines us.”

Myth #2: Data warehouse is just a fancy name for analysis files.

Can you imagine that an automaker with the mentality that “a car is just a platform running on wheels” can build top quality automobiles over time? Just as you would not call an EXCEL spreadsheet a database, a loosely tied set of analysis files would not necessarily qualify as a data warehouse. Building a data warehouse involves careful planning, deliberate designing, and systematic implementation. One has to add in bells and whistles such as data schema (conceptual, logical and physical data models), data standard, derivation rules, and metadata management. Calling analysis files a data warehouse understates the complexity of data warehousing. Practitioners with this mentality are doomed to fail in the face of changing needs from diverse users.

Myth #3: Standardization slows down the data-warehouse project.

Albeit time-consuming, standardization is an essential part of data warehousing. Projects that do not give serious consideration to data standards end up paying a higher toll. Instead of reinventing the wheel, try to use published, well-adopted data standards for reference. Examples are CDISC in the biopharmaceutical industry and HL7 in the healthcare industry.
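One way to make a data standard enforceable is to check the warehouse's own metadata against it. The following is a minimal sketch under stated assumptions, not part of the original program: the two tables DEMOG and LABS are hypothetical and are created in the WORK library purely for illustration. It uses the SAS dictionary table COLUMNS to list variables whose type or length is not consistent across the tables of a library.

```sas
/* Hypothetical demo tables: PT is $4 in one table and $6 in the other */
data work.demog;
  length inv $3 pt $4;
  inv = '101';
  pt  = '2488';
run;

data work.labs;
  length inv $3 pt $6;
  inv = '101';
  pt  = '2488';
run;

/* List every variable whose type/length combination differs between tables */
proc sql;
  create table work.attr_conflicts as
  select memname, name, type, length
  from dictionary.columns
  where libname = 'WORK'
    and memtype = 'DATA'
    and upcase(name) in
        (select uname
         from (select upcase(name) as uname, type, length
               from dictionary.columns
               where libname = 'WORK' and memtype = 'DATA')
         group by uname
         having count(distinct catx('/', type, length)) > 1)
  order by name, memname;
quit;

proc print data=work.attr_conflicts noobs;
run;
```

In this sketch, PT is the variable expected to be reported, once for each table, while INV is defined consistently and should not appear. In a real warehouse, WORK would be replaced by the warehouse libref, and the attributes could also be compared against a published standard rather than only against each other.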

Myth #4: A DBA can naturally be a good DWA (Data Warehouse Administrator).

The DWA role requires a different skill set from the one possessed by most DBAs. Inadequate user involvement is ultimately responsible for most data warehousing failures. A good DWA wears many hats and needs to be a subject matter expert (SME), an efficient project manager, and a savvy leader and organizer. He or she needs to be able to work with many cross-functional teams to promote the data standard and collaboration so that the final deliverables can bring information to end users’ fingertips.

Validation Issues

It is important to verify the results before applying any permanent data changes to tables in a data warehouse. In particular, in the biopharmaceutical industry, due to the FDA regulation 21 CFR Part 11, it is important to document the changes. In SAS, PROC COMPARE can be a powerful tool for comparing two data sets.

By default, PROC COMPARE generates the following five reports:

1. data set summary
2. variables summary
3. observation summary
4. values comparison summary
5. value comparison results for all variables judged unequal.

These reports are helpful but lengthy. Options in PROC COMPARE can be specified to produce customized reports. The following macro prints out the differences for the data set before and after the change.

    ******************************************;
    * Macro to compare a data set before and *;
    * after data update                      *;
    ******************************************;
    %MACRO COMPARE(DATASET, KEYS);
      /* Both data sets must be sorted (or indexed) by the ID variables */
      PROC COMPARE
        BASE=WAREHSE.&DATASET
        COMPARE=TEMP.&DATASET
        NOPRINT OUT=RESULT OUTNOEQUAL
        OUTBASE OUTCOMPARE OUTDIF;
        ID &KEYS;
      RUN;

      PROC PRINT DATA=RESULT;
        BY &KEYS;
        ID &KEYS;
        TITLE "Discrepancies in &DATASET before and after";
      RUN;
    %MEND COMPARE;

However, PROC COMPARE doesn’t provide much information on added/deleted observations. In this case, SQL can be used to generate a customized validation report. A more sophisticated approach is to develop a set of audit trail utilities using a variety of tools.

Data "Where" House: Knowing Where to Fix

A more critical issue is maintaining the data warehouse for the long run. Specifically, when requests come in to fix data problems, it is critical to know which tables to fix. Since redundant data have been populated in a data warehouse, identifying where to fix is tricky, and a systematic approach can dramatically improve your effectiveness.

Case Study: In a clinical trial data warehouse, it is decided to disqualify one investigator for the final ISE (Integrated Summary of Efficacy) analysis. Also, a few patients were transferred from one site to another in the long-term follow-up phase. Global data updates are needed to address these changes.

In this case, a data dictionary comes in handy. A data dictionary, also known as metadata, contains data about the data. In SAS, dictionary tables are generated at run time and are read-only. The following SAS dictionary tables are available by default:

• MEMBERS: contains members of each library and general information about members.
• TABLES: detailed information on members of each library.
• COLUMNS: information on variables and their attributes.
• CATALOGS: catalog entries in libraries.
• VIEWS: lists views in libraries.
• INDEXES: information on indexes defined for data files.

For the benefit of readers, let's simplify the case and assume that investigator id, investigator site and patient id are common fields for all tables in a clinical research data warehouse. Otherwise, we can still use the same technique; we just have to take one step further by using the COLUMNS table to identify which tables to fix.

Program:

    **********************************************;
    * Global changes to data warehouse:          *;
    * - exclude Dr. Jones (id=875)               *;
    * - change investigator site for patients    *;
    **********************************************;

    *** Get table names in the library ***;
    PROC SQL NOPRINT;
      CREATE TABLE TO_CHG AS
      SELECT MEMNAME
      FROM DICTIONARY.TABLES
      WHERE LIBNAME = 'WAREHSE'
        AND MEMTYPE = 'DATA';   /* data sets only, skip views */
    QUIT;

    *** The data update macro ***;
    %MACRO CHG_SITE;
      DATA TEMP.&DATASET;
        SET WAREHSE.&DATASET (WHERE=(INV NE '875'));
        IF PT IN ('2488','2487','6213','2486')
           AND INVSITE='7371' AND PHASE=2
        THEN INVSITE='0661';
      RUN;
    %MEND;

    *** Macro to apply global changes ***;
    %MACRO CHG_ALL;
      %DO I = 1 %TO &SQLOBS;
        /* Read the I-th table name into macro variable DATASET */
        DATA _NULL_;
          I = &I;
          SET TO_CHG POINT=I;
          CALL SYMPUT('DATASET', COMPRESS(MEMNAME));
          STOP;
        RUN;
        %CHG_SITE
        %COMPARE(&DATASET, INV PT)
      %END;
    %MEND;

    *** Call the macro for global changes ***;
    %CHG_ALL
    RUN;

This program automatically processes the tables in the warehouse one after another, writing each updated copy to the TEMP library and printing the differences before and after the change.

A couple of tips:

• The SQL procedure sets up a macro variable SQLOBS, which contains the number of rows processed.
• COMPRESS in SAS OPTIONS needs to be set to NO, otherwise the POINT= option won’t work.

More Considerations

We live in a fast-paced, dynamic information age. Data warehousing turns quantitative raw data into more user-friendly, qualitative information for better decision-making. However, data warehousing is a painstaking, laborious process. Due to its complexity, if mismanaged, this seemingly value-added effort can quickly turn into a nightmare. In addition, due to the characteristics of a data warehouse, special considerations are needed to build and maintain a data warehouse environment. We must consider both efficiency (doing it right) and effectiveness (doing the right thing) in designing, building, and maintaining a data warehouse.

First of all, a data warehouse may not have the sophisticated audit trail and rollback capabilities that a traditional RDBMS has. Data backup prior to each change is critical. And data validation is essential, even for a simple change.

Appropriate documentation of data warehousing needs to be maintained. This is extremely important in a regulated environment such as a clinical research system. The documentation should include the original requests, test plan, expected and actual results, implementation procedure, etc. A SAS audit trail facility can also be developed.

A critical issue in maintaining a data warehouse is how to identify which tables to fix. A SAS data dictionary can be very useful. Knowing where to fix fundamentally improves the effectiveness of data warehouse maintenance.

Due to the data redundancy in a data warehouse, efficiency is often a concern for building and maintaining a data warehouse. A small efficiency gain in a macro program can be multiplied when the macro is applied repeatedly and globally. Consider the tradeoff between computer resources and human effort. After all, efficiency is measured by a combination of your CPU, I/O, and human costs.

Use the data-driven approach rather than writing a new program each time. Use the macro facility to generate SAS program code, instead of writing it explicitly. SAS/ASSIST® can also be utilized as a CASE tool for code generation. All these techniques are geared towards minimizing chances for human errors in building and updating a data warehouse. Build your data warehouse with a flexible data schema to accommodate future business requirement changes. Let your data warehouse work as hard as you do!

Acknowledgements

The author is thankful to Lindley Frahm and Oscar Cheung for reviewing the manuscript and providing invaluable feedback.

Author Information

Tyson Lee provides technical and management consulting services. He has over 12 years of industry experience working for market leaders and has served as Associate Director of Biometrics. He spearheaded the data warehousing initiative at Genentech and has won the prestigious Technological Achievement Award at Eli Lilly and Company.

Tyson is a recognized contributor and speaker at WUSS, SUGI and PharmSUG. He has won the best paper award at WUSS and has been inducted into the International Who’s Who in Information Technology.

Tyson holds an MBA from UC-Berkeley’s Haas School of Business and an MA in Econometrics & Computer Science.

Contact:

Tyson Lee

LEAP Consulting

Palo Alto, CA 94303

ttlee88@yahoo.com
