Making a Paper Form Electronic - Business Forms Management

Making a Paper Form Electronic

Jessica Enders

Director

Formulate Information Design

Copyright © 2008 Formulate Information Design.

Formulate Information Design grants BFMA the right to use and distribute this copyright material.

INTRODUCTION

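This practical, experience-based paper presents a step-by-step method for taking an existing paper form into an electronic medium. These steps are, broadly:

• Describing your paper form

• Creating the electronic form

• Structure and pagination

• Building in intelligence

• Supplementary form elements and considerations

• Testing.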

An important note about scope

This paper recommends a process for translating an existing paper form into the electronic medium. This is distinct from designing a form, electronic or paper, from scratch.

When designing from scratch, it is best to begin at the highest level, namely working out the process surrounding the form. For example:

•

How is the form to be distributed, completed, returned and processed?

•

Who are the owners and/or stakeholders for the form?

•

What information need is the form attempting to address?

The answers to these and other process questions will be the greatest influence on the design of the form, which is why work on a new form is best begun at this high level.

On the other hand, translation of an existing form from paper to electronic is an exercise in construction rather than design.

Construction always works best if you begin at the lowest level, the foundations as it were. In the case of a form, the lowest level is the individual question.

DESCRIBING YOUR PAPER FORM

The Q&A

The first step in making a paper form electronic is documenting your existing questions. Spelling out the different characteristics of each question makes it easy to systematically transfer each question to the electronic medium.

The document that contains this information can be called a Q&A, which is an abbreviation for “Questions and their respective Answer formats”. Q&As are not only useful for transferring a form from one medium to another; they are also useful when designing a form in the first place. The Q&A concentrates stakeholders on developing just the right language for collecting the information they need. Once the Q&A has been finalised and agreed, the designer can then proceed to work on the physical layout of the form.

For each question in the form, this is what needs to be documented in the Q&A:

•

Whether the question is open or closed.

•

Whether the question is compulsory or optional.

•

How many answers the respondent is allowed to give.

•

What type of answers the respondent is allowed to give.

Further information about each of these characteristics is given below.

Whether the question is open or closed

Open questions allow the respondent to provide their answer in their own words. Closed questions, conversely, require choice from a predefined set of options.

When documenting a closed question, be sure to note which set of options is to be provided to the respondent. This includes whether or not an “Other, specify” option is to be provided.

Whether the question is compulsory or optional

As would be expected, a compulsory question is one that must be answered. Having said that, it is worth noting that the question may be compulsory for only some respondents, usually because of sequencing. For example, all respondents who indicate that they wish to pay by credit card must give a credit card number, but those paying by cheque can leave this question blank.

One of the major benefits of electronic forms is that we can programmatically enforce sequencing and thus the complex criteria about whether a question is compulsory or not. In the paper medium, this type of information is much harder to successfully communicate to respondents.
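As a minimal sketch of how such criteria might be enforced programmatically, each question can be paired with a rule that says when it must be answered. The field names (`payment_method`, `credit_card_number`) and the helper `unanswered_compulsory` are hypothetical, chosen only to mirror the credit card example above.

```python
from typing import Callable, Dict, List

Answers = Dict[str, str]

# Each rule says when a question is compulsory. Field names are hypothetical.
COMPULSORY_WHEN: Dict[str, Callable[[Answers], bool]] = {
    # Compulsory for every respondent.
    "payment_method": lambda a: True,
    # Compulsory only for respondents paying by credit card.
    "credit_card_number": lambda a: a.get("payment_method") == "credit card",
}


def unanswered_compulsory(answers: Answers) -> List[str]:
    """Return the compulsory questions this respondent has left blank."""
    return [
        question
        for question, applies in COMPULSORY_WHEN.items()
        if applies(answers) and not answers.get(question, "").strip()
    ]
```

A respondent paying by cheque passes with the credit card number blank, while one paying by credit card does not.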

How many answers the respondent is allowed to give

This characteristic refers to whether the respondent is to choose only one answer, or can provide multiple answers. For example, respondents should only choose one title (Mr, Mrs, Ms etc) but they can provide more than one given name.

What type of answers the respondent is allowed to give

The most common question types are:

•

Text (alphabetic or numeric, single or multiple lines)

•

Tick boxes (i.e. choice among many options, with or without the ability to provide an ‘‘other’’ response)

•

Dates

•

Times

•

Ratings (from a scale)

•

Ranking (putting items in an order).
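A Q&A can be kept in any format, including a simple table. Purely as an illustration, the four characteristics could also be recorded in a structured way, as in the sketch below. The class name `QAEntry` and its field names are assumptions made for this example, not part of the method itself.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class QAEntry:
    """One row of a Q&A: a question and its answer format."""
    text: str
    is_open: bool                # open (own words) or closed (predefined options)
    compulsory: bool
    max_answers: Optional[int]   # 1 for a single answer, None for no fixed limit
    answer_type: str             # e.g. "text", "tick boxes", "date", "rating"
    options: List[str] = field(default_factory=list)  # closed questions only
    has_other_option: bool = False


# A closed, single-answer question.
title = QAEntry(
    text="Title",
    is_open=False,
    compulsory=True,
    max_answers=1,
    answer_type="tick boxes",
    options=["Mr", "Mrs", "Ms"],
)

# An open question that allows more than one answer.
given_names = QAEntry(
    text="Given name(s)",
    is_open=True,
    compulsory=True,
    max_answers=None,
    answer_type="text",
)
```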

Form flow

The other key piece of documentation of your existing paper form is a map of its flow, i.e. how different questions in the form relate to each other.

The most common relationship between questions is conditional branching, where the answer to one or more earlier questions will determine what later questions are presented to the respondent. For example, questions collecting credit card information should only be asked if the respondent has chosen credit card as their payment method.

Flow is often documented via a flowchart. In such a diagram, the elements are individual questions and the links between the elements describe their relationship.
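The same information can be written down as data. The sketch below is one possible representation, assuming a tiny three-question form. The question names and the helper `path_through_form` are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Answers = Dict[str, str]
Link = Tuple[Callable[[Answers], bool], str]

# Each question maps to its outgoing links: (condition, next question).
# The first link whose condition holds is followed; no links means the end.
FLOW: Dict[str, List[Link]] = {
    "payment_method": [
        (lambda a: a.get("payment_method") == "credit card", "credit_card_number"),
        (lambda a: True, "signature"),
    ],
    "credit_card_number": [(lambda a: True, "signature")],
    "signature": [],
}


def path_through_form(answers: Answers, start: str = "payment_method") -> List[str]:
    """List the questions a respondent with these answers would be asked."""
    path: List[str] = []
    current: Optional[str] = start
    while current is not None:
        path.append(current)
        current = next(
            (target for condition, target in FLOW[current] if condition(answers)),
            None,
        )
    return path
```

Walking the map with different answers is a quick way to check that every question is reachable and that no respondent is sent to a question that does not apply to them.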

CREATING THE ELECTRONIC FORM

Once you have documented your existing paper form, with a Q&A and a Flow, you have the components ready to build your electronic form.

This can be done incrementally: electronic questions are built from the Q&A; then the electronic sequencing is built from the Flow.

Electronic questions

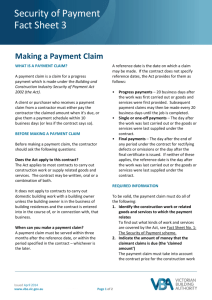

Mapping using paper characteristics

Start by mapping each question in the Q&A to its electronic equivalent.

The mapping proposed in the table below is based on previous research, as well as over a decade of observing users interact with different question variants. In particular, note that:

• Respondents use the size of a text box as a cue for how much information should be entered.

• Radio buttons are almost always preferred over drop downs, because they allow the respondent to see all the available options at once and take less time to use. However, drop downs take up considerably less space.

• Dates and times are split into components to minimise errors (e.g. forgetting to give the year) in the most efficient way possible.

• The recommended format for some questions (e.g. ratings for large numbers of items) has been chosen to prevent the need for a matrix (see below for further information about matrices).

The table is used as follows:

•

The first 3 columns contain the information documented in the Q&A.

•

The last column contains the recommended electronic question design based on the particular permutation given in the first 3 columns.

1 Derham P. (2004) “The impact of space and survey format on open ended responses”. Australasian Journal of Market Research, Volume 12, Number 2, Australian Market & Social Research Society: Melbourne.

2 Schaik P.V. & Ling J. (2007) “Design parameters of rating scales for web sites”. ACM Transactions on Computer-Human Interaction (TOCHI), Volume 14, Issue 1, Association for Computing Machinery: New York.

| Question type | Number of answer options provided | Number/size of answers allowed | Suggested electronic format |
|---|---|---|---|
| Text | n/a | 1 line only | Single line text box |
| Text | n/a | Multiple lines | Multiple line text box |
| Tick boxes | 5 or less | 1 only | Radio buttons |
| Tick boxes | More than 5 | 1 only | Drop down |
| Date | n/a | n/a | In-built date element or else 3 drop downs, one each for day, month and year. Words rather than numbers should be used for the months, to prevent confusion with the day field. |
| Time | 24 (i.e. one for each hour) | n/a | In-built time element or else 2 drop downs, one containing the hour and the other containing “AM/PM”. |
| Time | More than 24 (i.e. finer level of detail than the hour) | n/a | In-built time element or else 3 drop downs, one each for hour, minute and AM/PM. |
| Ratings | 5 or less | 1 only | Radio buttons, one for each rating |
| Ratings | More than 5 (e.g. 10 point scale) | 1 only | Single line, reduced width text box into which the respondent enters their rating, or else a slider. |
| Rankings | n/a | First 3 choices only | Ask 3 questions, i.e. “Which would be your first/second/third choice” |
| Rankings | n/a | More than 3 choices | Single line, reduced width text boxes, one for each option, into which the respondent enters their ranking |
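For those who keep their Q&A in a structured form, the table can be read as a simple lookup. The function below is a sketch of that reading only. The function name, the argument names and the exact strings are assumptions, and treating “24” as “24 or fewer” for times is an interpretation rather than something the table states.

```python
from typing import Optional


def suggested_format(question_type: str,
                     options: Optional[int] = None,
                     answers: Optional[str] = None) -> str:
    """Look up the suggested electronic format for one Q&A entry.

    question_type: "text", "tick boxes", "date", "time", "ratings" or "rankings"
    options: number of answer options provided (None where the table says n/a)
    answers: number/size of answers allowed, using the table's wording
    """
    if question_type == "text":
        if answers == "1 line only":
            return "single line text box"
        if answers == "multiple lines":
            return "multiple line text box"
    elif question_type == "tick boxes" and answers == "1 only" and options is not None:
        return "radio buttons" if options <= 5 else "drop down"
    elif question_type == "date":
        return "in-built date element, or 3 drop downs (day, month, year)"
    elif question_type == "time" and options is not None:
        # Assumption: anything up to one option per hour needs no minutes.
        if options <= 24:
            return "in-built time element, or 2 drop downs (hour, AM/PM)"
        return "in-built time element, or 3 drop downs (hour, minute, AM/PM)"
    elif question_type == "ratings" and answers == "1 only" and options is not None:
        if options <= 5:
            return "radio buttons, one for each rating"
        return "reduced width single line text box, or a slider"
    elif question_type == "rankings":
        if answers == "first 3 choices only":
            return "3 questions (first, second and third choice)"
        if answers == "more than 3 choices":
            return "reduced width single line text boxes, one for each option"
    # Combinations the table does not cover are left to the designer.
    raise ValueError(f"no suggestion for {question_type!r}, {options!r}, {answers!r}")
```

Raising an error for uncovered combinations is a deliberate choice in this sketch: it flags questions that need a design decision rather than silently picking a format.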

3 For example, in some countries, dates are written numerically with the month first followed by the day (e.g. 06/30/08 for 30 June 2008) while in other countries, dates are written numerically with the day first followed by the month (e.g. 30/06/08 for 30 June 2008).

A word about text boxes

In the paper forms world, there is an ongoing debate about whether or not to use single character text boxes and/or combs to ensure that the respondent wrote legibly. In the electronic forms world, there is no such debate because computer type has eliminated problems of illegibility. This means that in the majority of cases, text data should be captured via one or more whole lines, rather than boxes for individual characters.

A common exception to this rule is when the text box is used for the entering of long strings of digits, such as credit card or account numbers. To help the respondent ‘see’ where they are up to when entering such numbers, some designers like to provide multiple smaller text boxes rather than one large text box.

For example, a unique 6-digit number called a BSB (Bank, State & Branch) is used to identify financial institutions in Australia. To help the user enter this number correctly, some forms will present two 3-digit text boxes rather than a single 6-digit text box.
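When a number is collected in two boxes, the form still needs to treat it as one value. The sketch below shows one way to move between the two views. The function names are hypothetical and `"123456"` is a made-up value, not a real BSB.

```python
from typing import Tuple


def split_bsb(bsb: str) -> Tuple[str, str]:
    """Split a 6-digit BSB into the two 3-digit parts shown in the boxes."""
    digits = "".join(ch for ch in bsb if ch.isdigit())  # ignore spaces and hyphens
    if len(digits) != 6:
        raise ValueError("a BSB has exactly 6 digits")
    return digits[:3], digits[3:]


def join_bsb(first_box: str, second_box: str) -> str:
    """Recombine the two boxes into the single value that is stored."""
    return "".join(split_bsb(first_box + second_box))
```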

A word about matrices

In the context of forms design, a matrix is the presentation of more than one question in a grid layout, where all questions use the same set of answer options. For example, matrices are often used when respondents are being asked to rate a range of items on one attribute, such as their level of satisfaction with each.

Matrices can be used in the electronic medium as much as they can in the paper medium. However, experience suggests that many respondents have difficulty understanding matrices and how to complete them.

Therefore, as a general rule, matrices should be avoided.

Compulsory questions

In the electronic medium it is common practice to mark compulsory questions with a red asterisk (*) and, as a result, this has become a standard by default. Ideally this asterisk will be placed immediately before the question, because then the respondent:

• knows they are going to have to answer the question before reading it, thereby prompting them to pay more attention to it than they may otherwise; and

• can quickly identify the compulsory questions by scanning, as the position of the indicator is not influenced by the length of individual questions.

The exception to this rule is when all of the questions on the form are compulsory. In this case, the red asterisk is redundant, provided respondents are told up front that all questions must be answered.

If not all, but most, of the questions must be answered, the recommended approach is to indicate optional, rather than compulsory questions. Placing the words ‘‘(Optional)’’ in the question is a good way to indicate as much. It is less likely to be missed (compared to being a separate instruction, for example) thereby minimising unnecessary work for the respondent.

Regardless of whether it is the compulsory or the optional questions that are marked on the form, information about which approach has been used should appear at the beginning of the form, before any of the questions, e.g. “Mandatory questions are marked with a red asterisk (*)” or “All questions must be answered unless otherwise indicated”.

Flow

Your Flow document spells out what conditional branching you will need to build into the electronic form. The particular software or language being used to build the form will determine how such logic is to be programmed.

4 Adopting an approach that respondents are familiar with, because of its frequent use, will reduce the learning required to complete the form.

STRUCTURE AND PAGINATION

After the individual questions have been moulded into the appropriate format, the electronic form is starting to take shape. It is now time to ‘pull back’ one level and work on the structure of the entire form.

Differences in structure: paper vs electronic

A paper form has two main structural layers: pages and sections . Pages contain one or more sections and sections contain one or more questions. Sections are used to group related questions and pages can be used to group related sections.

An electronic form has the same two structural layers. Electronic sections are exactly the same as paper sections. However, I would argue that there are two differences between paper pages and electronic pages:

• in the electronic medium, the page is displayed on screen rather than on a physical sheet of paper; and, more importantly,

• moving between pages has more significance in the electronic medium than it does in the print medium.

Moving between form pages means more in the electronic medium because it is done by use of buttons (e.g. “Next” or “Continue”), which are also used for key actions like “Submit” and “Sign-up”. These buttons often trigger actions, such as validation, which do not happen when the user is filling out a paper form.

Also, some systems do not allow backward movement. Users have learnt from this to use forward-movement actions with caution.

In the paper realm, however, moving between pages is very ‘undoable’ and the action is completely different to that of submitting the form (e.g. by posting it). Therefore, respondents move between pages in a paper form much more subconsciously.

The implication is that the form designer needs to focus more on pagination when working in the electronic medium.

What is an electronic page?

Experienced form designers try not to split related questions across pages, and this technique applies equally in the electronic realm as it does in print.

The other major constraint on pagination when working with printed forms is the size of the paper they are printed on. In most cases, standard paper sizes are used, as they are familiar to respondents, allow enough room for handwriting and are relatively cheap to post.

When working electronically, there are multiple constraints on what the user sees on the screen, namely the:

• size of their monitor (e.g. 15-inch or 17-inch);

• screen resolution (e.g. 800 pixels wide x 600 pixels high or 1024 pixels wide x 768 pixels high);

• size of the window the form is appearing in (e.g. whether or not it is maximised to the full width of the screen);

• text size chosen by the respondent (e.g. 12-point or 14-point).

If the form is being viewed with a web browser, then the type of browser the respondent is using (e.g. Internet Explorer or Firefox) can also have an effect.

All this means we can never be sure exactly how our electronic form displays for the respondent. However, there are some ‘rules of thumb’ that can be applied to give the designer a better approximation for what the majority of users will see.

First you need to decide what minimum screen resolution you are going to design for. At present the design community is divided between 800 x 600 and 1024 x 768 as a workable minimum. If you wish to be conservative, or expect that a disproportionate number of your respondents will be working with older hardware (i.e. smaller resolution screens), then 800 x 600 is the better minimum.

When working with a minimum of 800 x 600, deduct at least 50 pixels for vertical scrollbars and the edge of the window, leaving an estimated available width of 750 pixels.

When working with 1024 x 768, deducting 50 pixels leaves the rather awkward and not-nicely-divisible number of 974. One recommendation is to use a width of 960 pixels instead, as 960 divides nicely for grid-based design.
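The arithmetic behind these widths can be checked with a few lines of code. This is only an illustration: the 50-pixel allowance comes from the text above, while the helper names and the choice to test column counts from 2 to 12 are assumptions.

```python
from typing import List

SCROLLBAR_AND_EDGE_ALLOWANCE = 50  # pixels, as suggested in the text


def available_width(screen_width: int) -> int:
    """Estimated usable width once scrollbar and window edges are deducted."""
    return screen_width - SCROLLBAR_AND_EDGE_ALLOWANCE


def even_column_counts(width: int, max_columns: int = 12) -> List[int]:
    """Column counts (2 to max_columns) that divide the width exactly."""
    return [n for n in range(2, max_columns + 1) if width % n == 0]


print(available_width(800))      # 750
print(available_width(1024))     # 974
print(even_column_counts(974))   # [2]
print(even_column_counts(960))   # [2, 3, 4, 5, 6, 8, 10, 12]
```

A width of 974 pixels can only be split evenly into 2 columns, whereas 960 pixels supports most common grid layouts.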

5 Note that as the monitor size gets larger, it can offer greater screen resolution. So, for example, you wouldn’t usually have a resolution of 1280 x 1024 on a 13-inch monitor.

6 Moll C. (2006) “Optimal width for 1024px resolution?” Entry on Authentic Boredom website (http://www.cameronmoll.com/archives/001220.html). Accessed 3 March 2008.

We now have some guidelines for page width … what about length? Some sources will say that main content should always be above the fold, with the term “fold” referring to the part of the screen the user can see without having to scroll vertically. The problem with this advice is that we cannot know where the fold is, because of all the variables described above. Moreover, it is unlikely that the design of a form is going to change significantly because of fold considerations, which are really more of a concern when designing websites.

Deciding where to make a new page

Designers of electronic forms are not limited in page length to the same extent as paper forms designers, because of the ubiquitous vertical scrollbar. It is theoretically possible to have a 200-question form appear all on one page (i.e. screen). It is equally possible to have a 200-question form appear in 200 pages, one question per page.

Neither of these extremes is a good choice. In the case of the single screen 200-question form:

• a large amount of scrolling is required;

• it is harder for the respondent to keep their place in the form; and

• the data from the form is more likely to be lost or corrupted, as it is only submitted to the server when an action button like “Submit” is used (at the end of the 200 questions).

In the case of the 200-page form:

• a large amount of button clicking is required;

• this in turn will make respondents more conscious of the length of the form; and

• completing the form will take longer as the respondent has to wait for each page to load.

Obviously the ideal is an appropriate middle ground between these two extremes. From experience, this middle ground appears to be somewhere between 5 and 7 pages with no more than 4 ‘‘screenfuls’’ (the amount that can be seen in one screen without scrolling) on each. When the form spreads over more than 7 pages, it begins to feel too long; more than 4 screenfuls and the user is likely to get lost or confused.

A good starting point for determining appropriate pagination is, obviously, the sections that are present in the paper form.
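These rules of thumb are easy to check against a draft pagination plan. The sketch below assumes the designer has estimated the number of screenfuls on each page. The function name and the wording of the warnings are hypothetical, and the limits are the experience-based figures given above rather than hard rules.

```python
from typing import List

MAX_PAGES = 7                # beyond this the form begins to feel too long
MAX_SCREENFULS_PER_PAGE = 4  # beyond this the user is likely to get lost


def pagination_warnings(screenfuls_per_page: List[float]) -> List[str]:
    """Compare a draft pagination plan with the rules of thumb."""
    warnings = []
    if len(screenfuls_per_page) > MAX_PAGES:
        warnings.append(
            f"{len(screenfuls_per_page)} pages: more than {MAX_PAGES} may feel too long"
        )
    for page_number, screenfuls in enumerate(screenfuls_per_page, start=1):
        if screenfuls > MAX_SCREENFULS_PER_PAGE:
            warnings.append(
                f"page {page_number}: {screenfuls} screenfuls exceeds {MAX_SCREENFULS_PER_PAGE}"
            )
    return warnings
```

For example, a plan of six pages with at most three screenfuls each produces no warnings, while a single page of ten screenfuls produces one.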

Moving between pages

Respondents move between pages of an electronic form using action buttons, such as:

• Next

• Continue

• Previous

• Back

• Cancel

• Reset

• Submit

• Clear

• Save

• OK.

Placing a short line of descriptive text underneath the button helps alleviate user fears or confusion about what the button will do. For example, the description underneath a “Next” button on Step 3 should, at a minimum, mention that the user will be taken to Step 4.

For forms in English or other languages that read from left to right horizontally, it is generally accepted that the best location for action buttons is at the bottom of the screen, i.e. where the respondent naturally finds themselves after completing the page. A second set of action buttons can be provided at the top of the screen, if appropriate and useful.

I usually recommend that on multiple page forms, the ‘‘Previous’’ button should be toward the left hand edge and the ‘‘Next’’ button toward the right hand edge. My (untested) belief is that the movement of the eye from left to right when reading creates a sense that right is forward and left is backward.

7 These recommendations can easily be adapted for languages that read in other directions.

Horizontal order of other types of action buttons is a little more contestable. For example, should “Submit” be to the left or right of “Cancel”? One argument is that “Submit” should be to the right of “Cancel”, as it is the action that is ‘moving forward’ with the form. The counter argument is that “Submit” should be to the left of “Cancel”, as it is the primary action that respondents want. Either way, the tab order should be set such that moving from the last question to the action buttons with the keyboard takes the user to “Submit” rather than “Cancel”, to minimise the chance of accidental data loss.

Another way to minimise accidental data loss is to avoid using “Reset” and “Clear”. In most cases, there is no real need for such an action and it is all too easy for them to be used unintentionally. When these buttons are provided, the action should be confirmed with the user before being carried out.

Conversely, providing a ‘‘Save’’ button gives respondents the flexibility to fill out the form over more than one sitting and manage interruptions.

Pagination and web accessibility

While the electronic forms designer is freed from page length constraints, making a web form accessible can be harder when working electronically. Web accessibility is about ensuring everyone can use the form (or an equivalent) regardless of disability, and whatever technology they are using to browse. There isn’t scope in this paper to discuss accessibility of web forms in detail; suffice it to say that using valid HTML and CSS will go a long way toward meeting this goal.

However, there is one major stumbling block for web forms and accessibility, and that is how conditional branching is implemented.

This challenge is best illustrated by an example.

8 HTML, or HyperText Markup Language, is a language for programming websites. For forms, valid HTML means using the <fieldset> and <label> tags, as well as the tabindex attribute.

9 CSS stands for Cascading Style Sheets, a language to describe the presentation (e.g. formatting) of a document written in a markup language, most commonly HTML.

Fictional Internet Service Provider (ISP) ‘‘Internet Is Us’’ provides internet access to householders in only one state of Australia, and the cost varies depending on whether the customer is in the city or the country. Internet Is Us has an online sign-up form, the first question of which asks where the customer is located. This allows them to give the right pricing and screen out customers from other states.

Being an ISP, Internet Is Us is keen to make the sign-up form ‘sexy’ and as technologically advanced as possible, so they use JavaScript to implement the conditional branching within the form. This means that as answers are input, parts of the form change, without the whole page reloading.

The problem with this approach is that the form is unlikely to be accessible, as:

• many screen readers cannot cope with JavaScript (e.g. they do not ‘see’ that the page has changed); and

• some users have client-side scripting (of which JavaScript is one example) switched off for security reasons.

(The usability of the form may also be compromised. Depending on how the form is designed, dynamic changes may not be noticed by the respondent.)

In fact, any use of client-side scripting is likely to lead to accessibility issues. Therefore, it is better if the form does not rely on client-side scripting to function. This means that only server-side scripting is used to present the respondent with the right questions. Server-side scripts only run when data is sent to the server; this data transmission happens when the respondent moves between pages using action buttons. The server then uses this data to determine which page, with which questions, to send to the user’s browser.

The implication of this accessible approach is that questions that trigger branching need to be on separate (earlier) pages from the questions they branch to. In the case of the Internet Is Us sign-up, the questions about location need to be on a separate page from the rest of the form. In this case, as in many others, it makes sense from a design point-of-view to have these ‘set-up’ questions on the first page with the remainder of the form in the second and subsequent pages. In other cases, however, the need to break questions over pages can lead to a clumsy design. Until more accessible client-side scripting is available, it is up to the designer and stakeholders to determine the appropriate balance between web accessibility and pagination.
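The server-side approach for the Internet Is Us example might look something like the sketch below, which decides which page to send next once the location page has been submitted. It is written in Python purely for illustration; the page names, the field names and the serviced state (`"Victoria"`) are all hypothetical, as the example does not specify them.

```python
from typing import Dict

SERVICED_STATE = "Victoria"  # hypothetical: the example does not name the state


def next_page(submitted_page: str, answers: Dict[str, str]) -> str:
    """Decide, on the server, which page to send after a page is submitted."""
    if submitted_page == "location":
        # Screen out customers from other states.
        if answers.get("state") != SERVICED_STATE:
            return "not_available"
        # Pricing differs between city and country customers.
        if answers.get("area") == "city":
            return "sign_up_city_pricing"
        return "sign_up_country_pricing"
    if submitted_page in ("sign_up_city_pricing", "sign_up_country_pricing"):
        return "confirmation"
    raise ValueError(f"unknown page: {submitted_page!r}")
```

Because the decision is made only when the respondent uses an action button, the branching question (location) has to sit on an earlier page than the questions it leads to.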

BUILDING IN INTELLIGENCE

One of the primary benefits of electronic forms is the intelligence that can be built into them.

Computers are much better placed to do certain tasks, such as mathematical calculations and comparisons, and this should be leveraged in forms. For example, an electronic form can ensure that arrival dates are not before departure dates, sum components to check totals, calculate percentages and ensure that someone doesn’t say they have a negative number of children.

There are 3 main ways that intelligence can be built into a form:

• sequencing;

• auto-population of data; and/or

• validation.

Sequencing

An electronic form can implement sequencing on behalf of the respondent, ensuring that only relevant questions are displayed.

Auto-population of data

Auto-population of data refers to when the computer ‘answers’ questions for the respondent. For example, if a user is filling out a form after logging in to a system, some identification questions can be answered (or not even asked) using their log in information. This might include shipping information or preferred credit card, whatever is stored in the user’s login.

Auto-population reduces the burden on the respondent and improves data quality, provided respondents are given the opportunity to modify any auto-populated data.
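A minimal sketch of this idea follows, assuming the stored login information is available as a simple dictionary. The function names and field names are hypothetical. The respondent's own edits always take precedence over the auto-populated values.

```python
from typing import Dict


def prefill(blank_form: Dict[str, str], stored_profile: Dict[str, str]) -> Dict[str, str]:
    """Start each question with the stored value where one exists."""
    return {
        name: stored_profile.get(name, default)
        for name, default in blank_form.items()
    }


def final_answers(prefilled: Dict[str, str], respondent_edits: Dict[str, str]) -> Dict[str, str]:
    """Apply the respondent's changes on top of the auto-populated values."""
    return {**prefilled, **respondent_edits}


blank = {"name": "", "shipping_address": "", "gift_message": ""}
profile = {"name": "Pat Citizen", "shipping_address": "1 Example Street"}

shown_to_respondent = prefill(blank, profile)
submitted = final_answers(shown_to_respondent, {"shipping_address": "2 Sample Road"})
```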

Validation

Validation is the process of checking the answers provided by the respondent. Validation usually checks one or both of the following elements:

•

Data completeness: i.e. all compulsory questions are answered.

•

Logic: i.e. answers are not logically inconsistent.

Validation may occur immediately after an answer is entered, at the end of each page or at the end of the whole form. Validation failures are reported back to the respondent, so that they may be corrected before submission.
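Both kinds of check can be sketched in a few lines. The example below assumes answers have already been converted to suitable types (dates and whole numbers); the function name, field names and messages are hypothetical, and the logic checks are taken from the examples given earlier in this section.

```python
from datetime import date
from typing import Any, Dict, List


def validate(answers: Dict[str, Any], compulsory: List[str]) -> List[str]:
    """Return a list of problems to report back to the respondent."""
    problems = []

    # Data completeness: all compulsory questions are answered.
    for name in compulsory:
        if answers.get(name) in (None, ""):
            problems.append(f"{name}: an answer is required")

    # Logic: answers are not logically inconsistent.
    departure = answers.get("departure_date")
    arrival = answers.get("arrival_date")
    if departure and arrival and arrival < departure:
        problems.append("arrival_date: must not be before departure_date")

    children = answers.get("number_of_children")
    if isinstance(children, int) and children < 0:
        problems.append("number_of_children: must not be negative")

    return problems
```

Returning every problem at once, rather than stopping at the first, lets the respondent correct them all in one pass.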

SUPPLEMENTARY FORM ELEMENTS AND CONSIDERATIONS

The majority of the form is in place once the questions and their answer formats, sequencing and pagination have been implemented. What remains are the following supplementary elements: progress indicators and assistance. The form can be completed without these elements, but the user experience and resulting data quality is better for their inclusion.

Progress indicators

A progress indicator tells the respondent approximately how far through the form they are. Progress indicators should:

• be present on every page, typically at the top right hand corner; and

• describe the user’s progress in meaningful terms, e.g. “Step 2 of 4” rather than “63% complete”.

Progress indicators can be made ‘clickable’. For example, clicking on “Step 3” in the progress indicator will load Step 3 of the form. This should only be done when the form does not need to be completed in a linear fashion. That is, clickable progress indicators are suitable only when the different pages of the form can be completed in any order, with the respondent moving forwards and backwards as they please.
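As a small illustration, the label for such an indicator, and the rule about when its steps may be clickable, could be expressed as follows. The function names and the `linear` flag are assumptions made for this sketch.

```python
def progress_label(current_step: int, total_steps: int) -> str:
    """Describe progress in meaningful terms, e.g. 'Step 2 of 4'."""
    if not 1 <= current_step <= total_steps:
        raise ValueError("current_step must be between 1 and total_steps")
    return f"Step {current_step} of {total_steps}"


def steps_are_clickable(linear: bool) -> bool:
    """Steps should only be clickable when pages can be completed in any order."""
    return not linear
```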

Assistance

It is useful to distinguish between the two types of assistance that can be provided in forms: help and tips.

Tips are question-specific supplementary information such as definitions, clarification and examples. As they are question-specific, they should be visually associated with the questions they refer to (e.g. to the right hand side or immediately underneath the question).

Help, on the other hand, is assistance pertaining to the form as a whole, such as descriptions of how the software works. Ideally the form will be so well designed that the user will not need help!

However, because respondents are diverse, and because it is impossible to anticipate every situation of use, it is wise to provide access to help. Like progress indicators, this help should be available on every page and can also be located in the top right hand corner.

Slick pipeline

The term slick pipeline describes the streamlining of web forms, relative to the websites they sit within. The idea is that once the respondent has entered into the form, unnecessary elements such as website navigation are removed. (Visual branding is retained to convey to the respondent that they are still within the originating site.)

A slick pipeline is used to minimise distraction as well as the chance of accidental (or intentional) movement away from the form. However, navigation elements for the form itself should still be provided, to give respondents total control over the form filling experience.

TESTING

There are two main types of testing relevant to electronic forms:

• testing of the programming of the form; and

• testing of the usability of the form.

Programming testing

Testing of the programming behind the form aims to ensure there are minimal defects in the software or markup. Note the reference to minimal rather than no defects: it is very difficult to ensure that a program is defect free but it can be measured against criteria that specify the acceptable level of quality.

Part of these quality criteria will be a specification of what systems the program should be able to run on. For all electronic forms, this means deciding which operating systems will be supported. At the time of writing this paper, the main operating systems are Microsoft Windows (XP, Vista and 2000), Macintosh and Linux.

For web forms, developing quality criteria will also mean deciding which browsers will be supported. Currently the most common browsers are Microsoft Internet Explorer (versions 6 and 7) and Firefox. Other browsers in use include Safari, Opera, Netscape, Konqueror and older versions of Internet Explorer.

For non-web forms, there is also a need to decide which versions of relevant software will be supported. For example, the current version of Microsoft Word is Word 2007 for Windows and Word 2008 for Macintosh, but Word 2003, 2002, 2001, 2000 and 98 are probably still in use.

The quality criteria will be used to develop a series of test cases. Test cases describe what should happen when a particular input is used. These test cases are programmed into a series of test scripts, which are then run and the output examined.
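As a simple illustration of the relationship between test cases and test scripts, the sketch below lists a few test cases for a hypothetical check on a 6-digit number and then runs them. The function under test (`is_valid_six_digit_number`) and the chosen inputs are assumptions for this example only.

```python
from typing import List, Tuple


def is_valid_six_digit_number(text: str) -> bool:
    """Hypothetical function under test: exactly six digits, nothing else."""
    return len(text) == 6 and text.isascii() and text.isdigit()


# Test cases: a particular input and what should happen when it is used.
TEST_CASES: List[Tuple[str, bool]] = [
    ("123456", True),    # typical valid input
    ("12345", False),    # too short
    ("1234567", False),  # too long
    ("12345a", False),   # contains a letter
    ("", False),         # left blank
]


def run_test_cases() -> List[str]:
    """Test script: run every case and report any that did not behave as expected."""
    failures = []
    for given, expected in TEST_CASES:
        actual = is_valid_six_digit_number(given)
        if actual != expected:
            failures.append(f"input {given!r}: expected {expected}, got {actual}")
    return failures
```

An empty list of failures means every test case behaved as specified; anything else is output to be examined.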

10 http://www.w3schools.com/browsers/browsers_os.asp. Accessed 29 February 2008.

11 http://www.w3schools.com/browsers/browsers_stats.asp. Accessed 29 February 2008.

The resources needed for program testing should not be underestimated. Even a very basic application like Microsoft WordPad has 325 possible interactions.

Usability testing

What and why

The best design guidelines cannot account for specific contexts or every variant of a form. This is why usability testing is strongly recommended.

Usability testing is exploring the ability of representative users to successfully and easily complete the form in realistic circumstances.

As the definition suggests, usability testing involves replicating, as closely as possible, the likely context of use. This may mean going out into people’s homes or government offices and watching them fill out the form as crying children and ringing telephones distract them.

The definition also stresses the importance of using typical respondents as test participants, as opposed to colleagues, friends or family. It may be easy to ask your colleagues, friends or family to ‘have a go’ at your form. While this is better than no testing at all, it is unlikely to draw out the majority of problems with the form.

This is because your colleagues, friends and family are more like you than a representative sample of respondents. If they weren’t, they wouldn’t be your colleagues, friends and family! To get the true diversity that leads to useful feedback, you need to test with the sorts of people that would actually be filling out the form.

Concurrent or retrospective

There are two main ways that a usability test can be conducted, and they relate to the time at which feedback is collected.

12 Memon A.M., Pollack M.E. & Soffa M.L. (1999) “Using a goal-driven approach to generate test cases for GUIs”. Proceedings of the 21st International Conference on Software Engineering, IEEE Computer Society Press: Los Alamitos.

Concurrent probing gathers feedback while the participant is working through the form, by the participant thinking aloud. Concurrent probing yields ‘top-of-mind’ and real-time responses but it does incur a cognitive burden. For this reason, concurrent probing should not be used if you wish to collect quantitative metrics about the form’s usability, such as completion time and error rates. Comprehension and self-censoring are also better assessed using retrospective probes.

Retrospective probing gathers feedback after the participant has finished filling some or all of the form. Because the participant is not interrupted, metrics can be gathered. However, there is a greater reliance on the participant’s ability to accurately recall and report their thoughts and feelings at different times while completing the form. Ericsson & Simon show that retrospective probing does yield less information than concurrent probing.

The importance of good facilitation

The quality of usability testing rests very heavily on the skills of the facilitator. Their job is to elicit detailed information in an unbiased way, while making the test participant comfortable with what may seem like a very unusual activity.

A good facilitator:

• is professional yet friendly;

• is able to ‘think on their feet’;

• does not lead the participant but instead probes for more information;

• uses appropriate back channelling and body language; and, most importantly,

• knows how to listen and when to remain silent.

More information

For more information about usability testing, see:

13 Sudman S., Bradburn N.M. & Schwarz N. (1996) Thinking about answers: the application of cognitive processes to survey methodology. Jossey-Bass: San Francisco, p. 18.

14 Ericsson K.A. & Simon H.A. (1984) Protocol analysis: verbal reports as data. MIT Press: Cambridge, p. 371.

• “Learn about usability testing” on the Usability.gov website (http://www.usability.gov/refine/learnusa.html).

• “What is usability testing” on the website of Information & Design (http://www.infodesign.com.au/usabilityresources/evaluation/usabilitytesting.asp).

A good collection of resources for conducting usability testing can be found on the Usability and User Experience website at http://www.stcsig.org/usability/resources/toolkit/toolkit.html.

15 Usability and User Experience is a community within the Society for Technical Communication.
