

CISC 425 Week 6

Multitouch

Plan

- History of Multitouch

- Part II: Empirical Work

- Group Presentations Assignment 2

Main Sources:

Bill Buxton. Multi-Touch Systems that I Have Known and Loved (2007-2014). http://www.billbuxton.com/multitouchOverview.html

Florence Ion. From touch displays to the Surface: A brief history of touchscreen technology (2013). http://arstechnica.com/gadgets/2013/04/from-touch-displays-to-the-surface-a-brief-history-of-touchscreen-technology/

Sam Mallery. A Visual History of Pinch to Zoom. (2012). http://www.sam-mallery.com/2012/09/a-visual-history-of-pinch-to-zoom/

Wikipedia

ACM SIGCHI Proceedings

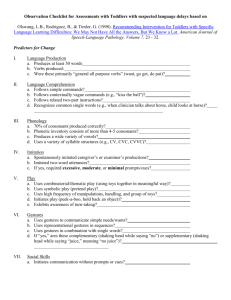

Readings

Jefferson Y. Han. 2005. Low-cost multi-touch sensing through frustrated total

internal reflection. In Proceedings of the 18th annual ACM symposium on User

interface software and technology (UIST '05). ACM, New York, NY, USA, 115-118.

Jacob O. Wobbrock, Meredith Ringel Morris, and Andrew D. Wilson. 2009. User-defined gestures for surface computing. In Proceedings of the SIGCHI Conference

on Human Factors in Computing Systems (CHI '09). ACM, New York, NY, USA,

1083-1092.

Queen’s University: Hugh LeCaine

1954

E. A. Johnson. (8-9-1965) Touch display—a novel input/

output device for computers

Royal Radar Establishment Malvern, UK. Single capacitive

touch system used for air traffic control (top). Touch

pictures on a screen that would navigate them between

menus showing details of each aircraft in the area. Two

years later, Johnson further expounded on the technology

with photographs and diagrams in "Touch Displays: A

Programmed Man-Machine Interface," published

in Ergonomics in 1967.

E.A. Johnson

“Touchscreens were originally integrated with cathode ray tube (CRT) technology, the same as old TV screens, and so they weren’t flat,” explains Michal Diakowski, from UTouch, a UK-based touchscreen manufacturer. This cumbersome design resulted in a lack of accuracy in knowing where the screen had been touched.

1965

Capacitive Touch Screen

A capacitive touchscreen panel uses an insulator, like

glass, that is coated with a transparent conductor such

as indium tin oxide (ITO). The "conductive" part is

usually a human finger, which makes for a fine

electrical conductor. Johnson's initial technology could

only process one touch at a time, and what we'd

describe today as "multitouch" was still somewhat a

ways away. The invention was also binary in its

interpretation of touch—the interface registered

contact or it didn't register contact. Pressure sensitivity

would arrive much later.

Although capacitive touchscreens were designed first,

they were eclipsed in the early years of touch by

resistive touchscreens. American inventor Dr. G.

Samuel Hurst developed resistive touchscreens almost

accidentally. The Berea College Magazine for alumni

described it like this:

Resistive Touch Screen

Elographics

1970

To study atomic physics the research team used an

overworked Van de Graaff accelerator that was only

available at night. Tedious analyses slowed their

research. Sam thought of a way to solve that problem.

He, Parks, and Thurman Stewart, another doctoral

student, used electrically conductive paper to read a

pair of x- and y- coordinates. That idea led to the first

touch screen for a computer. With this prototype, his

students could compute in a few hours what otherwise

had taken days to accomplish. Hurst and the research

team had been working at the University of Kentucky.

The university tried to file a patent on his behalf to

protect this accidental invention from duplication, but

its scientific origins made it seem like it wasn't that

applicable outside the laboratory.

Hurst, however, had other ideas. "I thought it might be

useful for other things," he said in the article. In 1970,

after he returned to work at the Oak Ridge National

Laboratory (ORNL), Hurst began an after-hours

experiment. In his basement, Hurst and nine friends

from various other areas of expertise set out to refine

what had been accidentally invented. The group called

its fledgling venture "Elographics," and the team

discovered that a touchscreen on a computer monitor

By 1971, a number of different touch-capable

machines had been introduced, though none were

pressure sensitive. One of the most widely used touch-capable devices at the time was the University of

Illinois's PLATO IV terminal—one of the first

generalized computer assisted instruction systems.

The PLATO IV eschewed capacitive or resistive touch

in favor of an infrared system (we'll explain shortly).

PLATO IV was the first touchscreen computer to be

used in a classroom that allowed students to touch the

screen to answer questions. It sensed a 16x16 array of

points.
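A minimal sketch of how an infrared-grid panel of this kind can resolve a touch, assuming 16 beams per axis and a finger that blocks at least one beam on each axis. The function name and the beam representation are hypothetical and are not taken from the PLATO IV hardware.

```python
from typing import Optional, Sequence, Tuple

GRID = 16  # the slide states that PLATO IV sensed a 16x16 array of points


def locate_touch(x_blocked: Sequence[bool],
                 y_blocked: Sequence[bool]) -> Optional[Tuple[int, int]]:
    """Return the (column, row) of a single touch, or None if no beam is blocked.

    x_blocked[i] is True when the i-th vertical beam is interrupted,
    y_blocked[j] is True when the j-th horizontal beam is interrupted.
    """
    cols = [i for i, blocked in enumerate(x_blocked) if blocked]
    rows = [j for j, blocked in enumerate(y_blocked) if blocked]
    if not cols or not rows:
        return None
    # A fingertip may interrupt several adjacent beams; report the middle one.
    return cols[len(cols) // 2], rows[len(rows) // 2]
```

Because each axis is read independently, a grid like this identifies one touch cleanly but cannot tell two simultaneous touches apart from their mirrored positions.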

Plato IV

1971

DynaBook

1972

Mueller: Direct television drawing and image manipulating

system

1973

The KiddiComp concept, envisioned by Alan Kay in

1968, while a PhD candidate[1][2] and later developed

and described as the Dynabook in his 1972 proposal A

personal computer for children of all ages, outlines the

requirements for a conceptual portable educational

device that would offer similar functionality to that now

supplied via a laptop computer or (in some of its other

incarnations) a tablet or slate computer with the

exception of the requirement for any Dynabook device

offering near eternal battery life. Adults could also use

a Dynabook, but the target audience was children.

Though the hardware required to create a Dynabook is

here today, Alan Kay still thinks the Dynabook hasn't

been invented yet, because key software and

educational curricula are missing.[citation needed]

When Microsoft came up with its tablet PC, Kay was

quoted as saying "Microsoft's Tablet PC, the first

Dynabook-like computer good enough to criticize".[4]

A comment he had earlier applied to the Apple

Macintosh. The ideas led to the development of the

Xerox Alto prototype, which was originally called “the

interim Dynabook”.[6][7][8] It embodied all the elements

of a graphical user interface, or GUI, as early as 1972.

The software component of this research was

Smalltalk, which went on to have a life of its own

independent of the Dynabook concept. Kay wanted the

Dynabook concept to embody the learning theories of

Jerome Bruner and some of what Seymour Papert—

who had studied with developmental psychologist

Jean Piaget and who was one of the inventors of the

Logo programming language — was proposing.

First use of Total Internal Reflection and multiple

simultaneous inputs. This invention enables a person

to paint or draw directly into color television. No

special probe or stylus is required since a person can

use brushes or pens, fingertips, rubber stamps, or any

drawing or painting object whatsoever. At the same

time, a person can play his free hand over a piano-like

keyboard to synthesize images by manipulating or

altering the images or forms as they are introduced. It

is applicable to graphic productions of all sorts,

computer input-output graphic processing systems, for

visualizing mathematical transformations, or for use

with scanning lasers or electron microscopes that etch

or score.

In addition, every point touched on the draw or

paintscreen is introduced, so that multiple tipped

styluses can introduce large shapes, or rubber stamp-like shapes can introduce symbols or letters, instantly

positioned by hand anywhere on the paintscreen and

therefore on the output area.

One of the early implementations of mutual

capacitance touchscreen technology was developed at

CERN in 1977[9][10] based on their capacitance touch

screens developed in 1972 by Danish electronics

engineer Bent Stumpe. This technology was used to

develop a new type of human machine interface (HMI)

for the control room of the Super Proton Synchrotron

particle accelerator.

CERN: Stumpe & Beck

Beck/Stumpe

1977

In a handwritten note dated 11 March 1972, Stumpe

presented his proposed solution – a capacitive touch

screen with a fixed number of programmable buttons

presented on a display. The screen was to consist of a

set of capacitors etched into a film of copper on a

sheet of glass, each capacitor being constructed so

that a nearby flat conductor, such as the surface of a

finger, would increase the capacitance by a significant

amount. The capacitors were to consist of fine lines

etched in copper on a sheet of glass – fine enough (80

μm) and sufficiently far apart (80 μm) to be invisible

(CERN Courier April 1974 p117). In the final device, a

simple lacquer coating prevented the fingers from

actually touching the capacitors.

At the time, the Super Proton Synchrotron, the

predecessor to today’s Large Hadron Collider, was

nearing completion and required complex settings and

controls, rendering its control room cumbersome and

costly. Beck and Stumpe devised a system where all

aspects could be controlled from only six

touchscreens, through displays and menus navigable

by touch alone.

Unlike a mouse, once in contact with the screen, the

user can exploit the friction between the finger and the

screen in order to apply various force vectors. For

example, without moving the finger, one can apply a

force along any vector parallel to the screen surface,

including a rotational one. These techniques were

described as early as 1978.

MIT: Herot & Weinzapfel

The screen demonstrated by Herot & Weinzapfel could

sense 8 different signals from a single touch point: position in X & Y, force in X, Y, & Z (i.e., shear in X & Y

& Pressure in Z), and torque in X, Y & Z.

Herot, C., & Weinzapfel, G. One-point touch input of vector information for

computer displays. Computer Graphics, 1978, 12(3), 210-216

1978

While we celebrate how clever we are to have multitouch sensors, it is nice to have this reminder that

there are many other dimensions of touch screens that

can be exploited in order to provide rich interaction

University of Toronto: Flexible Machine Interface by Nimish Mehta

Mehta, Nimish (1982), A Flexible Machine Interface,

M.A.Sc. Thesis, Department of Electrical Engineering,

University of Toronto supervised by Professor K.C.

Smith. In 1982, the first human-controlled multitouch device

was developed at the University of Toronto by Nimish

Mehta. It wasn't so much a touchscreen as it was a

touch-tablet. The Input Research Group at the

university figured out that a frosted-glass panel with a

camera behind it could detect action as it recognized

the different "black spots" showing up on-screen. Bill

Buxton has played a huge role in the development of

multitouch technology (most notably with the

PortfolioWall, to be discussed a bit later), and he

deemed Mehta's invention important enough to include

in his informal timeline of computer input devices:

1982

The touch surface was a translucent plastic filter

mounted over a sheet of glass, side-lit by

a fluorescent lamp. A video camera was mounted

below the touch surface, and optically captured the

shadows that appeared on the translucent filter. (A

mirror in the housing was used to extend the optical

path.) The output of the camera was digitized and fed

into a signal processor for analysis.

Gestural interaction was introduced by Myron Krueger,

an American computer artist who developed an optical

system that could track hand movements. Krueger

introduced Video Place (later called Video Desk) in

1983, though he'd been working on the system since

the late 1970s. It used projectors and video cameras to

track hands, fingers, and the people they belonged to.

Unlike multitouch, it wasn't entirely aware of who or

what was touching, though the software could react to

different poses. VideoPlace: Myron Krueger

1983

Though it wasn't technically touch-based—it relied on

"dwell time" before it would execute an action—

Buxton regards it as one of the technologies that

"'wrote the book' in terms of unencumbered… rich

gestural interaction. The work was more than a decade

ahead of its time and was hugely influential, yet not as

acknowledged as it should be." Krueger also

pioneered virtual reality and interactive art later on in

his career.

Note that this video shows one of the first uses of the

pinch gesture.

2:20 in the video

First Pinch Gesture

Myron W. Krueger, Thomas Gionfriddo, and Katrin Hinrichsen. 1985. VIDEOPLACE—An artificial

reality. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI

'85). ACM, New York, NY, USA, 35-40.

1985

A prototype touch-sensitive tablet is presented. The tablet's main innovation is that it is capable of sensing more than one point of contact at a time. In addition to being able to provide position coordinates, the tablet also gives a measure of degree of contact, independently for each point of contact. In order to enable multitouch sensing, the tablet surface is divided into a grid of discrete points. The points are scanned using a recursive area subdivision algorithm. In order to minimize the resolution lost due to the discrete nature of the grid, a novel interpolation scheme has been developed. Finally, the paper briefly discusses how multi-touch sensing, interpolation, and degree of contact sensing can be combined to expand our vocabulary in human-computer interaction.

University of Toronto: Bill Buxton

SK Lee, William Buxton, and K. C. Smith. 1985. A multi-touch three dimensional touch-sensitive tablet. In

Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '85). ACM, New York, NY,

USA, 21-25.
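The idea of scanning a sensor grid by recursive area subdivision can be sketched as follows. This is an illustration under stated assumptions, not the algorithm from the paper: it assumes the hardware can answer "is anything touching inside this rectangle?" in a single measurement (the hypothetical `region_active` probe, simulated here from a list of lists), and it omits the paper's interpolation scheme entirely.

```python
from typing import Callable, List, Tuple

Probe = Callable[[int, int, int, int], bool]


def scan(x0: int, y0: int, x1: int, y1: int,
         region_active: Probe, hits: List[Tuple[int, int]]) -> None:
    """Collect touched grid points inside the half-open rectangle [x0,x1) x [y0,y1)."""
    if x0 >= x1 or y0 >= y1 or not region_active(x0, y0, x1, y1):
        return  # nothing is touching this region, so skip all of its points
    if x1 - x0 == 1 and y1 - y0 == 1:
        hits.append((x0, y0))  # a single active point
        return
    if x1 - x0 >= y1 - y0:  # split the longer side in half
        xm = (x0 + x1) // 2
        scan(x0, y0, xm, y1, region_active, hits)
        scan(xm, y0, x1, y1, region_active, hits)
    else:
        ym = (y0 + y1) // 2
        scan(x0, y0, x1, ym, region_active, hits)
        scan(x0, ym, x1, y1, region_active, hits)


def make_probe(grid: List[List[int]], counter: List[int]) -> Probe:
    """Simulated sensor: counts probes and reports whether any point in a region is touched."""
    def region_active(x0: int, y0: int, x1: int, y1: int) -> bool:
        counter[0] += 1
        return any(grid[y][x] > 0 for y in range(y0, y1) for x in range(x0, x1))
    return region_active


# Made-up 8x8 surface with two simultaneous contacts of different strength.
grid = [[0] * 8 for _ in range(8)]
grid[2][1] = 5
grid[5][6] = 9
probe_count = [0]
touches: List[Tuple[int, int]] = []
scan(0, 0, 8, 8, make_probe(grid, probe_count), touches)
```

With only a few contacts, most of the surface is ruled out by a handful of coarse probes, so the scan needs far fewer measurements than visiting every grid point in turn.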

1985

First, it can sense the degree of contact in a continuous manner. Second, it can sense the amount and location of a number of simultaneous points of contact. These two features, when combined with touch sensing, are very important in respect to the types of interaction that we can support. Some of these are discussed below, but see Buxton, Hill, and Rowley (1985) and Brown, Buxton and Murtagh (1985) for more detail. The tablet which we present is a continuation of work done in our lab by Sasaki et al. (1981) and Mehta (1982).

Pinch gesture in envisionment: 3:06

Xerox PARC: Digital Desk

1992

CMU: Multitouch Gesture Recognition

1992

First smartphone and first mobile device with touch

input.

IBM Simon

1992

Diffused Illumination

Diffused Illumination (Rear)

A diffuser on a pane of glass is illuminated using

invisible infrared light sources. When the fingers touch

the diffuser, the touches light up as they are in focus.

This allows easy background removal, and blob

detection using computer vision libraries such as

OpenCV.
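Background removal and blob detection can be sketched without any library to show what the vision step does. This is an illustrative pure-Python version, assuming grayscale frames stored as lists of rows and a hand-picked brightness threshold. A real system would typically use OpenCV routines instead, and `find_blobs` is a hypothetical name.

```python
from collections import deque
from typing import List, Tuple

Image = List[List[int]]


def find_blobs(frame: Image, background: Image,
               threshold: int = 40) -> List[Tuple[float, float]]:
    """Return the (x, y) centroid of every bright blob that is not in the background."""
    h, w = len(frame), len(frame[0])
    # Background removal: keep pixels that are clearly brighter than the empty scene.
    mask = [[frame[y][x] - background[y][x] > threshold for x in range(w)]
            for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Flood fill one 4-connected blob starting at (x, y).
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            cx = sum(p[0] for p in pixels) / len(pixels)
            cy = sum(p[1] for p in pixels) / len(pixels)
            centroids.append((cx, cy))
    return centroids
```

Each centroid is one candidate touch point. Tracking touches over time would additionally require matching centroids between consecutive frames.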

The TUIO toolkit allows anyone with an infrared camera

to build these interfaces and supply multitouch

coordinates over Open Sound Control (UDP) to an

application.
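As a rough sketch of what travels over the wire, the snippet below encodes a single TUIO cursor update as an Open Sound Control message. It assumes the TUIO 1.1 `/tuio/2Dcur` profile, whose `set` message carries a session id, a normalized position, a velocity vector and an acceleration value. Real trackers wrap such messages in an OSC bundle together with `alive` and `fseq` messages, which is omitted here, and the helper names are hypothetical.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def tuio_cursor_set(session_id: int, x: float, y: float,
                    vx: float = 0.0, vy: float = 0.0, accel: float = 0.0) -> bytes:
    """Build one '/tuio/2Dcur set' message (x and y are normalized to the range 0..1)."""
    return (osc_string("/tuio/2Dcur")      # OSC address pattern
            + osc_string(",sifffff")       # type tags: string, int32, five float32
            + osc_string("set")
            + struct.pack(">i5f", session_id, x, y, vx, vy, accel))  # big-endian


packet = tuio_cursor_set(session_id=7, x=0.25, y=0.5)
```

The resulting bytes could be sent to a listening application with a UDP socket. Port 3333 is the conventional TUIO default, but that too should be treated as an assumption of this sketch.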

The HoloWall is a wall-sized computer display that

allows users to interact without special pointing

devices. The display part consists of a glass wall with

rear-projection sheet behind it. A video projector

behind the wall displays images on the wall. One of the

first Diffused Illumination multitouch systems.

HoloWall (SONY Jun Rekimoto)

Nobuyuki Matsushita and Jun Rekimoto. 1997. HoloWall: designing a finger, hand, body, and object

sensitive wall. In Proceedings of the 10th annual ACM symposium on User interface software and

technology (UIST '97). ACM, New York, NY, USA, 209-210.

1998

Inputs are recognized in an interesting way. This is

done with infrared (IR) lights (we use an array of IR

light-emitting diodes (LEDs)) and a video camera with

an IR filter (an optical filter that blocks light below

840 nm) installed behind the wall.

Since the rear-projection panel is semi-opaque and

diffusive, the user's shape or any other objects in front

of the screen are invisible to the camera. However,

when a user moves a finger close enough to the screen

(between 0 cm and 30 cm, depending on the threshold

value of the recognition software), it reflects IR light

and thus becomes visible to the camera. With a simple

image processing technique such as frame

subtraction, the finger shape can easily be separated

from the background.

Front-projected capacitive. One of the first multitouch

tabletops, capable of identifying multiple people

through capacitors in the chairs.

DiamondTouch (MERL)

2001

MERL/University of Calgary

(2006)

Acquired by Apple in 2005 and basis for iPhone. Dr.

Westerman and his co-developer, John G. Elias, a

professor in the department, are trying to market their

technology to others whose injuries might prevent

them from using a computer. The TouchStream Mini

from their company, FingerWorks

(www.fingerworks.com), uses a thin sensor array that

recognizes fingers as they move over the keyboard.

The sensors monitor disturbances in the touch pad's

electric field, not pressure, so typing requires only a

very light touch. Unlike similar touch pads on handheld computers or on laptops, which only recognize

input from a single point, this surface can process

information from multiple points, allowing for more

rapid typing.

Fingerworks

Westerman, Wayne (1999). Hand Tracking, Finger Identification, and Chordic Manipulation on a Multi-Touch Surface. U of Delaware PhD Dissertation.

1992

2002

''We thought there would already be something out

there that would do multifinger input,'' Mr. Westerman

said. ''We ended up building the whole thing from

scratch.''

The TouchStream technology also replaces computer

mouse movements with gestures across the screen. To

issue commands, the user runs various finger

combinations over the pad. For ''cut,'' the thumb and

middle finger are pulled together in a snipping motion,

and for ''open,'' the thumb and next three fingers are

drawn in a circle on the pad, as if they are opening a

jar. (''Close'' is the opposite motion.) Because the

software knows the difference between a typing

movement and a mouse or command gesture, the user

can give mouse commands anywhere on the pad, even

right on top of the keyboard area.

SmartSkin is one of the earliest examples of

multi-touch systems developed in 2001 by Jun

Rekimoto at Sony Computer Science Laboratories.

It is based on capacitive sensing and recognizes

multiple fingers / hands. This work was first

presented at ACM CHI 2002.

SmartSkin (SONY Jun Rekimoto)

2002

Diffused Illumination. First Modern Multitouch screen.

TouchLight (MSR Andy Wilson)

Andrew D. Wilson. 2004. TouchLight: an imaging touch screen and display for gesture-based interaction.

In Proceedings of the 6th international conference on Multimodal interfaces (ICMI '04). ACM, New York,

NY, USA, 69-76.

2004

The Lemur was the first 'multi-touch enabled'

interface that was commercially available. Conceived

in late 2001 before multi-touch screens were even

available, it was not until 2003 that they finally realised

the first multi-touch capable screen. Development

continued and by late 2004 the first fully functional

Lemur was unveiled at IRCAM. The first version of

Lemur was commercially available from Jazz Mutant in

2005.

JazzMutant Lemur

2005

The Lemur Input Device is a highly customizable multitouch device from French company JazzMutant, which

serves as a controller for musical devices such as

synthesizers and mixing consoles, as well as for other

media applications such as video performances. As an

audio tool, the Lemur's role is equivalent to that of a

MIDI controller in a MIDI studio setup, except that the

Lemur uses the Open Sound Control (OSC) protocol, a

high-speed networking replacement for MIDI. The

controller is especially well-suited for use with Reaktor

and Max/MSP, tools for building custom software

synthesizers. It is currently discontinued in light of

competition from current multitouch input computers.

Frustrated Total Internal Reflection

When light encounters an interface to a medium with a

lower index of refraction (e.g. glass to air), the light

becomes refracted to an extent which depends on its

angle of incidence, and beyond a certain critical angle,

it undergoes total internal reflection (TIR). Fiber optics,

light pipes, and other optical waveguides rely on this

phenomenon to transport light efficiently with very little

loss. However, another material at the interface can

frustrate this total internal reflection, causing light to

escape the waveguide there instead.
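The critical angle follows from Snell's law: total internal reflection occurs when the angle of incidence exceeds arcsin(n_outside / n_inside). The sketch below works this out for a sheet surrounded by air. The refractive index of about 1.49, a typical figure for acrylic, is an assumption used for illustration rather than a value from the slides.

```python
import math


def critical_angle_deg(n_inside: float, n_outside: float = 1.0) -> float:
    """Critical angle in degrees for light travelling from n_inside toward n_outside."""
    if n_outside >= n_inside:
        raise ValueError("total internal reflection needs n_inside > n_outside")
    return math.degrees(math.asin(n_outside / n_inside))


# Assumed index for an acrylic sheet in air: the angle comes out at roughly 42 degrees.
acrylic_to_air = critical_angle_deg(1.49)
```

Light injected into the edge of the sheet at shallower grazing angles stays trapped. A fingertip pressed on the surface replaces the air at the contact point with a denser medium, so the condition no longer holds there and light scatters out toward the camera.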

This phenomenon is well known and has been used in

the biometrics community to image fingerprint ridges

since at least the 1960s [25]. The first application to

touch input appears to have been disclosed in 1970 in

a binary device that detects the attenuation of light

through a platen waveguide caused by a finger in

contact [7].

Mueller exploited the phenomenon in 1973 for an

imaging touch sensor that allowed users to “paint”

onto a display using free-form objects, such as

brushes, styli and fingers.

Frustrated Total Internal Reflection (FTIR)

Jeff Han NYU

Jefferson Y. Han. 2005. Low-cost multi-touch sensing through frustrated total internal reflection. In

Proceedings of the 18th annual ACM symposium on User interface software and technology (UIST '05). ACM,

New York, NY, USA, 115-118.

1992

2005

Perceptive Pixel

Reactable Universitat Pompeu Fabra in Barcelona, Spain

2006

Apple iPhone

2007

Microsoft Surface (Andrew Wilson)

2008

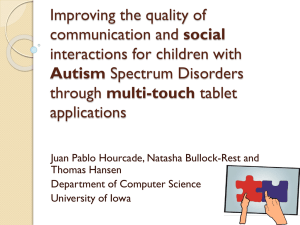

So what do we do with Multitouch interfaces?

How do we design for multi finger input?

Natural User Interface (NUI) Design Principles

Design directly for finger and gestural input.

- Principle of Performance Aesthetics

- Principle of Direct Manipulation: What you do is what you get.

- Principle of Scaffolding

- Principle of Context-Awareness

- Principle of the Super Real: Design physical interactions with digital information

- Principle of Spatial Representation

- Principle of Seamlessness

Principle of Performance Aesthetics

Unlike GUI experiences that focus on and privilege accomplishment and task completion, NUI experiences focus on the joy of doing. NUI experiences should be like an ocean voyage: the pleasure comes from the interaction, not the accomplishment.

Daniel Wigdor and Dennis Wixon. 2011. Brave NUI World: Designing Natural User Interfaces for Touch and Gesture. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA.

Principle of Direct Manipulation

Touch screens and gestural interaction functionality

enable users to feel like they are physically touching

and manipulating information with their fingertips.

Instead of what you see is what you get, successful

NUI interfaces embody the principle of what you do is

what you get.

Principle of Scaffolding

Successful natural user interfaces feel intuitive and

joyful to use. Information objects in a NUI behave in a

manner that users intuitively expect. Unlike a

successful GUI in which many options and commands

are presented all at once and depicted with very subtle

hierarchy and visual emphasis, a successful NUI

contains fewer options with interaction scaffolding.

Scaffolding is a strong cue or guide that sets a user’s expectation by giving them an indication of how the interaction will unfold. Good NUIs support users as they engage with the system and unfold or reveal themselves through actions in a natural manner.

Example: Bumptop (UofT)

2006

Summary

Section II: Empirical Work

Toolglasses and Magic Lenses

Eric A. Bier, Maureen C. Stone, Ken Pier, William Buxton, and Tony D. DeRose. 1993. Toolglass and magic lenses:

the see-through interface. In Proceedings of the 20th annual conference on Computer graphics and interactive

techniques (SIGGRAPH '93). ACM, New York, NY, USA, 73-80.

Efficiency of Two-Handed vs. One-Handed Input

Andrea Leganchuk, Shumin Zhai, and William Buxton. 1998. Manual and cognitive benefits of two-handed input: an

experimental study. ACM Trans. Comput.-Hum. Interact. 5, 4 (December 1998), 326-359.

One of the recent trends in computer input is to utilize users’ natural bimanual motor skills. We have observed that bimanual manipulation may bring two types of advantages to human-computer interaction: manual and cognitive. Manual benefits come from increased time-motion efficiency, due to the twice as many degrees of freedom simultaneously available to the user. Cognitive benefits arise as a result of reducing the load of mentally composing and visualizing the task at an unnaturally low level imposed by traditional unimanual techniques.

Area sweeping was selected as our experimental task. It is representative of what one encounters, for example, when sweeping out the bounding box surrounding a set of objects in a graphics program. Such tasks cannot be modeled by Fitts' Law alone (Fitts, 1954) and have not been previously studied in the literature. In our experiments, two bimanual techniques were compared with the conventional one-handed GUI approach. Both bimanual techniques employed the two-handed “stretchy” (Pinch) technique first demonstrated by Krueger (1983). We also incorporated the “Toolglass” technique introduced by Bier, Stone, Pier, Buxton and DeRose (1993). Overall, the bimanual techniques resulted in significantly faster performance than the status quo one-handed technique, and these benefits increased with the difficulty of mentally visualizing the task, supporting our bimanual cognitive advantage hypothesis.

hypothesis. There was no significant difference

Efficiency of Two-Handed vs. One-Handed Input

User Defined Gestures (Wobbrock, Morris, Wilson 2009)

Jacob O. Wobbrock, Meredith Ringel Morris, and Andrew D. Wilson. 2009. User-defined gestures for surface

computing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '09). ACM, New

York, NY, USA, 1083-1092.

Many surface computing prototypes have employed

gestures created by system designers. Although such

gestures are appropriate for early investigations, they

are not necessarily reflective of user behavior. We

present an approach to designing tabletop gestures

that relies on eliciting gestures from non-technical

users by first portraying the effect of a gesture, and

then asking users to perform its cause. In all, 1080

gestures from 20 participants were logged, analyzed,

and paired with think-aloud data for 27 commands

performed with 1 and 2 hands. Our findings indicate

that users rarely care about the number of fingers they

employ, that one hand is preferred to two, that desktop

idioms strongly influence users’ mental models, and

that some commands elicit little gestural agreement,

suggesting the need for on-screen widgets. We also

present a complete user-defined gesture set,

quantitative agreement scores, implications for surface

technology, and a taxonomy of surface gestures. Our

results will help designers create better gesture sets

informed by user behavior.

User Defined Gestures (Wobbrock, Morris, Wilson 2009)

The 27 commands for which participants chose

gestures. Each command’s conceptual complexity was

rated by the 3 authors (1=simple, 5=complex). During

the study, each command was presented with an

animation and recorded verbal description.

Agreement Scores

Group size was then used to compute an agreement

score A that reflects, in a single number, the degree of

consensus among participants. (This process was

adopted from prior work [33].)

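$$A = \frac{\sum_{r \in R} \sum_{P_i \subseteq P_r} \left( \frac{|P_i|}{|P_r|} \right)^2}{|R|} \qquad (1)$$

In Eq. 1, $r$ is a referent in the set of all referents $R$, $P_r$ is the set of proposed gestures for referent $r$, and $P_i$ is a subset of identical gestures from $P_r$. The range for $A$ is $[|P_r|^{-1}, 1]$. As an example, consider agreement for move a little (2-hand) and select single (1-hand). Both had four groups of identical gestures. The former had groups of size 12, 3, 3, and 2; the latter of size 11, 3, 3, and 3. For move a little, we compute

$$A_{\text{move a little}} = \left(\tfrac{12}{20}\right)^2 + \left(\tfrac{3}{20}\right)^2 + \left(\tfrac{3}{20}\right)^2 + \left(\tfrac{2}{20}\right)^2 = 0.42$$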
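The agreement score is easy to check in code. For one referent it is the sum, over the groups of identical gestures, of the squared share of proposals in each group, and the overall score is the mean over referents. The sketch below uses the group sizes quoted in the example. The function names are hypothetical.

```python
from typing import Iterable, Sequence


def referent_agreement(group_sizes: Sequence[int]) -> float:
    """Agreement for one referent, given the sizes of its groups of identical gestures."""
    total = sum(group_sizes)  # number of proposed gestures for this referent
    return sum((size / total) ** 2 for size in group_sizes)


def overall_agreement(referents: Iterable[Sequence[int]]) -> float:
    """Mean agreement across all referents."""
    scores = [referent_agreement(groups) for groups in referents]
    return sum(scores) / len(scores)


move_a_little = referent_agreement([12, 3, 3, 2])   # about 0.42
select_single = referent_agreement([11, 3, 3, 3])   # about 0.37
```

The extremes match the stated range: if all 20 participants propose the same gesture the score is 1, and if every proposal is unique it falls to 1/20.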

The overall agreement for 1- and 2-hand gestures was $A_{1H} = 0.32$ and $A_{2H} = 0.28$, respectively. Referents’ conceptual complexities (Table 1) correlated significantly and inversely with their agreement ($r = -.52$, $F_{1,25} = 9.51$, $p < .01$), as more complex referents elicited lesser gestural agreement.

Fat Finger Problem

We present two devices that exploit the new model in order to improve touch accuracy. Both model touch on a per-posture and per-user basis in order to increase accuracy by applying respective offsets. Our RidgePad prototype extracts posture and user ID from the user’s fingerprint during each touch interaction.

Christian Holz and Patrick Baudisch. 2010. The generalized perceived input point model and how to double touch accuracy by extracting fingerprints. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '10). ACM, New York, NY, USA, 581-590.
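The idea of correcting touches with per-user, per-posture offsets can be sketched as a lookup table learned from calibration touches. This is a simplified illustration, not the RidgePad algorithm: user and posture are given here as plain labels, whereas the prototype derives them from the fingerprint, and all names and numbers are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float]
Key = Tuple[str, str]  # (user id, posture label)


def learn_offsets(samples: Iterable[Tuple[str, str, Point, Point]]) -> Dict[Key, Point]:
    """Average (sensed - intended) error for each (user, posture) pair."""
    sums: Dict[Key, list] = defaultdict(lambda: [0.0, 0.0, 0])
    for user, posture, sensed, target in samples:
        acc = sums[(user, posture)]
        acc[0] += sensed[0] - target[0]
        acc[1] += sensed[1] - target[1]
        acc[2] += 1
    return {key: (sx / n, sy / n) for key, (sx, sy, n) in sums.items()}


def correct_touch(sensed: Point, user: str, posture: str,
                  offsets: Dict[Key, Point]) -> Point:
    """Subtract the learned offset; unknown (user, posture) pairs are left uncorrected."""
    dx, dy = offsets.get((user, posture), (0.0, 0.0))
    return sensed[0] - dx, sensed[1] - dy


# Made-up calibration data: this user, with a flat finger, lands 2 mm right and 1 mm high.
calibration = [
    ("u1", "flat", (10.0, 20.0), (8.0, 21.0)),
    ("u1", "flat", (5.0, 5.0), (3.0, 6.0)),
]
offsets = learn_offsets(calibration)
corrected = correct_touch((12.0, 9.0), "u1", "flat", offsets)
```

A contact-area baseline corresponds to using the same offset for everyone, which is why systematic differences between users and finger postures show up as error.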

RidgePad

The fingerprint interface was implemented using RidgePad and employed the algorithm described in the previous section. The control interface simulated a traditional touchpad interface using a Fingerworks touchpad. It received the same input from the fingerprint scanner as the fingerprint interface. However, this condition did not use the fingerprint features and instead reduced the fingerprint to a contact point at the center of the contact area.

The optical tracker interface was implemented based on a six-degree-of-freedom optical tracking system (an 8-camera OptiTrack V100 system). To allow the system to track the participant’s fingertip, we attached five 3 mm retro-reflective markers to the participant’s fingernail (Figure 10). The extreme accuracy of the optical tracker made this interface a “gold standard” condition that allowed us to obtain an upper bound for the performance enabled by the generalized perceived input point model.

In a user study, RidgePad achieved 1.8 times higher accuracy than a simulated capacitive baseline condition. A prototype based on optical tracking achieved even 3.3 times higher accuracy. The increase in accuracy can be used to make touch interfaces more reliable, to pack up to 3.3² > 10 times more controls into the same surface, or to bring touch input to very small mobile devices.

Fitts’ Law for Fat Fingers (Bi, Li & Zhai 2013)

In finger input, this assumption faces challenges.

Obviously a finger per se is less precise than a mouse

pointer or a stylus. Variability in endpoints was

observed no matter how quickly/slowly a user

performed the task. For example, Holz and Baudisch’s

studies [13, 14] showed that even when users were

instructed to take as much time as they wanted to

acquire a target on a touchscreen, there was still a

large amount of variability in endpoints.

These observations indicated that a portion of the

variability in endpoints is independent of the

performer's desire to follow the specified precision and

cannot be controlled by a speed-accuracy tradeoff.

This portion of variability reflects the absolute precision

of the finger input. In other words, the observed

variability in the endpoints may originate from two

sources: the relative precision governed by the speed-accuracy tradeoff of human motor systems, and the

absolute precision uncertainty of the finger per se.

More formally, we propose a dual normal distribution

hypothesis
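A sketch of what the two-source view implies, assuming the relative and absolute components are independent normal variables so that their variances add: the speed-accuracy component can be recovered by subtracting the finger's absolute variance from the observed endpoint variance. The index of difficulty below uses an effective width of sqrt(2πe)·σ, the usual effective-width convention. Treat the exact form and all numbers as assumptions of this illustration.

```python
import math


def relative_sigma(sigma_observed: float, sigma_absolute: float) -> float:
    """Spread attributable to the speed-accuracy tradeoff (same units as the inputs)."""
    variance = sigma_observed ** 2 - sigma_absolute ** 2
    if variance <= 0:
        raise ValueError("observed spread must exceed the absolute finger spread")
    return math.sqrt(variance)


def index_of_difficulty(amplitude: float, sigma: float) -> float:
    """Fitts-style index of difficulty in bits, with effective width sqrt(2*pi*e) * sigma."""
    effective_width = math.sqrt(2 * math.pi * math.e) * sigma
    return math.log2(amplitude / effective_width + 1)


# Made-up values in millimetres: observed endpoint spread 5, absolute finger spread 3.
sigma_r = relative_sigma(5.0, 3.0)
id_corrected = index_of_difficulty(100.0, sigma_r)
id_uncorrected = index_of_difficulty(100.0, 5.0)
```

Removing the absolute component narrows the effective width, so the same movement counts as a harder task than the raw endpoint spread would suggest.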

Summary

1. Long History of Touch Input

2. Multitouch history dates back to 1982

3. Natural User Interfaces

4. Fat Finger problem

5. Time-efficiency of bimanual input

Questions?