Annual Conference on Learning and Assessment

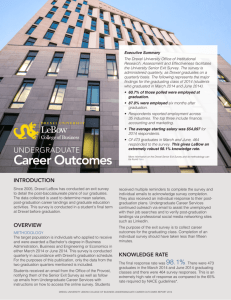
