Image retrieval: current techniques, promising directions and open

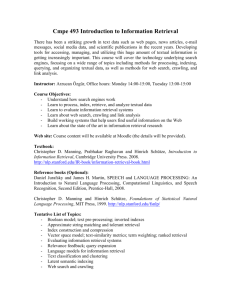
