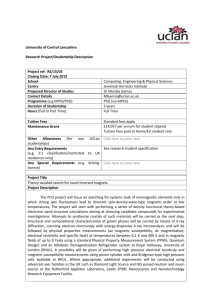

Engineering and Environmental Geophysics
