Research Design

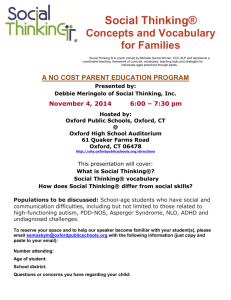

Skopje, 6-7 November 2008

Daniel Bochsler and Lucas Leemann
Center for Comparative and International Studies, University of Zurich
bochsler@ipz.uzh.ch; leemann@ipz.uzh.ch

Introduction

The goal of this workshop session is to review the current literature on issues of research design in the social sciences and to allow the participants to improve their skills in designing research. The workshop starts off with a short review of some basic principles of scientific research, before turning to two of the main goals of scientific work, namely descriptive and causal inference. In the second part, different research designs are discussed, relying on the basic distinction between experimental and quasi-experimental work. Despite the important forays of experimental studies into the social sciences (see Druckman, Green, Kuklinski and Lupia, 2006), quasi-experimental designs will be discussed in more detail. Special emphasis will be put on the threats to our inferences in such quasi-experimental designs. The third part will consist of a discussion of a few chosen research designs to highlight their strengths and weaknesses. Finally, in a short training session, the participants will sketch possible research designs for chosen research questions, drawing on their own experience.

Plan, readings and literature

Most of the literature on which this workshop will rely comes from King, Keohane and Verba (1994), a book that, from a very specific perspective, highlights the similarities between quantitative and qualitative approaches. Needless to say, this book has attracted a series of critiques (e.g., Caporaso, 1995; Collier, 1995; Rogowski, 1995; Tarrow, 1995; Brady and Collier, 2004). Other useful books to consult are the following: Cook and Campbell (1979), Achen (1986), Gerring (2001), Geddes (2003), George and Bennett (2005), Weingast and Wittman (2006), Gerring (2007), Box-Steffensmeier, Brady and Collier (2008), and Reus-Smit and Snidal (2008).

6 November 2008, morning session, 9.30h-12.30h

1. Introduction (20’)

In the first part of the workshop, we discuss why methodology plays a central role in empirical research, highlight the different goals of descriptive studies and of studies that are interested in causal mechanisms, and discuss how hypotheses are best constructed so that they can be tested with empirical data. We briefly discuss why both quantitative and qualitative observations can help us draw empirically based conclusions.

1.1. What does scientific research mean? (15’)
Mandatory reading: King, Keohane and Verba (1994, 3-12)

1.2. Descriptive and causal inference (30’)
Mandatory readings: King, Keohane and Verba (1994, 34-99); Levitt and Dubner (2005)
Further readings: (Donohue and Levitt, 2001; Holland, 1986; Pearl, 2001; Brady and Seawright, 2004; Morgan and Winship, 2007; Brady, 2008)

1.3. Where to start from: asking questions and getting the hypotheses right (30’ + 30’ exercise)
Mandatory readings: Grofman (2001, 1-11); King, Keohane and Verba (1994, 99-114)
Further readings: (Goertz, 2006, 1-67, 237-268; Goertz, 2008; Ragin, 1987)
Exercise: Hypotheses

6 November 2008, afternoon session, 14h-17.30h

2. Research designs

Before we start to investigate our cases, it is important to think about how we should structure our research so that in the end we can draw conclusions about the cases we are interested in. In this part of the workshop, we discuss different research designs that are often applied in the social sciences and that can lead to meaningful and credible conclusions.

2.1. Introduction (10’)
Mandatory reading: King, Keohane and Verba (1994, 13-33, 115-128)

2.2. Experimental designs (45’)
Experimental research is better known from the natural sciences, such as chemistry, but there are important reasons why we should think about experiments in the social sciences too. On the one hand, experiments are extremely powerful when we want to draw causal conclusions about a mechanism. On the other hand, we will discuss the limits of experimental research in the social sciences.
Mandatory reading: Druckman, Green, Kuklinski and Lupia (2006)
Further reading: (Kinder, 1993)

2.3. Quasi-experimental designs and ex-post facto designs
Usually, we face situations where experiments are not feasible, or where it is hard to generalize from experimental data to general effects. This is why most of our research is based on observations of empirical reality. Sometimes we try to imitate experiments and can draw conclusions from quasi-experiments, but often this is not possible, and then we apply ex-post facto designs. We discuss how we need to choose our observations for quasi-experimental research or ex-post facto designs, and we discuss general problems that occur in this research, whether we apply quantitative or qualitative methods.

2.3.1. Creating counter-factual cases; how to make conclusions out of observational data (30’)
Mandatory reading: King, Keohane and Verba (1994, 75-91)
Further readings: (Cook and Campbell, 1979; Achen, 1986; Collier and Mahoney, 1996; Fearon, 1991; Tetlock and Belkin, 1996; Przeworski, 2008)

7 November 2008, morning session, 9.30h-12.00h

2.3.2. Typical quasi-experimental research designs (30’ + 30’ exercise)
Mandatory reading: Card and Krueger (1994)

2.3.3. Ex-post facto designs: Comparing most-similar cases or statistical controls (30’)
Mandatory reading: Card and Krueger (1994)

2.3.4. Populations, samples, random selection of cases (20’)
Mandatory reading: King, Keohane and Verba (1994, 115-149)
Further readings: (Card and Krueger, 1994)

2.3.5. Problems in ex-post facto designs: Endogeneity (20’)
Mandatory reading: Colomer (2005)
Further readings: (Hug, 2006; King, Keohane and Verba, 1994, 91-97; Przeworski, 2004, 2008)

2.3.6. Problems in ex-post facto designs: Selection bias, selecting on the (in)dependent variable (45’)
Mandatory readings: Geddes (1991); King, Keohane and Verba (1994, 115-149)
Further readings: (Achen and Snidal, 1989; Geddes, 1991; Geddes, 2003; Hug, 2003; Bodenstein, 2004; Plümper, Schneider and Troeger, 2005 forthcoming; Hug, 2006; Hewitt and Goertz, 2005; Mahoney and Goertz, 2004)

2.3.7. Problems in quasi-experimental research: Multicollinearity (15’)
Further readings: (Wooldridge, 2008)

7 November 2008, afternoon session, 13.30h-18.00h

3. Research designs in practice

In the practical part of the workshop, you will have the opportunity to elaborate a few research questions and research designs in groups, considering the aspects that we discussed in the plenary lectures. Afterwards, we will discuss the proposed research designs jointly.

3.1. Exercises: part A (in groups)
3.2. Exercises: part B (plenum)

4. Conclusion and evaluation of the workshop

References

Achen, Christopher H. 1986. Statistical Analysis of Quasi-Experiments. Berkeley: University of California Press.

Achen, Christopher H. and Duncan Snidal. 1989. “Rational Deterrence Theory and Comparative Case Studies.” World Politics 41(2): 143–169.

Bodenstein, Thilo. 2004. “Domestic Bargaining and the Allocation of Structural Funds in Objective 1 Regions.” Paper prepared for presentation at the Joint Session of Workshops of the ECPR, Uppsala.

Box-Steffensmeier, Janet M., Henry E. Brady and David Collier, eds. 2008. The Oxford handbook of political methodology. Oxford: Oxford University Press.

Brady, Henry. 2008. Framing social inquiry: from models of causation to statistically based causal inference. In The Oxford handbook of political methodology, ed. Janet M. Box-Steffensmeier, Henry E. Brady and David Collier. Oxford: Oxford University Press.

Brady, Henry E. and David Collier, eds. 2004. Rethinking Social Inquiry: Diverse Tools, Shared Standards. Lanham, Md.: Rowman & Littlefield.

Brady, Henry and Jason Seawright. 2004. “Framing Social Inquiry: From Models of Causation to Statistically Based Causal Inference.” Paper prepared for presentation at the Annual Meeting of the American Political Science Association, Chicago, September 2-5, 2004.

Campbell, Donald T. and Julian C. Stanley. 1963. Experimental and Quasi-Experimental Designs for Research. Boston: Houghton Mifflin Company.

Caporaso, James A. 1995. “Research Design, Falsification, and the Qualitative-Quantitative Divide.” American Political Science Review 89(2): 457–460.

Collier, David. 1995. “Translating Quantitative Methods For Qualitative Researchers: The Case of Selection Bias.” American Political Science Review 89(2): 461–466.

Collier, David and James Mahoney. 1996. “Insights and Pitfalls: Selection Bias in Qualitative Research.” World Politics 49(1): 56–91.

Colomer, Josep M. 2005. “It's Parties That Choose Electoral Systems (or, Duverger's Laws Upside Down).” Political Studies 53(1): 1–21.

Cook, Thomas D. and Donald T. Campbell. 1979. Quasi-Experimentation: Design and Analysis Issues for Field Settings. Chicago: Rand-McNally.

Donohue, John J. and Steven D. Levitt. 2001. “The Impact of Legalized Abortion on Crime.” The Quarterly Journal of Economics 116(2): 379–420.

Druckman, James N., Donald P. Green, James H. Kuklinski and Arthur Lupia. 2006. “The Growth and Development of Experimental Research in Political Science.” American Political Science Review 100(4): 627–635.

Fearon, James D. 1991. “Counterfactuals and Hypothesis Testing in Political Science.” World Politics 43(2): 169–195.

Geddes, Barbara. 1991. How the Cases You Choose Affect the Answers You Get: Selection Bias in Comparative Politics. In Political Analysis, ed. James A. Stimson. Ann Arbor: University of Michigan Press, pp. 131–152.

Geddes, Barbara. 2003. Paradigms and Sand Castles: Theory Building and Research Design in Comparative Politics. Ann Arbor: University of Michigan Press.

George, Alexander L. and Andrew Bennett. 2005. Case Studies and Theory Development. Cambridge: MIT Press.

Gerring, John. 2001. Social Science Methodology: A Critical Framework. Cambridge: Cambridge University Press.

Gerring, John. 2007. Case Study Research: Principles and Practices. New York: Cambridge University Press.

Goertz, Gary. 2006. Social Science Concepts: A User’s Guide. Princeton: Princeton University Press.

Goertz, Gary. 2008. A checklist for constructing, evaluating, and using concepts or quantitative measures. In The Oxford handbook of political methodology, ed. Janet M. Box-Steffensmeier, Henry E. Brady and David Collier. Oxford: Oxford University Press.

Grofman, Bernard. 2001. Political Science as Puzzle Solving. xxx

Hewitt, J. Joseph and Gary Goertz. 2005. “Conceptualizing Interstate Conflict: How Do Concept-Building Strategies Relate to Selection Effects?” International Interactions 31(2): 163–182.

Ho, Daniel E., Kosuke Imai, Gary King and Elizabeth A. Stuart. 2007. “Matching as Nonparametric Preprocessing for Reducing Model Dependence in Parametric Causal Inference.” Political Analysis 15: 199–236.

Holland, Paul W. 1986. “Statistics and Causal Inference.” Journal of the American Statistical Association 81: 945–960.

Hug, Simon. 2003. “Selection Bias in Comparative Research. The Case of Incomplete Datasets.” Political Analysis 11(3): 255–274.

Hug, Simon. 2006. “Endogeneity and Selection Bias in Comparative Research.” APSA-CP Newsletter 17(1): 14–17.

Kinder, Donald R. and Thomas R. Palfrey, eds. 1993. Experimental Foundations of Political Science. Ann Arbor: University of Michigan Press.

King, Gary, Robert O. Keohane and Sidney Verba. 1994. Designing Social Inquiry: Scientific Inference in Qualitative Research. Princeton: Princeton University Press.

Levitt, Steven D. and Stephen Dubner. 2005. Freakonomics: A Rogue Economist Explores the Hidden Side of Everything. William Morrow.

Mahoney, James and Gary Goertz. 2004. “The Possibility Principle: Choosing Negative Cases in Comparative Research.” American Political Science Review 98(4): 653–669.

Martin, Lisa. 1993a. “Credibility, Costs, and Institutions: Cooperation on Economic Sanctions.” World Politics 45(3): 406–432.

Martin, Lisa L. 1993b. Coercive Cooperation: Explaining Multilateral Economic Sanctions. Princeton: Princeton University Press.

Morgan, Stephen L. and Christopher Winship. 2007. Counterfactuals and Causal Inference: Methods and Principles for Social Research. 1st ed. New York: Cambridge University Press.

Pearl, Judea. 2001. Causality. New York: Cambridge University Press.

Persson, Torsten and Guido Tabellini. 2001. Political Economics: Explaining Economic Policy. Cambridge: MIT Press.

Persson, Torsten and Guido Tabellini. 2002. “Do Constitutions Cause Large Governments? Quasi-experimental Evidence.” European Economic Review 46(May 4): 908–918.

Plümper, Thomas, Christina J. Schneider and Vera E. Troeger. 2005 forthcoming. “Regulatory Conditionality and Membership Selection in the EU. Evidence from a Heckman Selection Model.” British Journal of Political Science.

Przeworski, Adam. 2004. “Institutions Matter?” Government and Opposition 39(4): 527–540.

Przeworski, Adam. 2008. Is the science of comparative politics possible? In Oxford handbook of comparative politics, ed. Carles Boix and Susan Stokes. Oxford: Oxford University Press.

Putnam, Robert D. 1993. Making Democracy Work: Civic Traditions in Modern Italy. Princeton: Princeton University Press.

Reus-Smit, Christian and Duncan Snidal, eds. 2008. The Oxford handbook of international relations. Oxford: Oxford University Press.

Rogowski, Ronald. 1995. “The Role of Theory and Anomaly in Social-Scientific Inference.” American Political Science Review 89(2): 467–470.

Tarrow, Sidney. 1995. “Bridging the Quantitative-Qualitative Divide in Political Science.” American Political Science Review 89(2): 471–474.

Tetlock, Philip E. and Aaron Belkin, eds. 1996. Counterfactual Thought Experiments in World Politics. Princeton: Princeton University Press.

Weingast, Barry R. and Donald A. Wittman, eds. 2006. The Oxford Handbook of Political Economy. Oxford: Oxford University Press.

Wooldridge, Jeffrey. 2008. Introductory Econometrics: A Modern Approach. 3rd ed. Mason: Thomson Southwestern.

11/07/11, program_skopje.doc