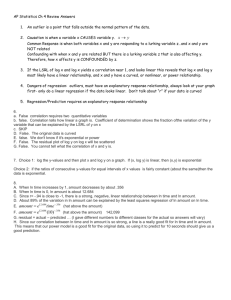

Chapter 4 - Princeton High School
