Evolving Signal Processing for Brain–Computer Interfaces

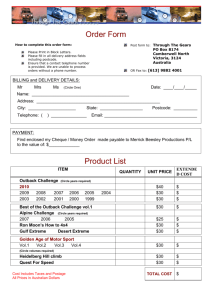
