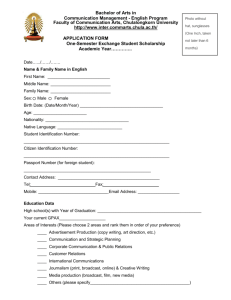

Recommender Systems

advertisement

Recommender Systems

Daniel Rodriguez

University of Alcala

Some slides and examples based on Chapter 9,

Mining of Massive Datasets, Rajaraman et al., 2011

(DRAFT)

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

1 / 101

Outline

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

2 / 101

Introduction

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

3 / 101

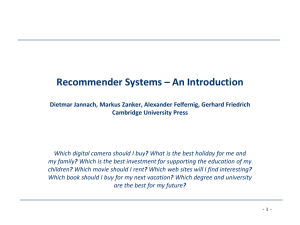

Recommender systems

Introduction

What are recommender systems?

Denition (by Ricci et al. [10])

Recommender Systems (RSs) are software tools and techniques providing

suggestions for items to be of use to a user.

The suggestions relate to various decision-making processes, such as what

items to buy, what music to listen to, or what online news to read.

Item is the general term used to denote what the system recommends to

users.

A RS normally focuses on a specic type of item (e.g., CDs, or news) and

accordingly its design, its graphical user interface, and the core

recommendation technique used to generate the recommendations are all

customized to provide useful and eective suggestions for that specic type

of item.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

4 / 101

Recommender systems

Introduction

What are recommender systems?

http://en.wikipedia.org/wiki/Recommender_system

Recommender systems (RS) are a subclass of information ltering system

that seek to predict the 'rating' or 'preference' that user would give to an

item (such as music, books, or movies) or social element (e.g. people or

groups) they had not yet considered, using a model built from the

characteristics of an item (content-based approaches) or the user's social

environment (collaborative ltering approaches).

The goal of these systems is to serve the right items to a user in a

given context or create bundle packs of similar products to optimize

long term business objectives

Foundations based on data mining, information retrieval, statistics,

etc.

Established area of research with an ACM Conference:

http://recsys.acm.org/

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

5 / 101

Recommender systems: Applications

Introduction

What are recommender systems?

Product Recommendations: Perhaps the most important use of

recommendation systems is at on-line retailers, etc., e.g, Amazon or

similar on-line vendors strive to present users suggestions of products

that they might like to buy. These suggestions are not random, but

based on decisions made by similar customers.

News Articles: News services usually classify interesting news for some

people to oer them to similar users. The similarity might be based on

the similarity of important words in the documents, or on the articles

that are read by people with similar reading tastes. (the same

principles apply to recommending blogs, videos, etc.)

Movie/Music Recommendations, e.g. Netix oers customers

recommendations of movies they might be interested in. These

recommendations are based on ratings provided by users.

Application stores

Social Networks

etc.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

6 / 101

Recommender systems: Examples

Introduction

Figure:

Windsor Aug 5-16, 2013 (Erasmus IP)

What are recommender systems?

Amazon Recommendations

Recommender Systems

7 / 101

Recommender systems: Examples

Introduction

Figure:

Windsor Aug 5-16, 2013 (Erasmus IP)

What are recommender systems?

Netix recommendations

Recommender Systems

8 / 101

Recommender systems: Examples

Introduction

Figure:

Windsor Aug 5-16, 2013 (Erasmus IP)

What are recommender systems?

Spotify recommendation

Recommender Systems

9 / 101

Recommender systems: Examples

Introduction

Windsor Aug 5-16, 2013 (Erasmus IP)

What are recommender systems?

Recommender Systems

10 / 101

Long Tail Phenomenon

Introduction

Long Tail Phenomenon

Physical institutions can only provide what is popular (shelf space is

scarce), while on-line institutions can make everything available [2]. The

greater the choice, the greater need for better lters.

Long Tail Phenomenon

Recommender systems take advantage of large amounts of data available

on the Internet to create recommendations that in bricks and mortar

stores would be impossible to do.

This phenomenon is called long tail phenomenon and explains the

impossibility of the physical stores to create personalized recommendations.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

11 / 101

Long Tail Phenomenon

Introduction

Windsor Aug 5-16, 2013 (Erasmus IP)

Long Tail Phenomenon

Recommender Systems

12 / 101

Recommender systems: History

Introduction

Recommender systems: History

The rst recommender system, Tapestry, was designed to recommend

documents from newsgroups. The authors also introduced the term

collaborative ltering

as they used social collaboration to help users with

large volume of documents.

P. Resnick, N. Iacovou, M. Suchak, P. Bergstrom, J. Riedl, GroupLens: An

Open Architecture for Collaborative Filtering of Netnews, Proc. of

1

Computer Supported Cooperative Work (CSCW), pp. 175-186, 1994

1

http://ccs.mit.edu/papers/CCSWP165.html

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

13 / 101

The Netix Prize

Introduction

Netix Prize

The importance of predicting ratings accurately is so high that on Oct

2006, Netix, decided to create a contest to try to improve its

recommendation algorithm, it was the Netix Prize:

http://www.netflixprize.com/

Netix oered

$1m to the team capable of proposing an algorithm 10%

better than their current algorithm (CineMatch). To so so, Netix

published a dataset with:

approximately 17,000 movies

500,000 users

100 million ratings (training set of 99 million ratings)

The Root-mean-square error (RMSE) was used to measure the

performance of algorithms. CineMatch had an RMSE of 0.9525.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

14 / 101

The Netix Prize: Results

Introduction

Netix Prize

The contest nished almost 3 years later and the winner improved the

algorithm in 10.6%. The winner, a team of researchers called Bellkor's

Pragmatic Chaos.

They must also share the algorithm with Netix and the source code.

In this contest participated more than 40,000 teams from 186 dierent

countries.

The winning algorithm was a composition of dierent algorithms that had

been developed independently.

Also Moshfeghi [7] made another approach adding semantics to the

algorithms to improve the accuracy.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

15 / 101

Characteristics

Introduction

Characteristics

A recommender system must be reliable providing good recommendations and showing

information about the recommendations (explanations, details, etc.). Another important

point of these systems is how they should display the information about the

recommended products:

The item recommended must be easy to identify by the user.

Also the item must be easy to evaluate/correct (I don't like it, I already have it,

etc.).

The ratings must be easy to understand and meaningful.

Explanations must provide a quick way for the user to evaluate the

recommendation.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

16 / 101

Characteristics: Degree of Personalisation

Introduction

Characteristics

The degree of personalisation of this recommendations can be dierent for

each site. Galland [4] classied the recommendations into 4 groups:

Generic: everyone receives same recommendations.

Demographic: everyone in the same category receives same

recommendations.

Contextual: recommendation depends only on current activity.

Persistent: recommendation depends on long-term interests.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

17 / 101

Other Characteristics

Introduction

Characteristics

A recommender system usually involves [1]:

Large scale Machine Learning and Statistics

O-line Models (capture global and stable characteristics)

On-line Models (incorporates dynamic components)

Explore/Exploit (active and adaptive experimentation) Multi-Objective Optimization

Click-rates (CTR), Engagement, advertising revenue, diversity, etc. Inferring user interest

Constructing User Proles - Natural Language Processing to

understand content.

Topics, aboutness , entities, follow-up of something, breaking news,

etc.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

18 / 101

Recommender Systems

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

19 / 101

Utility Matrix

Recommender Systems

Utility Matrix

Recommender Systems are often seen as a function:

C ∈ Customers

I ∈ Items

R ∈ Ratings , e.g., [1-5], [+,-], [a+,E-],

Utility function: u : C × I → R

U1

U2

I1

I2

4

?

1

5

5

7

6

etc.

In

...

6

...

Un

3

3

Blanks correspond to not rated items.

Objective: would

U1

I

like 2 ?

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

20 / 101

Sparse Matrices

Recommender Systems

Utility Matrix

Typically most of the values are empty, these matrices are called sparse

matrices.

Users rate just a few percentage of all available items

An unknown rating implies that we have no explicit information about

the user's preference for that particular item.

The goal of a RS is to predict the

blanks

(ll the gaps) in the matrix.

Generally, it is not necessary to ll up all of the blanks because we

usually are not interested in low rated items

The majority of RS recommend a few of the highest rated items

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

21 / 101

Sparse Matrices - Representation

Recommender Systems

Utility Matrix

In order to t in memory, zero values are not explicitly represented. For

instance, in ARFF format (Weka)

0, X, 0, Y, "class A"

0, 0, W, 0, "class B"

is represented by their attribute number and value as:

{1 X, 3 Y, 4 "class A"}

{2 W, 4 "class B"}

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

22 / 101

Work-ow in recommendations

Recommender Systems

Utility Matrix

There are a lot of dierent ways to get recommendations but the most

used are based on the previous knowledge of similar users or contents [5].

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

23 / 101

Naïve Recommender Systems

Recommender Systems

RS Classication

Editorial recommendations

Simple aggregates

Top 10, Most Popular, etc.

From now on, we will refer to systems tailored to individual users (Amazon,

Netix, etc.)

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

24 / 101

Recommender Systems: Classication

Recommender Systems

RS Classication

Typically, also in Rajamaran et al. [8], recommender systems are classied

according to the technique used to create the recommendation (ll the

blanks in the utility matrix):

Content-based systems examine properties of the items recommended

and oer similar items

Collaborative ltering (CF) systems recommend items based on

similarity measures between users and/or items. The items

recommended to a user are those preferred by similar users

Hybrid mixing both previous approaches

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

25 / 101

Recommender Systems: Classication

Recommender Systems

Figure:

RS Classication

Classication Recommender Systems [8]

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

26 / 101

Recommender Systems: Content-based

Recommender Systems

Content based Recommender Systems

Content-based systems examine properties of the items to recommend

items that are similar in content to items the user has already liked in

the past, or matched to attributes of the user.

For instance, movie recommendations with the same actors, director,

genres, etc., if a Netix user has watched many action movies, then

recommend movies classied in the database as having the action

genre.

Textual content (news, blogs, etc) recommend other sites, blogs, news

with similar content (we will cover how to measure similar content)

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

27 / 101

Item Proles

Recommender Systems

Content based Recommender Systems

For each item, we need to create an item prole

A prole is a set of features

Context specic (e.g. with lms: actors, director, genre, title, etc.)

Documents: sets of important words. Important words are usually

selected using the is TF .IDF (Term Frequency times Inverse Doc

Frequency) metric

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

28 / 101

Term Frequency-Inverse Document Frequency

Recommender Systems

Content based Recommender Systems

The Term Frequency-Inverse Document Frequency (TF-IDF) is a statistic

2

which reects how important a word is to a document . The prole of a

document is the set of words with highest

to the term

t

in a document

d.

tf − idf ,

which assigns a weight

tf .idfd ,j = tft ,d · idft

(1)

where

tft ,d

no. of times term

t

occurs in document

d

(there are better

approaches)

idft = log dfNt , dft is no. of documents

and N is total no. of documents.

2

that that contain the term

ti

http://en.wikipedia.org/wiki/Tf-idf

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

29 / 101

Tags

Recommender Systems

Content based Recommender Systems

Tags are also used to create item proles. Then, tags are used to

provide similar content.

For example, it is dicult to automatically extract features form

images. In this case, users are asked to tag them.

A real example is

del.icio.us

in which users tag Web pages:

http://delicious.com/

The drawback of this approach is that it is dicult to get users to

tags items.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

30 / 101

User Proles

Recommender Systems

Content based Recommender Systems

We also need to create vectors with the same components that describe

the user's preferences.

It is classied as implicit or explicit.

Implicit refers to observe user's behaviour with the system, for

example by watching certain lms, listening to music, reading a kind

of news or downloading applications/documents

Explicit refers when the user provides information to the system

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

31 / 101

Collaborative Filtering

Recommender Systems

Collaborative Filtering

In Collaborative Filtering (CF), a user is recommended items based on the

past ratings of all users collectively. CF can be of two types:

User-based collaborative ltering

1

2

Given a user U , nd the set of other users D whose ratings are similar

to the ratings of the selected user U .

Use the ratings from those like-minded users found in Step 1 to

calculate a prediction for the selected user U .

Item-based collaborative ltering

1

2

Build an item-item matrix determining relationships between pairs of

items

Using this matrix and data on the current user, infer the user's taste

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

32 / 101

Hybrid Methods

Recommender Systems

Collaborative Filtering

Implement two separate recommenders and combine predictions

Add content-based methods to collaborative ltering

Item proles for new item problem

Demographics to deal with new user problem

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

33 / 101

Ratings

Recommender Systems

Collaborative Filtering

Rating

A numeric (usually) value given by a user to specic item/user [10].

The way we populate the ratings matrix is also very important. However

acquiring data from which to build a ratings matrix is often a very dicult

step. There are two general approaches to discover the rating value of the

users:

Ask directly to the user to insert ratings. This approach is typically

used by the movie sites (Netix) but is limited by the willing of the

users to rate items.

Spy the behaviour of the user. If a user watches a movie, reads an

article or buys an item we can say that the user likes such particular

item. In this case, the ratings matrix will be lled with the value 0 if

the user does not buy/watch/read the item and 1 if the user

buy/watch/read it.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

34 / 101

Ratings Classication

Recommender Systems

Collaborative Filtering

Implicit ratings

Based on interaction & time: Purchases, clicks, browsing (page view

time), etc.

Used to generate an implicit numeric rating.

Explicit ratings

Numeric ratings: numeric scale between 2 (+/-) and 15 values (the

more levels, the more variance; should be normalized)

Partial order: comparison between two items.

Semantic information: tags, labels.

Hybrid

Mixing both previous approaches.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

35 / 101

Measuring Similarity

Recommender Systems

Measuring similarity

One of the more complicated task is to determine is the similarity between

the users/items (the duality of similarity [8]).

Following the example by Rajaraman and Ullman [8], we can observe that

users

A

and

C

both rated items

TW 1

and

SW 1

totally dierent.

Intuitively we could say that there is a large distance regarding their

similarity. We next explain dierent methods that can be used to check the

similarity between users.

A

B

C

D

HP1

HP2

HP3

4

5

5

TW 1

SW 1

5

1

2

4

SW 3

4

3

5

3

Table:

Windsor Aug 5-16, 2013 (Erasmus IP)

SW 2

Utility Matrix [8]

Recommender Systems

36 / 101

Jaccard Distance

Recommender Systems

Measuring similarity

The Jaccard index (aka Jaccard similarity coecient) measures the

3

similarity of two sets :

J (A, B ) =

|A∩B |

|A∪B |

(2)

The Jaccard distance measures the dissimilarty and is dened as the

complement of Eq(2):

Jδ (A, B ) = 1 − J (A, B ) =

|A∪B |−|A∩B |

|A∪B |

(3)

The Jaccard distance only takes into account the number of rated items

but not the actual rating which is discarded, therefore, it loses accuracy in

case that detailed recommendations were needed.

3

http://en.wikipedia.org/wiki/Jaccard_index

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

37 / 101

Jaccard Distance

Recommender Systems

Measuring similarity

Following with the previous example [8]:

A

B

C

D

A

and

size 5,

HP1

HP2

HP3

4

5

5

TW 1

SW 1

5

1

2

4

SW 2

SW 3

4

5

3

3

B have an intersection of size 1, i.e., | A ∪ B |= 1 and a union of

| A ∩ B |= 5 (items rated). Thus, their Jaccard similarity is 1/5, and

their Jaccard distance is 4/5; i.e., they are very far apart.

A

and

C

have a Jaccard similarity of 2/4, so their Jaccard distance is the

same, 1/2.

However, although

A

appears to be closer to

seems intuitively wrong.

watched, while

A

and

B

A

and

C

C

than to

B,

that conclusion

disagree on the two movies they both

seem both to have liked the one movie they

watched in common [8].

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

38 / 101

Cosine Distance

Recommender Systems

Measuring similarity

Cosine Distance:

Items are represented as vectors over the user space

Similarity is the cosine of the angle between two vectors

Range is between 1 (perfect) and -1 (opposite)

Given two vectors of attributes,

A

and

B,

the cosine similarity, cos(θ), is

4

represented using a dot product and magnitude as :

n

× Bi

A·B

i = 1 Ai p

cos(θ) =

= pPn

Pn

2

2

k A kk B k

i =1 (Ai ) ×

i =1 (Bi )

P

(4)

In information retrieval, the cosine similarity of two documents will range

tf − idf

from 0 to 1, since the term frequencies (

weights) cannot be

negative. The angle between two term frequency vectors cannot be greater

than 90 degrees.

4

http://en.wikipedia.org/wiki/Cosine_similarity

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

39 / 101

Cosine Distance: Example

Recommender Systems

Measuring similarity

Following with the previous example [8], the Cosine distance between users

A

and

B

is:

√

·5

√

= 0.380

42 + 52 + 12 ·

42 + 52 + 52

4

The Cosine distance between

√

A

and

C

(5)

is:

·2+1·4

√

= 0.322

+ 12 · 22 + 42 + 52

5

42

+

52

(6)

A larger (positive) cosine implies a smaller angle and therefore a smaller

distance, this measure tells us that

A

is slightly closer to

B

than to

C

[8].

Empty values as set to 0 (questionable election as it could seem that users

do not like the item instead of no rating form the user)

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

40 / 101

Pearson correlation

Recommender Systems

Measuring similarity

Another common measure of similarity is the Pearson correlation

coef [U+FB01]cient between the ratings of the two users,

a

and

u:

− ra )(ru,i − ru )

P

P

2 2

i ∈I ((ra,i − ra )

i ∈I ( i ∈I ((ra,i − ra ) )

Pearsona,u = pP

i ∈I (ra,i

P

2

(7)

where:

I is the set of items rated by both users

ru,i is the rating of given to item i by user u

ra , ru are the mean ratings given by users a and u

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

respectively

41 / 101

Rounding Data

Recommender Systems

Measuring similarity

Rounding data is more like a pre-processing step of the information before

applying any distance measure.

Following the example, we could consider ratings of 3, 4, and 5 as a 1

and consider ratings 1 and 2 as unrated (blanks).

A

B

C

D

HP1

HP2

HP3

1

1

1

1

C

SW 1

1

1

1

1

The Jaccard distance between

Now,

TW 1

appears further away

SW 2

SW 3

1

1

A and B is 3/4, while between A and C

from A than B does, which is intuitively

is 1.

correct. Applying cosine distance allows us to reach the same conclusion.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

42 / 101

Normalizing Ratings

Recommender Systems

Measuring similarity

As with the rounding data method, this is also a preprocessing step applied

before any other measure distance.

Normalised ratings are calculated subtracting the average value of the

ratings of the user to each single rating. Then low ratings will be negative

and high ratings positive.

Following the example, the normalised rating matrix will be as follows:

A

B

C

D

HP1

HP2

HP3

2/3

1/3

1/3

TW 1

SW 1

5/3

-7/3

-5/3

1/3

SW 3

-2/3

0

Windsor Aug 5-16, 2013 (Erasmus IP)

SW 2

4/3

0

Recommender Systems

43 / 101

Normalizing Ratings: Example

Recommender Systems

Measuring similarity

Applying the Jaccard distance to this normalised matrix, it is noted that

ratings from the user

D

are 0. The ratings given by the user

D

are

(probably) not interesting ( as this user has always rated items with the

same value).

The Cosine distance in this new case for users

A

and

B:

(2/3) · (1/3)

q

q

= 0.092

( 23 )2 + ( 35 )2 + (− 73 )2 ( 13 )2 + ( 13 )2 + (− 23 )2

Now, the Cosine distance for the users

A

and

C

(8)

with normalized values:

(5/3) · (−5/3) + (−7/3) · (1/3)

q

q

= −0.559

( 23 )2 + ( 53 )2 + (− 73 )2 (− 53 )2 + ( 31 )2 + ( 43 )2

A

and

C

because

are much further apart than

A

and

C

A

and

B.

(9)

This result makes sense

rated 2 lms with very dierent values and

A

and

B

rated only one lm with similar values.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

44 / 101

Algorithms

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

45 / 101

Knowledge Discovery in Databases (KDD)

Algorithms

KDD

RSs, natural language processing (NLP) are based on a subeld of

computer science that tries to discover patterns in large data sets, called

Data mining or Knowledge Discovery in Databases (KDD).

In fact,

data mining

is typically one step within the process of what is

known as Knowledge Discovery in Databases (KDD).

The main goal of the data mining (the analysis step of the Knowledge

Discovery in Databases (KDD) process [3]) is to extract information from a

data set and transform it into an understandable structure for further use.

This transformation involves several steps that includes: database and data

management aspects, data pre-processing, model and inference

considerations, interestingness metrics, complexity considerations,

post-processing of discovered structures, visualization, and on-line updating.

The step which requires most of the time is the preparation of data.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

46 / 101

KDD Process

Algorithms

KDD

KDD is composed of the following steps [3]:

1

Selection

2

Pre-processing

3

Transformation

4

Data Mining

5

Interpretation/Evaluation

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

47 / 101

KDD Process

Figure:

Windsor Aug 5-16, 2013 (Erasmus IP)

Algorithms

KDD

Data mining steps (Fayyad et al, 96)

Recommender Systems

48 / 101

Text Mining

Algorithms

KDD

Text mining, aka text analytics or Information Retrieval tries to obtain

usable information for a computer using no structured (no measurable)

data.

The Oxford Dictionary denes text mining as process or practice of

examining large collections of written resources in order to generate new

information, typically using specialized computer software.

Typically no measurable data refers to e-mails, newspapers, research

papers, etc.

The process of text mining usually is divided in 4 dierent steps

1

Information retrieval (IR) systems.

2

Natural language processing (NLP).

3

Information extraction (IE).

4

Data mining (DM).

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

49 / 101

Web Mining

Algorithms

KDD

Web mining uses data mining tools to extract information from:

Web pages (Web content mining)

Links (Web structure mining)

User's navigation data (Web usage mining) based on Web server logs

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

50 / 101

Nearest Neighbour Algorithm

Algorithms

kNN

k

The Nearest Neighbour ( -NN) algorithm is one of the simplest machine

5

learning algorithms but works very well in practice .

The idea is to predict a classication based on the

k-nearest

neighbours of

the item we want to classify.

No model is in fact learned, but the active user is used to search for the

k

most similar cases

5

http://en.wikipedia.org/wiki/K-nearest_neighbor_algorithm

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

51 / 101

Nearest Neighbour Algorithm

Algorithms

A simple approximation of

k NN

kNN

is shown in Figure where we need to

classify the green circle:

1

If we select

k = 3,

the nearest neighbours are 2 red triangles and a

blue square, represented inside the solid black circle, the class of the

circle will be red triangle.

2

In the case of

k=5

(discontinuous black circle), the nearest

neighbours are 2 red triangles and 3 blue squares, then the class of the

circle will be blue square.

The selection of

k

is very important (it can change the class)

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

52 / 101

kNN: Complexity

1

Expensive step is nding

Algorithms

k

kNN

most similar customers Too expensive to

do at runtime, need to pre-compute

2

Can use clustering, partitioning as alternatives, but quality degrades

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

53 / 101

Clustering

Algorithms

Clustering

With little data (ratings) or to reduce the matrix, clustering can be used.

A hierarchical clustering (until a desired level can be used).

A

B

C

D

Windsor Aug 5-16, 2013 (Erasmus IP)

HP

TW

SW

4

5

1

2

4.5

4.67

3

3

Recommender Systems

54 / 101

Clustering

Windsor Aug 5-16, 2013 (Erasmus IP)

Algorithms

Clustering

Recommender Systems

55 / 101

Association Rules

Algorithms

Association Rules

Association Rules are a data mining technique to nd relations between

variables, initially used for shopping behaviour analysis.

Associations are represented as rules,

Antecedent → Consequent

If a customer buys A, that customer also buys B

Association among A and B means that the presence of A in a record

implies the presence of B in the same record

{Nappies , Chips } → Beer

Quality of the rules need a minimum support and condence

Support: the proportion of times that the rule applies

Condence: the proportion of times that the rule is correct

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

56 / 101

Slope One

Algorithms

Slope One

This algorithm was introduced in 2005 and is the simplest form of

item-based algorithm based on ratings. For a better understanding we are

going to explain this algorithm with a little example.

First we need a dataset, for example, the next table shows the ratings from

3 people to 3 dierent items.

Customer

Item A

Item B

Item C

John

5

3

2

Mark

3

4

-

Lucy

-

2

5

Table:

Windsor Aug 5-16, 2013 (Erasmus IP)

Example Slope One algorithm

Recommender Systems

57 / 101

Slope One

Algorithms

Slope One

This algorithm is based on the average dierences between users who rated

the same items that we want to predict.

We want to know the rating that Lucy will give to the item A. Then we

need to calculate the average dierences of the other users with the item A

and other item as reference (item B):

John, item A rating 5, item B rating 3, dierence 2

Mark, item A rating 3, item B rating 4, dierence -1

Average dierence between item A and item B, (2+(-1))/2=0.5

Rating from Lucy to item B, 2

Estimated rating from Lucy to item A, 2+0.5=2.5

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

58 / 101

Slope One

Algorithms

Slope One

Also we want to know the rating of Mark for the item

C.

We need to

calculate the average dierences of the other users with the item

other item as reference (item

B ):

C

and

John, item C rating 2, item B rating 3, dierence -1

Lucy, item C rating 5, item B rating 2, dierence 3

Average dierence between item C and item B, ((-1)+3)/2=1

Rating from Mark to item B, 4

Estimated rating from Mark to item C, 4+1=5

Therefore, the nal table of ratings is completed as follows:

Customer

Item A

Item B

Item C

John

5

3

2

Mark

Lucy

Windsor Aug 5-16, 2013 (Erasmus IP)

3

2.5

4

5

2

5

Recommender Systems

59 / 101

Decision Trees

Algorithms

Decision Trees

A decision tree is a collection of nodes, arranged as a binary tree.

A decision tree is constructed in a top-down approach. The leaves of the

tree correspond to classes (decisions such as likes or dislikes), Each

interior node is a condition that correspond to features, and branches to

their associated values.

To classify a new instance, one simply examines the features tested at the

nodes of the tree and follows the branches corresponding to their observed

values in the instance.

Upon reaching a leaf, the process terminates, and the class at the leaf is

assigned to the instance.

The most popular decision tree algorithm is C4.5 which uses the gain ratio

criterion to select the attribute to be at every node of the tree.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

60 / 101

Naïve Bayes

Algorithms

Probabilistic Networks

The naïve Bayes algorithm uses the Bayes theorem to predict the class for

each case, assuming that the predictive attributes are independent given a

category.

A Bayesian classier assigns a set of attributes

such that

P (C |A1 , A2 , . . . , An )

A1 , A2 , . . . , An

to a class

C

is maximum, that is the probability of the

class description value given the attribute instances, is maximal.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

61 / 101

Evaluation of Recommender Systems

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

62 / 101

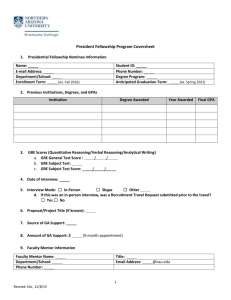

Evaluation Measures: Confusion Matrix

Evaluation of Recommender Systems

Table:

Positive

Pred

Negative

Evaluation Measures based on the Confusion Matrix

Confusion Matrix for Two Classes

Actual

Negative

Positive

True Positive

(TP )

False Positive

(FP )

Type I error

(False alarm)

Positive Predictive

Value (PPV )=

Condence =

Precision =

False Negative

(FN )

Type II error

True Negative

(TN )

Recall =

Sensitivity =

TPr = TPTP

+FN

Specicity =

TNr = FPTN

+TN

Negative

Predictive

Value

(NPV )= FNTN

+TN

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

= TPTP

+FP

63 / 101

Evaluation Measures using the confusion Matrix

Evaluation of Recommender Systems

Evaluation Measures based on the Confusion Matrix

True positive rate (TP /TP + FN )

is the proportion of positive cases

correctly classied as belonging to the positive class.

False negative rate (FN /TP + FN )

is the proportion of positive cases

misclassied as belonging to the negative class.

False positive rate (FP /FP + TN )

is the proportion of negative cases

misclassied as belonging to the positive class.

True negative rate (TN /FP + TN )

is the proportion of negative cases

correctly classied as belonging to the negative class.

There is a trade-o between

false positive rates

and

false negative rates

as

the objective is to minimize both metrics (or conversely, maximize the true

negative and positive rates). Both metrics can be combined to form single

metrics. For example, the predictive

accuracy =

Windsor Aug 5-16, 2013 (Erasmus IP)

accuracy

is dened as:

TP + TN

TP + TN + FP + FN

Recommender Systems

(10)

64 / 101

Evaluation Measures using the confusion Matrix

Evaluation of Recommender Systems

Evaluation Measures based on the Confusion Matrix

Another metrics from the Information Retrieval eld that is widely used

when measuring the performance of classiers is the

f-measure,

which is

just an harmonic median of the following two proportions:

f1=

· precision · recall

precision + recall

2

(11)

where

precision = TP /TP + FP )

Precision (

is the proportion of positive

predictions that are correct (no. of good item recommended / no. of

all recommendations)

recall

is the

true positive rate

dened as

TP /TP + FN ,

no. of good

items recommended / no. of good items

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

65 / 101

Receiver Operating Characteristic (ROC) Curve

Evaluation of Recommender Systems

Evaluation Measures based on the Confusion Matrix

The ROCcurve provides a graphical visualisation to analyse the relationship

between the

true positive rate

Windsor Aug 5-16, 2013 (Erasmus IP)

and the

true negative rate.

Recommender Systems

66 / 101

Receiver Operating Characteristic (ROC) Curve

Evaluation of Recommender Systems

Evaluation Measures based on the Confusion Matrix

The optimal point in the graph is the top-left corner, i.e., all positive

instances are classied correctly and no negative instances are misclassied

as positive. The diagonal line on the graph represents the scenario of

randomly guessing the class, and so it represents the minimum baseline for

all classiers.

The Area Under the ROC Curve (AUC) also provides a quality measure

with a single value and is used to compare dierent algorithms.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

67 / 101

Rule Evaluation Measures

Evaluation of Recommender Systems

The

Support

Rule Evaluation

of a rule refers to the ratio between the number of

instances satisfying both the antecedent and the consequent part of a

rule and the total number of instances.

n(Antecedent · Consequent ) TP

=

N

N

n(Antecedent · Consequent ) corresponds to the TP

Sup (Ri ) =

where the

(12)

and

N

is the total number of instances.

Condence (Conf ), also known as Precision or Positive Predictive

Value (PPV ) of a rule is the percentage of positive instances of a rule,

i.e. relative frequency of the number of instances satisfying the both

the condition (

Antecedent )

and the

Consequent

and the number of

instances satisfying the only the condition.

Conf (Ri ) =

Windsor Aug 5-16, 2013 (Erasmus IP)

TP

n(Antecedent · Consequent )

=

n(Antecedent )

TP + FP

Recommender Systems

(13)

68 / 101

Rule Evaluation Measures - Association Rules

Evaluation of Recommender Systems

Coverage

Cov )

of a rule (

Rule Evaluation

is the percentage of instances covered by a

rule of the induced set of rules

Cov (Ri ) = p (Cond ) =

where

Ri

is a single rule,

by condition

Cond

Windsor Aug 5-16, 2013 (Erasmus IP)

and

N

n(Cond )

n(Cond )

N

(14)

is the number of instances covered

is the total number of instances.

Recommender Systems

69 / 101

Numeric Evaluation

Evaluation of Recommender Systems

Numeric Evaluation

With numeric evaluation (e.g. dierence between predicted rating and

actual rating), the following measures are typically used.

Mean Absolute Error

(u ,i ) |p(u ,i )

P

MAE =

− r u ,i |

N

(15)

Root mean squared error

sP

RMSE =

RMSE

(u ,i ) (p(u ,i )

N

− r u ,i ) 2

(16)

penalises more larger errors.

Other are also possible such as Mean-squared error, Relative-squared error

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

70 / 101

Dimensionality Reduction

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

71 / 101

Dimensionality Reduction

Dimensionality Reduction

An dierent approach to estimating the blank entries in the utility matrix is

to break the utility matrix into the product of smaller matrices.

This approach is called SVD (Singular Value Decomposition) or a more

concrete approach based on SVD called UV-decomposition.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

72 / 101

Singular Value Decomposition

Dimensionality Reduction

Singular Value Decomposition is a way of breaking up (factoring) a matrix

into three simpler matrices.

M = Um,m × Sm,n × VnT,n

where S a diagonal matrix with non-negative sorted values

Finding new recommendations:

1

New user

B

comes in with some ratings in the original feature space

[0,0,2,0,4,1]

B

k

BUS 1

2

Map

3

Any distance measure can be used in the reduced space to provide

to a

dimensional vector:

= [-0.25, -0.15]

recommendations

Example taken from:

http://www.ischool.berkeley.edu/node/22627

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

73 / 101

Singular Value Decomposition

Dimensionality Reduction

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

74 / 101

Singular Value Decomposition

Dimensionality Reduction

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

75 / 101

Singular Value Decomposition

Dimensionality Reduction

Example using R

N=matrix(c(5,2,1,1,4,

0,1,1,0,3,

3,4,1,0,2,

4,0,0,0,3,

3,0,2,5,4,

2,5,1,5,1),byrow=T, ncol=5)

svd<-svd(N)

S <- diag(svd$d)

svd$u %*% S %*% t(svd$v)

svd <- svd(N,nu=3,nv=3)

S <- diag(svd$d[1:3])

svd$u %*% S %*% t(svd$v)

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

76 / 101

Singular Value Decomposition

Dimensionality Reduction

Selecting

k =3:

svd <- svd(N,nu=3,nv=3)

S <- diag(svd$d[1:3])

svd$u %*% S %*% t(svd$v)

> svd$u %*% S %*% t(svd$v)

[,1]

[,2]

[,3]

[,4]

[,5]

[1,] 4.747427 2.077269730 1.0835420 0.9373748 4.237575

[2,] 1.602453 0.513149695 0.3907818 0.4122864 1.507976

[3,] 3.170104 3.964360658 0.5605769 0.1145642 1.913918

[4,] 3.490307 0.136595437 0.6203060 -0.2117173 3.392280

[5,] 3.064186 -0.008805885 1.7257425 5.0637172 3.988441

[6,] 1.821143 5.042020528 1.3558031 4.8996100 1.111007

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

77 / 101

Singular Value Decomposition

Dimensionality Reduction

New user with ratings: 0,0,2,0,4,1

b <-matrix(c(0,0,2,0,4,1),byrow=T, ncol=6)

S1 <- diag(1/svd$d[1:3])

b %*% svd$u %*% S1

> b %*% svd$u %*% S1

[,1]

[,2]

[,3]

[1,] -0.2550929 -0.1523131 -0.3437509

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

78 / 101

UV Decomposition

Dimensionality Reduction

Mm,n is the utility

M = Um,k × Vk ,n

m1,1

m2,1

.

.

.

matrix

m1,2

m2,2

···

···

.

.

.

..

m1,n

m2,n

.

.

.

.

mm,1 mm,2 · · ·

mm ,n

Windsor Aug 5-16, 2013 (Erasmus IP)

u1,1

u2,1

=

.

.

.

···

···

..

.

um,1 · · ·

Recommender Systems

u1,k

v

v

···

u2,n 1.,1 1.,2 .

v1,n

vk ,1 vk ,2 · · ·

vk ,n

.

.

.

um,k

×

.

.

.

.

..

.

.

.

79 / 101

UV Decomposition: Example

Dimensionality Reduction

Mm,n is the utility

M = Um,k × Vk ,n

5

2

3

2

2

1

4

4

4

2

4

3

1

5

4

3

4

5

4

matrix

u1,1

u2,1

1

4 = u3,1

u4,1

5

u5,1

3

Windsor Aug 5-16, 2013 (Erasmus IP)

u1,2

u2,2

v1,1 v1,2 v1,3 v1,4 v1,5

u3,2

×

v2,1 v2,2 v2,3 v2,4 v2,5

u4,2

u5,2

Recommender Systems

80 / 101

Tools

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

81 / 101

Apache Mahout

Tools

Implementations

Apache Mahout machine learning library is written in Java that is designed

to be scalable, i.e. run over very large data sets. It achieves this by

ensuring that most of its algorithms are parallelizable (map-reduce

paradigm on Hadoop).

The Mahout project was started by people involved in the Apache

Lucene project with an active interest in machine learning and a desire

for robust, well-documented, scalable implementations of common

machine-learning algorithms for clustering and categorization

The community was initially driven by the paper

Machine Learning on Multicore

Map-Reduce for

but has since evolved to cover other

machine-learning approaches.

http://mahout.apache.org/

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

82 / 101

Apache Mahout

Tools

Implementations

Mahout is designed mainly for the following use cases:

1

Recommendation mining takes user's behaviour and from that tries to

nd items users might like.

2

Clustering takes e.g. text documents and groups them into groups of

topically related documents.

3

Classication learns from existing categorized documents what

documents of a specic category look like and is able to assign

unlabelled documents to the (hopefully) correct category.

4

Frequent item-set mining takes a set of item groups (terms in a query

session, shopping cart content) and identies, which individual items

usually appear together.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

83 / 101

Apache Mahout

Tools

Implementations

Mahout is built on top of Hadoop:

http://hadoop.apache.org/

which implements the MapReduce model.

Hadoop is most used implementation of MapReduce model and

Mahout is one of the projects of the Apache Software Foundation.

Hadoop divides data into small pieces to process it and in case of

failure, repeats only the pieces that failed. In order to achieve this

objective, Hadoop uses his own le system called HDFS (Hadoop

Distributed File System).

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

84 / 101

Apache Mahout Workow

Tools

Windsor Aug 5-16, 2013 (Erasmus IP)

Implementations

Recommender Systems

85 / 101

R

Tools

Implementations

R with RecommnederLab and other packages for text mining.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

86 / 101

R and extensions

Tools

Implementations

RecommnederLab

http://lyle.smu.edu/IDA/recommenderlab/

http://cran.r-project.org/

web/packages/recommenderlab/

Arules (for association rules)

http://lyle.smu.edu/IDA/arules/

http://cran.r-project.org/web/packages/arules/

Text mining

http://cran.r-project.org/web/packages/tm/

Examples and further pointers:

http://www.rdatamining.com/

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

87 / 101

Rapidminer

Tools

Implementations

Rapidminer and its plug-in for recommendation.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

88 / 101

KNIME

Tools

Implementations

KNIME

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

89 / 101

Weka

Tools

Implementations

Weka

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

90 / 101

Problems and Challenges

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

91 / 101

Cold start Problem

Problems and Challenges

When a new user or new item is added to the system, it knows nothing

about them and therefore, it is dicult to draw recommendations.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

92 / 101

Explore/Explode

Problems and Challenges

Recommend items at random to a small number of randomly chosen users.

To do so, we need to removed one of the highly ranked item to include the

random items.

There is trade-o between recommend new items or highly ranked items:

Exploiting a model to improve quality

Exploring new items that can be good items and help to

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

93 / 101

Conclusions

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

94 / 101

Conclusions

Conclusions

Nowadays, recommendation systems are increasingly gaining notoriety due

to their hight number of applications.

The users cannot manage all the information available on Internet because

of this is necessary some kind of lters or recommendations. Also the

companies want to oer to the user specic information to increase the

purchases.

The contest organized by Netix resulted in a big jump in the research of

the recommender systems, more than 40,000 teams were trying to create a

good algorithm. The work of Krishnan [6] was also very interesting trying

to compare the recommendation results of machine against humans. As

stated in the article, the machine won in most of the comparatives because

machines can handle more data than humans can.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

95 / 101

Current and Future Work

Conclusions

The following improvements can be applied to the recommendation

systems [9]:

Improve the security regarding false ratings or users. E.g., Second

Netix challenge was in part cancelled because it was possible to

identify users based on their votes on IMBD.

Take the advantage of the social networks:

The users likes the same as his friends.

Explore the social graph of the users.

Potential friends could be suggested using recommendation techniques.

Improve the evaluation method when there are no ratings.

Proactive recommender systems.

Privacy preserving recommender systems.

Recommending a sequence of items (e.g. a play list).

Recommender systems for mobile users.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

96 / 101

References

1

Introduction

2

Recommender Systems

3

Algorithms

4

Evaluation of Recommender Systems

5

Dimensionality Reduction

6

Tools

7

Problems and Challenges

8

Conclusions

9

References

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

97 / 101

References I

References

Deepak Agarwal.

Oine components: Collaborative ltering in cold-start situations.

Technical report, YAHOO, 2011.

Chris Anderson.

The Long Tail: Why the Future of Business Is Selling Less of More.

Hyperion, 2006.

Usama Fayyad, Gregory Piatetsky-Shapiro, and Padhraic Smyth.

The kdd process for extracting useful knowledge from volumes of data.

Communications of the ACM,

39(11):2734, November 1996.

A. Galland.

Recommender systems.

Technical report, INRIA-Saclay, 2012.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

98 / 101

References II

References

Joseph A. Konstan and John Riedl.

Recommender systems: from algorithms to user experience.

User Modeling and User-Adapted Interaction,

22(1-2):101123, April

2012.

Vinod Krishnan, Pradeep Kumar Narayanashetty, Mukesh Nathan,

Richard T. Davies, and Joseph A. Konstan.

Who predicts better?: results from an online study comparing humans

and an online recommender system.

In

Proceedings of the 2008 ACM conference on Recommender systems,

RecSys '08, pages 211218, New York, NY, USA, 2008. ACM.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

99 / 101

References III

References

Yashar Moshfeghi, Deepak Agarwal, Benjamin Piwowarski, and

Joemon M. Jose.

Movie recommender: Semantically enriched unied relevance model for

rating prediction in collaborative ltering.

In Proceedings of the 31th European Conference on IR Research on

Advances in Information Retrieval, ECIR '09, pages 5465, Berlin,

Heidelberg, 2009. Springer-Verlag.

Anand Rajaraman, Jure Leskovec, and Jerey David Ullman.

Mining of Massive Datasets.

Cambridge University Press, 2011.

Francesco Ricci.

Information search and retrieval, 2012.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

100 / 101

References IV

References

Francesco Ricci, Lior Rokach, and Bracha Shapira.

Recommender Systems Handbook,

chapter Introduction to

Recommender Systems Handbook, pages 135.

Springer, 2011.

Windsor Aug 5-16, 2013 (Erasmus IP)

Recommender Systems

101 / 101