advertisement

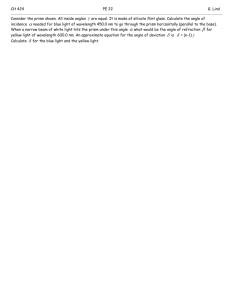

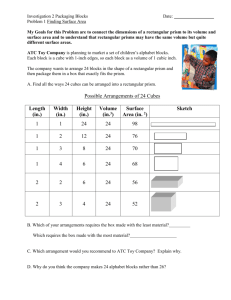

1st Prism User Group Conference 2006 – Calgary, Alberta, Canada

Seven Suggestions to Successfully Deliver Your Cost Estimate

Case Study: Processing Aspen Kbase™ Data for Load into ARES Prism™ Project Manager

Robert B. Fookes, M.Sc., P.Eng.
TriGem Technology Ltd
September, 2006

Copyright © 2006 TriGem Technology Ltd
info@trigemtech.com

Abstract

Seven Suggestions to Successfully Deliver Your Cost Estimate discusses several key points that address fundamental information management principles that will help with your estimating effort. A case study, Processing AspenTech Kbase™ Data for Load into ARES Prism™ Project Manager, illustrates each of these principles, and a system composed of three software applications demonstrates one possible data processing model for managing Kbase™ estimating information on a project of any size. A detailed progression through the workflow is given, and some of the benefits of using software tools for data processing are highlighted.

Clearly it is possible to process data more effectively with sound processes and capable tools. Data model and process consistency provide predictable results, and enterprise software systems deployed as part of a larger solution can give your organization significant workflow advantages.

This paper is geared toward senior cost estimators, cost estimating leads, and estimating, cost control and project control managers. IS/IT support personnel will also benefit from the case study.
Acknowledgements

I would like to acknowledge some special people who took the time to discuss many of the issues surrounding estimating, project controls and information management, to name a few. I appreciate their input throughout this creative process, and as I wrote the document I realized how valuable their feedback was during its development.

• Kevin R. Rowe, Manager, Project Management Services, ARES Corporation
• Ross Kelman, On-Track Projects Inc.
• John P. Holgate, P.Eng., Proactive Technologies International, Inc.

Not only do I consider each of them a friend and colleague, I consider them all mentors, and I thank them for their contributions and ongoing support.

Table of Contents

1. Seven Suggestions to Successfully Deliver Your Cost Estimate: Effective Data Processing
1.1 Consistent Coding
1.2 Naming Conventions
1.3 Structured Data
1.4 Single Source of Data
1.5 Defined Processes
1.6 Separate Data and Presentation
1.7 Think Quality
2. Case Study: Processing Aspen Kbase™ Data for Load into ARES Prism™ Project Manager
2.1 Problem
2.2 Software Tools
2.3 Data Management
2.4 Workflow
2.5 Solution
2.6 Results
2.7 Interpretation
2.8 Case Study Review of the Seven Suggestions
2.9 Conclusions
3. Benefits of Using Software Tools for Data Processing
4. References
5. Acronyms

1. Seven Suggestions to Successfully Deliver Your Cost Estimate

Effective Data Processing

If you find it difficult to consolidate estimating data that is developed in multiple formats, a disciplined approach to data processing may be the solution you are looking for. There are many reasons why we struggle when it comes time to roll up multiple datasets into a common format, ranging from technology to process to people, but there are steps that can be taken to improve efficiency and create value both internally and for clients. Organizations often use templates that provide some data consistency, but a more disciplined approach to the entire estimating process is required.

The seven suggestions described below address some key areas where significant benefits can be realized. A case study, Processing Aspen Kbase™ Data for Load into Prism™ Project Manager, illustrates these benefits.

1. Consistent Coding
2. Naming Conventions
3. Structured Data
4. Single Source of Data
5. Defined Processes
6. Separate Data and Presentation
7. Think Quality

1.1 Consistent Coding

Early definition of WBS and COA is crucial.

A key element to the success of any estimating effort is early definition of the work breakdown structure (WBS) and code of accounts (COA). This crucial step is often not given adequate attention up front, and coding is sometimes left to be done while work progresses or even after it is complete. The WBS, which is hierarchical by nature, establishes the physical work packages (scope) and their activities (deliverables) that completely define the project. The COA is the logical breakout of the project into like elements (e.g.
labour, equipment, materials and services) that are used to consistently compile quantity and cost data across WBS items for summarization at progressively higher WBS levels. The WBS and COA provide a consistent organization of the data throughout the project life and should be developed early in the process.

1.2 Naming Conventions

Consistent naming improves the quality of your data.

Naming conventions make data more understandable by making it easier to read. The goal is to improve readability and thereby the understanding, maintainability and general quality of the data. A naming convention is a collection of rules which, when applied to data, results in a set of data elements named in a logical and standardized way; it determines the syntax that each element must follow. Developing a naming scheme for the directory structure on your project, for example, can potentially save many hours by making it easier for members of the project team to find documents. This idea can be extended to the datasets themselves, where consistent naming allows the information to be filtered and sorted into more meaningful result sets. Many conventions already exist in the literature, and choosing one is a matter of preference. If you choose to develop your own, remember that consistency is the key.

1.3 Structured Data

Well-structured data creates value for your organization.

Structured data allows for ease of consolidation, analysis and reporting. Spreadsheets that are formatted to print legibly are very often developed without any consideration for structure.
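Because every record in a structured dataset carries both a WBS code and a COA code, rolling costs up to any WBS level becomes a simple grouping operation. The Python sketch below is illustrative only: it assumes dot-separated WBS codes (for example 100.10.01) and uses hypothetical COA codes (LAB, MAT); none of these names come from the tools discussed in the case study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CostRecord:
    wbs: str    # hierarchical WBS code; levels separated by "." (assumed convention)
    coa: str    # code of accounts element (hypothetical codes such as "LAB", "MAT")
    cost: float


def rollup(records, level):
    """Sum costs by (WBS code truncated to `level` segments, COA code)."""
    totals = defaultdict(float)
    for rec in records:
        parent = ".".join(rec.wbs.split(".")[:level])
        totals[(parent, rec.coa)] += rec.cost
    return dict(totals)


records = [
    CostRecord("100.10.01", "LAB", 1200.0),
    CostRecord("100.10.02", "LAB", 800.0),
    CostRecord("100.20.01", "MAT", 5000.0),
    CostRecord("200.10.01", "LAB", 300.0),
]

print(rollup(records, 1))
# {('100', 'LAB'): 2000.0, ('100', 'MAT'): 5000.0, ('200', 'LAB'): 300.0}
```

Calling rollup(records, 2) returns the next level of detail (100.10, 100.20, 200.10) from the same records; every summary level is derived from one consistently coded dataset rather than from separately maintained spreadsheets.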
Ideally a single source of data (see 1.4 below) should exist, and the presentation or reporting of this data can be developed early in the process. With well-structured data and clearly defined business processes, output is easily generated using predefined reporting templates, and data mining across multiple structured datasets can be done to create additional value-added datasets. System integration efforts are made easier when the data to be mapped or translated is organized and structured in a consistent format.

It is also important to document the data's metadata, which captures the meaning and context of the underlying data. Metadata provides the context for the structured data, and through the use of powerful search engines it allows your organization to access and better interpret its information assets. Document management systems include some type of information card that captures each document's metadata. An example of metadata can be seen in Excel® by opening a workbook, selecting the File-Properties menu item and viewing the Summary tab. This information is accessible programmatically through the workbook's BuiltinDocumentProperties property in the Excel component object model (COM).

1.4 Single Source of Data

A single data source ensures data consistency.

Creating a single data source for your estimate output is an important concept that cannot be overemphasized; supported by a proper information management strategy, it ensures data consistency, reliability and security. Centralized data, and the systems that work on that data (e.g. search engines), are able to keep up with the dynamic nature of data needs and requirements. People copy files to do some work, and inevitably multiple versions of the same data exist. Document management systems are designed to address this problem through single-point access and file versioning; however, training and dedicated use of the system are required to realize the full benefits. A single source of data eliminates duplication and saves the time otherwise spent on the data reconciliation problem that often follows.

Your data is central to your operation, and understanding its true value and relevance and putting it to work for the benefit of the organization is crucial. Applying basic data management principles, such as the single source of data, will save countless hours of work spent establishing the true state of your information, and over time a valuable historical record is developed.

1.5 Defined Processes

Automating workflows improves efficiency.

Defined processes, or business intelligence (BI), use workflows to transform raw data into usable formats for reporting and analysis. A workflow is simply a description of everything that needs to occur to complete each step in a business process. Most organizations have workflows or instructions on how to carry out a task, and automating workflows that are repetitive in nature streamlines the process, enhancing overall efficiency and reducing the effort required to complete a particular assignment. By improving internal efficiencies, tasks are completed sooner and in a consistent manner, making your organization more responsive. When developing business processes it is important to focus your efforts on the business logic of what users need to accomplish, not on how the tool works.

1.6 Separate Data and Presentation

Data should be separated from other processes.

Data, business logic and presentation are the well-accepted logical layers used in the development of complex data-driven systems, and these principles can be applied when developing any large set of data. Data creation and the presentation of that data are fundamentally different issues, and their proper separation is key when developing large, complex cost estimates because of the sheer volume of data that needs to be managed. By separating data from the other layers, future data mining can be done and other corporate information assets can be developed using new business processes that draw on multiple large estimate datasets. Data integration layers further consolidate disparate datasets to provide even greater value, because information can be consumed at a higher level with broader context.

Consistent format in the presentation of the data is accomplished by clearly defining the reporting layout to be used for the project. A variety of reports that summarize the data at progressively higher levels should be developed, and report consistency makes the information more understandable.

1.7 Think Quality

Quality should be built into all processes.

Data entry is often blamed for data-quality problems, and everyone seems to blame their quality woes on the systems they use. Although some of this may be true, a significant part of the quality issue is the lack of data consistency, as well as the timeliness and relevancy of the data. Valuable datasets are generated by building quality processes into the data creation, processing and reporting steps, and all members of the team need to take responsibility for the development of these assets. Maintenance of your estimating data is important in order to preserve current as well as historical data. You can think of this as part of quality, and carefully considered data procedures must be in place.
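Building quality into the processing step can be as simple as running every record through explicit validation rules before it is consolidated or reported. The Python sketch below is a hypothetical illustration: the record fields, the rules and the COA codes are assumptions chosen for the example, not a prescribed standard.

```python
from dataclasses import dataclass

# Hypothetical corporate COA elements used only for this illustration.
VALID_COA = {"LAB", "MAT", "EQP", "SUB"}


@dataclass
class Record:
    wbs: str
    coa: str
    quantity: float
    cost: float


def check_record(rec):
    """Return the list of rule violations for one record (empty if it passes)."""
    problems = []
    if not rec.wbs:
        problems.append("missing WBS code")
    if rec.coa not in VALID_COA:
        problems.append(f"unknown COA code {rec.coa!r}")
    if rec.quantity < 0 or rec.cost < 0:
        problems.append("negative quantity or cost")
    return problems


def validate(records):
    """Map record index -> violations, for every record that fails a rule."""
    report = {}
    for i, rec in enumerate(records):
        problems = check_record(rec)
        if problems:
            report[i] = problems
    return report


records = [
    Record("100.10", "LAB", 10, 1500.0),
    Record("", "XYZ", 5, 200.0),
    Record("100.20", "MAT", -1, 50.0),
]
print(validate(records))
# {1: ['missing WBS code', "unknown COA code 'XYZ'"], 2: ['negative quantity or cost']}
```

Keeping the rules in one place means every dataset is checked the same way each time it is processed, which is the consistency the section argues for.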
Someone with senior-level experience in the data management field is a valuable addition to any estimating team; this individual can provide guidance and support to improve the overall quality of the process and, ultimately, of the final estimate dataset.

2. Case Study

Processing Aspen Kbase™ Data for Load into ARES Prism™ Project Manager

2.1 Problem

Large cost estimates in Aspen Kbase™ are often developed by breaking the project into smaller, manageable pieces using the work breakdown structure (WBS). This approach allows for focussed work by members of the cost estimating team and, although sound, it creates a data consolidation problem when more than one Kbase™ project file needs to be rolled up, analyzed, modified and ultimately formatted for load into ARES Prism™ Project Estimator (PPE) or Prism™ Project Manager (PPM).

2.2 Software Tools

Three commercial-off-the-shelf (COTS) software applications form the basis of the system solution, and each package is described below.

Aspen Kbase™ (formerly ICARUS 2000) is Aspen Technology's flagship estimation tool. It is built on Aspen Icarus™ technology and is a fully integrated design, estimating and scheduling system used to help evaluate the capital cost of process plants quickly in the early stages of the project life cycle. With as little information as a sized equipment list and a general organization of the project, experienced estimators working with key engineering discipline people can effectively use Kbase™ to develop a complete and detailed engineering, procurement and construction estimate and schedule. The system includes sophisticated models and a comprehensive database based on industry-standard design codes and construction practices.
Aspen Kbase™ (ICARUS 2000®) uses these built-in design, estimating and scheduling algorithms to automatically perform mechanical designs for equipment and bulks, so you have the detailed answers you need at the early stages of engineering.

ARES Prism™ Project Manager is an Earned Value Management System (EVMS) designed and developed by professional project managers and project controls engineers, and it is suitable for projects of all sizes and types in virtually all industries. With Prism™ Project Manager you can plan, budget and control a project through all project phases, including proposal, planning, engineering, procurement, construction, startup and operations. Prism™ Project Manager is a collection of integrated project management software applications designed to help project managers:

• Reduce project risk by providing, in a timely manner, the critical project information needed for corrective-action decision making,
• Provide Earned Value reporting required by government agencies,
• Achieve cost/schedule integration with project planning data through seamless integrations with Primavera Project Manager© and Microsoft Project©,
• Reduce project costs by approximately 5% to 10% by providing single-source data entry and data integrity, and
• Easily integrate actual and committed financial/accounting data with project budget data for timely forecasting and reporting.

TriGem Technology KbProc™ (Knowledge Based Processing) is a data processing system designed to consolidate Kbase™ user databases and process the information according to user-specified business rules.
Analysis capabilities (comparative, metrics and risk), MTO dumps (piping, valves and other) and a comprehensive export of all estimate data to Excel® for direct load into Prism™ Project Manager are built into the system. When a project has been created and an estimate has been fully configured in the system (e.g. COA mapping, processing logic and metrics), the settings can be re-used for each version of the estimate, ensuring a consistent baseline that makes comparisons meaningful. The KbProc™ technology is designed specifically to address Kbase™ project data consolidation challenges and to provide a unified data processing model that promotes data consistency and reliability. The KbProc™ software tool is the interface between Kbase™ and Prism™ Project Manager. Figure 1 illustrates the relationship between the three software tools that make up the solution in this case study.

Figure 1 – Relationship Between Software Tools (Kbase™ → KbProc™ → Prism™)

2.3 Data Management

The Kbase™ estimating package is a file-based system that requires proper file management. A well-thought-out directory structure where all versions of the estimating data reside is essential, and basic data management policies should be established so that a complete file backup is done at least daily. During periods of heavy data input, backups should be done more frequently to minimize the loss of information in the event of a complete system failure.

In large organizations, both KbProc™ and Prism™ Project Manager are deployed as enterprise solutions, with all data stored and managed in a corporate SQL Server database for data integrity and security.
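The daily backup policy for file-based Kbase™ project directories can be automated with a few lines of code. The Python sketch below is a generic illustration, not a Kbase™ or KbProc™ feature: it assumes each Kbase™ project lives in its own directory and zips that directory into a timestamped archive; the function name and directory layout are hypothetical.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_project(project_dir, backup_root, now=None):
    """Write a timestamped zip archive of one project directory.

    Returns the path of the archive that was created. `backup_root` should
    not be inside `project_dir`, or later backups would include earlier ones.
    """
    now = now or datetime.now()
    backup_root = Path(backup_root)
    backup_root.mkdir(parents=True, exist_ok=True)
    stamp = now.strftime("%Y%m%d_%H%M%S")
    base_name = backup_root / f"{Path(project_dir).name}_{stamp}"
    # make_archive adds the ".zip" suffix and returns the archive path as a string.
    return shutil.make_archive(str(base_name), "zip", root_dir=str(project_dir))
```

The timestamp keeps every daily (or more frequent) copy distinct. Scheduling is left to the operating system, for example a daily Windows Task Scheduler or cron job that calls backup_project for each project directory.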
In these enterprise deployments, the estimating and cost data is maintained by the in-house database administrator (DBA), and this single source of data is managed according to corporate procedures in order to achieve corporate goals.

2.4 Workflow

Aspen Kbase™

The estimate is created in Kbase™ either as a single project organized by areas (and possibly sub-areas) or as a set of project files with a meaningful structure that allows the estimating team to develop the baseline dataset. When this work is complete, the user databases are exported from each Kbase™ project file, and the exported Microsoft® Access files are loaded into KbProc™ for additional processing.

TriGem KbProc™

A project file is created in KbProc™ and the COA mapping database is loaded into the system. This COA mapping is created only once, and each estimate created within the project uses it. It is within this set of data that all Kbase™ codes are mapped to your corporate COA.

Data processing rules are specified within KbProc™, as are other costs, whether they are input directly or defined as a functional relationship of other data elements. The ability to specify costs as functions of Kbase™ direct cost data is a powerful capability that enforces the defined relationship between certain data (e.g. electrical freight is 3% of the electrical material cost). These other costs, which are input and grouped at the estimate level, can optionally be distributed to one or more of the individual user databases.

The user database exported from Kbase™ outputs the direct cost data as either a labour or a material cost. The KbProc™ system gives the estimator the flexibility to move costs to other predefined cost elements, for example, to move all the civil labour and material costs to subcontract.
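The two kinds of rules just described, an other cost defined as a function of direct cost and a reassignment of costs to a different cost element, can be expressed in a few lines. The Python sketch below uses the paper's own examples (electrical freight at 3% of electrical material; civil labour and material moved to subcontract), but the data layout and the function name are hypothetical and do not describe how KbProc™ implements its rules.

```python
from collections import defaultdict


def apply_rules(direct_costs, reassign, freight_rate=0.03):
    """Apply two example processing rules to direct costs.

    direct_costs: {(group, element): cost}, where element is "labour" or "material"
    reassign:     {group: new_element}, e.g. {"civil": "subcontract"}
    freight_rate: electrical freight as a fraction of electrical material cost
    """
    out = defaultdict(float)
    # Rule 1: move every cost in a reassigned group to the new cost element.
    for (group, element), cost in direct_costs.items():
        out[(group, reassign.get(group, element))] += cost
    # Rule 2: an "other cost" defined as a function of direct cost data.
    electrical_material = direct_costs.get(("electrical", "material"), 0.0)
    out[("electrical", "freight")] = freight_rate * electrical_material
    return dict(out)


direct = {
    ("civil", "labour"): 40000.0,
    ("civil", "material"): 25000.0,
    ("electrical", "labour"): 12000.0,
    ("electrical", "material"): 10000.0,
}
result = apply_rules(direct, reassign={"civil": "subcontract"})
print(result[("civil", "subcontract")])             # 65000.0
print(round(result[("electrical", "freight")], 2))  # 300.0
```

Because the freight is computed from the direct cost data rather than typed in, it is recalculated automatically whenever a new version of the electrical material cost is loaded.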
Any number of cost elements can be specified in the system, and explicit data blocks can be assigned to these user-defined cost elements.

KbProc™ includes a metric measurement and analysis capability that allows the user to define the exact metric they are interested in calculating. These key indicators highlight areas of the estimate that need attention and provide valuable information during the development and review of the estimate. The metrics offer a basis for comparison as the estimate evolves and form part of the historical record.

Detailed code of account or summary-level comparisons can be done within the system at the estimate or database level, and at any time during the development of the estimate a Monte Carlo simulation can be performed on the cost model to generate a frequency distribution histogram and a cumulative probability distribution. The current calculated estimate forms the basis of the cost model, and the user is required to input a labour and material range for each of the major Kbase™ groups (equipment, piping, civil, steel, instrumentation, electrical, insulation and painting) as well as for the other costs. The risk analysis algorithm runs the user-specified number of iterations; in each iteration it randomly generates values for the variables from a uniform probability distribution, and the collected results are presented in both tabular and graphical form. Major group cost sensitivities and statistical information are generated as well, and contingency calculations allow the estimator to quickly identify the 50/50 point and determine confidence intervals.

The availability of metrics, the comparison utility and the ability to perform cost risk analysis all add to the confidence you have in the estimate you deliver to create your baseline budget.
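The simulation described above can be sketched in a few lines of Python. This is a generic Monte Carlo illustration under stated assumptions, not the KbProc™ algorithm: ranges are assumed to be fractional adjustments to the current labour and material cost of each group, only two groups with made-up values are shown, and sampling is uniform as the text describes. The sorted totals form the empirical cumulative distribution, so the 50/50 point (P50) and other confidence levels can be read off directly; P80 is used below only as an example contingency target.

```python
import random


def simulate(base, ranges, iterations=10_000, seed=1):
    """Monte Carlo cost risk sketch using uniform distributions.

    base:   {group: (labour_cost, material_cost)} from the current estimate
    ranges: {group: ((lab_lo, lab_hi), (mat_lo, mat_hi))} as fractional
            adjustments, e.g. (-0.10, 0.20) means -10% to +20%
    Returns the simulated total costs, sorted ascending.
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    totals = []
    for _ in range(iterations):
        total = 0.0
        for group, (labour, material) in base.items():
            (lab_lo, lab_hi), (mat_lo, mat_hi) = ranges[group]
            total += labour * (1 + rng.uniform(lab_lo, lab_hi))
            total += material * (1 + rng.uniform(mat_lo, mat_hi))
        totals.append(total)
    totals.sort()
    return totals


def percentile(sorted_totals, p):
    """Simple empirical percentile: the sample at cumulative probability p (0-100)."""
    k = min(len(sorted_totals) - 1, int(p / 100 * len(sorted_totals)))
    return sorted_totals[k]


base = {"piping": (400_000.0, 600_000.0), "civil": (250_000.0, 150_000.0)}
ranges = {
    "piping": ((-0.05, 0.25), (-0.10, 0.15)),
    "civil": ((-0.10, 0.30), (-0.05, 0.10)),
}
totals = simulate(base, ranges)
point = sum(lab + mat for lab, mat in base.values())
p50, p80 = percentile(totals, 50), percentile(totals, 80)
print(f"point {point:,.0f}  P50 {p50:,.0f}  P80 {p80:,.0f}  "
      f"contingency to P80 {p80 - point:,.0f}")
```

A histogram of the same totals list gives the frequency distribution; sensitivities could be explored by widening one group's range at a time and observing the change in P50.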
ARES Prism™ Project Manager

When the KbProc™ definition is complete and analysis is done, the estimate is exported to Excel®, and Prism™ Project Manager can directly load the dataset to create the baseline budget for cost control of the project. During the export, the complete dataset is mapped to your corporate COA, which is loaded in Prism™ Project Manager.

Figure 2 illustrates the basic workflow process and software tools described above and used in the case study. The relationship between each workflow element and its software tool is shown by a dotted line. The estimate is created using Kbase™ and KbProc™, and Prism™ Project Manager generates the baseline budget from the estimate and performs all the cost control.

Figure 2 – Basic Workflow Process and Software Tools (workflow: Create Estimate → Baseline Budget → Cost Control; software tools: Kbase™, KbProc™, Prism™ Project Manager)

Although the workflow described above is simple, each of the three software applications plays an integral part in the overall solution. Complex, industry-specific system solutions are very often developed by integrating best-of-breed software applications. Proper assessment of each application and a clear understanding of the integration paradigm are necessary in order to design a complete system that solves your particular problem.

2.5 Solution

The KbProc™ system is architected to be the container for the Kbase™ user databases.
The exported Microsoft® Access mdb files are loaded into the system and stored in a corporate SQL Server database for data integrity and security. The data flow model for the case study solution is shown in Figure 3.

Figure 3 – Solution Dataflow Model (Kbase™ exports its user databases; these and the other costs, in Excel® format, are loaded into KbProc™ and its SQL Server database to be processed, documented and analyzed; the KbProc™ Excel® export is imported into Prism™ Project Manager for the baseline budget and cost control)

Experienced estimators can work with the data in KbProc™ to analyze cost sensitivities, review metrics and perform risk analysis to better understand contingency. To reiterate, custom data processing logic can be specified to distribute costs to user-defined cost elements or to exclude certain blocks of data altogether. Consolidated MTOs can be exported for review, and custom metrics can be defined. As the estimate evolves, the system manages each version, and estimate and database comparisons can be done to quantify the changes. Data records are mapped to the project code of accounts (COA) during creation of the Prism™ export file (Excel®), which can then be loaded directly into PPE or PPM. Multi-billion-dollar capital projects are more easily managed using systems that promote consistent processes, and KbProc™, as part of the larger solution, can assist in these considerable estimating efforts.

2.6 Results

Data processing using the initial release of KbProc™ clearly illustrates the potential utility of such a system and the value of effective data processing. After initial setup of the COA mapping table and data processing criteria, the KbProc™ specialist working with the cost estimate lead can quickly load the Kbase™ user databases into the system and generate reports for review.
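Because every version of the estimate is mapped to the same COA, quantifying the changes between versions reduces to a keyed comparison. A minimal Python sketch, assuming each version has already been summarized to a mapping of COA code to cost; the COA codes and values are hypothetical:

```python
def compare_versions(old, new):
    """Compare two estimate versions summarized by COA code.

    old, new: {coa_code: cost}
    Returns {coa_code: (old_cost, new_cost, change)} for every code whose cost
    changed, including codes present in only one of the two versions.
    """
    changes = {}
    for code in sorted(old.keys() | new.keys()):
        before = old.get(code, 0.0)
        after = new.get(code, 0.0)
        if after != before:
            changes[code] = (before, after, after - before)
    return changes


rev_a = {"3100": 40000.0, "3200": 25000.0, "5200": 10000.0}
rev_b = {"3100": 42000.0, "3200": 25000.0, "5300": 1500.0}
print(compare_versions(rev_a, rev_b))
# {'3100': (40000.0, 42000.0, 2000.0), '5200': (10000.0, 0.0, -10000.0), '5300': (0.0, 1500.0, 1500.0)}
```

Unchanged accounts (3200 here) are omitted, so the report shows only the impact of the revision.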
Other costs are defined in the system, and the user has the ability to define these costs as functional relationships of other costs. Cost sensitivity, metrics and risk analysis tools are part of the system and give the estimator confidence in the dataset being generated. The Prism™ export file is created at the click of a button and can be loaded directly into Prism™ Project Manager. When the original Kbase™ project files are modified, a new estimate is easily created in KbProc™ and the new user databases can be loaded. All the original estimate setup is retained, and comparisons can be done to show the impact of the changes.

2.7 Interpretation

Clearly it is possible to process data more effectively with sound processes and capable tools. Data model and process consistency provide predictable results, and using KbProc™ to format large Kbase™ datasets for load into Prism™ Project Manager can give your organization significant workflow advantages. Other data streams that are developed outside the system, for example Excel® worksheets with owner's costs, are easily formatted and loaded into the KbProc™ SQL database, and all the power and benefits of the solution then apply to them as well.

2.8 Case Study Review of the Seven Suggestions

1. Consistent Coding: The system includes WBS and COA definitions, which are applied to each data record.
2. Naming Conventions: Through its flexible cost element definition and standardized data organization, the system inherently enforces basic naming conventions.
3. Structured Data: All system data is stored in a hierarchically organized database that enforces data integrity through the defined data relationships.
4. Single Source of Data: The single source of data is the database server, where all estimating data resides and data security is enforced.
5. Defined Processes: The system allows the business logic to be defined by letting the user input the data processing rules that are sequentially applied during processing.
6. Separate Data and Presentation: The system uses a standard three-tier application architecture that separates data, logic and presentation.
7. Think Quality: Quality of the system data is achieved by automating the business rules and providing consistent mechanisms for outputting results.

2.9 Conclusions

Industry solutions are developed by carefully analyzing the specific problem you are trying to solve and applying creative thinking to design a system that produces the desired result. An in-depth understanding of technology is needed in order to accurately scrutinize each commercial-off-the-shelf (COTS) software package under consideration as part of a larger solution. More importantly, an in-depth understanding of the problem domain is necessary. Effective data processing can be realized when software systems are thoughtfully deployed within an organization, and the long-term benefits to the organization are significant.

3. Benefits of Using Software Tools for Data Processing

• Standardized systems ensure processing consistency with reproducible results.
• A single source of data and model consistency improve data quality and give you the ability to effectively secure, manage and benefit from your information assets.
• Quality software allows you to manage increasing demands on time with fewer resources, so you are better able to compete.
The system described in the case study above uses a combination of enabling technologies as the cornerstones of the estimating solution. Kbase™, KbProc™ and Prism™ Project Manager each provide key functionality within the overall system, and these software applications share many common attributes, easily summarized as fast, accurate, consistent and powerful.

FAST: Quickly review, analyze and process estimate data.
ACCURATE: Create and deliver detailed estimates with confidence.
CONSISTENT: Structured input and flexible algorithms produce consistent, detailed output.
POWERFUL: A user-defined data processing model ensures consistency and reproducible results.

Well-architected solutions provide the framework for organizing the relationships between data, and they form the basis for further incremental development based on successively more detailed descriptions of the business processes, which ultimately become the logic of the system. These solutions evolve and help organizations realize their corporate objectives and strategies.

4. References

1. Aspen Technology, Inc. (April 2005). Icarus Reference: Icarus Evaluation Engine (IEE) 2004.1.
2. Aspen Technology, Inc. (April 2005). Aspen Kbase 2004.1 User Guide.
3. ARES Corporation (2006). Prism Project Manager Version 5.0b, Build 246, Enterprise Application and Help File.
4. Walpole, Ronald E., Myers, Raymond H. (1989). Probability and Statistics for Engineers and Scientists. Fourth Edition. Macmillan Publishing Company, New York.
5. Rubinstein, Reuven Y. (1981). Simulation and the Monte Carlo Method. John Wiley & Sons, Inc., New York.
6. Dean, Edwin B., Wood, Darrell A., Moore, Arlene A., Bogart, Edward H. (1986). Cost Risk Analysis on Perception of the Engineering Process. International Society of Parametric Analysts.
7. Lorance, Randal B., Wendling, Robert V. (1999). Basic Techniques for Analyzing and Presentation of Cost Risk Analysis. AACE International Transactions.
8. Wilson, Scott F., KiZAN Corporation (1999). Analyzing Requirements and Defining Solution Architectures. Microsoft Press, Penguin Books Canada Limited.
9. Booch, Grady (1994). Object-Oriented Analysis and Design with Applications. Second Edition. Addison Wesley Longman, Inc., Reading, Massachusetts.
10. Date, C.J. (1995). An Introduction to Database Systems. Sixth Edition. Addison-Wesley Publishing Company, Inc., Reading, Massachusetts.
11. Stephens, Ryan K., Plew, Ronald R. (2001). Database Design. Sams Publishing, Indianapolis, IN.
12. Sherman, Rick (2004). Ten Principles for Increasing the Value of Your Data Warehouse and Business Intelligence Investments. Athena IT Solutions White Paper.
13. AACE International (2004). Skills & Knowledge of Cost Engineering. 5th Edition, edited by Dr. Scott J. Amos, PE. Morgantown, WV.

5. Acronyms

BI: Business Intelligence
COA: Code of Accounts
COM: Component Object Model
COTS: Commercial-off-the-Shelf
DBA: Database Administrator
EVMS: Earned Value Management System
KbProc: Knowledge Based Processing
MTO: Material Take Off
PPE: Prism Project Estimator
PPM: Prism Project Manager
SQL: Structured Query Language
WBS: Work Breakdown Structure