ADA evidence analysis manual

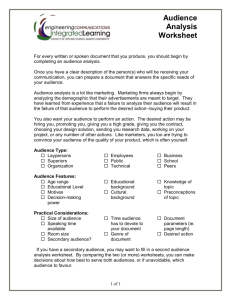
