Objectives and Scope Progress Future Work Expected Impact

advertisement

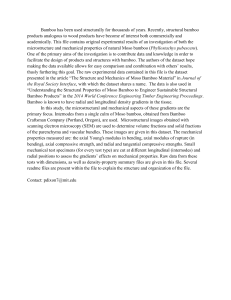

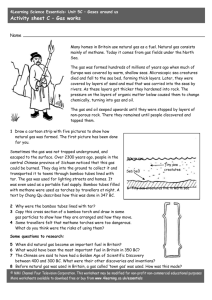

Scott B. Baden University of California, San Diego March 15, 2013 Objectives and Scope The goal of this project is to build a robust translator, Bamboo, that rewrites MPI applications in order to reduce their communication overheads through communication overlap and improved workload assignment. The translator will handle a commonly used subset of the MPI interface, roughly 20 routines, including point-to-point and collective message passing and communicator management. Progress Our translator applies a set of transformations to MPI source code that capture MPI library semantics to (1) analyze dependence information expressed via MPI calls and (2) automatically generate a semantically equivalent task precedence graph representation, which can run asynchronously and automatically hide communication delays. At present Bamboo supports the following point to point calls–Send/Isend, Recv/Irecv and Wait/Waitall. It also supports 3 collectives: Barrier, Broadcast and Reduce. Bamboo translates each collective routine into its component point-to-point calls. We currently use the algorithms described in [2], however, in the future we will provide support to enable 3rd parties to implement their own, i.e. proprietary algorithms tuned to specific hardware structures and also to implement more advanced polyalgorithms. In [1], we showed that Bamboo improved the performance of a 3D iterative solver, the dense matrix multiply kernel (traditional 2D formulation) and the communication avoiding variant (2.5D) on up to 96K cores of a Cray XE6 cluster. We are currently evaluating Bamboo on an Intel Phi cluster. Fig.1 shows preliminary results on Stampede at TACC (UT Austin). We have found that Bamboo enables overlap, which we are unable to realize with Intel MPI. We will report results of other applications in the near future. To increase the programming usability, Bamboo also supports communicator management. Bamboo can now handle communicator splitting, arising, in linear algebra, i.e. when generating row and column processor communicators. Future Work In the future we plan to validate Bamboo on whole applications including multigrid. We will develop support for additional collectives, e.g. AlltoAll, including polyalgorithms that choose one of many algorithms depending on the size of the data and the number of processes. Support for MPI datatypes, in particular, vector with stride, will also be added. Expected Impact Our code transformation techniques will enable existing MPI applications to scale forward to Exascale without having to resort to split-phase coding techniques that entangle implementation policy with application correctness. Restructured applications will have new computational capabilities as a result of their ability to run at scale. Bamboo’s translation techniques apply to a wide range of MPI applications. Though it currently handles C/C++, Bamboo is language-neutral. Bamboo is built using the ROSE infrastructure, and supports other languages such as Fortran. References [1] T. Nguyen, P. Cicotti, E. Bylaska, D. Quinlan and S. B. Baden, Bamboo - Translating MPI applications to a latency-tolerant, data-driven form, Proceedings of the 2012 ACM/IEEE conference on Supercomputing (SC12), Salt Lake City, UT, Nov. 10 -16, 2012. [2] R. Thakur, R. Rabenseifner, and W. Gropp, “Optimization of Collective Communication Operations in MPICH, Intl Journal of High Performance Computing Applications, 19(1):49-66, Spring 2005. 1 A6.@-A6.@"46=>" %#!!" %#!!" *+,-./01" %!!!" *+,-2./01" $#!!" 324566" 7)89" $!!!" """"""B%C" $(" =6;<"16>0?" (a) MIC-MIC mode 6.33789:0"35;7" )%" *,:-*,:"46;<" )*+,-./0" )*+,1-./0" 213455" '#!!" '!!!" &!!" %!!" &" <#$=" !" '%" C5;7"05D/8" )%" $!!"# ,!"# +!"# *!"# )!"# (!"# '!"# &!"# %!"# $!"# !"# <'>=" &" $(" <6=>"16?0@" (&" (b) Host-Host mode <'(=" $" '" "<'&=" '%!!" '$!!" (&" "#3451# '" '&!!" ?@AB*6" "B#C" !" &" #!!" ""B(C" ""B(C" #!!" !" $!!" 324566" $!!!" 7%89" 7$)9" *+,-2./01" $#!!" 7)(9" #!!" B(C" *+,-./01" %!!!" 789:+;" @ABC+D" 7)(9" 678# 9/55# 9/5:# $# %# '# +# $)#&%#)'# (#" ;<9=;<9# (c) Symmetric mode (on up to 32 nodes only) $# %# '# +# $)#&%#)'# >/2?=>/2?# $# %# '# +# $)# &%# -./012# @A551?B4C# (d) Time distribution. P/U: pack/unpack. Figure 1: Weak scaling study of a 7-point iterative Jacobi solver running on up to 64 Intel Phi processors of the Stampede cluster (TACC). The local problem size is 256×256×512P, where P the number of Phi processors. Bamboo was able to overlap communication with computation, thereby improving performance by as much as 36% over hand coded MPI that doesn’t overlap communication with computation. We tested three different variants of the solver. MPI-sync is an MPI code that doesn’t overlap communication with computation. MPI-async is the split-phase variant that attempts to realize overlap with immediate mode communication. This variant could not overlap communication with computation and ran slower than the MPI-sync variant. Bamboo is the result of passing MPI-sync through the Bamboo translator. We ran in 3 different modes. Symmetric mode (Fig. 1(c)) yields higher performance than MIC-MIC mode (Fig. 1(a)), which transparently utilizes the host processors to move data between nodes, and HostHost mode, where the host processor handles all computation and communication. Bamboo significantly increases performance in all modes. The greater the communication overhead, the greater the improvement achieved. Fig. 1(d) compares the relative communication overhead incurred by the 3 different modes. In MIC-MIC mode, communication is costly because messages have to be physically buffered by hosts (MIC-CPU-CPU-MIC). In Host-Host mode (Sandy Bridge processors only), the overhead of communication is small since data is communicated among hosts only. Symmetric mode considers hosts and MICs as independent nodes and utilizes both, thereby increasing performance and decreasing communication overhead. 2 $#