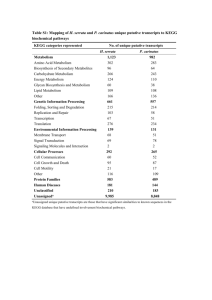

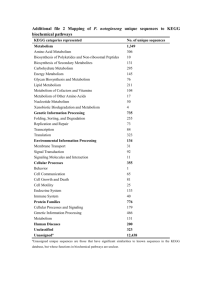

supplementary material Appendix I
