Evaluating Your Communication Tools

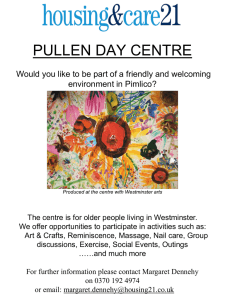
