CASE STUDY

Cray System “Blue Waters” Helps Researchers Develop a Defense Against Damaging Space Weather

Organization

National Center for Supercomputing Applications

Urbana-Champaign, IL

www.ncsa.illinois.edu

Situation

The Sun may be the key to life-sustaining functions here on Earth, but it also poses a significant threat. The Earth is embedded in the Sun’s extended atmosphere and, as a result, the Earth and its technological systems are under constant threat from magnetic storms on the Sun. While most of these storms are not directed toward the Earth, when one does come our way the results can be devastating.

About Blue Waters

Housed at the National Center for Supercomputing Applications (NCSA) at the University of Illinois at Urbana-Champaign and supported by the National Science Foundation, the Blue Waters supercomputer is one of the most powerful supercomputers in the world and the fastest system anywhere on a university campus. Scientists and engineers use the computing and data power of Blue Waters to tackle a wide range of challenging problems, from predicting the behavior of complex biological systems to simulating the evolution of the cosmos. The system is based on Cray® XE6™ and XK7™ technology and uses hundreds of thousands of computational cores to achieve over 13 petaflops of peak performance.

System Overview

• System: Cray XE/XK hybrid supercomputer

• Cabinets: 288

• Peak Performance: 13 PF

• System Memory: 1.5 PB

• XE Compute Nodes: 22,640 AMD 6276 “Interlagos” processors

• XK Compute Nodes: 4,224 NVIDIA® GK110 “Kepler” GPU accelerators

• Interconnect: Gemini

“The time we have on this machine is really

precious and it’s limited, so we need to get the

codes to run as efficiently as possible. Working

with the [team] has been extremely helpful.”

—Homa Karimabadi

Space Physics Group Leader UCSD

Cray Inc.

901 Fifth Avenue, Suite 1000

Seattle, WA 98164

Tel: 206.701.2000

Fax: 206.701.2500

www.cray.com

At one time, solar storms had little detrimental effect on the Earth. But now that our daily lives depend on electronics, satellites and power grids, what happens on the surface of the Sun matters. A strong storm can disable high-voltage transformers, knock satellites out of orbit and cripple communications worldwide. Losses in satellite technology linked to space weather damage run into the billions of dollars.

The Sun consists of a hot, ionized gas called plasma with an embedded magnetic field. The more the plasma roils, the more it moves the magnetic field around, causing extreme tension. At some point the magnetic field lines snap, heating and accelerating the plasma in the process. This gives rise to solar flares and coronal mass ejections (CMEs). CMEs spew billions of tons of matter and electromagnetic radiation into space. A flare can easily span 10 times the Earth’s diameter and release energy on the order of 160 million megatons of TNT equivalent. When CMEs are released into the solar wind (the medium between the Sun and the Earth), the result is a phenomenon known as space weather.
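To put the flare figures above in more familiar units, the short sketch below converts them. It assumes the conventional definition of one megaton of TNT as 4.184 × 10^15 joules and a mean Earth diameter of about 12,742 km. Neither constant appears in the original text, and the function names are purely illustrative.

```python
# Both constants are assumptions, not values taken from the case study.
JOULES_PER_MEGATON_TNT = 4.184e15   # conventional TNT-equivalent definition
EARTH_DIAMETER_KM = 12_742          # approximate mean diameter of the Earth


def flare_energy_joules(megatons):
    """Convert an energy in megatons of TNT equivalent to joules."""
    return megatons * JOULES_PER_MEGATON_TNT


def flare_span_km(earth_diameters):
    """Convert a length given in Earth diameters to kilometers."""
    return earth_diameters * EARTH_DIAMETER_KM


energy = flare_energy_joules(160e6)   # 160 million megatons
span = flare_span_km(10)              # 10 Earth diameters
print(f"{energy:.2e} J, {span:,} km")
```

Under these assumptions the flare energy comes to roughly 6.7 × 10^23 joules and the span to about 127,000 km.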

Mitigating the impact of solar storms is the focus for space physics group leader Homa

Karimabadi from the University of California, San Diego and his research team.

Challenge

The solution — and the challenge — to coping with the effect of solar storms on the Earth

and its technological systems is forecasting this space weather.

A given CME can take anywhere from one to five days to reach the Earth, providing sufficient time to take evasive action. However, during solar maximum (the period of greatest activity in the Sun’s 11-year solar cycle), there can be more than three solar storms per day. “You can’t just shut off the power grids, the electronics, and the satellites every time there is a storm. We really need to be able to judge the severity of a given storm, its impact, and its location of impact,” says Karimabadi.
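The one-to-five-day transit window quoted above implies a range of average CME speeds. The sketch below works that out under two simplifying assumptions that are not in the original text: the CME travels one astronomical unit (about 1.496 × 10^8 km), and it does so at constant speed. The function name is illustrative.

```python
# Assumed Sun-Earth distance: one astronomical unit, in kilometers.
AU_KM = 1.495978707e8
SECONDS_PER_DAY = 86_400


def mean_speed_km_s(transit_days):
    """Average speed needed to cover 1 AU in the given number of days."""
    return AU_KM / (transit_days * SECONDS_PER_DAY)


fast = mean_speed_km_s(1)   # one-day transit
slow = mean_speed_km_s(5)   # five-day transit
print(f"fast CME: {fast:.0f} km/s, slow CME: {slow:.0f} km/s")
```

On these assumptions, a one-day arrival corresponds to an average of roughly 1,700 km/s and a five-day arrival to roughly 350 km/s, which is why the available warning time varies so much from storm to storm.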

The challenge then becomes to develop accurate space weather forecasting models that

can be put to use in real-time situations.

While the Earth has a built-in defense called the magnetosphere that deflects most of

the energetic particles and radiation coming from the Sun, a process called magnetic

reconnection allows some solar wind to penetrate the planet’s protective shield. In order

to forecast space weather, the research team must simulate and understand the physics

behind this magnetic reconnection, a process that occurs on electron scales but has

global consequences.

Existing global magnetospheric codes can model the interaction of the solar wind with

the Earth’s magnetosphere, says Karimabadi, but the ultimate goal is to run these codes

in real time based on measured properties of a given CME heading toward the Earth and

predict the geographical location and the severity of the impact. An added challenge

is that the current models lack certain details, including the proper physics of magnetic

reconnection essential for developing accurate forecast models. Thus much of his team’s

effort is going toward developing models of magnetic reconnection that can be inserted

into the global codes.

The types of simulations required by this kind of research are some of the most challenging

in terms of the data and memory requirements.

©2014 Cray Inc. All rights reserved. Cray is a registered trademark of Cray Inc.

All other trademarks mentioned herein are the properties of their respective owners. 20140107_V1KJL


Solution

A petascale computing allocation received through the National Science Foundation enabled Karimabadi and his research team to prepare

their codes for extreme-scale supercomputers and tap into the computing and data power of Blue Waters. “We wanted to know if we

changed the parameters, such as system size, would the physics change,” Karimabadi says, “and if it did, could we develop scaling laws to

extrapolate the results to real systems in nature.”
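As a rough illustration of what developing a scaling law can involve, the sketch below fits a power law to a quantity measured at several system sizes and then extrapolates to a much larger size. Everything in it is hypothetical: the data are synthetic values generated from a known law, the power-law form itself is an assumption, and nothing here reflects the team's actual results or method.

```python
import math


def fit_power_law(sizes, values):
    """Least-squares fit of values ~ prefactor * sizes**exponent in log-log space."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in values]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope of the best-fit line through the log-log points.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    exponent = covariance / variance
    prefactor = math.exp(mean_y - exponent * mean_x)
    return prefactor, exponent


# Synthetic data only: generated from value = 2 * size**0.5, not from any simulation.
sizes = [10.0, 20.0, 40.0, 80.0, 160.0]
values = [2.0 * size ** 0.5 for size in sizes]

prefactor, exponent = fit_power_law(sizes, values)
# Extrapolate the fitted law to a system far larger than any in the data.
predicted = prefactor * 1000.0 ** exponent
print(f"exponent={exponent:.3f}, prefactor={prefactor:.3f}, predicted={predicted:.2f}")
```

If the fitted exponent stays stable as larger simulated systems are added, that is some evidence the law may be extrapolated. If it drifts, the physics is changing with system size, which is exactly the question Karimabadi raises.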

The Cray® XE™/XK™ hybrid “Blue Waters” supercomputer made it possible for the team to push their simulations to the largest size possible on

any supercomputer today. But even more, technical collaborations between the NCSA Blue Waters team, Cray, the San Diego Supercomputer

Center and Los Alamos National Laboratory helped the team optimize their codes. One collaboration resulted in increased performance of

their H3D code — a global code that treats electrons as fluids and ions as kinetic particles. Another resulted in improvements to VPIC — the

kinetic code that models individual particles for the inclusion of electron physics — as a part of the sustained petascale performance (SPP)

optimization effort.

Prior to petascale computing, almost all of the global simulations were based on fluid

models. But Blue Waters has given the team new options. With hundreds of thousands of

computational cores, the system is enabling global simulations that include important ion

kinetic physics using H3D code and local simulations that resolve both ion and electron

kinetic physics using the code VPIC.

The performance gains took some work, though. NCSA research programmer Kalyana

Chadalavada implemented the use of more fused multiply-add (FMA) instructions, while

Jim Kohn of Cray eliminated some redundant stores to temporary variables and reordered

some independent Streaming SIMD Extensions (SSE) instructions for better resource

utilization on the chip. Chadalavada says they were able to achieve an improvement of

12–18 percent by using more FMA instructions, as well as another 5 percent improvement

by eliminating some redundancy and rearranging some parts of the code.
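A fused multiply-add computes a × b + c as a single operation with one rounding step, instead of a multiply followed by a separate add. Particle updates such as "new position = velocity × time step + old position" have exactly this shape, which is why they are natural candidates. The sketch below only emulates the arithmetic semantics of FMA using exact rational numbers. It does not issue hardware FMA instructions and says nothing about the speedups quoted above, and the function names are illustrative.

```python
from fractions import Fraction


def mul_then_add(a, b, c):
    """Separate multiply and add: the product is rounded, then the sum is rounded."""
    return a * b + c


def fma_emulated(a, b, c):
    """Emulated fused multiply-add: exact a*b + c, rounded once at the end."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))


# Inputs chosen so that the intermediate product cannot be stored exactly.
a = 1.0 + 2.0 ** -30
b = 1.0 - 2.0 ** -30
c = -1.0

two_step = mul_then_add(a, b, c)
fused = fma_emulated(a, b, c)
print(two_step, fused)
```

With these inputs the two-step version loses the small term when the product is rounded, while the fused version keeps it. On hardware, the practical benefit the text describes is that one instruction does the work of two.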

“At scale, a significant portion of the run time is spent transferring particle data between

processors. Changing the relevant communication routines by, for example, overlapping

more communication with computation, could reduce the total simulation time

considerably,” Chadalavada says.
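The idea of overlapping communication with computation can be sketched as follows: start the particle exchange, update the particles that do not depend on it while the transfer is in flight, and wait for the exchange only when its data is needed. This is a minimal single-machine illustration in which a background thread and a short sleep stand in for the network transfer. A production particle code would more likely use non-blocking message passing, and every name here is hypothetical rather than taken from VPIC or H3D.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def exchange_particles(outgoing):
    """Stand-in for sending boundary particles to a neighbor and receiving some back."""
    time.sleep(0.01)          # pretend network latency
    return list(outgoing)     # echo the particles back for demonstration


def push_interior(particles, dt):
    """Advance interior particles: each is a (position, velocity) pair."""
    return [(x + v * dt, v) for x, v in particles]


def step_overlapped(interior, boundary, dt):
    """One time step with the exchange running while interior particles are pushed."""
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(exchange_particles, boundary)  # start the transfer
        moved = push_interior(interior, dt)                  # compute meanwhile
        received = pending.result()                          # wait only when needed
    return moved + received


result = step_overlapped([(0.0, 1.0)], [(5.0, -1.0)], 0.5)
print(result)
```

The design point is the ordering inside `step_overlapped`: the transfer is launched before the computation rather than after it, so the time spent waiting on the exchange is hidden behind useful work whenever the computation takes at least as long as the transfer.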

The holy grail of space plasmas and space physics would be to figure out how to model magnetic reconnection, which we now know is strongly affected by electron kinetic effects, in a global code where it is not possible to resolve those effects. Capturing the essence of magnetic reconnection in this way is the key to precise prediction of space weather, and Karimabadi’s team is starting to see that breakthrough take shape.

In fact, their work with NCSA and Blue Waters has already produced a major revolution for their discipline, says Karimabadi. In a fluid model, there aren’t any particles, so there aren’t things bouncing off or deflecting. There is nothing to generate waves, so a lot of important information is lost.

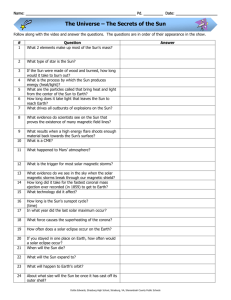

MODELING SPACE WEATHER: Magnetic field lines as visualized

by the line integral convolution technique. The color is based on the

magnitude of the magnetic field. The “closed” lines along the magnetopause current sheets are 3-D flux ropes.

“There have long been speculations about how the electron and ion kinetic effects affect

details of the reconnection process and its global consequences. Many of the ideas can

now be tested and new ideas and questions formulated to make further progress,”

Karimabadi says. “Now that we have been able to go beyond fluid models, which ignore

details on small scales, we have uncovered new and unexpected effects and are

finding ample evidence that physical processes occurring on small scales have global

consequences.”

Overall, the team has discovered new regimes of reconnection and scaling relations with system size and parameters. “It’s almost like you

have always had really poor eyesight and someone gave you glasses for the first time. You start to see a lot of details that were completely

absent in the previous simulations,” says Karimabadi.

For more information on NCSA’s space weather work, visit www.ncsa.illinois.edu/news/story/so_much_detail.

Content courtesy of National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign