d - Zi Wang

advertisement

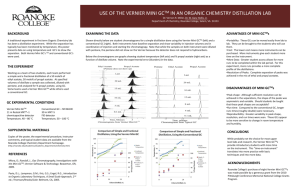

Scalable Inference for Logistic-Normal Topic Models Jianfei Chen, Jun Zhu, Zi Wang, Xun Zheng, Bo Zhang chenjf10@mails.tsinghua.edu.cn, dcszj@mail.tsinghua.edu.cn, wangzi10@mails.tsinghua.edu.cn, xunzheng@cs.cmu.edu, dcszb@mail.tsinghua.edu.cn Fast Approximate Sampling Problem: Discovering latent semantic structures from data eciently, particularly inference of non-conjugate logistic-normal topic models. Topic Model Background: Integrate out Φ. Gibbs sampling for marginalized distribution: Fast approximate sampling method to draw PG(n, ρ) samples, reducing the time complexity from O(n) to O(1). a qd zdn wdn N D Fk b k p(zdn For Existing methods for logistic-normal topic models: − = 1, Z¬n , W¬dn )e wdn Ck,¬n + βwdn k ηd e ∝ PV PV j j=1 βj j=1 Ck,¬n + k 2 λk (η k 2 d d) N (ηd |µ, σ ) 2 k p(λd |Z, W, η) k 2 λk (η k d d) ∝ exp − p(λ |N , 0) d d 2 k Nd from PG(1, ηd ). = k k 2 N (γd , (τd ) ) = PG k k λd ; Nd , ηd Σ|κ, W ∼ IW(Σ; κ, W inapplicability to real world for large scale of data Contribution: p(µ, Σ|η, Z, W) ∝ p0 (µ, Σ) 140 150 160 z ∼ PG(z ; m, ρ) 170 p(ηd |µ, Σ) = 1500 0 10 0 2200 2000 1800 1. draw vector ηd ∼ N (µ, Σ) and compute the topic mixing k ηd e k proportion θd : θd = PK ηj j=1 e d 2. for each word n (1 ≤ n ≤ Nd ): (a) draw topic zdn ∼ Mult(θd ) (b) draw word wdn ∼ Mult(Φzdn ), Φk ∼ Dir(β) Posterior distribution p(η, Z, Φ|W) ∝ p0 (η, Z, Φ)p(W|Z, Φ) • Non-conjugacy between normal prior and logistic likelihood. • Variational approximate methods -> strict factorization assump- tions. Parallel Implementation 20 No communication is needed infering ηd and λd . Broadcasting the global variables µ and Σ to every machine after each iteration. For each iteration: • For each document d: k p(zdn = |Z¬dn , W, η) • For each document d: for each topic k: ηdk ∼ N (γdk , (τdk )2 ); λkd ∼ PG λkd ; Nd , ηd k • µ, Σ ∼ 10 10 2 10 3 10 time (s) 4 10 1000 1 5 10 4 16 64 256 1024 a • Scalability analysis 4 x 10 15 Fixed M=8 Linearly scaling M Ideal 10 1.2M 2.4M 3.6M #docs 4.8M 6M - M = 8, 16, 24, 32, 40 each machine processes 150K documents. - Wikipedia data set with K = 500, as a practical problem. - As we pour in the same proportion of data and machines, the training time is almost kept constant. Parallel gCTM enjoys nice scalability. • We present a scalable Gibbs sampling algorithm for logistic-normal topic models. 10 vCTM gCTM (M=1, P=1) gCTM (M=1, P=12) 40 60 K 10 80 100 20 40 60 K 80 100 10 gCTM (M=1, P=12) gCTM (M=40, P=480) Y!LDA (M=40, P=480) 4000 • Expeirmental results show signicant improvement in time e- ciency over existing variational methods, with slightly better perplexity. • The algorithm enjoys excellent scalability, suggesting the ability to 6 4500 extract large structures from massive data. 4 3500 10 0 200 400 600 800 1000 K Selected References 2 10 2500 • Build upon state-of-the-art distributed sampler [3] 0 0 0 0 N IW(µ0 , ρ , κ , W ) 3 2 3000 For each word n (1 ≤ n ≤ Nd ): zdn ∼ 10 1500 1 10 3 Conclusion time (s) • For each document d: k ρk C z k 1−z k ρk d) d e d 1 dn dn = (e ρk ρk ρk N 1+e d 1+e d (1+e d ) d 1400 10 0 Γ(βi ) iP Γ( i βi ) perplexity QNd n=1 2 −1 PD (Nd −L) d=1 vCTM gCTM (M=1, P=1) gCTM (M=1, P=12) Q k (ρk )2 λ k R − d d κk ρ ∞ k 1 2 = e d d 0 e p(λk |N , 0)dλ d d d 2Nd 1600 4 1400 is the partition function of Dirichlet distribution. b C t is the number of times topic k being assigned to the term t over the whole k corpus. c With κk = C k − N /2 and p(λk |N , 0) the Polya-Gamma distribution[2] d d d d d PG(Nd , 0), likelihood `(ηdk |ηd¬k ) is 1800 5 Measurement: Perplexity Logistic-Normal Topic Models a δ(β) = 1 ηkd ∼ P(ηkd | ηd¬ k, Z, W ) 1600 CTM Generating Process[1]: 2 T 0 −1 180 1 Data Sets: NIPS paper abstracts, 20Newsgroups, NYTimes (New York Times) corpora, and the Wikipedia corpus. perplexity • Ecient parallel implementation. 2000 K=50 K=100 4000 • ρkd = −4.19, Cdk = 19, Nd = 1155, µkd = 0.40, σd2 = 0.31, ζ = 5.35. 0 0 0 0 N IW(µ0 , ρ , κ , W ) 2000 gCTM (S=1) gCTM (S=2) gCTM (S=4) gCTM (S=8) gCTM (S=16) gCTM (S=32) vCTM 1200 2000 130 2000 • frequency of f (z) with z ∼ PG(m, ρ); frequency of samples from ηdk ∼ p(ηdk |ηd¬k , Z, W). d • A partially collapsed gibbs sampling algorithm; 6000 • Qualitative analysis on perplexity and training time. ), µ|Σ, µ0 , ρ ∼ N (µ; µ0 , Σ/ρ) Y 8000 QD QNd Perp(M) = ( d=1 i=L+1 p(wdi |Mwd,1:L )) where M denotes the model parameters, wd,1:L is the observed part of document d. • Fully-Bayesian models −1 m=1 m=2 m=4 m=8 m=n (exact) Experiments k λd : draw 1 k ηd k p(wdn |zdn For ηdk : p(ηdk |ηd¬k , Z, W, λkd ) ∝ exp 1.5 0 120 = 1|Z¬n , wdn , W¬dn , η) ∝ k k κd ηd m=1 m=2 m=4 m=8 m=n (exact) 0.5 techniquec CTM) • limitations: subject to high computational cost, inaccuracy and j ηd ab N (ηd |µ, Σ) • Sampling Logistic-Normal parameters with data augmentation • Logistic-normal Topic Models (including CTM, DTM, innite • variational inference, based on mean-eld assumption • Sampling topic assignments K For each document n (1 ≤ n ≤ Nd ): 1. draw topic mixing proportion θd ∼ Dir(α) 2. for each word n: (a) draw topic zdn ∼ M ult(θd ) (b) draw word wdn ∼ M ult(Φzdn ), Φk ∼ Dir(β) d=1 n=1 j=1 e 10000 time (s) k=1 e PK x 10 2 zdn ηd Nd D Y Y 2.5 2500 gCTM (S=1) gCTM (S=2) gCTM (S=4) gCTM (S=8) gCTM (S=16) gCTM (S=32) vCTM 4 perplexity ∝ δ(Ck + β) δ(β) j=1 e j ηd • Sensitivity analysis on hyper-parameters. 2500 time (s) • Latent Dirichlet Allocation (LDA) K Y e PK N (ηd |µ, Σ) Frequency d=1 n=1 zdn ηd Frequency p(η, Z|W) ∝ p(W|Z) Nd D Y Y Experiments perplexity Gibbs Sampling with Data Augmentation perplexity Introduction 10 gCTM (M=1, P=12) gCTM (M=40, P=480) Y!LDA (M=40, P=480) 200 400 600 800 1000 K • Eciency vCTM becomes impractical when the data size reaches 285K. gCTM can handle larger data sets with considerable speed. Training time of vCTM and gCTM (M = 40) data set D K vCTM gCTM NIPS 1.2K 100 1.9 hr 8.9 min 20NG 11K 200 16 hr 9 min NYTimes 285K 400 N/A* 0.5 hr Wiki 6M 1000 N/A* 17 hr *not nished within 1 week. [1] D. Blei and J. Laerty. Correlated topic models. In Advances in Neural Information Processing Systems (NIPS), 2006. [2] N. G. Polson, J. G. Scott, and J. Windle. Bayesian inference for logistic models using Polya-Gamma latent variables. arXiv:1205.0310v2, 2013. [3] A. Ahmed, M. Aly, J. Gonzalez, S. Narayanamurthy, and A. Smola. Scalable inference in latent variable models. In International Conference on Web Search and Data Mining (WSDM), 2012. [4] D. Newman, A. Asuncion, P. Smyth, and M. Welling. Distributed algorithms for topic models. Journal of Machine Learning Research, (10):18011828, 2009. [5] J. Paisley, C. Wang, and D. Blei. The discrete innite logistic normal distribution for mixed-membership modeling. In International Conference on Articial Intelligence and Statistics (AISTATS), 2011. [6] D. Blei and J. Laerty. Dynamic topic models. In International Conference on Machine Learning (ICML), 2006. [7] M.I. Jordan, Z. Ghahramani, T.S. Jaakkola, and L.K. Saul. An introduction to variational methods for graphical models. Machine learning, 37(2):183233, 1999.