Process design 2 – analysis

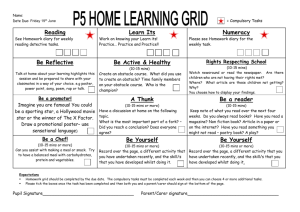
