
STAT511 — Spring 2014


Lecture Notes

Chapter 5

February 19, 2014

Joint Probability Distributions and Random Samples

5.1 Jointly Distributed Random Variables

Chapter Overview

• Jointly distributed rvs

– Joint mass function, marginal mass function for discrete rvs

– Joint density function, marginal density function for continuous rvs

– Independent random variables

• Expectation, covariance and correlation between two rvs

– Expectation

– Covariance

– Correlation

– Interpretations

• Statistics and their distributions

• Distribution of the sample mean

• Distribution of a linear combination

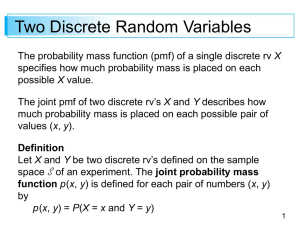

Joint Mass Function of Two Discrete RVs

Definition 1. Let X and Y be two discrete rvs defined on the sample space S of a random experiment. The joint probability mass function p(x, y) is defined for each pair of numbers (x, y) by

$$p(x, y) = P(X = x \text{ and } Y = y)$$

Let A be any set consisting of pairs of (x, y) values. Then the probability $P[(X, Y) \in A]$ is obtained by summing the joint pmf over the pairs in A:

$$P[(X, Y) \in A] = \sum\sum_{(x, y) \in A} p(x, y)$$

Example of Joint PMF

Example 5.1.1 (Exercise 5.3). A market has two checkout lines. Let X be the number of customers at the express checkout line at a particular time of day, and let Y denote the number of customers in the super-express line at the same time. The joint pmf of (X, Y) is given below (columns give x, rows give y):

| y \ x | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 0 | 0.08 | 0.06 | 0.05 | 0.00 | 0.00 |
| 1 | 0.07 | 0.15 | 0.04 | 0.03 | 0.01 |
| 2 | 0.04 | 0.05 | 0.10 | 0.04 | 0.05 |
| 3 | 0.00 | 0.04 | 0.06 | 0.07 | 0.06 |

What is P(X = 1, Y = 0)? What is P(X = 1, Y > 2)? What is P(X = Y)?
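Applied to Example 5.1.1, the rule for $P[(X, Y) \in A]$ is just a sum over table cells. The minimal Python sketch below stores the pmf as a dictionary keyed by (x, y) and describes each event A by a predicate; the dictionary layout and the helper name `prob` are illustrative choices, not part of the notes.

```python
# Joint pmf of Example 5.1.1: table rows are y = 0..3, columns are x = 0..4.
rows = {
    0: [0.08, 0.06, 0.05, 0.00, 0.00],
    1: [0.07, 0.15, 0.04, 0.03, 0.01],
    2: [0.04, 0.05, 0.10, 0.04, 0.05],
    3: [0.00, 0.04, 0.06, 0.07, 0.06],
}
pmf = {(x, y): p for y, row in rows.items() for x, p in enumerate(row)}


def prob(in_a):
    """P[(X, Y) in A]: sum p(x, y) over the pairs for which in_a(x, y) is True."""
    return sum(p for (x, y), p in pmf.items() if in_a(x, y))


p1 = prob(lambda x, y: x == 1 and y == 0)  # P(X = 1, Y = 0)
p2 = prob(lambda x, y: x == 1 and y > 2)   # P(X = 1, Y > 2)
p3 = prob(lambda x, y: x == y)             # P(X = Y)
print(round(p1, 2), round(p2, 2), round(p3, 2))  # 0.06 0.04 0.4
```

For instance, P(X = Y) adds the four diagonal cells: 0.08 + 0.15 + 0.10 + 0.07 = 0.40.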

Purdue University

Chapter5_print.tex; Last Modified: February 19, 2014 (W. Sharabati)


Marginal Probability Mass Function

Definition 2. The marginal probability mass functions of X and Y, denoted $p_X(x)$ and $p_Y(y)$, respectively, are given by

$$p_X(x) = P(X = x) = \sum_y p(x, y), \qquad p_Y(y) = P(Y = y) = \sum_x p(x, y)$$

Example of Marginal Probability Mass Function

Example 5.1.1 Now let's find the marginal mass functions.

| y \ x | 0 | 1 | 2 | 3 | 4 | $p_Y(y)$ |
|---|---|---|---|---|---|---|
| 0 | 0.08 | 0.06 | 0.05 | 0.00 | 0.00 | 0.19 |
| 1 | 0.07 | 0.15 | 0.04 | 0.03 | 0.01 | 0.30 |
| 2 | 0.04 | 0.05 | 0.10 | 0.04 | 0.05 | 0.28 |
| 3 | 0.00 | 0.04 | 0.06 | 0.07 | 0.06 | 0.23 |
| $p_X(x)$ | 0.19 | 0.30 | 0.25 | 0.14 | 0.12 | 1.00 |

What is P(X = 3)? What is P(Y = 2)?
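The row and column sums can be computed the same way in code. In this sketch (the list-of-rows layout is an illustrative choice), $p_X$ adds down each column and $p_Y$ adds across each row, which reproduces the margins of the table and answers both questions.

```python
# Joint pmf of Example 5.1.1: rows are y = 0..3, columns are x = 0..4.
table = [
    [0.08, 0.06, 0.05, 0.00, 0.00],
    [0.07, 0.15, 0.04, 0.03, 0.01],
    [0.04, 0.05, 0.10, 0.04, 0.05],
    [0.00, 0.04, 0.06, 0.07, 0.06],
]

# p_X(x) = sum over y of p(x, y): add down each column.
p_x = [sum(row[x] for row in table) for x in range(len(table[0]))]
# p_Y(y) = sum over x of p(x, y): add across each row.
p_y = [sum(row) for row in table]

print([round(p, 2) for p in p_x])  # [0.19, 0.3, 0.25, 0.14, 0.12]
print([round(p, 2) for p in p_y])  # [0.19, 0.3, 0.28, 0.23]
print(round(p_x[3], 2), round(p_y[2], 2))  # 0.14 0.28
```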

Joint Probability Density Function of Two Continuous RVs

Definition 3. Let X and Y be continuous rv's. Then f(x, y) is the joint probability density function for X and Y if for any two-dimensional set A

$$P[(X, Y) \in A] = \iint_A f(x, y)\,dx\,dy$$

In particular, if A is the two-dimensional rectangle $\{(x, y) : a \le x \le b,\ c \le y \le d\}$, then

$$P[(X, Y) \in A] = \int_a^b \int_c^d f(x, y)\,dy\,dx$$

Geometrically, $P[(X, Y) \in A]$ is the volume under the density surface above the region A.
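The "volume under the surface" interpretation suggests a direct numerical check. The sketch below uses a two-dimensional midpoint rule; the helper `rect_prob`, the grid size, and the choice of density $f(x, y) = 4xy$ on the unit square (the density of Example 5.2.2 later in these notes) are assumptions made for illustration. For this density the exact probability of the square $[0, 0.5] \times [0, 0.5]$ is $(0.5^2)(0.5^2) = 0.0625$, and the total volume is 1.

```python
def rect_prob(f, a, b, c, d, n=400):
    """Approximate P(a <= X <= b, c <= Y <= d), the volume under f above the
    rectangle, with a 2-D midpoint rule on an n-by-n grid of cells."""
    hx, hy = (b - a) / n, (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx          # midpoint of the i-th column of cells
        for j in range(n):
            y = c + (j + 0.5) * hy      # midpoint of the j-th row of cells
            total += f(x, y)
    return total * hx * hy              # sum of f * (cell area)


def f(x, y):
    # Assumed joint pdf: f(x, y) = 4xy on the unit square, 0 elsewhere.
    return 4 * x * y if 0 < x < 1 and 0 < y < 1 else 0.0


print(round(rect_prob(f, 0, 0.5, 0, 0.5), 6))  # 0.0625
print(round(rect_prob(f, 0, 1, 0, 1), 6))      # 1.0
```

Because 4xy is linear in each variable separately, the midpoint rule happens to be exact here; for other densities it is only an approximation that improves as n grows.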


Marginal Probability Density Function

Definition 4. The marginal probability density functions of X and Y, denoted $f_X(x)$ and $f_Y(y)$, respectively, are given by

$$f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy, \quad -\infty < x < \infty$$

$$f_Y(y) = \int_{-\infty}^{\infty} f(x, y)\,dx, \quad -\infty < y < \infty$$
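Each marginal density integrates the other variable out. As an illustrative check (not part of the notes), the sketch below approximates $f_X(x)$ with a midpoint rule for the density $f(x, y) = \frac{6}{5}(x + y^2)$ of Example 5.1.6 below and compares it with the closed form $f_X(x) = \frac{6}{5}x + \frac{2}{5}$ quoted there; `marginal_x` is a hypothetical helper.

```python
def f(x, y):
    """Joint pdf of Example 5.1.6: f(x, y) = (6/5)(x + y^2) on 0 <= x <= 1, 0 < y < 1."""
    if 0 <= x <= 1 and 0 < y < 1:
        return 1.2 * (x + y * y)
    return 0.0


def marginal_x(x, n=2000):
    """Approximate f_X(x) = integral of f(x, y) dy by the midpoint rule.

    f vanishes outside 0 < y < 1, so integrating over (0, 1) covers the whole real line.
    """
    h = 1.0 / n
    return sum(f(x, (j + 0.5) * h) for j in range(n)) * h


for x in (0.0, 0.5, 1.0):
    closed_form = 1.2 * x + 0.4  # f_X(x) = (6/5)x + 2/5
    print(x, round(marginal_x(x), 6), round(closed_form, 6))
```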

Independent Random Variables

Definition 5 (Independence between X and Y). Two random variables X and Y are said to be independent if for every pair of x and y values,

$$p(x, y) = p_X(x) \cdot p_Y(y)$$

when X and Y are discrete, or

$$f(x, y) = f_X(x) \cdot f_Y(y)$$

when X and Y are continuous.

If X and Y are independent, then

$$P(a < X < b,\ c < Y < d) = P(a < X < b) \cdot P(c < Y < d)$$

If the factorization fails for at least one pair (x, y), then X and Y are dependent.

Example of Independence

Example 5.1.2 X follows an exponential distribution with λ = 2, Y follows an exponential distribution with λ = 3, and X and Y are independent. Find f(x, y).

$$f(x, y) = f_X(x) \cdot f_Y(y) = 2e^{-2x} \cdot 3e^{-3y} = 6e^{-(2x + 3y)}, \quad x \ge 0,\ y \ge 0$$
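A small simulation can illustrate what the factorization means for probabilities. This sketch is only an illustration: the seed, the sample size, and the event {X < 1, Y < 0.5} are arbitrary choices. Python's `random.expovariate` takes the rate λ as its argument, so it matches the parameterization $\lambda e^{-\lambda x}$ used above.

```python
import math
import random

random.seed(511)  # arbitrary fixed seed so the run is reproducible

# Example 5.1.2: X ~ Exp(rate 2) and Y ~ Exp(rate 3), generated independently.
n = 200_000
pairs = [(random.expovariate(2), random.expovariate(3)) for _ in range(n)]

# Empirical estimate of P(X < 1, Y < 0.5).
est = sum(1 for x, y in pairs if x < 1 and y < 0.5) / n

# Under independence the probability factors: P(X < 1) * P(Y < 0.5)
# = (1 - e^{-2}) * (1 - e^{-1.5}).
exact = (1 - math.exp(-2)) * (1 - math.exp(-1.5))
print(round(est, 3), round(exact, 3))
```

With this many draws, the empirical frequency should agree with $(1 - e^{-2})(1 - e^{-1.5}) \approx 0.672$ to about two decimal places.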

Example 5.1.3 Toss a fair coin and a fair die. Let X = 1 if the coin shows heads and X = 0 if it shows tails, and let Y be the outcome of the die. If X and Y are independent, find p(x, y), and find the probability that the outcome of the die is greater than 3 and the coin shows heads.

| y \ x | 0 | 1 |
|---|---|---|
| 1 | 1/2 · 1/6 | 1/2 · 1/6 |
| 2 | 1/2 · 1/6 | 1/2 · 1/6 |
| 3 | 1/2 · 1/6 | 1/2 · 1/6 |
| 4 | 1/2 · 1/6 | 1/2 · 1/6 |
| 5 | 1/2 · 1/6 | 1/2 · 1/6 |
| 6 | 1/2 · 1/6 | 1/2 · 1/6 |

$$P(X = 1, Y > 3) = P(X = 1) \cdot P(Y > 3) = \frac{1}{2} \cdot \frac{3}{6} = \frac{1}{4}$$
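Because the answer is an exact fraction, Python's `fractions.Fraction` is a convenient way to check it. The sketch builds the joint pmf from the two marginals under the stated independence assumption and sums the cells with x = 1 and y > 3.

```python
from fractions import Fraction
from itertools import product

# Example 5.1.3: X = 1 for heads, 0 for tails; Y = face of a fair die.
p_x = {0: Fraction(1, 2), 1: Fraction(1, 2)}
p_y = {y: Fraction(1, 6) for y in range(1, 7)}

# Independence: p(x, y) = pX(x) * pY(y) for every pair.
joint = {(x, y): p_x[x] * p_y[y] for x, y in product(p_x, p_y)}

answer = sum(p for (x, y), p in joint.items() if x == 1 and y > 3)
print(joint[(1, 4)], answer)  # 1/12 1/4
```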

Examples Continued

Example 5.1.4 Given the following p(x, y), are X and Y independent?

| y \ x | 0 | 1 | 2 | 3 | 4 | $p_Y(y)$ |
|---|---|---|---|---|---|---|
| 0 | 0.04 | 0.08 | 0.16 | 0.04 | 0.08 | 0.4 |
| 1 | 0.03 | 0.06 | 0.12 | 0.03 | 0.06 | 0.3 |
| 2 | 0.01 | 0.02 | 0.04 | 0.01 | 0.02 | 0.1 |
| 3 | 0.02 | 0.04 | 0.08 | 0.02 | 0.04 | 0.2 |
| $p_X(x)$ | 0.1 | 0.2 | 0.4 | 0.1 | 0.2 | 1.00 |
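Checking independence means comparing every cell with the product of its margins. In the sketch below, `independent` is a hypothetical helper; it compares with a small tolerance (`math.isclose`) because decimal probabilities such as 0.1 are not stored exactly in binary floating point, so exact `==` comparisons can fail even when the factorization holds.

```python
import math

# Example 5.1.4: rows are y = 0..3, columns are x = 0..4.
table = [
    [0.04, 0.08, 0.16, 0.04, 0.08],
    [0.03, 0.06, 0.12, 0.03, 0.06],
    [0.01, 0.02, 0.04, 0.01, 0.02],
    [0.02, 0.04, 0.08, 0.02, 0.04],
]
p_x = [sum(row[x] for row in table) for x in range(5)]  # column sums
p_y = [sum(row) for row in table]                        # row sums


def independent(table, p_x, p_y, tol=1e-9):
    """True if p(x, y) == pX(x) * pY(y) in every cell, up to a float tolerance."""
    return all(
        math.isclose(table[y][x], p_x[x] * p_y[y], abs_tol=tol)
        for y in range(len(p_y))
        for x in range(len(p_x))
    )


print(independent(table, p_x, p_y))  # True
```

Every cell passes, so X and Y in Example 5.1.4 are independent; for instance, p(2, 0) = 0.16 = 0.4 × 0.4.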


Example 5.1.5 Given $f_X(x) = 0.5x$, $0 < x < 2$, $f_Y(y) = 3y^2$, $0 < y < 1$, and $f(x, y) = 1.5xy^2$, $0 < x < 2$ and $0 < y < 1$, are X and Y independent?

$$f_X(x) f_Y(y) = 0.5x \cdot 3y^2 = 1.5xy^2 = f(x, y)$$

The factorization holds for every (x, y), so X and Y are independent.

More Than Two Random Variables

If $X_1, X_2, \ldots, X_n$ are all discrete random variables, the joint pmf of the variables is the function

$$p(x_1, \ldots, x_n) = P(X_1 = x_1, \ldots, X_n = x_n)$$

If the variables are continuous, the joint pdf is the function f such that for any n intervals $[a_1, b_1], \ldots, [a_n, b_n]$,

$$P(a_1 \le X_1 \le b_1, \ldots, a_n \le X_n \le b_n) = \int_{a_1}^{b_1} \cdots \int_{a_n}^{b_n} f(x_1, \ldots, x_n)\,dx_n \cdots dx_1$$

Independence – More Than Two Random Variables

The random variables $X_1, X_2, \ldots, X_n$ are independent if for every subset $X_{i_1}, X_{i_2}, \ldots, X_{i_k}$ of the variables, the joint pmf or pdf of the subset is equal to the product of the marginal pmfs or pdfs.

Conditional Distributions

Definition 6. Let X and Y be two continuous rv's with joint pdf f(x, y) and marginal pdfs $f_X(x)$ and $f_Y(y)$. Then for any X value x for which $f_X(x) > 0$, the conditional probability density function of Y given that X = x is

$$f_{Y|X}(y \mid x) = \frac{f(x, y)}{f_X(x)}, \quad -\infty < y < \infty$$

If X and Y are discrete, replacing pdfs by pmfs in this definition gives the conditional probability mass function of Y when X = x.

Example of Conditional Mass

Example 5.1.1 The joint mass function is given below. What is the conditional mass function of Y, given X = 1?

| y \ x | 0 | 1 | 2 | 3 | 4 | $p_Y(y)$ |
|---|---|---|---|---|---|---|
| 0 | 0.08 | 0.06 | 0.05 | 0.00 | 0.00 | 0.19 |
| 1 | 0.07 | 0.15 | 0.04 | 0.03 | 0.01 | 0.30 |
| 2 | 0.04 | 0.05 | 0.10 | 0.04 | 0.05 | 0.28 |
| 3 | 0.00 | 0.04 | 0.06 | 0.07 | 0.06 | 0.23 |
| $p_X(x)$ | 0.19 | 0.30 | 0.25 | 0.14 | 0.12 | 1.00 |
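Dividing the x = 1 column by its total $p_X(1) = 0.30$ gives the conditional pmf. The helper `conditional_y_given_x` in this sketch is an illustrative name, and it refuses to divide when $p_X(x) = 0$, mirroring the condition $p_X(x) > 0$ in Definition 6.

```python
# Joint pmf of Example 5.1.1: rows are y = 0..3, columns are x = 0..4.
table = [
    [0.08, 0.06, 0.05, 0.00, 0.00],
    [0.07, 0.15, 0.04, 0.03, 0.01],
    [0.04, 0.05, 0.10, 0.04, 0.05],
    [0.00, 0.04, 0.06, 0.07, 0.06],
]


def conditional_y_given_x(table, x):
    """p_{Y|X}(y | x) = p(x, y) / pX(x) for each y, defined only when pX(x) > 0."""
    p_x = sum(row[x] for row in table)  # marginal pX(x): the column total
    if p_x == 0:
        raise ValueError("pX(x) = 0, so the conditional pmf is undefined")
    return [row[x] / p_x for row in table]


cond = conditional_y_given_x(table, 1)
print([round(p, 4) for p in cond])  # [0.2, 0.5, 0.1667, 0.1333]
```

The conditional probabilities 0.2, 0.5, 1/6, and 2/15 sum to 1, as any pmf must.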


Example 5.1.6 Given $f(x, y) = \frac{6}{5}(x + y^2)$, $0 \le x \le 1$, $0 < y < 1$, and $f_X(x) = \frac{6}{5}x + \frac{2}{5}$, what is the conditional density of Y given X = 0?

$$f_{Y|X}(y \mid 0) = \frac{f(0, y)}{f_X(0)} = \frac{\frac{6}{5}y^2}{\frac{2}{5}} = 3y^2, \quad 0 < y < 1$$

5.2 Expected Values, Covariance, and Correlation

Expected Values

Definition 7. Let X and Y be jointly distributed rvs with pmf p(x, y) or pdf f(x, y) according to whether the variables are discrete or continuous. Then the expected value of a function h(X, Y), denoted E[h(X, Y)] or $\mu_{h(X,Y)}$, is

$$\mu_{h(X,Y)} = E[h(X, Y)] = \begin{cases} \sum_x \sum_y h(x, y) \cdot p(x, y), & \text{discrete;} \\ \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} h(x, y) \cdot f(x, y)\,dx\,dy, & \text{continuous.} \end{cases}$$

Examples of Expected Values

• Example 5.2.1 The joint pmf is given below. What is E(XY)? What is E[max(X, Y)]?

| p(x, y) | x = 0 | x = 1 | x = 10 |
|---|---|---|---|
| y = 0 | 0.02 | 0.04 | 0.01 |
| y = 1 | 0.06 | 0.15 | 0.15 |
| y = 10 | 0.02 | 0.20 | 0.14 |
| y = 20 | 0.10 | 0.10 | 0.01 |

• Example 5.2.2 The joint pdf of X and Y is f(x, y) = 4xy, 0 < x < 1, 0 < y < 1. What is E(XY)?
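Definition 7 can be evaluated mechanically for both examples. In this sketch, `expect` is a hypothetical helper that sums $h(x, y)\,p(x, y)$ over the table of Example 5.2.1; for the continuous Example 5.2.2 the double integral of $xy \cdot 4xy$ is approximated with a midpoint rule (the grid size is an arbitrary choice), to compare with the exact value $E(XY) = 4 \int_0^1 x^2\,dx \int_0^1 y^2\,dy = 4/9$.

```python
# Example 5.2.1: one list per y value, entries ordered by x = 0, 1, 10.
xs = [0, 1, 10]
table = {
    0: [0.02, 0.04, 0.01],
    1: [0.06, 0.15, 0.15],
    10: [0.02, 0.20, 0.14],
    20: [0.10, 0.10, 0.01],
}
pmf = {(x, y): p for y, row in table.items() for x, p in zip(xs, row)}


def expect(h):
    """E[h(X, Y)] = sum over (x, y) of h(x, y) * p(x, y) (discrete case)."""
    return sum(h(x, y) * p for (x, y), p in pmf.items())


e_xy = expect(lambda x, y: x * y)
e_max = expect(max)  # h(x, y) = max(x, y)
print(round(e_xy, 2), round(e_max, 2))  # 21.65 9.65


def e_xy_continuous(n=500):
    """Example 5.2.2: midpoint-rule approximation of the double integral of xy * 4xy."""
    h = 1.0 / n
    mids = [(i + 0.5) * h for i in range(n)]
    return sum(x * y * 4 * x * y for x in mids for y in mids) * h * h


print(round(e_xy_continuous(), 4))  # 0.4444, close to the exact 4/9
```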

Covariance

Definition 8. Let E(X) and E(Y) denote the expectations of rvs X and Y. The covariance between X and Y, denoted Cov(X, Y), is defined as

$$\mathrm{Cov}(X, Y) = E[(X - E(X))(Y - E(Y))]$$

i.e.,

$$\mathrm{Cov}(X, Y) = \begin{cases} \sum_x \sum_y [x - E(X)][y - E(Y)]\, p(x, y), & \text{discrete;} \\ \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} (x - E(X))(y - E(Y))\, f(x, y)\,dx\,dy, & \text{continuous.} \end{cases}$$

Properties of Covariance and Shortcut Formula

• Cov(X, X) = Var(X)

• Cov(X, Y) = Cov(Y, X)

• Cov(aX, bY) = ab Cov(X, Y) and Cov(X + a, Y + b) = Cov(X, Y); hence Cov(aX + b, cY + d) = ac Cov(X, Y)


• Shortcut formula: Cov(X, Y) = E(XY) − E(X)E(Y)

• If X and Y are independent, then Cov(X, Y) = 0. However, Cov(X, Y) = 0 does not imply independence.

Interpretation of Covariance

Like variance, covariance is a measure of variation.

• Covariance measures how much two random variables vary together.

– By contrast, variance measures the variation of a single rv.

• If two rv's tend to vary together in the same direction, the covariance between them will be positive.

– For example, when one of them is above its expected value, the other tends to be above its expected value as well.

• If two rv's tend to vary in opposite directions, the covariance between them will be negative.

– For example, when one of them is above its expected value, the other tends to be below its expected value.

Examples of Covariance

• Example 5.2.1 (Exercise). The joint pmf is given below. What is Cov(X, Y)?

| p(x, y) | x = 0 | x = 1 | x = 10 |
|---|---|---|---|
| y = 0 | 0.02 | 0.04 | 0.01 |
| y = 1 | 0.06 | 0.15 | 0.15 |
| y = 10 | 0.02 | 0.20 | 0.14 |
| y = 20 | 0.10 | 0.10 | 0.01 |

• Example 5.2.2 The joint pdf of X and Y is f(x, y) = 4xy, 0 < x < 1, 0 < y < 1. What is Cov(X, Y)?

• Example 5.2.3 Given the pmf below, what is Cov(X, Y)?

| p(x, y) | x = 0 | x = 1 | $p_Y(y)$ |
|---|---|---|---|
| y = 1 | 0.04 | 0.06 | 0.1 |
| y = 2 | 0.36 | 0.54 | 0.9 |
| $p_X(x)$ | 0.4 | 0.6 | 1 |
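The shortcut formula is usually the easiest route for a table. This sketch (the helper names `moments`, `cov`, and `build` are illustrative) applies Cov(X, Y) = E(XY) − E(X)E(Y) to Examples 5.2.1 and 5.2.3.

```python
def moments(pmf):
    """Return E(X), E(Y), E(XY) for a discrete joint pmf {(x, y): p}."""
    ex = sum(x * p for (x, y), p in pmf.items())
    ey = sum(y * p for (x, y), p in pmf.items())
    exy = sum(x * y * p for (x, y), p in pmf.items())
    return ex, ey, exy


def cov(pmf):
    """Shortcut formula: Cov(X, Y) = E(XY) - E(X)E(Y)."""
    ex, ey, exy = moments(pmf)
    return exy - ex * ey


def build(xs, rows):
    """Turn {y: [p(x, y) for x in xs]} into {(x, y): p}."""
    return {(x, y): p for y, row in rows.items() for x, p in zip(xs, row)}


# Example 5.2.1
pmf_521 = build([0, 1, 10], {0: [0.02, 0.04, 0.01], 1: [0.06, 0.15, 0.15],
                             10: [0.02, 0.20, 0.14], 20: [0.10, 0.10, 0.01]})
# Example 5.2.3
pmf_523 = build([0, 1], {1: [0.04, 0.06], 2: [0.36, 0.54]})

print(round(cov(pmf_521), 4))  # -7.6444
print(round(cov(pmf_523), 4))
```

For Example 5.2.3 the result is 0 up to floating-point rounding: each cell equals the product of its margins (e.g. 0.04 = 0.4 × 0.1), so X and Y are independent. Similarly, in Example 5.2.2, f(x, y) = 4xy = (2x)(2y) factors into two marginal densities, so Cov(X, Y) = 0 there as well.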

Correlation

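Definition 9. The correlation coefficient of two rv's X and Y, denoted Corr(X, Y), $\rho_{X,Y}$, or just $\rho$, is defined by

$$\mathrm{Corr}(X, Y) = \rho_{X,Y} = \frac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)}\,\sqrt{\mathrm{Var}(Y)}}$$

i.e.,

$$\mathrm{Corr}(X, Y) = \rho_{X,Y} = \frac{\mathrm{Cov}(X, Y)}{\sigma_X \cdot \sigma_Y}$$

where $\sigma_X$ and $\sigma_Y$ are the standard deviations of X and Y, respectively.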


Properties and Shortcut Formula of Correlation

• For any two rv's X and Y, −1 ≤ Corr(X, Y) ≤ 1.

• For a and c both positive or both negative, Corr(aX + b, cY + d) = Corr(X, Y).

• If X and Y are linearly related, i.e., Y = aX + b with a ≠ 0, then Corr(X, Y) = 1 when a > 0 and Corr(X, Y) = −1 when a < 0.

• Shortcut formula:

$$\mathrm{Corr}(X, Y) = \frac{E(XY) - E(X)E(Y)}{\sqrt{E(X^2) - (E(X))^2}\,\sqrt{E(Y^2) - (E(Y))^2}}$$

• If X and Y are independent, Corr(X, Y) = 0. However, Corr(X, Y) = 0 does not imply independence.

Interpretation of Correlation

Correlation is a standardized measure: unlike covariance, it does not depend on the units in which X and Y are measured.

• The correlation coefficient indicates the strength and direction of a linear relationship between two rv's.

• If X and Y are approximately positively linearly related, Corr(X, Y) will be close to 1.

• If X and Y are approximately negatively linearly related, Corr(X, Y) will be close to −1.

• If X and Y have no linear relationship, Corr(X, Y) = 0. In particular, when X and Y are independent, Corr(X, Y) = 0.

Examples of Correlation

• Example 5.2.1 (Exercise). The joint pmf is given below. Find Corr(X, Y).

| p(x, y) | x = 0 | x = 1 | x = 10 |
|---|---|---|---|
| y = 0 | 0.02 | 0.04 | 0.01 |
| y = 1 | 0.06 | 0.15 | 0.15 |
| y = 10 | 0.02 | 0.20 | 0.14 |
| y = 20 | 0.10 | 0.10 | 0.01 |

• Example 5.2.2 The joint pdf of X and Y is f(x, y) = 4xy, 0 < x < 1, 0 < y < 1. Find Corr(X, Y).

• Example 5.2.3 Given the pmf below, what is Corr(X, Y)?

| p(x, y) | x = −1 | x = 0 | x = 1 |
|---|---|---|---|
| y = −1 | 1/9 | 1/9 | 1/9 |
| y = 1 | 1/9 | 1/9 | 1/9 |
| y = 3 | 1/9 | 1/9 | 1/9 |
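The correlation shortcut formula can be coded the same way. The sketch below applies it to Example 5.2.1 and, as an extra illustration not taken from the notes, to a hypothetical pmf in which Y = −2X + 1 exactly, where the correlation should be −1.

```python
import math


def corr(pmf):
    """Corr(X, Y) for a discrete joint pmf {(x, y): p}, via the shortcut formula."""
    def e(h):  # E[h(X, Y)]
        return sum(h(x, y) * p for (x, y), p in pmf.items())

    ex, ey = e(lambda x, y: x), e(lambda x, y: y)
    num = e(lambda x, y: x * y) - ex * ey        # E(XY) - E(X)E(Y)
    var_x = e(lambda x, y: x * x) - ex ** 2      # E(X^2) - (E(X))^2
    var_y = e(lambda x, y: y * y) - ey ** 2      # E(Y^2) - (E(Y))^2
    return num / (math.sqrt(var_x) * math.sqrt(var_y))


# Example 5.2.1: one list per y value, entries ordered by x = 0, 1, 10.
xs = [0, 1, 10]
rows = {0: [0.02, 0.04, 0.01], 1: [0.06, 0.15, 0.15],
        10: [0.02, 0.20, 0.14], 20: [0.10, 0.10, 0.01]}
pmf_521 = {(x, y): p for y, row in rows.items() for x, p in zip(xs, row)}
print(round(corr(pmf_521), 3))

# Hypothetical illustration of the linear-relation property:
# X uniform on {1, 2, 3} and Y = -2X + 1, so the slope a = -2 is negative.
pmf_linear = {(x, -2 * x + 1): 1 / 3 for x in (1, 2, 3)}
print(round(corr(pmf_linear), 6))  # -1.0
```

For Example 5.2.1 this gives Corr(X, Y) ≈ −0.24, a weak negative linear relationship.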

5.3 Statistics and their Distributions

Statistic

Definition 10 (Statistic). A statistic is any quantity whose value can be calculated from sample data; equivalently, a statistic is a function of the random variables in the sample. We denote a statistic by an uppercase letter; a lowercase letter is used to represent the calculated or observed value of the statistic.

Examples:

• For two rvs $X_1$ and $X_2$, $\bar{X} = \frac{X_1 + X_2}{2}$ is a statistic.

• For five rvs $X_1, X_2, \ldots, X_5$, $X_{\max} = \max(X_1, X_2, \ldots, X_5)$ is a statistic.

2+ Independent RV’s

Definition 11 (Joint pmf and pdf for more than two rv's). For n rv's $X_1, X_2, \ldots, X_n$, the joint pmf is

$$p(x_1, x_2, \ldots, x_n) = P(X_1 = x_1, X_2 = x_2, \ldots, X_n = x_n)$$

and the joint pdf is the function f such that for any intervals $[a_1, b_1], \ldots, [a_n, b_n]$,

$$P(a_1 \le X_1 \le b_1, \ldots, a_n \le X_n \le b_n) = \int_{a_1}^{b_1} \cdots \int_{a_n}^{b_n} f(x_1, \ldots, x_n)\,dx_n \cdots dx_1$$

Definition 12 (Independence of more than two rv's). The random variables $X_1, X_2, \ldots, X_n$ are said to be independent if for every subset of the $X_i$'s, the joint pmf or pdf of the subset is the product of the marginal pmfs or pdfs.

Random Samples

Definition 13 (Random Sample). The rv's $X_1, X_2, \ldots, X_n$ are said to form a (simple) random sample of size n if:

1. The $X_i$'s are independent rv's.

2. Every $X_i$ has the same probability distribution.

Such $X_i$'s are said to be independent and identically distributed (iid).

• Example: Let $X_1, X_2, \ldots, X_n$ be a random sample from the standard normal distribution; that is, each of $X_1, X_2, \ldots, X_n$ follows N(0, 1), and $X_1, X_2, \ldots, X_n$ are independent.

Examples of Statistic of a Random Sample

• Example 5.3.1 (Sample mean). Take a random sample of size n from a specific distribution (say, standard normal). The sample mean

$$\bar{X} = \frac{X_1 + X_2 + \cdots + X_n}{n}$$

is a random variable and a statistic. If $X_1 = x_1, X_2 = x_2, \ldots, X_n = x_n$, then

$$\bar{x} = \frac{x_1 + x_2 + \cdots + x_n}{n}$$

is a value of the rv $\bar{X}$.


• Example 5.3.2 (Sample variance). Take a random sample of size n from a specific distribution (say, standard normal), and let $\bar{X} = \frac{X_1 + X_2 + \cdots + X_n}{n}$ be the sample mean. Then

$$S^2 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n - 1}$$

is a statistic. If $X_1 = x_1, X_2 = x_2, \ldots, X_n = x_n$, then

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

is a value of the rv $S^2$.
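The observed values $\bar{x}$ and $s^2$ are simple functions of the data. The sketch below computes them directly from the formulas and compares them with Python's `statistics.mean` and `statistics.variance`, which also uses the n − 1 denominator; the data values are hypothetical.

```python
import statistics


def sample_mean(xs):
    """x-bar = (x1 + ... + xn) / n."""
    return sum(xs) / len(xs)


def sample_variance(xs):
    """s^2 = sum((xi - x-bar)^2) / (n - 1)."""
    xbar = sample_mean(xs)
    return sum((x - xbar) ** 2 for x in xs) / (len(xs) - 1)


data = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical observed values x1, ..., x5
print(sample_mean(data), sample_variance(data))  # 3.0 2.5
# The standard library's statistics.variance also divides by n - 1.
print(statistics.mean(data), statistics.variance(data))
```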

Deriving the Sampling Distribution of a Statistic

Definition 14 (Sampling Distribution). A statistic is a random variable, so it has a probability distribution; the distribution of a statistic is called the sampling distribution of the statistic.

We may use probability rules to obtain the sampling distribution of a statistic.

Example 5.3.3 (Example 5.20 in textbook). A large automobile service center charges $40, $45, and $50 for a tune-up of four-, six-, and eight-cylinder cars, respectively. 20% of the tune-ups are done on four-cylinder cars, 30% on six-cylinder cars, and 50% on eight-cylinder cars. Let X be the service charge for a single tune-up. Then the distribution of X is:

| x | 40 | 45 | 50 |
|---|---|---|---|
| p(x) | 0.2 | 0.3 | 0.5 |

Now let $X_1$ and $X_2$ be the service charges of two randomly selected tune-ups. Find the distribution of:

1. $\bar{X} = \frac{X_1 + X_2}{2}$

2. $S^2 = \frac{(X_1 - \bar{X})^2 + (X_2 - \bar{X})^2}{2 - 1}$
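Assuming the two tune-ups are independent, so that $p(x_1, x_2) = p(x_1)p(x_2)$, the two sampling distributions can be obtained by enumerating all nine pairs $(x_1, x_2)$ and accumulating the probability for each value of $\bar{x}$ and $s^2$. A minimal Python sketch:

```python
from collections import defaultdict
from itertools import product

# Example 5.3.3: distribution of a single service charge X.
p = {40: 0.2, 45: 0.3, 50: 0.5}

xbar_dist = defaultdict(float)  # sampling distribution of X-bar
s2_dist = defaultdict(float)    # sampling distribution of S^2

# X1 and X2 are assumed independent, so p(x1, x2) = p(x1) * p(x2).
for x1, x2 in product(p, repeat=2):
    prob = p[x1] * p[x2]
    xbar = (x1 + x2) / 2
    s2 = ((x1 - xbar) ** 2 + (x2 - xbar) ** 2) / (2 - 1)
    xbar_dist[xbar] += prob
    s2_dist[s2] += prob

for value in sorted(xbar_dist):
    print("x-bar =", value, "prob =", round(xbar_dist[value], 2))
for value in sorted(s2_dist):
    print("s^2 =", value, "prob =", round(s2_dist[value], 2))
```

Grouping pairs this way gives, for example, P(X̄ = 45) = 0.10 + 0.09 + 0.10 = 0.29 and P(S² = 0) = 0.04 + 0.09 + 0.25 = 0.38.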

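Example 5.3.3 (continued)

| $x_1$ | $x_2$ | $p(x_1, x_2)$ | $\bar{x}$ | $s^2$ |
|---|---|---|---|---|
| 40 | 40 | 0.04 | 40 | 0 |
| 40 | 45 | 0.06 | 42.5 | 12.5 |
| 40 | 50 | 0.10 | 45 | 50 |
| 45 | 40 | 0.06 | 42.5 | 12.5 |
| 45 | 45 | 0.09 | 45 | 0 |
| 45 | 50 | 0.15 | 47.5 | 12.5 |
| 50 | 40 | 0.10 | 45 | 50 |
| 50 | 45 | 0.15 | 47.5 | 12.5 |
| 50 | 50 | 0.25 | 50 | 0 |

5.4 The Distribution of the Sample Mean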

Sampling Distribution of Sample Sum and Sample Mean

Proposition (Mean and Std Dev of Sample Sum). Let $X_1, X_2, \ldots, X_n$ be a random sample from a distribution with mean μ and standard deviation σ, and let the sample sum be $T_o = X_1 + X_2 + \cdots + X_n$. Then:


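1. $E(T_o) = n\mu$

2. $\mathrm{Var}(T_o) = n\sigma^2$ and $\sigma_{T_o} = \sqrt{n}\,\sigma$

Proposition (Mean and Std Dev of Sample Mean). Let $X_1, X_2, \ldots, X_n$ be a random sample from a distribution with mean μ and standard deviation σ, and let the sample mean be $\bar{X} = \frac{X_1 + X_2 + \cdots + X_n}{n}$. Then:

1. $E(\bar{X}) = \mu$

2. $\mathrm{Var}(\bar{X}) = \frac{\sigma^2}{n}$ and $\sigma_{\bar{X}} = \frac{\sigma}{\sqrt{n}}$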
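For a population with finitely many values, both propositions can be verified exactly by enumerating every possible sample. This sketch reuses the tune-up distribution of Example 5.3.3 (for which μ = 46.5 and σ² = 15.25) and assumes an iid sample; `mean_and_var_of_xbar` is a hypothetical helper, and `math.prod` requires Python 3.8 or later.

```python
import math
from itertools import product

p = {40: 0.2, 45: 0.3, 50: 0.5}  # tune-up charges from Example 5.3.3
mu = sum(x * q for x, q in p.items())                  # population mean
sigma2 = sum((x - mu) ** 2 * q for x, q in p.items())  # population variance


def mean_and_var_of_xbar(n):
    """Exact E(X-bar) and Var(X-bar) by enumerating all n-tuples of an iid sample."""
    e1 = e2 = 0.0
    for sample in product(p, repeat=n):
        prob = math.prod(p[x] for x in sample)  # independence: multiply the p(x_i)
        xbar = sum(sample) / n
        e1 += xbar * prob
        e2 += xbar ** 2 * prob
    return e1, e2 - e1 ** 2  # Var = E(X-bar^2) - (E(X-bar))^2


for n in (1, 2, 3):
    m, v = mean_and_var_of_xbar(n)
    print(n, round(m, 4), round(v, 4), round(sigma2 / n, 4))
```

For each n, the mean stays at 46.5 while the variance shrinks as 15.25/n.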

Sampling distributions related to the sample variance $S^2$ will be introduced in Chapter 7.

The Case of Normal Population Distribution

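Proposition. Let $X_1, X_2, \ldots, X_n$ be a random sample from a normal distribution with mean μ and standard deviation σ, written N(μ, σ). Then $T_o$ and $\bar{X}$ both follow normal distributions (here the second parameter is the standard deviation):

1. $T_o \sim N(n\mu, \sqrt{n}\,\sigma)$

2. $\bar{X} \sim N\left(\mu, \frac{\sigma}{\sqrt{n}}\right)$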

Example 5.4.1

Example 5.25 in textbook. Let X be the time (in minutes) it takes a rat to find its way through a maze, with $X \sim N(\mu = 1.5, \sigma^2 = 0.35^2)$. Suppose five rats are randomly selected, and let $X_1, X_2, \ldots, X_5$ denote their times in the maze. Assume $X_1, X_2, \ldots, X_5$ form a random sample from $N(\mu = 1.5, \sigma^2 = 0.35^2)$. Let the total time be $T_o = X_1 + X_2 + \cdots + X_5$ and the average time $\bar{X} = \frac{X_1 + X_2 + \cdots + X_5}{5}$. What is the probability that the total time of the 5 rats is between 6 and 8 minutes? What is the probability that the average time is at most 2.0 minutes?

Example 5.4.1 Continued...

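$T_o \sim N(5 \cdot 1.5 = 7.5,\ \sigma_{T_o}^2 = 5 \cdot 0.35^2 = 0.6125)$. So,

$$P(6 < T_o < 8) = P\left(\frac{6 - 7.5}{\sqrt{0.6125}} < Z < \frac{8 - 7.5}{\sqrt{0.6125}}\right) = \Phi(0.64) - \Phi(-1.92) = 0.7115$$

$\bar{X} \sim N\left(1.5,\ \sigma_{\bar{X}}^2 = \frac{0.35^2}{5}\right)$. So,

$$P(\bar{X} \le 2.0) = P\left(Z \le \frac{2.0 - 1.5}{0.35/\sqrt{5}}\right) = P(Z \le 3.19) = \Phi(3.19) = 0.9993$$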
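The same two probabilities can be computed with Python's `statistics.NormalDist` (Python 3.8+), whose constructor takes the mean and the standard deviation, not the variance. This is a sketch for checking the table lookups above.

```python
from math import sqrt
from statistics import NormalDist

mu, sigma, n = 1.5, 0.35, 5  # Example 5.4.1: X ~ N(1.5, 0.35^2), five rats

# Total time T_o ~ N(mean n*mu, sd sqrt(n)*sigma).
total = NormalDist(n * mu, sqrt(n) * sigma)
p_total = total.cdf(8) - total.cdf(6)  # P(6 < T_o < 8)

# Sample mean X-bar ~ N(mean mu, sd sigma / sqrt(n)).
xbar = NormalDist(mu, sigma / sqrt(n))
p_mean = xbar.cdf(2.0)  # P(X-bar <= 2.0)

print(round(p_total, 4), round(p_mean, 4))
```

Because the notes round z to two decimals before using the normal table, the unrounded answer for the total time (about 0.711) differs from 0.7115 in the fourth decimal place; the second probability agrees at about 0.9993.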


Central Limit Theorem

Theorem 15. Let $X_1, X_2, \ldots, X_n$ be a random sample from a distribution with mean μ and variance σ². Then if n is sufficiently large, $\bar{X}$ has approximately a normal distribution with $\mu_{\bar{X}} = \mu$ and $\sigma_{\bar{X}}^2 = \frac{\sigma^2}{n}$. $T_o$ also has approximately a normal distribution with $\mu_{T_o} = n\mu$ and $\sigma_{T_o}^2 = n\sigma^2$. The larger the value of n, the better the approximation.

Rule of Thumb: If n > 30, the Central Limit Theorem can be used.
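A simulation can make the theorem concrete. The sketch below is an illustration under assumptions not in the notes: the population is exponential with rate 1 (so μ = σ = 1, a strongly skewed distribution), n = 40, and the seed and number of repetitions are arbitrary. It checks that the simulated values of $\bar{X}$ have mean near μ, standard deviation near σ/√n, and roughly normal-looking coverage.

```python
import random
import statistics

random.seed(2014)  # arbitrary fixed seed so the run is reproducible

# Assumed population: exponential with rate 1, so mu = 1 and sigma = 1.
mu, sigma = 1.0, 1.0
n, reps = 40, 20_000  # sample size n > 30, and number of simulated samples

means = []
for _ in range(reps):
    sample = [random.expovariate(1.0) for _ in range(n)]
    means.append(statistics.fmean(sample))

se = sigma / n ** 0.5  # CLT standard deviation of X-bar, sigma / sqrt(n)
print(statistics.fmean(means), mu)   # average of the X-bar values, near mu
print(statistics.stdev(means), se)   # spread of the X-bar values, near 0.158

# Under a normal approximation, about 95% of X-bar values fall within 1.96 se of mu.
coverage = sum(abs(m - mu) <= 1.96 * se for m in means) / reps
print(coverage)
```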

[Figure: Central Limit Theorem]

Examples

• Example 5.5.1 (Example 5.26 in textbook). The amount of a particular impurity in a batch of some chemical product is a random variable with mean 4.0 g and standard deviation 1.5 g. If 50 batches are independently prepared, what is the (approximate) probability that the sample average amount of impurity $\bar{X}$ is between 3.5 g and 3.8 g?

• Example 5.5.2 (Example 5.27 in textbook). The number of major defects for a certain model of automobile is a random variable with mean 3.2 and standard deviation 2.4. Among 100 randomly selected cars of this model, how likely is it that the average number of major defects exceeds 4? How likely is it that the total number of major defects of all 100 cars exceeds 20?
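Both examples reduce to normal probabilities once the CLT approximation is in place. This sketch uses `statistics.NormalDist` with the mean and standard deviation given by Theorem 15; it computes approximations, not exact probabilities.

```python
from math import sqrt
from statistics import NormalDist

# Example 5.5.1: impurity per batch has mean 4.0 g and sd 1.5 g; n = 50 batches.
xbar_impurity = NormalDist(4.0, 1.5 / sqrt(50))  # CLT approximation for X-bar
p1 = xbar_impurity.cdf(3.8) - xbar_impurity.cdf(3.5)

# Example 5.5.2: defects per car have mean 3.2 and sd 2.4; n = 100 cars.
n = 100
xbar_defects = NormalDist(3.2, 2.4 / sqrt(n))        # approx. distribution of X-bar
total_defects = NormalDist(n * 3.2, sqrt(n) * 2.4)   # approx. distribution of T_o
p2 = 1 - xbar_defects.cdf(4)    # P(X-bar > 4)
p3 = 1 - total_defects.cdf(20)  # P(T_o > 20)

print(round(p1, 4), round(p2, 5), round(p3, 4))
```

This gives roughly 0.164 for Example 5.5.1 and about 0.0004 for the average exceeding 4. For the total, $T_o$ has approximate mean 320 and standard deviation 24, so exceeding 20 is essentially certain.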

5.5 The Distribution of a Linear Combination

Linear Combinations of RV’s


Definition 16. Given a collection of n random variables $X_1, X_2, \ldots, X_n$ and n numerical constants $a_1, a_2, \ldots, a_n$, the rv

$$Y = a_1 X_1 + a_2 X_2 + \cdots + a_n X_n = \sum_{i=1}^{n} a_i X_i$$

is called a linear combination of the $X_i$'s.

1. The sample sum $T_o = X_1 + X_2 + \cdots + X_n$ is a linear combination with $a_1 = a_2 = \cdots = a_n = 1$.

2. The sample mean $\bar{X} = \frac{X_1 + X_2 + \cdots + X_n}{n}$ is a linear combination with $a_1 = a_2 = \cdots = a_n = \frac{1}{n}$.

Mean and Variance of Linear Combinations

Proposition. Let $X_1, X_2, \ldots, X_n$ have expectations $\mu_1, \mu_2, \ldots, \mu_n$ and variances $\sigma_1^2, \sigma_2^2, \ldots, \sigma_n^2$, respectively. Let Y be the linear combination $Y = a_1 X_1 + a_2 X_2 + \cdots + a_n X_n$. Then:

1. $E(Y) = a_1 \mu_1 + a_2 \mu_2 + \cdots + a_n \mu_n$.

2. $\mathrm{Var}(Y) = \sum_{i=1}^{n} \sum_{j=1}^{n} a_i a_j \mathrm{Cov}(X_i, X_j)$.

3. If $X_1, X_2, \ldots, X_n$ are independent, $\mathrm{Var}(Y) = a_1^2 \sigma_1^2 + a_2^2 \sigma_2^2 + \cdots + a_n^2 \sigma_n^2$.

Corollary 17. If $X_1$ and $X_2$ are independent, then $E(X_1 - X_2) = E(X_1) - E(X_2)$ and $\mathrm{Var}(X_1 - X_2) = \mathrm{Var}(X_1) + \mathrm{Var}(X_2)$.
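Part 2 of the proposition is a double sum over a covariance matrix, with the variances on the diagonal. The sketch below codes it directly; the function name and the numbers (two hypothetical rvs with means 10 and 7 and variances 4 and 9) are illustrative assumptions, used to show Corollary 17 and what changes when the variables are correlated.

```python
def linear_combination_moments(a, mu, cov):
    """E(Y) and Var(Y) for Y = sum_i a_i X_i, given means mu[i] and covariances cov[i][j].

    Var(Y) = sum_i sum_j a_i a_j Cov(X_i, X_j); the diagonal entries cov[i][i] are variances.
    """
    n = len(a)
    mean = sum(a[i] * mu[i] for i in range(n))
    var = sum(a[i] * a[j] * cov[i][j] for i in range(n) for j in range(n))
    return mean, var


# Hypothetical example: Y = X1 - X2, i.e. coefficients a = [1, -1].
mu = [10.0, 7.0]
indep = [[4.0, 0.0], [0.0, 9.0]]  # independent: Cov(X1, X2) = 0
dep = [[4.0, 3.0], [3.0, 9.0]]    # dependent: Cov(X1, X2) = 3
print(linear_combination_moments([1, -1], mu, indep))  # (3.0, 13.0)
print(linear_combination_moments([1, -1], mu, dep))    # (3.0, 7.0)
```

With Cov(X1, X2) = 0 the variance of X1 − X2 is 4 + 9 = 13, as Corollary 17 states; a positive covariance of 3 reduces it to 4 + 9 − 2·3 = 7.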

The Case of Normal Random Variables

Proposition. If $X_1, X_2, \ldots, X_n$ are independent, normally distributed rv's with means $\mu_1, \mu_2, \ldots, \mu_n$ and variances $\sigma_1^2, \sigma_2^2, \ldots, \sigma_n^2$, then any linear combination of the $X_i$'s, $Y = a_1 X_1 + a_2 X_2 + \cdots + a_n X_n$, also has a normal distribution:

$$Y \sim N\left(a_1\mu_1 + a_2\mu_2 + \cdots + a_n\mu_n,\ a_1^2\sigma_1^2 + a_2^2\sigma_2^2 + \cdots + a_n^2\sigma_n^2\right)$$

Here the second parameter of N is the variance.

Example

Example 5.5.3 (Example 5.30 in textbook). Three grades of gasoline are priced at $1.20, $1.35, and $1.50 per gallon, respectively. Let $X_1$, $X_2$, and $X_3$ denote the amounts (in gallons) of these grades purchased on a particular day. Suppose the $X_i$'s are independent and normally distributed with $\mu_1 = 1000$, $\mu_2 = 500$, $\mu_3 = 100$, $\sigma_1 = 100$, $\sigma_2 = 80$, $\sigma_3 = 50$. The total revenue from the sale of the three grades of gasoline on a particular day is $Y = 1.2X_1 + 1.35X_2 + 1.5X_3$. Find the probability that total revenue exceeds $2500.
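The proposition gives the exact distribution of Y here, so the answer is one normal tail probability. A sketch using `statistics.NormalDist` (which takes the standard deviation, so the variance is square-rooted first):

```python
from math import sqrt
from statistics import NormalDist

# Example 5.5.3: price per gallon a_i, and mean / sd of gallons sold for each grade.
a = [1.20, 1.35, 1.50]
mu = [1000, 500, 100]
sigma = [100, 80, 50]

mean_y = sum(ai * mi for ai, mi in zip(a, mu))              # E(Y) = sum of a_i * mu_i
var_y = sum(ai ** 2 * si ** 2 for ai, si in zip(a, sigma))  # independent: sum of a_i^2 sigma_i^2
revenue = NormalDist(mean_y, sqrt(var_y))  # linear combination of independent normals

p_exceed = 1 - revenue.cdf(2500)  # P(Y > 2500)
print(round(mean_y, 2), round(var_y, 2), round(p_exceed, 4))
```

This gives E(Y) = 2025, Var(Y) = 31,689 (standard deviation about 178.0), and P(Y > 2500) ≈ 0.0038.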
