DECOMPOUNDING GERMAN WORDS

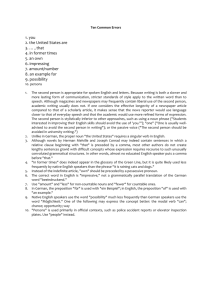

Introduction
Costanza Conforti
05.02.2015

Overview
§ Bird’s eye view
§ Preliminary steps:
– Identification
– Structure Analysis
§ German Compounds
§ Decompounding German words
– Decompounding Pipeline
§ Tasks
– State-of-the-art model (Alfonseca 2008)

BIRD’S EYE VIEW

Bird’s eye view
§ “There are probably no languages without either compounding, affixing, or both. In other words, there are probably no purely isolating languages. There are a considerable number of languages without inflection, perhaps none without compounding and derivation” (Greenberg 1963, in Scalise 2010)
§ Compound patterns (Scalise 2010):
– [X r Y]X: [N+A]N camposanto (Spanish, ‘field + holy, graveyard’); [N+[P+N]]N arc-en-ciel (French, ‘arch in sky, rainbow’)
– [X r Y]Y: [A+N]N green card (English); [N+N]N Taxifahrer (German)
– [X r Y]Z: [V+N]A 缺德 quēdé (Chinese, ‘lack + morals, immoral’)

Bird’s eye view
§ Hybrid status: syntactically complex units which behave in a syntactically simple way (Matthews 1947, in Scalise 2010)
§ Phonetics + Syntax-Morphology Interface + Semantics: complex interface domains of language competence (Baroni 2010)
§ Orthography:
– Distinct words: unusual POS sequence, simplify parsing
– vs. one-word compounds: lexical recognition, segmentation

PRELIMINARY STEPS: IDENTIFICATION, STRUCTURE ANALYSIS

Preliminary steps - Identification
§ High type frequency / low token frequency
§ Productive compounding: a sizeable number of new orthographic words will constantly be added to the language (Baroni 2002)
§ Training corpus: data sparseness
Decompounding: treat a compound as the concatenation of its units

Preliminary steps - Structure analysis
§ Bracketing/labelling internal structure

Table 2. Frequency of compound types with different head positions in different language groups (Scalise 2010)

| Language group | Right | No head | Left | Both |
|---|---|---|---|---|
| General % | 66.7 | 16.3 | 6.8 | 5.9 |
| Rom % | 40.7 | 31.4 | 20.3 | 6.8 |
| Germ % | 87.0 | 8.9 | 1.9 | 1.3 |
| Slav % | 61.9 | 12.2 | 6.0 | 3.1 |
| East A. % | 57.5 | 17.7 | 6.8 | 15.0 |

“As can be seen, the Slavic group follows the general pattern more closely than the other groups. The Romance languages exhibit a relatively high percentage of exocentric constructions, while Germanic languages are more consistently right-headed. The East Asian languages are different in allowing a relatively high percentage of compounds with two heads, most of which are coordinate compounds. In languages such as Italian, the exocentric pattern V+N is one of the most productive processes in compound formation. In some languages we also find a pattern that has been called ‘absolute exocentricity’ (Scalise, Fabregas & Forza 2009), from both a categorial and semantic point of view. In such compounds, the output category [...]” (Scalise 2010)

§ Recursion
[child language]
[[child language] acquisition]
[[[child language] acquisition] research]
[[[[child language] acquisition] research] group]
[[[[[child language] acquisition] research] group] member]

“[...] This allows listeners, upon hearing the i-th compound constituent, to reuse the compound structure built up on the sequence w_1, …, w_{i-1}. Compounds that are easier to understand are more frequent overall. Lauer (1995) states the problem in probabilistic terms. The two structures in (8) and (9) presuppose the dependency chains illustrated in (11) below, where each pointed arc represents a ‘dependant → head relationship’. Given two relations such as child → language and language → acquisition, the three words can give rise to one compound only: child language acquisition. In contrast, the pair of dependencies child → acquisition and language → acquisition can in principle produce two equally likely compounds: child language acquisition and language child acquisition. This means that child → acquisition and language → acquisition can generate twice as many compounds as child → language and language → acquisition do. If this is true in general, it implies, by straightforward application of Bayes’ rules, that, given a compound ABC, the probability that it has a left-branching structure is twice the probability that it has a right-branching structure. This is what corpus data tell us.” (Baroni 2010)

Preliminary steps - Structure analysis
(11) [[childN languageN] acquisitionN]N
     [childN [languageN acquisitionN]N]N

“How can a parser choose between the different bracketing structures in (11)? Work in the 80’s and early 90’s (Finin 1980, McDonald 1982, Gay & Croft 1990, Vanderwende 1994) proposes to deal with the problem through knowledge intensive algorithms.” (Baroni 2010)

Preliminary steps - Structure analysis
§ L/R branching: not dichotomous!

“[...] The immediate constituents of this compound are Kraftfahrzeug and steuer, with the first constituent then splitting further into Kraft and fahrzeug, etc. (see Figure 1). In order to be able to systematically link compounds in GermaNet to their constituent parts, compound splitting needs to be applied recursively and has to identify only the immediate constituents at each level of analysis.” (Henrich 2011)

[Figure 1. Compounds in GermaNet. GermaNet 8.0, Henrich 2011]

GERMAN COMPOUNDS

German compounds
§ Statistics: APA newswire corpus (28 million words): compounds make up 47% of types but only 7% of tokens; most are hapax or rare (< 5 occurrences) (Baroni 2002)
§ NN – AN – VN – PN – BN: 62% - 95% (Baroni 2002 - Henrich 2011)

German compounds
[[Modifier + (Linking morpheme) + (-)] + Head]
§ Bound form: Hände-druck, Farb-fernseher, Doktor-/Doktoren-
§ Paradigmatic morphemes
§ German == Primitive words (Baroni 2002, Alfonseca 2008)

German compounds
§ Semantic-morphologic properties

Table 2. Linking morphemes (Langer 1998); the glosses in parentheses are cut off in the slide.

| Morpheme | Example |
|---|---|
| ∅ | Halbblutprinz (halb … |
| -e | Wundfieber (Wunde … |
| +s | Rechtsstellung (Rech… |
| +e | Mauseloch (Maus Lo… |
| +en | Armaturenbrett (Arm… |
| +nen | |
| +ens | Herzensangst (Herz A… |
| +es | Tagesanbruch (Tag A… |
| +ns | Willensbildung (Will… |
| +er | Bilderrahmen (Bild R… |

DECOMPOUNDING GERMAN WORDS

Guidelines

Decompounding German words
§ Decompounding: LINGUISTIC – CONSERVATIVE – AGGRESSIVE (Braschler 2003)
§ Overrecognition (Schweiner-ei) + lexicalized compounds (Frühstück, Freitag) (Goldsmith 2002)

Decompounding Pipeline
[Figure 1 (Koehn 2003): Splitting options for the German word Aktionsplan]
– aktionsplan → actionplan
– aktion + plan → action plan
– akt + ion + plan → act ion plan

Decompounding Pipeline
1. [Prior step: compound list], e.g. Bispo Santos 2014; GermaNet 8.0: semi-automatically generated
2. Calculate every possible way of splitting a word into one or more parts
– each part is a known word (attested in a corpus)
– linking morphemes are allowed
– only binary splits, applied recursively
3. Score those parts with a weighting function:
– Frequency-Based Methods
– Probability-Based Methods
4. Take the highest-scoring decomposition.
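Steps 2 to 4 of the pipeline can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the frequency table `FREQ` holds made-up toy counts, `LINKERS` is a small illustrative subset of the linking morphemes, and the score is the geometric mean of part frequencies (one frequency-based weighting function). The names `candidate_splits`, `score` and `decompound` are hypothetical, not taken from any of the cited systems.

```python
from math import prod

# Toy corpus frequencies (invented for illustration, not real counts).
FREQ = {"aktionsplan": 2, "aktion": 900, "plan": 700, "akt": 200, "ion": 1}

# Illustrative subset of linking morphemes; "" means no linking morpheme.
LINKERS = ("", "s", "es")


def candidate_splits(word, freq, min_len=3):
    """Step 2: yield every split of `word` into one or more known words.

    Each split is binary (head + remainder) and the remainder is split
    recursively. A linking morpheme may follow the head and is dropped.
    """
    if word in freq:
        yield (word,)
    for i in range(min_len, len(word) - min_len + 1):
        head = word[:i]
        if head not in freq:
            continue
        for linker in LINKERS:
            if word.startswith(linker, i):
                rest = word[i + len(linker):]
                for tail in candidate_splits(rest, freq, min_len):
                    yield (head,) + tail


def score(parts, freq):
    """Step 3: geometric mean of the corpus frequencies of the parts."""
    return prod(freq[p] for p in parts) ** (1 / len(parts))


def decompound(word, freq):
    """Step 4: return the highest-scoring decomposition of `word`."""
    word = word.lower()
    candidates = list(candidate_splits(word, freq))
    if not candidates:
        return (word,)  # unknown word: leave it unsplit
    return max(candidates, key=lambda parts: score(parts, freq))


print(decompound("Aktionsplan", FREQ))
```

With these toy counts the candidates are `aktionsplan`, `aktion + plan` and `akt + ion + plan`, and `aktion + plan` scores highest. A frequent unsplit form would win instead, which is the known weakness of purely frequency-based scoring.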
(If the highest-scoring decomposition contains just one part, the word is not a compound.)

TASKS

Tasks
§ Prediction in AAC systems (Baroni et al. 2002)
§ Speech Understanding Systems (Adda et al. 2009)
§ Machine Translation (Brown 2002 - Koehn 2003 - Stymne 2008 - etc.)
§ Information Retrieval (Braschler 2003 - etc.)
§ Query Keywords (Alfonseca 2008)

State-of-the-art model
Alfonseca 2008
§ Query keywords: noisy data

Table 1. Some examples of words found in German user queries that can be difficult to handle for a German decompounder

| Word | Probable interpretation |
|---|---|
| achzigerjahre | achtzig+jahre (misspelled) |
| activelor | unknown word |
| adminslots | admin slots (English) |
| agilitydog | agility dog (English) |
| akkuwarner | unknown word |
| alabams | alabama (misspelled) |
| almyghtyzeus | almyghty zeus (English and misspelled) |
| amaryllo | amarillo (Spanish and misspelled) |
| ampihbien | amphibian (misspelled) |

“[...] German, contain small fragments in other languages. This problem exists to a larger degree when handling user queries: they are written quickly, not paying attention to mistakes, and the language used for querying can change during the same query session. Language identification of search queries is a difficult problem [...]” (Alfonseca 2008)

§ Unknown compounds: useful information
Turingmaschine, Blumenstrause, Bismarkausseenpolitik

Evaluation counts (Alfonseca 2008):
§ Correct splits: no. of words that should be split and are split correctly.
§ Correct non-splits: no. of words that should not be split and are not.
§ Wrong non-splits: no. of words that should be split and are not.
§ Wrong faulty splits: no. of words that should be split and are, but incorrectly.
§ Wrong splits: no. of words that should not be split but are.
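The five counts above can be tallied mechanically from gold and predicted analyses. The following Python sketch is an illustration under stated assumptions: each analysis is a tuple of parts (a single part means "not a compound"), precision and recall are taken over the split counts in the usual way, and accuracy is assumed to be the share of all words handled correctly, splits and non-splits alike. The function names `classify` and `evaluate` are hypothetical.

```python
from collections import Counter


def classify(gold, predicted):
    """Assign one word to one of the five evaluation categories."""
    should_split = len(gold) > 1
    was_split = len(predicted) > 1
    if should_split and was_split:
        return "correct_split" if gold == predicted else "wrong_faulty_split"
    if should_split:
        return "wrong_non_split"
    return "wrong_split" if was_split else "correct_non_split"


def evaluate(pairs):
    """Return (precision, recall, accuracy) for (gold, predicted) pairs.

    A ratio is None when its denominator is zero, e.g. the precision of
    a system that never splits.
    """
    counts = Counter(classify(tuple(g), tuple(p)) for g, p in pairs)
    correct = counts["correct_split"]
    faulty = counts["wrong_faulty_split"]

    def ratio(num, den):
        return num / den if den else None

    precision = ratio(correct, correct + faulty + counts["wrong_split"])
    recall = ratio(correct, correct + faulty + counts["wrong_non_split"])
    # Assumption: accuracy counts every correct decision over all words.
    accuracy = ratio(correct + counts["correct_non_split"], sum(counts.values()))
    return precision, recall, accuracy


# Invented toy data: one word per category.
PAIRS = [
    (("aktion", "plan"), ("aktion", "plan")),        # correct split
    (("frühstück",), ("frühstück",)),                # correct non-split
    (("turing", "maschine"), ("turingmaschine",)),   # wrong non-split
    (("blumen", "strauß"), ("blume", "nstrauß")),    # wrong faulty split
    (("freitag",), ("frei", "tag")),                 # wrong split
]
print(evaluate(PAIRS))
```

Returning `None` for an empty denominator keeps a never-split baseline from raising a division error: it has no precision but still has a recall of 0 and a non-zero accuracy.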
State-of-the-art model

$$\text{Precision} = \frac{\text{correct splits}}{\text{correct splits} + \text{wrong faulty splits} + \text{wrong splits}}$$

$$\text{Recall} = \frac{\text{correct splits}}{\text{correct splits} + \text{wrong faulty splits} + \text{wrong non-splits}}$$

$$\text{Accuracy} = \frac{\text{correct splits}}{\text{correct splits} + \text{wrong splits}}$$

The accuracy formula is reproduced as printed on the slide. Since the "Never split" baseline in Table 3 scores 0.00% recall but 64.09% accuracy, "correct" and "wrong" in that formula presumably cover all decisions, non-splits included.

Approaches (Koehn 2003): “[...] approaches for German compound splitting can be considered as having this general structure: given a word w, calculate every possible way of splitting w in one or more parts.”

State-of-the-art model
Alfonseca 2008

Table 3: Results of the several configurations.

| Method | Precision | Recall | Accuracy |
|---|---|---|---|
| Never split | - | 0.00% | 64.09% |
| Geometric mean of frequencies | 39.77% | 54.06% | 65.58% |
| Compound probability | 60.41% | 80.68% | 76.23% |
| Mutual Information | 82.00% | 48.29% | 80.52% |
| Support-Vector Machine | 83.56% | 79.48% | 87.21% |

Table 4: Results in all the different languages.

| Language | P | R | A |
|---|---|---|---|
| German | 83.56% | 79.48% | 87.21% |
| Dutch | 78.99% | 76.18% | 83.45% |
| Danish | 81.97% | 87.12% | 85.36% |
| Norwegian | 88.13% | 93.05% | 90.40% |
| Swedish | 83.34% | 92.98% | 87.79% |
| Finnish | 90.79% | 91.21% | 91.62% |

“Concerning the second step, there is some work that uses, for scoring, additional information such [...]”

References
§ S. Langer. 1998. Zur Morphologie und Semantik von Nominalkomposita. Tagungsband der 4. Konferenz zur Verarbeitung natürlicher Sprache (KONVENS).
§ M. Baroni, J. Matiasek. 2002. Wordform- and class-based prediction of the components of German compounds in AAC system. OeFAI Technical Report.
§ R.D. Brown. 2002. Corpus-driven splitting of compound words. In TMI-2002.
§ M. Braschler and B. Ripplinger. 2004. How effective is stemming and decompounding for German text retrieval? Information Retrieval, 7:291–316.
§ P. Koehn and K. Knight. 2003. Empirical methods for compound splitting. In ACL-2003.
§ E. Alfonseca, S. Bilac, and S. Pharies. 2008. German decompounding in a difficult corpus. In CICLING.
§ V. Pirrelli, E. Guevara, and M. Baroni. 2010. Computational issues in compound processing. In: Scalise 2010.
§ S. Scalise, I. Vogel. 2010. Why Compounding? Cross-Disciplinary Issues in Compounding.
§ V. Henrich, E. Hinrichs. 2011. Determining Immediate Constituents of Compounds in GermaNet. Proceedings of Recent Advances in NLP.

Thank you - Danke - Grazie