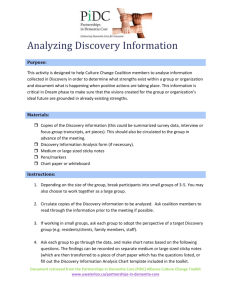

District and School Data Team Toolkit
