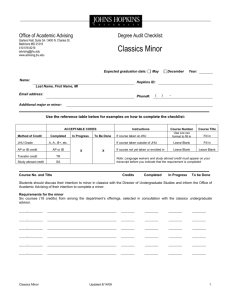

Project Summary - Johns Hopkins University
