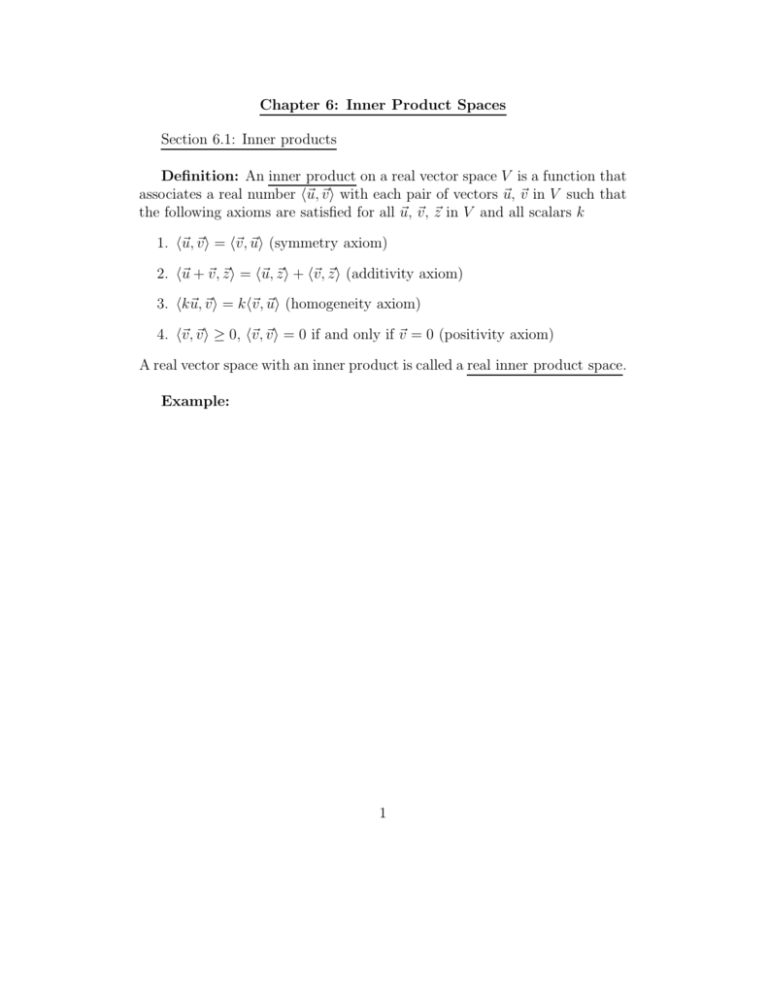



Chapter 6: Inner Product Spaces

Section 6.1: Inner products

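Definition: An inner product on a real vector space $V$ is a function that associates a real number $\langle \vec{u}, \vec{v} \rangle$ with each pair of vectors $\vec{u}, \vec{v}$ in $V$ such that the following axioms are satisfied for all $\vec{u}, \vec{v}, \vec{z}$ in $V$ and all scalars $k$:

1. $\langle \vec{u}, \vec{v} \rangle = \langle \vec{v}, \vec{u} \rangle$ (symmetry axiom)

2. $\langle \vec{u} + \vec{v}, \vec{z} \rangle = \langle \vec{u}, \vec{z} \rangle + \langle \vec{v}, \vec{z} \rangle$ (additivity axiom)

3. $\langle k\vec{u}, \vec{v} \rangle = k\langle \vec{u}, \vec{v} \rangle$ (homogeneity axiom)

4. $\langle \vec{v}, \vec{v} \rangle \ge 0$, and $\langle \vec{v}, \vec{v} \rangle = 0$ if and only if $\vec{v} = \vec{0}$ (positivity axiom)

A real vector space with an inner product is called a real inner product space.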

Example:

Definition: If $\vec{u} = (u_1, u_2, \dots, u_n)$ and $\vec{v} = (v_1, v_2, \dots, v_n)$ are vectors in $R^n$ and $w_1, w_2, \dots, w_n$ are positive real numbers, then the formula

$$\langle \vec{u}, \vec{v} \rangle = w_1 u_1 v_1 + w_2 u_2 v_2 + \cdots + w_n u_n v_n$$

defines an inner product on $R^n$ called the weighted inner product with weights $w_1, w_2, \dots, w_n$.
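As a quick illustration of this definition, the following minimal Python sketch evaluates a weighted inner product on $R^2$ and spot-checks the four axioms for one choice of vectors. The function name `weighted_inner`, the sample vectors, the scalar and the weights (3, 2) are assumptions chosen for illustration; a numerical check on a few vectors is not a proof of the axioms.

```python
def weighted_inner(u, v, weights):
    """Weighted Euclidean inner product: w1*u1*v1 + ... + wn*un*vn."""
    if not (len(u) == len(v) == len(weights)):
        raise ValueError("u, v and weights must have the same length")
    if any(w <= 0 for w in weights):
        raise ValueError("all weights must be positive")
    return sum(w * a * b for w, a, b in zip(weights, u, v))


# Illustrative data (not taken from the notes).
w = (3, 2)
u, v, z = (1, 2), (3, -1), (0, 5)
k = 4

u_plus_v = tuple(a + b for a, b in zip(u, v))
k_u = tuple(k * a for a in u)

# Spot-check each axiom for this particular choice of vectors.
symmetry = weighted_inner(u, v, w) == weighted_inner(v, u, w)
additivity = weighted_inner(u_plus_v, z, w) == (
    weighted_inner(u, z, w) + weighted_inner(v, z, w)
)
homogeneity = weighted_inner(k_u, v, w) == k * weighted_inner(u, v, w)
positivity = weighted_inner(u, u, w) > 0

print(weighted_inner(u, v, w))  # 3*1*3 + 2*2*(-1) = 5
print(symmetry, additivity, homogeneity, positivity)
```

Setting every weight to 1 recovers the ordinary dot product.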

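Note: Unless specified otherwise, $R^n$ is assumed to have the usual Euclidean inner product $\langle \vec{u}, \vec{v} \rangle = \vec{u} \cdot \vec{v}$ (all weights equal to 1). With this inner product, $R^n$ is referred to as Euclidean n-space.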

Example:

Definition: If $V$ is an inner product space, then the norm (or length) of a vector $\vec{u}$ in $V$ is denoted by $\|\vec{u}\|$ and is defined by

$$\|\vec{u}\| = \langle \vec{u}, \vec{u} \rangle^{1/2}$$

The distance between two points (vectors) $\vec{u}$ and $\vec{v}$ is denoted by $d(\vec{u}, \vec{v})$ and is defined by

$$d(\vec{u}, \vec{v}) = \|\vec{u} - \vec{v}\|$$

If a vector has norm 1, it is called a unit vector.
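The norm and distance depend on which inner product is in use. The sketch below, assuming a weighted Euclidean inner product on $R^n$ (with all weights 1 as the default), shows that the same vector can be a unit vector under one inner product and not under another. The helper names `inner`, `norm`, `distance` and `normalize` are hypothetical, chosen for this illustration.

```python
import math


def inner(u, v, weights=None):
    """Weighted Euclidean inner product; weights=None means all weights are 1."""
    if weights is None:
        weights = [1] * len(u)
    return sum(w * a * b for w, a, b in zip(weights, u, v))


def norm(u, weights=None):
    """Norm induced by the inner product: <u, u>^(1/2)."""
    return math.sqrt(inner(u, u, weights))


def distance(u, v, weights=None):
    """Distance d(u, v) = ||u - v||."""
    diff = [a - b for a, b in zip(u, v)]
    return norm(diff, weights)


def normalize(u, weights=None):
    """Scale a nonzero vector to a unit vector in the chosen inner product."""
    length = norm(u, weights)
    if length == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [a / length for a in u]


e1 = (1, 0)
print(norm(e1))                  # 1.0, a unit vector in Euclidean 2-space
print(norm(e1, (4, 1)))          # 2.0, not a unit vector for weights (4, 1)
print(distance((1, 2), (4, 6)))  # 5.0
```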

Example:

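Definition: If $A$ is an invertible $n \times n$ matrix and $\vec{u}, \vec{v}$ are vectors in $R^n$ (written as column vectors), then the inner product on $R^n$ generated by $A$ is defined as

$$\langle \vec{u}, \vec{v} \rangle = A\vec{u} \cdot A\vec{v} = (A\vec{v})^T A\vec{u} = \vec{v}^T A^T A \vec{u}$$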
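The two ends of this chain of equalities can be compared numerically. The following sketch, using plain Python lists and a sample invertible matrix chosen only for illustration, computes the inner product once as $A\vec{u} \cdot A\vec{v}$ and once as $\vec{v}^T A^T A \vec{u}$. All helper names are assumptions, not part of the notes.

```python
def dot(x, y):
    """Euclidean dot product."""
    return sum(a * b for a, b in zip(x, y))


def mat_vec(A, x):
    """Matrix-vector product, with A stored as a list of rows."""
    return [dot(row, x) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def mat_mul(A, B):
    """Matrix product A B."""
    B_cols = transpose(B)
    return [[dot(row, col) for col in B_cols] for row in A]


def inner_generated_by(A, u, v):
    """Inner product generated by A: (A u) . (A v)."""
    return dot(mat_vec(A, u), mat_vec(A, v))


# Illustrative data: A is invertible because det(A) = 1.
A = [[1, 1],
     [0, 1]]
u, v = [1, 2], [3, -1]

first_form = inner_generated_by(A, u, v)
second_form = dot(v, mat_vec(mat_mul(transpose(A), A), u))  # v^T (A^T A) u

print(first_form, second_form)  # both equal 4
```

Taking $A$ to be the identity matrix gives back the Euclidean inner product.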

Example:

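Theorem 6.1.1: Properties of inner products

If $\vec{u}, \vec{v}, \vec{w}$ are vectors in a real inner product space and $k$ is a scalar, then

1. $\langle \vec{0}, \vec{u} \rangle = \langle \vec{u}, \vec{0} \rangle = 0$

2. $\langle \vec{u}, \vec{v} + \vec{w} \rangle = \langle \vec{u}, \vec{v} \rangle + \langle \vec{u}, \vec{w} \rangle$

3. $\langle \vec{u}, k\vec{v} \rangle = k\langle \vec{u}, \vec{v} \rangle$

4. $\langle \vec{u} - \vec{v}, \vec{w} \rangle = \langle \vec{u}, \vec{w} \rangle - \langle \vec{v}, \vec{w} \rangle$

5. $\langle \vec{u}, \vec{v} - \vec{w} \rangle = \langle \vec{u}, \vec{v} \rangle - \langle \vec{u}, \vec{w} \rangle$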

Note: These relations hold for all real inner product spaces.

Section 6.2: Angle and orthogonality in inner product spaces

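Theorem 6.2.1: Cauchy-Schwarz inequality

If $\vec{u}, \vec{v}$ are vectors in a real inner product space, then

$$|\langle \vec{u}, \vec{v} \rangle| \le \|\vec{u}\| \, \|\vec{v}\|$$

Equivalently,

$$\langle \vec{u}, \vec{v} \rangle^2 \le \langle \vec{u}, \vec{u} \rangle \langle \vec{v}, \vec{v} \rangle$$

or

$$\langle \vec{u}, \vec{v} \rangle^2 \le \|\vec{u}\|^2 \, \|\vec{v}\|^2$$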

Theorem 6.2.2: Properties of length

If $\vec{u}, \vec{v}$ are vectors in an inner product space $V$ and $k$ is a scalar, then

1. $\|\vec{u}\| \ge 0$

2. $\|\vec{u}\| = 0$ if and only if $\vec{u} = \vec{0}$

3. $\|k\vec{u}\| = |k| \, \|\vec{u}\|$

4. $\|\vec{u} + \vec{v}\| \le \|\vec{u}\| + \|\vec{v}\|$ (triangle inequality)

Theorem 6.2.3: Properties of distance

If $\vec{u}, \vec{v}, \vec{w}$ are vectors in an inner product space $V$, then

1. $d(\vec{u}, \vec{v}) \ge 0$

2. $d(\vec{u}, \vec{v}) = 0$ if and only if $\vec{u} = \vec{v}$

3. $d(\vec{u}, \vec{v}) = d(\vec{v}, \vec{u})$

4. $d(\vec{u}, \vec{v}) \le d(\vec{u}, \vec{w}) + d(\vec{w}, \vec{v})$ (triangle inequality)

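Definition: If $\vec{u}, \vec{v}$ are nonzero vectors in a real inner product space, the angle $\theta$ between $\vec{u}$ and $\vec{v}$ is defined by

$$\cos(\theta) = \frac{\langle \vec{u}, \vec{v} \rangle}{\|\vec{u}\| \, \|\vec{v}\|}, \quad 0 \le \theta \le \pi$$

The Cauchy-Schwarz inequality guarantees that this ratio lies between $-1$ and $1$, so $\theta$ is well defined.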
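The angle formula can be tried out directly. The sketch below assumes the Euclidean inner product on $R^n$; the helper names are hypothetical. The ratio is clamped to $[-1, 1]$ only to guard against floating-point rounding, since in exact arithmetic the Cauchy-Schwarz inequality already keeps it in that range.

```python
import math


def dot(x, y):
    """Euclidean inner product (an assumption; any inner product would do)."""
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(dot(x, x))


def angle(u, v):
    """Angle in radians between nonzero vectors u and v, in [0, pi]."""
    nu, nv = norm(u), norm(v)
    if nu == 0 or nv == 0:
        raise ValueError("the angle is undefined for the zero vector")
    cos_theta = dot(u, v) / (nu * nv)
    # Clamp to [-1, 1] to absorb rounding error before calling acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.acos(cos_theta)


u, v = (1, 0), (1, 1)
print(abs(dot(u, v)) <= norm(u) * norm(v))  # Cauchy-Schwarz holds: True
print(math.degrees(angle(u, v)))            # approximately 45
print(dot((1, 2), (-2, 1)) == 0)            # an orthogonal pair: True
```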

Definition: Two vectors $\vec{u}, \vec{v}$ in an inner product space are orthogonal if $\langle \vec{u}, \vec{v} \rangle = 0$.

Theorem 6.2.4: Generalized Pythagorean Theorem

If $\vec{u}, \vec{v}$ are orthogonal vectors in a real inner product space, then

$$\|\vec{u} + \vec{v}\|^2 = \|\vec{u}\|^2 + \|\vec{v}\|^2$$
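A short derivation, using only the inner product axioms and the definition of the norm, shows where orthogonality enters: the cross term $2\langle \vec{u}, \vec{v} \rangle$ vanishes, and the identity is one between squared norms.

```latex
\begin{aligned}
\|\vec{u} + \vec{v}\|^2
  &= \langle \vec{u} + \vec{v}, \vec{u} + \vec{v} \rangle \\
  &= \langle \vec{u}, \vec{u} \rangle + 2\langle \vec{u}, \vec{v} \rangle
     + \langle \vec{v}, \vec{v} \rangle \\
  &= \|\vec{u}\|^2 + 2 \cdot 0 + \|\vec{v}\|^2
     \qquad \text{(since } \langle \vec{u}, \vec{v} \rangle = 0\text{)} \\
  &= \|\vec{u}\|^2 + \|\vec{v}\|^2
\end{aligned}
```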

Note: All of these theorems and definitions hold for ANY vector space

with inner product!

Example:

Definition: Let $W$ be a subspace of an inner product space $V$. A vector $\vec{u}$ in $V$ is orthogonal to $W$ if it is orthogonal to every vector in $W$, and the set of all vectors in $V$ that are orthogonal to $W$ is called the orthogonal complement of $W$, denoted $W^\perp$.

Example:

Terminology: We call $W$ and $W^\perp$ orthogonal complements.

Theorem 6.2.5: Properties of orthogonal complements

If $W$ is a subspace of a finite dimensional inner product space $V$, then

1. $W^\perp$ is a subspace of $V$

2. The only vector common to $W$ and $W^\perp$ is $\vec{0}$

3. The orthogonal complement of $W^\perp$ is $W$. That is, $(W^\perp)^\perp = W$

Theorem 6.2.6: If $A$ is an $m \times n$ matrix, then

1. The nullspace of $A$ and the row space of $A$ are orthogonal complements in $R^n$ with respect to the Euclidean inner product. That is,

$$(\text{row space of } A)^\perp = \text{nullspace of } A$$

2. The nullspace of $A^T$ and the column space of $A$ are orthogonal complements in $R^m$ with respect to the Euclidean inner product. That is,

$$(\text{column space of } A)^\perp = \text{nullspace of } A^T$$
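Part 1 of this theorem can be seen in a small case: $A\vec{x} = \vec{0}$ says exactly that $\vec{x}$ has zero dot product with every row of $A$, and therefore with every linear combination of the rows. The sketch below checks this for one sample matrix and one nullspace vector, both chosen for illustration only.

```python
def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))


# Illustrative 2 x 3 matrix, stored as a list of rows.
A = [[1, 0, 1],
     [0, 1, 2]]

# A vector in the nullspace of A (verified by the computation below).
x = [-1, -2, 1]

# A x = 0 means x is orthogonal to each row of A.
Ax = [dot(row, x) for row in A]

# An arbitrary vector in the row space: 5*(row 1) - 3*(row 2).
c1, c2 = 5, -3
row_combo = [c1 * a + c2 * b for a, b in zip(A[0], A[1])]

print(Ax)                 # [0, 0]
print(dot(row_combo, x))  # 0
```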

Example:

Theorem 6.2.7: If $T_A : R^n \to R^n$ is multiplication by an $n \times n$ matrix $A$, then the following are equivalent

1. $A$ is invertible

2. $A\vec{x} = \vec{0}$ has only the trivial solution

3. The reduced row echelon form of $A$ is $I_n$

4. $A$ can be expressed as a product of elementary matrices

5. $A\vec{x} = \vec{b}$ is consistent for every $n \times 1$ matrix $\vec{b}$

6. $A\vec{x} = \vec{b}$ has exactly one solution for every $n \times 1$ matrix $\vec{b}$

7. $\det(A) \ne 0$

8. The range of $T_A$ is $R^n$

9. $T_A$ is one-to-one

10. The column vectors of $A$ are linearly independent

11. The row vectors of $A$ are linearly independent

12. The column vectors of $A$ span $R^n$

13. The row vectors of $A$ span $R^n$

14. The column vectors of $A$ form a basis for $R^n$

15. The row vectors of $A$ form a basis for $R^n$

16. $A$ has rank $n$

17. $A$ has nullity 0

18. The orthogonal complement of the nullspace of $A$ is $R^n$

19. The orthogonal complement of the row space of $A$ is $\{\vec{0}\}$

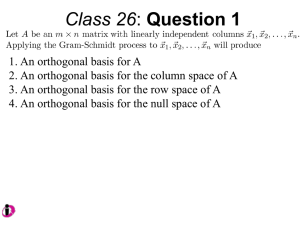

Section 6.3: Orthonormal bases; Gram-Schmidt process; QR-decomposition

Definition: A set of vectors in an inner product space is called an orthogonal set if all pairs of distinct vectors in the set are orthogonal. An orthogonal set in which each vector has norm 1 is called orthonormal. A basis of an inner product space consisting of orthogonal vectors is called an orthogonal basis, while a basis consisting of orthonormal vectors is called an orthonormal basis.

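Theorem 6.3.1: If $S = \{\vec{v}_1, \vec{v}_2, \dots, \vec{v}_n\}$ is an orthonormal basis for an inner product space $V$, and $\vec{u}$ is any vector in $V$, then

$$\vec{u} = \langle \vec{u}, \vec{v}_1 \rangle \vec{v}_1 + \langle \vec{u}, \vec{v}_2 \rangle \vec{v}_2 + \cdots + \langle \vec{u}, \vec{v}_n \rangle \vec{v}_n$$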

Example:

Theorem 6.3.2: If $S$ is an orthonormal basis for an n-dimensional inner product space, and if

$$(\vec{u})_S = (u_1, u_2, \dots, u_n), \quad (\vec{v})_S = (v_1, v_2, \dots, v_n)$$

then

1. $\|\vec{u}\| = \sqrt{u_1^2 + u_2^2 + \cdots + u_n^2}$

2. $d(\vec{u}, \vec{v}) = \sqrt{(u_1 - v_1)^2 + (u_2 - v_2)^2 + \cdots + (u_n - v_n)^2}$

3. $\langle \vec{u}, \vec{v} \rangle = u_1 v_1 + u_2 v_2 + \cdots + u_n v_n$
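Theorems 6.3.1 and 6.3.2 can be illustrated together: with an orthonormal basis, coordinates are obtained by taking inner products, and the norm can be read off from the coordinates. The sketch below uses exact fractions and the Euclidean inner product on $R^2$; the basis and the vector are assumptions chosen so that the arithmetic stays exact.

```python
from fractions import Fraction as F


def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))


# An orthonormal basis S = {v1, v2} of R^2 (illustrative choice).
v1 = (F(3, 5), F(4, 5))
v2 = (F(-4, 5), F(3, 5))
u = (1, 2)

# Theorem 6.3.1: the coordinates of u relative to S are <u, v1>, <u, v2>.
coords = [dot(u, v1), dot(u, v2)]

# Rebuild u from its coordinates: <u, v1> v1 + <u, v2> v2.
rebuilt = [coords[0] * a + coords[1] * b for a, b in zip(v1, v2)]

# Theorem 6.3.2, part 1: ||u||^2 is the sum of squared coordinates.
norm_sq_from_coords = sum(c * c for c in coords)

print(coords)                          # the fractions 11/5 and 2/5
print(rebuilt == [1, 2])               # True
print(norm_sq_from_coords, dot(u, u))  # 5 5
```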

Example:

Theorem 6.3.3: If $S = \{\vec{v}_1, \vec{v}_2, \dots, \vec{v}_n\}$ is an orthogonal set of nonzero vectors in an inner product space, then $S$ is linearly independent.

Example:

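Theorem 6.3.4: Projection theorem

If $W$ is a finite dimensional subspace of an inner product space $V$, then every vector $\vec{u}$ in $V$ can be expressed in exactly one way as

$$\vec{u} = \vec{w}_1 + \vec{w}_2$$

where $\vec{w}_1$ is in $W$ and $\vec{w}_2$ is in $W^\perp$. The vector $\vec{w}_1$ is called the orthogonal projection of $\vec{u}$ on $W$, denoted $\operatorname{proj}_W \vec{u}$, and $\vec{w}_2$ is called the component of $\vec{u}$ orthogonal to $W$, denoted $\operatorname{proj}_{W^\perp} \vec{u}$, and we can write

$$\vec{u} = \operatorname{proj}_W \vec{u} + \operatorname{proj}_{W^\perp} \vec{u} = \operatorname{proj}_W \vec{u} + (\vec{u} - \operatorname{proj}_W \vec{u})$$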

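Theorem 6.3.5: Let $W$ be a finite dimensional subspace of an inner product space $V$.

1. If $\{\vec{v}_1, \dots, \vec{v}_r\}$ is an orthonormal basis for $W$, and $\vec{u}$ is any vector in $V$, then

$$\operatorname{proj}_W \vec{u} = \langle \vec{u}, \vec{v}_1 \rangle \vec{v}_1 + \langle \vec{u}, \vec{v}_2 \rangle \vec{v}_2 + \cdots + \langle \vec{u}, \vec{v}_r \rangle \vec{v}_r$$

2. If $\{\vec{v}_1, \dots, \vec{v}_r\}$ is an orthogonal basis for $W$, and $\vec{u}$ is any vector in $V$, then

$$\operatorname{proj}_W \vec{u} = \frac{\langle \vec{u}, \vec{v}_1 \rangle}{\|\vec{v}_1\|^2} \vec{v}_1 + \cdots + \frac{\langle \vec{u}, \vec{v}_r \rangle}{\|\vec{v}_r\|^2} \vec{v}_r$$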
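The orthogonal-basis formula in part 2 translates directly into code. The following sketch assumes the Euclidean inner product on $R^3$ and uses exact fractions; the function name `project`, the subspace and the vector are illustrative choices. It also computes the leftover vector $\vec{u} - \operatorname{proj}_W \vec{u}$ and confirms that it is orthogonal to $W$, as the projection theorem states.

```python
from fractions import Fraction


def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))


def project(u, orthogonal_basis):
    """Orthogonal projection of u onto the span of an ORTHOGONAL basis.

    Implements proj_W u = sum over basis vectors v of (<u, v> / ||v||^2) v.
    The caller is responsible for passing mutually orthogonal, nonzero vectors.
    """
    proj = [Fraction(0)] * len(u)
    for v in orthogonal_basis:
        coeff = Fraction(dot(u, v)) / Fraction(dot(v, v))
        proj = [p + coeff * a for p, a in zip(proj, v)]
    return proj


# W is the xy-plane in R^3, described by an orthogonal (not orthonormal) basis.
W_basis = [(1, 1, 0), (1, -1, 0)]
u = (2, 3, 4)

p = project(u, W_basis)            # component of u in W
r = [a - b for a, b in zip(u, p)]  # component of u orthogonal to W

print(p == [2, 3, 0])                        # True
print(r == [0, 0, 4])                        # True
print([dot(r, v) == 0 for v in W_basis])     # [True, True]
```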

Example:

Theorem 6.3.6: Every nonzero finite dimensional inner product space

has an orthonormal basis.

Gram-Schmidt process: method to convert an arbitrary basis into an

orthogonal basis. This basis can then be normalized to produce an orthonormal basis.

Example:

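Summary of Gram-Schmidt process

Let $S = \{\vec{u}_1, \dots, \vec{u}_n\}$ be an arbitrary basis of an inner product space $V$.

$$
\begin{aligned}
\vec{v}_1 &= \vec{u}_1 \\
\vec{v}_2 &= \vec{u}_2 - \frac{\langle \vec{u}_2, \vec{v}_1 \rangle}{\|\vec{v}_1\|^2} \vec{v}_1 \\
\vec{v}_3 &= \vec{u}_3 - \frac{\langle \vec{u}_3, \vec{v}_1 \rangle}{\|\vec{v}_1\|^2} \vec{v}_1 - \frac{\langle \vec{u}_3, \vec{v}_2 \rangle}{\|\vec{v}_2\|^2} \vec{v}_2 \\
&\;\;\vdots \\
\vec{v}_n &= \vec{u}_n - \frac{\langle \vec{u}_n, \vec{v}_1 \rangle}{\|\vec{v}_1\|^2} \vec{v}_1 - \frac{\langle \vec{u}_n, \vec{v}_2 \rangle}{\|\vec{v}_2\|^2} \vec{v}_2 - \cdots - \frac{\langle \vec{u}_n, \vec{v}_{n-1} \rangle}{\|\vec{v}_{n-1}\|^2} \vec{v}_{n-1}
\end{aligned}
$$

Then $S' = \{\vec{v}_1, \dots, \vec{v}_n\}$ is an orthogonal basis for $V$ and $S'' = \left\{ \frac{\vec{v}_1}{\|\vec{v}_1\|}, \dots, \frac{\vec{v}_n}{\|\vec{v}_n\|} \right\}$ is an orthonormal basis for $V$.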
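The process summarized above can be sketched in a few lines of Python. This version assumes the Euclidean inner product on $R^n$ and vectors with integer or rational entries, so that the orthogonal basis is computed exactly with fractions; only the final normalization uses floating point. The function names and the sample basis are illustrative assumptions.

```python
from fractions import Fraction
import math


def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))


def gram_schmidt(basis):
    """Convert a basis u_1..u_n into an orthogonal basis v_1..v_n.

    Each v_k is u_k minus its projections onto the earlier v_1..v_{k-1}.
    """
    orthogonal = []
    for u in basis:
        v = [Fraction(c) for c in u]
        for prev in orthogonal:
            coeff = dot(u, prev) / dot(prev, prev)  # <u_k, v_j> / ||v_j||^2
            v = [a - coeff * b for a, b in zip(v, prev)]
        if all(c == 0 for c in v):
            raise ValueError("input vectors are linearly dependent")
        orthogonal.append(v)
    return orthogonal


def normalize(v):
    """Scale v to unit length (floating point)."""
    length = math.sqrt(float(dot(v, v)))
    return [float(c) / length for c in v]


S = [(1, 1, 1), (0, 1, 1), (0, 0, 1)]
S_prime = gram_schmidt(S)                          # orthogonal basis
S_double_prime = [normalize(v) for v in S_prime]   # orthonormal basis

for v in S_prime:
    print([str(c) for c in v])
```

For this input the orthogonal vectors come out as $(1, 1, 1)$, $(-\tfrac{2}{3}, \tfrac{1}{3}, \tfrac{1}{3})$ and $(0, -\tfrac{1}{2}, \tfrac{1}{2})$.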

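Theorem 6.3.7: QR-decomposition

If $A$ is an $m \times n$ matrix with linearly independent column vectors $\vec{u}_i$, then $A$ can be factored as

$$A = QR$$

where $Q$ is an $m \times n$ matrix with orthonormal column vectors $\vec{q}_i$, and $R$ is an $n \times n$ invertible upper triangular matrix given by

$$
R = \begin{bmatrix}
\langle \vec{u}_1, \vec{q}_1 \rangle & \langle \vec{u}_2, \vec{q}_1 \rangle & \cdots & \langle \vec{u}_n, \vec{q}_1 \rangle \\
0 & \langle \vec{u}_2, \vec{q}_2 \rangle & \cdots & \langle \vec{u}_n, \vec{q}_2 \rangle \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \cdots & \langle \vec{u}_n, \vec{q}_n \rangle
\end{bmatrix}
$$

Example: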
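One way to build such a factorization is to apply Gram-Schmidt to the columns of $A$, normalize as you go, and fill $R$ with the inner products $\langle \vec{u}_j, \vec{q}_i \rangle$ for $i \le j$. The sketch below does this in floating point with the Euclidean inner product. It is a teaching sketch under those assumptions, not a numerically robust routine; the function name and the sample matrix are hypothetical.

```python
import math


def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))


def qr_gram_schmidt(A):
    """Return (Q, R) with A = Q R, for A given as a list of rows.

    Assumes the columns of A are linearly independent.
    """
    m, n = len(A), len(A[0])
    cols = [[A[i][j] for i in range(m)] for j in range(n)]  # u_1..u_n
    qs = []
    for u in cols:
        v = [float(c) for c in u]
        for q in qs:
            c = dot(u, q)  # <u_j, q_i>, because q_i has norm 1
            v = [a - c * b for a, b in zip(v, q)]
        length = math.sqrt(dot(v, v))
        if length < 1e-12:
            raise ValueError("columns appear to be linearly dependent")
        qs.append([a / length for a in v])
    Q = [[qs[j][i] for j in range(n)] for i in range(m)]
    R = [[dot(cols[j], qs[i]) if j >= i else 0.0 for j in range(n)]
         for i in range(n)]
    return Q, R


A = [[3, 1],
     [4, 2]]
Q, R = qr_gram_schmidt(A)

# Multiply Q and R back together to compare with A.
QR = [[sum(Q[i][k] * R[k][j] for k in range(2)) for j in range(2)]
      for i in range(2)]
print(QR)  # agrees with A up to rounding
```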

Section 6.5: Change of basis

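Recall: If $S$ and $S'$ are bases of the same space, then each vector in $S$ can be written as a linear combination of the vectors in $S'$, and each vector in $S'$ can be written as a linear combination of the vectors in $S$. Also recall that the coordinate vector of $\vec{v}$ with respect to $S$, written as a column vector, is

$$
[\vec{v}]_S = \begin{bmatrix} k_1 \\ k_2 \\ \vdots \\ k_n \end{bmatrix}
$$

where $k_1, k_2, \dots, k_n$ are the coefficients that express $\vec{v}$ as a linear combination of the vectors in $S$.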

Change of basis: If $B = \{\vec{b}_1, \vec{b}_2, \dots, \vec{b}_n\}$ is a basis for an inner product space $V$ and $C = \{\vec{c}_1, \vec{c}_2, \dots, \vec{c}_n\}$ is another basis for $V$, then there is a unique $n \times n$ matrix $P_{C \leftarrow B}$, called the transition matrix from $B$ to $C$, such that

$$[\vec{v}]_C = P_{C \leftarrow B} [\vec{v}]_B$$

where the columns of $P_{C \leftarrow B}$ are the coordinate vectors of the basis $B$ relative to the basis $C$. That is,

$$P_{C \leftarrow B} = \Big[ \, [\vec{b}_1]_C, \; [\vec{b}_2]_C, \; \dots, \; [\vec{b}_n]_C \, \Big]$$

Theorem 6.5.1: If $P_{C \leftarrow B}$ is the transition matrix from a basis $B$ to a basis $C$ for a finite dimensional space $V$, then $P_{C \leftarrow B}$ is invertible and $(P_{C \leftarrow B})^{-1}$ is the transition matrix from $C$ to $B$. That is,

$$[\vec{v}]_C = P_{C \leftarrow B} [\vec{v}]_B$$

$$[\vec{v}]_B = (P_{C \leftarrow B})^{-1} [\vec{v}]_C$$
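A small case in $R^2$ makes the construction concrete: each column of the transition matrix is found by solving for the coordinates of one vector of $B$ relative to $C$. The sketch below uses Cramer's rule with exact fractions. The bases, the helper names `coords_2d` and `transition_matrix`, and the restriction to two dimensions are assumptions made to keep the example short.

```python
from fractions import Fraction


def coords_2d(basis, v):
    """Coordinates of v relative to a basis of R^2, via Cramer's rule.

    Solves x*(a, c) + y*(b, d) = v for the basis vectors (a, c) and (b, d).
    """
    (a, c), (b, d) = basis
    det = a * d - b * c
    if det == 0:
        raise ValueError("the given vectors do not form a basis")
    x = Fraction(v[0] * d - b * v[1], det)
    y = Fraction(a * v[1] - c * v[0], det)
    return [x, y]


def transition_matrix(from_basis, to_basis):
    """Matrix whose columns are the from_basis vectors in to_basis coordinates."""
    cols = [coords_2d(to_basis, b) for b in from_basis]
    return [[cols[j][i] for j in range(2)] for i in range(2)]


def mat_vec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


B = [(1, 0), (1, 1)]
C = [(1, 1), (1, -1)]

P_CB = transition_matrix(B, C)  # transition matrix from B to C
P_BC = transition_matrix(C, B)  # transition matrix from C to B

v_B = [2, 3]                    # v = 2*(1, 0) + 3*(1, 1) = (5, 3)
v_C = mat_vec(P_CB, v_B)        # coordinates of the same v relative to C

print(v_C == [4, 1])                               # True
print(mat_mul(P_CB, P_BC) == [[1, 0], [0, 1]])     # True: the two are inverses
```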

Example:

Section 6.6: Orthogonal matrices

Definition: A square matrix $A$ with the property

$$A^{-1} = A^T$$

is said to be an orthogonal matrix. That is, a square matrix is orthogonal if and only if

$$AA^T = A^T A = I$$

Example:

Theorem 6.6.1: For an $n \times n$ matrix $A$, the following statements are equivalent

1. $A$ is orthogonal

2. The row vectors of $A$ form an orthonormal set in $R^n$ with the Euclidean inner product

3. The column vectors of $A$ form an orthonormal set in $R^n$ with the Euclidean inner product

Theorem 6.6.2:

1. The inverse of an orthogonal matrix is orthogonal

2. The product of orthogonal matrices is orthogonal

3. If A is orthogonal, then det(A) = ±1

Example:

Theorem 6.6.3: For an $n \times n$ matrix $A$, the following statements are equivalent

1. $A$ is orthogonal

2. $\|A\vec{x}\| = \|\vec{x}\|$ for all $\vec{x}$ in $R^n$

3. $A\vec{x} \cdot A\vec{y} = \vec{x} \cdot \vec{y}$ for all $\vec{x}, \vec{y}$ in $R^n$
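These characterizations can be checked on a concrete orthogonal matrix. The sketch below uses exact fractions so that every comparison is an equality rather than an approximation. The matrix (a rotation built from the 3-4-5 triangle) and the test vectors are illustrative assumptions; checking two vectors illustrates statements 2 and 3 but does not prove them for all vectors.

```python
from fractions import Fraction as F


def dot(x, y):
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(x, y))


def transpose(A):
    return [list(col) for col in zip(*A)]


def mat_mul(A, B):
    B_cols = transpose(B)
    return [[dot(row, col) for col in B_cols] for row in A]


def mat_vec(A, x):
    return [dot(row, x) for row in A]


A = [[F(3, 5), F(-4, 5)],
     [F(4, 5), F(3, 5)]]
I2 = [[1, 0], [0, 1]]

# Definition: A A^T = A^T A = I.
is_orthogonal = (mat_mul(transpose(A), A) == I2
                 and mat_mul(A, transpose(A)) == I2)

# Theorem 6.6.2, part 3: det(A) is +1 or -1.
det_A = A[0][0] * A[1][1] - A[0][1] * A[1][0]

# Theorem 6.6.3: norms and dot products are preserved.
x, y = [2, -1], [1, 3]
Ax, Ay = mat_vec(A, x), mat_vec(A, y)
preserves_norm = dot(Ax, Ax) == dot(x, x)  # compares squared norms
preserves_dot = dot(Ax, Ay) == dot(x, y)

print(is_orthogonal, det_A == 1, preserves_norm, preserves_dot)
```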

Example:

Definition: If $T : R^n \to R^n$ is multiplication by an orthogonal matrix $A$, then $T$ is called an orthogonal operator on $R^n$.

Theorem 6.6.4: If $P_{C \leftarrow B}$ is the transition matrix from one orthonormal basis to another orthonormal basis for an inner product space, then $P_{C \leftarrow B}$ is an orthogonal matrix. That is,

$$(P_{C \leftarrow B})^{-1} = (P_{C \leftarrow B})^T$$
