Inner Product Spaces

Outline

Section 6: Inner Product Spaces

6.1 Inner Products

6.2 Angle and orthogonality in inner product spaces

6.3 Orthogonal Bases; Gram-Schmidt Process

6.5 Orthogonal Matrices: Change of Basis

6.1 Inner Products

Definition

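An inner product on a real vector space $V$ is a function that associates a real number $\langle u, v \rangle$ with each pair of vectors $u$ and $v$ in $V$ such that for all $u, v, w \in V$ and all $k \in \mathbb{R}$:

1. $\langle u, v \rangle = \langle v, u \rangle$ (symmetry)
2. $\langle u + v, w \rangle = \langle u, w \rangle + \langle v, w \rangle$ (additivity)
3. $\langle k u, v \rangle = k \langle u, v \rangle$ (homogeneity)
4. $\langle v, v \rangle \ge 0$, and $\langle v, v \rangle = 0$ if and only if $v = 0$ (positivity)

A real vector space with an inner product is called a real inner product space.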

Example

For $u = (u_1, \ldots, u_n)$ and $v = (v_1, \ldots, v_n)$ in $\mathbb{R}^n$ and positive real numbers $w_1, \ldots, w_n$, the formula

$$\langle u, v \rangle = w_1 u_1 v_1 + \cdots + w_n u_n v_n$$

defines the weighted Euclidean inner product.

Example

Verify that for $u, v \in \mathbb{R}^2$ the weighted Euclidean inner product

$$\langle u, v \rangle = 3u_1 v_1 + 2u_2 v_2$$

satisfies the four inner product axioms.
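Algebraically, symmetry, additivity and homogeneity follow directly from the formula, and positivity holds because $\langle v, v \rangle = 3v_1^2 + 2v_2^2 \ge 0$ with equality only when $v_1 = v_2 = 0$. As a complement (a spot check on specific vectors, not a proof), here is a minimal Python sketch; the helper names `weighted_inner` and `check_axioms` are hypothetical, and exact `Fraction` arithmetic is assumed so that equalities are tested without rounding.

```python
from fractions import Fraction

def weighted_inner(u, v, weights=(3, 2)):
    """Weighted Euclidean inner product: sum of wt_i * u_i * v_i."""
    return sum(wt * a * b for wt, a, b in zip(weights, u, v))

def check_axioms(u, v, w, k):
    """Check the four axioms for one particular choice of u, v, w and scalar k."""
    u_plus_v = [a + b for a, b in zip(u, v)]
    k_u = [k * a for a in u]
    return (
        weighted_inner(u, v) == weighted_inner(v, u)                                  # symmetry
        and weighted_inner(u_plus_v, w) == weighted_inner(u, w) + weighted_inner(v, w)  # additivity
        and weighted_inner(k_u, v) == k * weighted_inner(u, v)                        # homogeneity
        and weighted_inner(v, v) >= 0                                                 # positivity (sign only)
    )

print(check_axioms((1, -2), (3, 5), (0, 4), Fraction(-3, 2)))  # True
```

A passing check on a few vectors is consistent with the axioms but does not replace the algebraic argument above.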

Definition

If $V$ is an inner product space, then the norm (or length) of $u \in V$ is

$$\|u\| = \langle u, u \rangle^{1/2}.$$

The distance between $u, v \in V$ is defined by

$$d(u, v) = \|u - v\|.$$

Example

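If $V = \mathbb{R}^n$ with the Euclidean inner product and $u, v \in V$, then

$$\|u\| = \langle u, u \rangle^{1/2} = (u \cdot u)^{1/2} = \sqrt{u_1^2 + \cdots + u_n^2},$$

$$d(u, v) = \|u - v\| = \sqrt{(u_1 - v_1)^2 + \cdots + (u_n - v_n)^2}.$$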
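Norm and distance depend on the chosen inner product. A small sketch, assuming vectors are plain Python tuples and using hypothetical helper names, computes the distance between $(1, 0)$ and $(0, 1)$ once with the Euclidean inner product and once with the weighted product $3u_1v_1 + 2u_2v_2$ from the previous example.

```python
import math

def inner(u, v, weights=None):
    """Euclidean inner product, or the weighted one if weights are given."""
    if weights is None:
        weights = [1] * len(u)
    return sum(wt * a * b for wt, a, b in zip(weights, u, v))

def norm(u, weights=None):
    """||u|| = <u, u>^(1/2)."""
    return math.sqrt(inner(u, u, weights))

def distance(u, v, weights=None):
    """d(u, v) = ||u - v||."""
    return norm([a - b for a, b in zip(u, v)], weights)

u, v = (1, 0), (0, 1)
print(distance(u, v))          # sqrt(2), about 1.4142 (Euclidean)
print(distance(u, v, (3, 2)))  # sqrt(5), about 2.2361 (weighted 3u1v1 + 2u2v2)
```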

Inner products generated by matrices

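Let

$$u = \begin{bmatrix} u_1 \\ u_2 \\ \vdots \\ u_n \end{bmatrix} \quad\text{and}\quad v = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}$$

be in $\mathbb{R}^n$ (written as column vectors) and let $A$ be an invertible $n \times n$ matrix. Then

$$\langle u, v \rangle = Au \cdot Av$$

is the inner product on $\mathbb{R}^n$ generated by $A$.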

Since $u \cdot v = v^T u$, one has

$$\langle u, v \rangle = (Av)^T Au$$

or, equivalently,

$$\langle u, v \rangle = v^T A^T A u.$$

Example

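The weighted Euclidean inner product $\langle u, v \rangle = 3u_1 v_1 + 2u_2 v_2$ is generated by

$$A = \begin{bmatrix} \sqrt{3} & 0 \\ 0 & \sqrt{2} \end{bmatrix}.$$

Indeed (here $A^T = A$),

$$\langle u, v \rangle = \begin{bmatrix} v_1 & v_2 \end{bmatrix} \begin{bmatrix} \sqrt{3} & 0 \\ 0 & \sqrt{2} \end{bmatrix} \begin{bmatrix} \sqrt{3} & 0 \\ 0 & \sqrt{2} \end{bmatrix} \begin{bmatrix} u_1 \\ u_2 \end{bmatrix} = \begin{bmatrix} v_1 & v_2 \end{bmatrix} \begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix} \begin{bmatrix} u_1 \\ u_2 \end{bmatrix} = 3u_1 v_1 + 2u_2 v_2.$$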
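The definition $\langle u, v \rangle = Au \cdot Av$ translates directly into code. The sketch below (matrices as lists of rows; helper names are hypothetical) reproduces the diagonal example and also tries a non-diagonal invertible matrix $B$, chosen only for illustration, for which $\langle u, v \rangle = (u_1 + u_2)(v_1 + v_2) + u_2 v_2$.

```python
import math

def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def generated_inner(A, u, v):
    """Inner product on R^n generated by an invertible matrix A: <u, v> = Au . Av."""
    return dot(mat_vec(A, u), mat_vec(A, v))

A = [[math.sqrt(3), 0], [0, math.sqrt(2)]]
u, v = (2, -1), (4, 5)
print(generated_inner(A, u, v))  # approximately 14 = 3*2*4 + 2*(-1)*5, up to rounding

B = [[1, 1], [0, 1]]             # hypothetical invertible, non-diagonal matrix
print(generated_inner(B, u, v))  # 4 = (2 - 1)(4 + 5) + (-1)(5)
```

The square roots in $A$ make the first result a floating-point approximation; the integer matrix $B$ gives an exact value.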

Example

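If

$$U = \begin{bmatrix} u_1 & u_2 \\ u_3 & u_4 \end{bmatrix} \quad\text{and}\quad V = \begin{bmatrix} v_1 & v_2 \\ v_3 & v_4 \end{bmatrix},$$

then the following formula defines an inner product on $M_{22}$:

$$\langle U, V \rangle = \operatorname{tr}(U^T V) = \operatorname{tr}(V^T U) = u_1 v_1 + u_2 v_2 + u_3 v_3 + u_4 v_4.$$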
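The three expressions for $\langle U, V \rangle$ can be compared numerically. A minimal sketch, assuming $2 \times 2$ matrices stored as lists of rows (helper names are illustrative only); the test matrices are arbitrary, and the second pair gives 0.

```python
def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def trace(M):
    return sum(M[i][i] for i in range(len(M)))

def trace_inner(U, V):
    """<U, V> = tr(U^T V)."""
    return trace(matmul(transpose(U), V))

def entrywise_inner(U, V):
    """u1 v1 + u2 v2 + u3 v3 + u4 v4: sum of products of matching entries."""
    return sum(a * b for ru, rv in zip(U, V) for a, b in zip(ru, rv))

U = [[1, 2], [3, 4]]
V = [[-1, 0], [5, 2]]
print(trace_inner(U, V), trace_inner(V, U), entrywise_inner(U, V))  # 22 22 22
print(trace_inner([[1, 0], [1, 1]], [[0, 2], [0, 0]]))              # 0
```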

Example

If

$$p = a_0 + a_1 x + a_2 x^2 \quad\text{and}\quad q = b_0 + b_1 x + b_2 x^2,$$

then the following formula defines an inner product on $P_2$:

$$\langle p, q \rangle = a_0 b_0 + a_1 b_1 + a_2 b_2.$$

Theorem (Properties of Inner Products)

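For all $u, v, w \in V$ and $k \in \mathbb{R}$:

1. $\langle 0, v \rangle = \langle v, 0 \rangle = 0$
2. $\langle u, k v \rangle = k \langle u, v \rangle$
3. $\langle u, v \pm w \rangle = \langle u, v \rangle \pm \langle u, w \rangle$
4. $\langle u \pm v, w \rangle = \langle u, w \rangle \pm \langle v, w \rangle$

Proof.

For part (3):

$$\begin{aligned} \langle u, v \pm w \rangle &= \langle v \pm w, u \rangle && \text{(symmetry)} \\ &= \langle v, u \rangle \pm \langle w, u \rangle && \text{(additivity and homogeneity)} \\ &= \langle u, v \rangle \pm \langle u, w \rangle && \text{(symmetry)} \end{aligned}$$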

6.2 Angle and orthogonality in inner product spaces

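Recall that for the dot product one has

$$u \cdot v = \|u\| \|v\| \cos\theta,$$

so

$$\cos\theta = \frac{u \cdot v}{\|u\| \|v\|}.$$

For nonzero $u, v \in V$ define

$$\cos\theta = \frac{\langle u, v \rangle}{\|u\| \|v\|}.$$

This requires the right-hand side to lie in $[-1, 1]$, which the following inequality guarantees.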

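Theorem (Cauchy-Schwarz Inequality)

$$|\langle u, v \rangle| \le \|u\| \|v\| \quad \forall u, v \in V$$

Proof.

If $u = 0$, both sides are 0, so assume $u \ne 0$. Denote $a = \langle u, u \rangle$, $b = 2\langle u, v \rangle$, $c = \langle v, v \rangle$. Then for all $r \in \mathbb{R}$:

$$0 \le \langle r u + v, r u + v \rangle = \langle u, u \rangle r^2 + 2\langle u, v \rangle r + \langle v, v \rangle = a r^2 + b r + c.$$

Since $a > 0$ and this quadratic in $r$ is never negative, it has at most one real root, so its discriminant satisfies $b^2 - 4ac \le 0$, that is,

$$4\langle u, v \rangle^2 - 4\langle u, u \rangle \langle v, v \rangle \le 0$$

or

$$|\langle u, v \rangle| \le \|u\| \|v\|.$$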

Alternative forms of the Cauchy-Schwarz Inequality:

$$\langle u, v \rangle^2 \le \langle u, u \rangle \langle v, v \rangle \quad\text{and}\quad \langle u, v \rangle^2 \le \|u\|^2 \|v\|^2$$

Theorem (Properties of Length)

For all $u, v \in V$ and all $k \in \mathbb{R}$:

1. $\|u\| \ge 0$
2. $\|k u\| = |k| \, \|u\|$
3. $\|u\| = 0$ if and only if $u = 0$
4. $\|u + v\| \le \|u\| + \|v\|$ (triangle inequality)

Theorem (Properties of Distance)

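For all $u, v, w \in V$:

1. $d(u, v) \ge 0$
2. $d(u, v) = d(v, u)$
3. $d(u, v) = 0$ if and only if $u = v$
4. $d(u, v) \le d(u, w) + d(w, v)$ (triangle inequality)

Proof.

We prove part (4) of the Properties of Length; part (4) of the Properties of Distance follows from it, since $u - v = (u - w) + (w - v)$. One has:

$$\begin{aligned} \|u + v\|^2 &= \langle u + v, u + v \rangle \\ &= \langle u, u \rangle + 2\langle u, v \rangle + \langle v, v \rangle \\ &\le \langle u, u \rangle + 2|\langle u, v \rangle| + \langle v, v \rangle \\ &\le \langle u, u \rangle + 2\|u\|\|v\| + \langle v, v \rangle \\ &= \|u\|^2 + 2\|u\|\|v\| + \|v\|^2 \\ &= (\|u\| + \|v\|)^2 \end{aligned}$$

The second inequality is based on the Cauchy-Schwarz Inequality. Taking square roots gives $\|u + v\| \le \|u\| + \|v\|$.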

Angle between vectors

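By the Cauchy-Schwarz Inequality, for nonzero $u$ and $v$:

$$\left[\frac{\langle u, v \rangle}{\|u\| \|v\|}\right]^2 \le 1$$

or

$$-1 \le \frac{\langle u, v \rangle}{\|u\| \|v\|} \le 1.$$

Hence, there exists a unique angle $\theta$ such that

$$\cos\theta = \frac{\langle u, v \rangle}{\|u\| \|v\|} \quad\text{and}\quad 0 \le \theta \le \pi.$$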
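The angle formula can be evaluated for any inner product passed in as a function. This is a sketch under the assumption that vectors are tuples and that `angle` and `weighted` are illustrative names; `weighted` is the product $3u_1v_1 + 2u_2v_2$ from Section 6.1. The same pair of vectors is orthogonal for the dot product but not for the weighted product.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def angle(u, v, inner=dot):
    """Angle in [0, pi] between nonzero u and v with respect to the given inner product."""
    nu = math.sqrt(inner(u, u))
    nv = math.sqrt(inner(v, v))
    c = inner(u, v) / (nu * nv)
    # Cauchy-Schwarz gives -1 <= c <= 1 in exact arithmetic;
    # clamp to absorb floating-point rounding before calling acos.
    return math.acos(max(-1.0, min(1.0, c)))

def weighted(u, v):
    """Weighted Euclidean inner product <u, v> = 3 u1 v1 + 2 u2 v2."""
    return 3 * u[0] * v[0] + 2 * u[1] * v[1]

u, v = (1, 1), (1, -1)
print(angle(u, v))            # pi/2: orthogonal for the dot product
print(angle(u, v, weighted))  # acos(1/5): not orthogonal for the weighted product
```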

Definition

Vectors $u, v \in V$ are called orthogonal if $\langle u, v \rangle = 0$.

Example

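The matrices

$$U = \begin{bmatrix} 1 & 0 \\ 1 & 1 \end{bmatrix} \quad\text{and}\quad V = \begin{bmatrix} 0 & 2 \\ 0 & 0 \end{bmatrix}$$

are orthogonal with respect to the inner product $\langle U, V \rangle = \operatorname{tr}(U^T V)$ on $M_{22}$, since

$$\langle U, V \rangle = 1 \cdot 0 + 0 \cdot 2 + 1 \cdot 0 + 1 \cdot 0 = 0.$$

(This is orthogonality of $U$ and $V$ as vectors of $M_{22}$; neither is an orthogonal matrix in the sense of Section 6.5.)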

Theorem (Generalized Theorem of Pythagoras)

For any orthogonal vectors $u$ and $v$ in an inner product space:

$$\|u + v\|^2 = \|u\|^2 + \|v\|^2$$

Proof.

$$\|u + v\|^2 = \langle u + v, u + v \rangle = \|u\|^2 + 2\langle u, v \rangle + \|v\|^2 = \|u\|^2 + \|v\|^2,$$

since $\langle u, v \rangle = 0$.

Orthogonal complements

Definition

Let $W$ be a subspace of an inner product space $V$. A vector $u \in V$ is said to be orthogonal to $W$ if it is orthogonal to every vector in $W$, and the set of vectors in $V$ that are orthogonal to $W$ is called the orthogonal complement of $W$ (notation $W^\perp$).

Theorem (Properties of orthogonal complements)

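If $W$ is a subspace of a finite-dimensional inner product space $V$, then

1. $W^\perp$ is a subspace of $V$
2. $W \cap W^\perp = \{0\}$
3. $(W^\perp)^\perp = W$

Proof.

For now we only prove parts (1) and (2).

1. $W^\perp$ contains $0$, since $\langle 0, w \rangle = 0$ for all $w \in W$. We show that $W^\perp$ is closed under addition and scalar multiplication. Indeed, for all $u, v \in W^\perp$, $k \in \mathbb{R}$ and $w \in W$:
$$\langle u + v, w \rangle = \langle u, w \rangle + \langle v, w \rangle = 0 + 0 = 0, \qquad \langle k u, w \rangle = k \langle u, w \rangle = k \cdot 0 = 0.$$
2. If $v \in W \cap W^\perp$, then $v$ is orthogonal to itself, so $\langle v, v \rangle = 0$ and hence $v = 0$.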

Theorem

If $A$ is an $m \times n$ matrix, then:

1. The nullspace of $A$ and the row space of $A$ are orthogonal complements in $\mathbb{R}^n$ with respect to the Euclidean inner product.
2. The nullspace of $A^T$ and the column space of $A$ are orthogonal complements in $\mathbb{R}^m$ with respect to the Euclidean inner product.

Proof.

We only prove part (1); part (2) follows by applying part (1) to $A^T$, whose row space is the column space of $A$.

Assume first that $v$ is orthogonal to every vector in the row space of $A$. In particular, $v$ is orthogonal to the row vectors $r_1, \ldots, r_m$ of $A$:

$$r_1 \cdot v = r_2 \cdot v = \cdots = r_m \cdot v = 0.$$

Since for every $x \in \mathbb{R}^n$

$$Ax = \begin{bmatrix} r_1 \cdot x \\ r_2 \cdot x \\ \vdots \\ r_m \cdot x \end{bmatrix},$$

one has $Av = 0$, so $v$ is in the nullspace of $A$.

Conversely, if $Av = 0$, then, by the above,

$$r_1 \cdot v = r_2 \cdot v = \cdots = r_m \cdot v = 0.$$

Since every vector $r$ in the row space is a linear combination of the rows,

$$r = c_1 r_1 + c_2 r_2 + \cdots + c_m r_m,$$

one has

$$\begin{aligned} r \cdot v &= (c_1 r_1 + c_2 r_2 + \cdots + c_m r_m) \cdot v \\ &= c_1 (r_1 \cdot v) + c_2 (r_2 \cdot v) + \cdots + c_m (r_m \cdot v) \\ &= 0 + 0 + \cdots + 0 = 0, \end{aligned}$$

so $v$ is orthogonal to every vector in the row space of $A$.

Example

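Let $W$ be the subspace of $\mathbb{R}^5$ spanned by the vectors

$$w_1 = (2, 2, -1, 0, 1), \quad w_2 = (-1, -1, 2, -3, 1), \quad w_3 = (1, 1, -2, 0, -1), \quad w_4 = (0, 0, 1, 1, 1).$$

Find a basis for the orthogonal complement of $W$.

Solution: Compose the matrix whose rows are $w_1, \ldots, w_4$:

$$A = \begin{bmatrix} 2 & 2 & -1 & 0 & 1 \\ -1 & -1 & 2 & -3 & 1 \\ 1 & 1 & -2 & 0 & -1 \\ 0 & 0 & 1 & 1 & 1 \end{bmatrix}$$

Then $W$ is the row space of $A$, so by the preceding theorem $W^\perp$ is the nullspace of $A$. Since the vectors $v_1 = (-1, 1, 0, 0, 0)$ and $v_2 = (-1, 0, -1, 0, 1)$ form a basis for the nullspace of $A$, these vectors also form a basis for the orthogonal complement of $W$.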
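The nullspace basis quoted in the solution can be reproduced by Gauss-Jordan elimination. A minimal sketch, assuming exact `fractions.Fraction` arithmetic; `nullspace_basis` is a hypothetical helper, not a library function. It sets one free variable to 1 at a time, so another elimination order could produce a different (equally valid) basis.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def nullspace_basis(rows):
    """Basis of {x : Ax = 0}, found by Gauss-Jordan elimination in exact arithmetic."""
    A = [[Fraction(x) for x in row] for row in rows]
    m, n = len(A), len(A[0])
    pivots, r = [], 0
    for c in range(n):
        # Look for a nonzero pivot in column c, at or below row r.
        p = next((i for i in range(r, m) if A[i][c] != 0), None)
        if p is None:
            continue  # no pivot: column c belongs to a free variable
        A[r], A[p] = A[p], A[r]
        A[r] = [x / A[r][c] for x in A[r]]  # scale the pivot to 1
        for i in range(m):
            if i != r and A[i][c] != 0:     # clear the rest of column c
                f = A[i][c]
                A[i] = [a - f * b for a, b in zip(A[i], A[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[free] = Fraction(1)               # one free variable set to 1
        for i, pc in enumerate(pivots):
            x[pc] = -A[i][free]             # solve for the pivot variables
        basis.append(x)
    return basis

W = [(2, 2, -1, 0, 1), (-1, -1, 2, -3, 1), (1, 1, -2, 0, -1), (0, 0, 1, 1, 1)]
basis = nullspace_basis(W)
print([[int(x) for x in v] for v in basis])           # [[-1, 1, 0, 0, 0], [-1, 0, -1, 0, 1]]
print(all(dot(w, v) == 0 for w in W for v in basis))  # True
```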

Orthogonal Bases

Definition

A set of vectors is called an orthogonal set if every two distinct vectors in it are orthogonal. An orthogonal set in which each vector has norm 1 is called orthonormal.

Example

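Let $u_1 = (0, 1, 0)$, $u_2 = (1, 0, 1)$, $u_3 = (1, 0, -1)$ and assume $\mathbb{R}^3$ has the Euclidean inner product. Then the set $S = \{u_1, u_2, u_3\}$ is orthogonal.

Since $\|u_1\| = 1$, $\|u_2\| = \sqrt{2}$, $\|u_3\| = \sqrt{2}$, the vectors

$$v_1 = \frac{u_1}{\|u_1\|} = (0, 1, 0), \quad v_2 = \frac{u_2}{\|u_2\|} = \left(\frac{1}{\sqrt{2}}, 0, \frac{1}{\sqrt{2}}\right), \quad v_3 = \frac{u_3}{\|u_3\|} = \left(\frac{1}{\sqrt{2}}, 0, -\frac{1}{\sqrt{2}}\right)$$

form an orthonormal set.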
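Normalizing and testing orthonormality are both one-liners over the dot product. A sketch under the assumption of the Euclidean inner product and floating-point arithmetic, so the check uses a tolerance; `normalize` and `is_orthonormal` are illustrative names.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(u):
    """Return u / ||u|| for a nonzero vector u (Euclidean norm)."""
    n = math.sqrt(dot(u, u))
    return tuple(a / n for a in u)

def is_orthonormal(vectors, tol=1e-12):
    """Check <v_i, v_j> = 1 if i == j and 0 otherwise, up to a floating-point tolerance."""
    return all(
        abs(dot(vi, vj) - (1.0 if i == j else 0.0)) <= tol
        for i, vi in enumerate(vectors)
        for j, vj in enumerate(vectors)
    )

S = [(0, 1, 0), (1, 0, 1), (1, 0, -1)]
V = [normalize(u) for u in S]
print(is_orthonormal(S))  # False: orthogonal, but u2 and u3 have norm sqrt(2)
print(is_orthonormal(V))  # True
```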

Theorem

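If $S = \{v_1, v_2, \ldots, v_n\}$ is an orthonormal basis for an inner product space $V$ and $u \in V$, then

$$u = \langle u, v_1 \rangle v_1 + \langle u, v_2 \rangle v_2 + \cdots + \langle u, v_n \rangle v_n.$$

Proof.

Since $S$ is a basis, $u$ is a linear combination of the basis vectors:

$$u = k_1 v_1 + k_2 v_2 + \cdots + k_n v_n.$$

We show that $k_i = \langle u, v_i \rangle$ for $i = 1, 2, \ldots, n$. Indeed,

$$\begin{aligned} \langle u, v_i \rangle &= \langle k_1 v_1 + k_2 v_2 + \cdots + k_n v_n, v_i \rangle \\ &= k_1 \langle v_1, v_i \rangle + k_2 \langle v_2, v_i \rangle + \cdots + k_n \langle v_n, v_i \rangle \\ &= k_i \langle v_i, v_i \rangle = k_i, \end{aligned}$$

since $\langle v_j, v_i \rangle = 0$ for $j \ne i$ and $\langle v_i, v_i \rangle = 1$.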

Example

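For the orthonormal basis $v_1 = (0, 1, 0)$, $v_2 = \left(-\frac{4}{5}, 0, \frac{3}{5}\right)$, $v_3 = \left(\frac{3}{5}, 0, \frac{4}{5}\right)$ and $u = (1, 1, 1)$ one has

$$\langle u, v_1 \rangle = 1, \quad \langle u, v_2 \rangle = -\frac{1}{5}, \quad \langle u, v_3 \rangle = \frac{7}{5},$$

so

$$u = v_1 - \frac{1}{5} v_2 + \frac{7}{5} v_3.$$

That is,

$$(1, 1, 1) = (0, 1, 0) - \frac{1}{5}\left(-\frac{4}{5}, 0, \frac{3}{5}\right) + \frac{7}{5}\left(\frac{3}{5}, 0, \frac{4}{5}\right).$$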
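With an orthonormal basis, finding coordinates needs only inner products, not a linear solve. A minimal sketch with exact `Fraction` arithmetic (helper names hypothetical) recomputes the coefficients above and rebuilds $u$ from them.

```python
from fractions import Fraction as F

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def coordinates(u, basis):
    """Coordinates of u relative to an orthonormal basis: k_i = <u, v_i>."""
    return [dot(u, v) for v in basis]

def combine(coeffs, basis):
    """Rebuild k_1 v_1 + ... + k_n v_n."""
    n = len(basis[0])
    return tuple(sum(k * v[j] for k, v in zip(coeffs, basis)) for j in range(n))

basis = [(0, 1, 0), (F(-4, 5), 0, F(3, 5)), (F(3, 5), 0, F(4, 5))]
u = (1, 1, 1)
k = coordinates(u, basis)
print(k)                  # [1, Fraction(-1, 5), Fraction(7, 5)]
print(combine(k, basis))  # (Fraction(1, 1), Fraction(1, 1), Fraction(1, 1))
```

The shortcut $k_i = \langle u, v_i \rangle$ is valid only for an orthonormal basis; for a general basis the coordinates come from solving a linear system.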

Theorem

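If $S$ is an orthonormal basis for an $n$-dimensional inner product space, and if $(u)_S = (u_1, \ldots, u_n)$ and $(v)_S = (v_1, \ldots, v_n)$, then

(a) $\|u\| = \sqrt{u_1^2 + u_2^2 + \cdots + u_n^2}$

(b) $d(u, v) = \sqrt{(u_1 - v_1)^2 + (u_2 - v_2)^2 + \cdots + (u_n - v_n)^2}$

(c) $\langle u, v \rangle = u_1 v_1 + u_2 v_2 + \cdots + u_n v_n$

Proof for part (a): Write $S = \{s_1, \ldots, s_n\}$, so that $u = \sum_{i=1}^n u_i s_i$. Then

$$\|u\|^2 = \langle u, u \rangle = \left\langle \sum_{i=1}^n u_i s_i, \sum_{j=1}^n u_j s_j \right\rangle = \sum_{i=1}^n \sum_{j=1}^n u_i u_j \langle s_i, s_j \rangle = \sum_{i=1}^n u_i^2 \langle s_i, s_i \rangle = u_1^2 + u_2^2 + \cdots + u_n^2,$$

so $\|u\| = \sqrt{u_1^2 + u_2^2 + \cdots + u_n^2}$.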
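The three formulas can be spot-checked by comparing computations in $\mathbb{R}^3$ with the same computations on coordinate vectors. This sketch reuses the orthonormal basis of the previous example; the second vector $v = (2, 0, -1)$ is an arbitrary choice, and squared quantities are compared so that the check stays exact.

```python
from fractions import Fraction as F

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

basis = [(0, 1, 0), (F(-4, 5), 0, F(3, 5)), (F(3, 5), 0, F(4, 5))]
u, v = (1, 1, 1), (2, 0, -1)
u_S = [dot(u, b) for b in basis]  # coordinates of u relative to the orthonormal basis
v_S = [dot(v, b) for b in basis]

# (c): <u, v> equals the dot product of the coordinate vectors.
print(dot(u, v) == dot(u_S, v_S))              # True
# (a), squared: ||u||^2 equals the sum of squared coordinates.
print(dot(u, u) == dot(u_S, u_S))              # True
# (b), squared: d(u, v)^2 computed both ways.
diff = [a - b for a, b in zip(u, v)]
diff_S = [a - b for a, b in zip(u_S, v_S)]
print(dot(diff, diff) == dot(diff_S, diff_S))  # True
```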

Theorem

If $S = \{v_1, v_2, \ldots, v_n\}$ is an orthogonal set of nonzero vectors in an inner product space, then $S$ is linearly independent.

Proof.

Assume

$$k_1 v_1 + k_2 v_2 + \cdots + k_n v_n = 0.$$

We show that $k_i = 0$ for $i = 1, \ldots, n$. For each $v_i \in S$ one has

$$\langle k_1 v_1 + k_2 v_2 + \cdots + k_n v_n, v_i \rangle = \langle 0, v_i \rangle = 0$$

or, equivalently,

$$k_1 \langle v_1, v_i \rangle + k_2 \langle v_2, v_i \rangle + \cdots + k_n \langle v_n, v_i \rangle = 0,$$

which for an orthogonal set reduces to

$$k_i \langle v_i, v_i \rangle = 0.$$

Since $v_i \ne 0$, we have $\langle v_i, v_i \rangle > 0$, so $k_i = 0$ for $i = 1, 2, \ldots, n$.

Orthogonal Projections

Theorem (Projection Theorem)

Let $W$ be a finite-dimensional subspace of an inner product space $V$. Then every vector $u \in V$ can be expressed in exactly one way as

$$u = w_1 + w_2,$$

where $w_1 \in W$ and $w_2 \in W^\perp$.

We will prove this theorem later. For now:

Definition

The vector $w_1$ in the preceding theorem is called the orthogonal projection of $u$ on $W$ and is denoted by $\operatorname{proj}_W u$. The vector $w_2$ is called the component of $u$ orthogonal to $W$ and is denoted by $\operatorname{proj}_{W^\perp} u$.

Theorem (Orthonormal Basis Theorem)

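Let $W$ be a finite-dimensional subspace of an inner product space $V$.

(a) If $\{v_1, \ldots, v_n\}$ is an orthonormal basis for $W$ and $u \in V$, then

$$\operatorname{proj}_W u = \langle u, v_1 \rangle v_1 + \langle u, v_2 \rangle v_2 + \cdots + \langle u, v_n \rangle v_n.$$

(b) If $\{v_1, \ldots, v_n\}$ is an orthogonal basis for $W$ and $u \in V$, then

$$\operatorname{proj}_W u = \frac{\langle u, v_1 \rangle}{\|v_1\|^2} v_1 + \frac{\langle u, v_2 \rangle}{\|v_2\|^2} v_2 + \cdots + \frac{\langle u, v_n \rangle}{\|v_n\|^2} v_n.$$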

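Proof for part (a):

Denote

$$w_1 = \langle u, v_1 \rangle v_1 + \langle u, v_2 \rangle v_2 + \cdots + \langle u, v_n \rangle v_n$$

and $w_2 = u - w_1$. Then $w_1 \in W$ as a linear combination of its basis vectors. We show $w_2 \in W^\perp$, that is, $w_2 \perp w$ for every $w \in W$. Let

$$w = k_1 v_1 + k_2 v_2 + \cdots + k_n v_n.$$

Then $\langle w_2, w \rangle = \langle u - w_1, w \rangle = \langle u, w \rangle - \langle w_1, w \rangle$. One has

$$\langle u, w \rangle = \langle u, k_1 v_1 + k_2 v_2 + \cdots + k_n v_n \rangle = k_1 \langle u, v_1 \rangle + k_2 \langle u, v_2 \rangle + \cdots + k_n \langle u, v_n \rangle.$$

Relative to the orthonormal basis $\{v_1, \ldots, v_n\}$ of $W$, the coordinates of $w_1$ are $(\langle u, v_1 \rangle, \ldots, \langle u, v_n \rangle)$ and those of $w$ are $(k_1, \ldots, k_n)$, so part (c) of the earlier theorem on coordinates relative to an orthonormal basis (applied in $W$) implies

$$\langle w_1, w \rangle = \langle u, v_1 \rangle k_1 + \langle u, v_2 \rangle k_2 + \cdots + \langle u, v_n \rangle k_n.$$

Hence $\langle w_2, w \rangle = 0$, that is, $w_2 \perp w$. So $w_2 \in W^\perp$.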
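Formula (b) needs only an orthogonal (not necessarily orthonormal) basis. The sketch below assumes the Euclidean inner product, exact `Fraction` arithmetic, and a hypothetical subspace $W = \operatorname{span}\{(0, 1, 0), (-4, 0, 3)\}$; it computes $\operatorname{proj}_W u$ for $u = (1, 1, 1)$ and checks that the remainder $u - \operatorname{proj}_W u$ lies in $W^\perp$, as in the proof.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def proj(u, orthogonal_basis):
    """Orthogonal projection of u onto W = span(orthogonal_basis), using formula (b)."""
    result = [Fraction(0)] * len(u)
    for v in orthogonal_basis:
        c = Fraction(dot(u, v), dot(v, v))  # <u, v> / ||v||^2
        result = [r + c * x for r, x in zip(result, v)]
    return result

W_basis = [(0, 1, 0), (-4, 0, 3)]       # orthogonal, but not orthonormal
u = (1, 1, 1)
w1 = proj(u, W_basis)                   # proj_W u
w2 = [a - b for a, b in zip(u, w1)]     # component of u orthogonal to W
print(w1)                               # [Fraction(4, 25), Fraction(1, 1), Fraction(-3, 25)]
print(all(dot(w2, v) == 0 for v in W_basis))  # True
```

`Fraction(dot(u, v), dot(v, v))` assumes integer entries in the basis vectors, which holds in this example.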

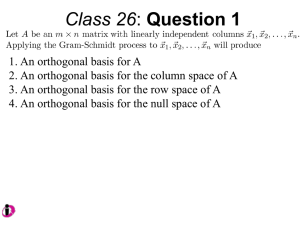

Theorem (Gram-Schmidt Process)

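Every nonzero finite-dimensional inner product space has an orthonormal basis.

Proof.

Given a basis $\{u_1, \ldots, u_n\}$ of $V$, we first construct an orthogonal basis $\{v_1, \ldots, v_n\}$.

Step 1: Set $v_1 = u_1$.

Step 2: Let $W_1 = \operatorname{span}\{v_1\}$. Set

$$v_2 = u_2 - \operatorname{proj}_{W_1} u_2 = u_2 - \frac{\langle u_2, v_1 \rangle}{\|v_1\|^2} v_1.$$

Step 3: Let $W_2 = \operatorname{span}\{v_1, v_2\}$. Set

$$v_3 = u_3 - \operatorname{proj}_{W_2} u_3 = u_3 - \frac{\langle u_3, v_1 \rangle}{\|v_1\|^2} v_1 - \frac{\langle u_3, v_2 \rangle}{\|v_2\|^2} v_2.$$

... and so on ($n$ steps in total). Each $v_k$ is orthogonal to $W_{k-1} = \operatorname{span}\{v_1, \ldots, v_{k-1}\} = \operatorname{span}\{u_1, \ldots, u_{k-1}\}$, and $v_k \ne 0$ because $u_k \notin W_{k-1}$. Hence $\{v_1, \ldots, v_n\}$ is an orthogonal set of $n$ nonzero vectors, so it is linearly independent and therefore a basis of $V$. Finally, replacing each $v_k$ by $v_k / \|v_k\|$ gives an orthonormal basis.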

Example

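Let $u_1 = (1, 1, 1)$, $u_2 = (0, 1, 1)$, $u_3 = (0, 0, 1)$.

Step 1: $v_1 = u_1 = (1, 1, 1)$.

Step 2:

$$v_2 = u_2 - \frac{\langle u_2, v_1 \rangle}{\|v_1\|^2} v_1 = (0, 1, 1) - \frac{2}{3}(1, 1, 1) = \left(-\frac{2}{3}, \frac{1}{3}, \frac{1}{3}\right)$$

Step 3:

$$v_3 = u_3 - \frac{\langle u_3, v_1 \rangle}{\|v_1\|^2} v_1 - \frac{\langle u_3, v_2 \rangle}{\|v_2\|^2} v_2 = (0, 0, 1) - \frac{1}{3}(1, 1, 1) - \frac{1/3}{2/3}\left(-\frac{2}{3}, \frac{1}{3}, \frac{1}{3}\right) = \left(0, -\frac{1}{2}, \frac{1}{2}\right)$$

Normalizing gives the orthonormal basis

$$q_1 = \frac{1}{\sqrt{3}}(1, 1, 1), \quad q_2 = \frac{1}{\sqrt{6}}(-2, 1, 1), \quad q_3 = \frac{1}{\sqrt{2}}(0, -1, 1).$$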
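The steps of the process generalize to a loop: subtract from each $u_k$ its projections onto the previously built $v_j$. The sketch below is the classical variant written with exact `Fraction` arithmetic (the function name `gram_schmidt` is illustrative); with floating-point data, the modified Gram-Schmidt variant is usually preferred for numerical stability. It returns the orthogonal vectors; dividing each by its norm gives the orthonormal basis.

```python
from fractions import Fraction

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(basis):
    """Classical Gram-Schmidt in exact arithmetic: returns orthogonal v_1, ..., v_n with
    v_k = u_k - sum over j < k of <u_k, v_j> / ||v_j||^2 * v_j."""
    orthogonal = []
    for u in basis:
        v = [Fraction(x) for x in u]
        for w in orthogonal:
            c = dot(u, w) / dot(w, w)  # coefficient of the projection onto w
            v = [a - c * b for a, b in zip(v, w)]
        if all(x == 0 for x in v):
            raise ValueError("input vectors are linearly dependent")
        orthogonal.append(v)
    return orthogonal

vs = gram_schmidt([(1, 1, 1), (0, 1, 1), (0, 0, 1)])
for v in vs:
    print([str(x) for x in v])
# ['1', '1', '1']
# ['-2/3', '1/3', '1/3']
# ['0', '-1/2', '1/2']
```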

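Proof of the Projection Theorem:

The existence of an orthonormal basis for $W$ is provided by the Gram-Schmidt Theorem, so the proof of part (a) of the Orthonormal Basis Theorem shows that a decomposition $u = w_1 + w_2$ with $w_1 \in W$ and $w_2 \in W^\perp$ exists. For uniqueness, let

$$u = w_1 + w_2$$

for some $w_1 \in W$ and $w_2 \in W^\perp$, and assume

$$u = w_1' + w_2'$$

for some other $w_1' \in W$ and $w_2' \in W^\perp$. Then

$$0 = (w_1' - w_1) + (w_2' - w_2),$$

or

$$w_1 - w_1' = w_2' - w_2.$$

But $w_1 - w_1' \in W$ and $w_2' - w_2 \in W^\perp$, so this common vector lies in $W \cap W^\perp = \{0\}$. Hence $w_1' = w_1$ and $w_2' = w_2$.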

6.5 Orthogonal Matrices: Change of Basis

Definition

A square matrix $A$ is called an orthogonal matrix if

$$A^{-1} = A^T.$$

For an orthogonal matrix $A$ it holds that $AA^T = A^T A = I$.

Example

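The following matrix is orthogonal:

$$A = \begin{bmatrix} \frac{3}{7} & \frac{2}{7} & \frac{6}{7} \\ -\frac{6}{7} & \frac{3}{7} & \frac{2}{7} \\ \frac{2}{7} & \frac{6}{7} & -\frac{3}{7} \end{bmatrix}, \qquad A^T A = \begin{bmatrix} \frac{3}{7} & -\frac{6}{7} & \frac{2}{7} \\ \frac{2}{7} & \frac{3}{7} & \frac{6}{7} \\ \frac{6}{7} & \frac{2}{7} & -\frac{3}{7} \end{bmatrix} \begin{bmatrix} \frac{3}{7} & \frac{2}{7} & \frac{6}{7} \\ -\frac{6}{7} & \frac{3}{7} & \frac{2}{7} \\ \frac{2}{7} & \frac{6}{7} & -\frac{3}{7} \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}.$$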
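Checking $A^T A = I$ by hand is error-prone, so here is a small exact check. It is a sketch assuming matrices as lists of rows with `Fraction` entries; the helper names are hypothetical. It also computes $\det(A)$, which for this matrix comes out as $-1$, consistent with the theorem below that an orthogonal matrix has determinant $\pm 1$.

```python
from fractions import Fraction as F

def transpose(A):
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_orthogonal(A):
    """True if A^T A = I exactly (entries should be exact numbers such as Fractions)."""
    n = len(A)
    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    return matmul(transpose(A), A) == identity

def det3(A):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = A
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

A = [[F(3, 7), F(2, 7), F(6, 7)],
     [F(-6, 7), F(3, 7), F(2, 7)],
     [F(2, 7), F(6, 7), F(-3, 7)]]
print(is_orthogonal(A))  # True
print(det3(A))           # -1
```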

Theorem

The following statements are equivalent for an $n \times n$ matrix $A$:

(a) $A$ is orthogonal.

(b) The row vectors of $A$ form an orthonormal set in $\mathbb{R}^n$ with the Euclidean inner product.

(c) The column vectors of $A$ form an orthonormal set in $\mathbb{R}^n$ with the Euclidean inner product.

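Proof for (a) $\Leftrightarrow$ (b):

Let $r_1, \ldots, r_n$ be the rows of $A$. Since the $(i, j)$ entry of $AA^T$ is $r_i \cdot r_j$,

$$AA^T = \begin{bmatrix} r_1 \cdot r_1 & r_1 \cdot r_2 & \cdots & r_1 \cdot r_n \\ r_2 \cdot r_1 & r_2 \cdot r_2 & \cdots & r_2 \cdot r_n \\ \vdots & \vdots & \ddots & \vdots \\ r_n \cdot r_1 & r_n \cdot r_2 & \cdots & r_n \cdot r_n \end{bmatrix}.$$

For a square matrix, $A^{-1} = A^T$ is equivalent to $AA^T = I$, that is, to

$$\begin{bmatrix} r_1 \cdot r_1 & \cdots & r_1 \cdot r_n \\ \vdots & \ddots & \vdots \\ r_n \cdot r_1 & \cdots & r_n \cdot r_n \end{bmatrix} = \begin{bmatrix} 1 & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & 1 \end{bmatrix}.$$

Hence $A$ is orthogonal if and only if $r_i \cdot r_j = 0$ for $i \ne j$ and $r_i \cdot r_i = 1$ for $i = 1, 2, \ldots, n$.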

Theorem

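(a) The inverse of an orthogonal matrix is orthogonal.

(b) A product of orthogonal matrices is orthogonal.

(c) If $A$ is orthogonal, then $\det(A) = 1$ or $\det(A) = -1$.

Proof for part (b):

If $A$ and $B$ are orthogonal, then

$$A^{-1} = A^T \quad\text{and}\quad B^{-1} = B^T.$$

One has

$$(AB)^{-1} = B^{-1} A^{-1} = B^T A^T = (AB)^T.$$

The other parts are straightforward: for (a), $(A^{-1})^{-1} = A = (A^T)^T = (A^{-1})^T$; for (c), $1 = \det(I) = \det(A^T A) = \det(A^T)\det(A) = \det(A)^2$.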

Theorem

If $A$ is an $n \times n$ matrix, then the following are equivalent:

(a) $A$ is orthogonal.

(b) $\|Ax\| = \|x\|$ for all $x \in \mathbb{R}^n$.

(c) $Ax \cdot Ay = x \cdot y$ for all $x, y \in \mathbb{R}^n$.

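Proof.

(a) $\Rightarrow$ (b): If $A$ is orthogonal, then $A^T A = I$, so

$$\|Ax\| = (Ax \cdot Ax)^{1/2} = (x \cdot A^T A x)^{1/2} = (x \cdot x)^{1/2} = \|x\|.$$

(b) $\Rightarrow$ (c): Assume $\|Ax\| = \|x\|$ for all $x$. Using the identity $a \cdot b = \frac{1}{4}\|a + b\|^2 - \frac{1}{4}\|a - b\|^2$ (in the first and last steps),

$$\begin{aligned} Ax \cdot Ay &= \frac{1}{4}\|Ax + Ay\|^2 - \frac{1}{4}\|Ax - Ay\|^2 \\ &= \frac{1}{4}\|A(x + y)\|^2 - \frac{1}{4}\|A(x - y)\|^2 \\ &= \frac{1}{4}\|x + y\|^2 - \frac{1}{4}\|x - y\|^2 = x \cdot y. \end{aligned}$$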

(c) $\Rightarrow$ (a): Assume $Ax \cdot Ay = x \cdot y$ for all $x, y$. Since $Ax \cdot Ay = x \cdot A^T A y$, this gives

$$x \cdot y = x \cdot A^T A y,$$

which is equivalent to

$$x \cdot (A^T A y - y) = 0$$

or

$$x \cdot (A^T A - I) y = 0.$$

In particular, for $x = (A^T A - I) y$ one gets

$$(A^T A - I) y \cdot (A^T A - I) y = 0,$$

which implies

$$(A^T A - I) y = 0.$$

Since this homogeneous system is satisfied for all $y \in \mathbb{R}^n$, we conclude $A^T A - I = 0$, or $A^T A = I$, so $A$ is orthogonal.
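A numerical illustration of (a) $\Leftrightarrow$ (b) $\Leftrightarrow$ (c), assuming floating-point arithmetic (hence `math.isclose`). The rotation matrix $R$ (an orthogonal matrix not taken from the text) and the stretch matrix $S$ (not orthogonal) are illustrative choices, as are the vectors $x$ and $y$.

```python
import math

def mat_vec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

theta = 0.7  # arbitrary angle for the example
R = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]  # rotation matrix: orthogonal
S = [[2, 0], [0, 1]]                       # stretch: not orthogonal

x, y = [3.0, -1.0], [0.5, 2.0]
for name, M in (("rotation", R), ("stretch", S)):
    Mx, My = mat_vec(M, x), mat_vec(M, y)
    same_norm = math.isclose(math.sqrt(dot(Mx, Mx)), math.sqrt(dot(x, x)))
    same_dot = math.isclose(dot(Mx, My), dot(x, y))
    print(name, same_norm, same_dot)
# rotation True True
# stretch False False
```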

Change of Orthonormal Basis

Recall that if $P$ is the transition matrix from a basis $B'$ to a basis $B$, then for every vector $v$:

$$[v]_B = P[v]_{B'} \quad\text{and}\quad [v]_{B'} = P^{-1}[v]_B.$$

Theorem

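If $P$ is the transition matrix from an orthonormal basis to another orthonormal basis of an $n$-dimensional inner product space $V$, then $P$ is orthogonal.

Proof.

By part (a) of the theorem on coordinates relative to an orthonormal basis, for all $u \in V$:

$$\|u\| = \|[u]_{B'}\| = \|[u]_B\| = \|P[u]_{B'}\|,$$

where the norms of coordinate vectors are Euclidean norms in $\mathbb{R}^n$. Now let $x \in \mathbb{R}^n$ and let $u \in V$ be such that $x = [u]_{B'}$. Then

$$\|u\| = \|x\| = \|Px\|.$$

Hence, by the preceding theorem ((b) $\Rightarrow$ (a)), $P$ is orthogonal.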
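When $B$ is orthonormal, column $j$ of the transition matrix from $B'$ to $B$ is $[b'_j]_B$, whose $i$-th entry is $\langle b'_j, b_i \rangle$. The sketch below builds $P$ this way for two orthonormal bases of $\mathbb{R}^3$ (assumed here for illustration: $B$ is the basis from the coordinate example in 6.3 and $B'$ consists of the columns of the orthogonal matrix in 6.5), then checks exactly that $P$ is orthogonal and that $[v]_B = P[v]_{B'}$ for a sample vector.

```python
from fractions import Fraction as F

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def transition_matrix(B_prime, B):
    """Transition matrix from B' to an orthonormal basis B:
    entry (i, j) is <b'_j, b_i>, the i-th coordinate of b'_j relative to B."""
    return [[dot(bp, b) for bp in B_prime] for b in B]

def is_orthogonal(P):
    """Exact check that P^T P = I."""
    n = len(P)
    PtP = [[sum(P[k][i] * P[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    return all(PtP[i][j] == (1 if i == j else 0) for i in range(n) for j in range(n))

B = [(0, 1, 0), (F(-4, 5), 0, F(3, 5)), (F(3, 5), 0, F(4, 5))]
B_prime = [(F(3, 7), F(-6, 7), F(2, 7)), (F(2, 7), F(3, 7), F(6, 7)), (F(6, 7), F(2, 7), F(-3, 7))]
P = transition_matrix(B_prime, B)
print(is_orthogonal(P))  # True

v = (1, 2, 3)
v_B = [dot(v, b) for b in B]          # [v]_B
v_Bp = [dot(v, bp) for bp in B_prime]  # [v]_B'
print(v_B == [dot(row, v_Bp) for row in P])  # True: [v]_B = P [v]_B'
```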