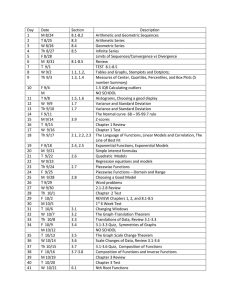

Math 2B - Recitation Notes 5


Maria M. Nastasescu

Exam instructions: Please use a standard bluebook and put your name and section on the outside. You may use the textbook (Pitman), handouts, solution sets, your notes and homework, and TA notes (someone else's notes hand-copied by you are OK). Calculators and computers are allowed, but only to do elementary numerical calculations (like a finite sum) and to use elementary built-in functions like logs and normal distribution functions (as an alternative to using tables), not to do programming, simulations, numerical integration, calculus, or symbolic computations, or to use functions that calculate binomial or Poisson probabilities (built-in discrete probability density functions). Please indicate clearly any work done in overtime. Points will be recorded separately and considered informally in course grades.

1 Basic Formulas in Probability Theory

• $P(A^c) = 1 - P(A)$

• If $A$ and $B$ are independent, $P(AB) = P(A)P(B)$

• If $A$ and $B$ are disjoint, $P(A \cup B) = P(A) + P(B)$

• Inclusion-Exclusion: $P(A \cup B) = P(A) + P(B) - P(AB)$
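As a quick sanity check, these identities can be verified exactly on a small sample space. The sketch below assumes one roll of a fair six-sided die and uses exact fractions; the events `A`, `B`, `C`, `D` are illustrative choices, not from the notes (`A` and `B` happen to be independent, `C` and `D` are disjoint).

```python
from fractions import Fraction

# Sample space: one roll of a fair six-sided die (an illustrative assumption).
OMEGA = frozenset(range(1, 7))

def prob(event):
    """P(event) when all outcomes in OMEGA are equally likely."""
    return Fraction(len(event & OMEGA), len(OMEGA))

A = frozenset({2, 4, 6})  # the roll is even
B = frozenset({1, 2})     # the roll is at most 2 (independent of A)
C = frozenset({1})        # C and D are disjoint
D = frozenset({6})

complement_ok = prob(OMEGA - A) == 1 - prob(A)
independent = prob(A & B) == prob(A) * prob(B)
disjoint_ok = prob(C | D) == prob(C) + prob(D)
inclusion_exclusion_ok = prob(A | B) == prob(A) + prob(B) - prob(A & B)

print(complement_ok, independent, disjoint_ok, inclusion_exclusion_ok)  # True True True True
```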

2 Conditional Probability

• Definition: The conditional probability of $A$ given $B$ (where $P(B) > 0$) is
$$P(A|B) = \frac{P(AB)}{P(B)}.$$

• Average and Bayes' Rules, special case: for any events $A$ and $B$,
$$P(A) = P(A|B)P(B) + P(A|B^c)P(B^c) \tag{2.1}$$
$$P(B|A) = \frac{P(A|B)P(B)}{P(A|B)P(B) + P(A|B^c)P(B^c)} \tag{2.2}$$

• Average and Bayes' Rules, general case: Suppose $B_1, B_2, \dots, B_n$ partition the sample space. Then
$$P(A) = P(A|B_1)P(B_1) + \cdots + P(A|B_n)P(B_n) \tag{2.3}$$
$$P(B_i|A) = \frac{P(A|B_i)P(B_i)}{P(A|B_1)P(B_1) + \cdots + P(A|B_n)P(B_n)} \tag{2.4}$$

Example. Suppose people can be classified in terms of the risk they have of getting involved in an accident: small risk (5% chance of an accident in any given year), average risk (15%) and high risk (30%). Suppose 20% of the population has a small risk of accidents, 50% has an average risk and 30% has a high risk. What proportion of the population has accidents in a fixed year? If a given person has no accidents in a year, what is the probability that he or she is small risk?

Solution. Let $S$ be the event that a person has small risk, $A$ that the person has average risk and $H$ that the person has high risk. Let $X$ be the event that a person has an accident in a given year. We know $P(X|S) = 0.05$, $P(X|A) = 0.15$ and $P(X|H) = 0.30$, and that $P(S) = 0.20$, $P(A) = 0.50$ and $P(H) = 0.30$. Since $S$, $A$, $H$ partition the population, (2.3) gives
$$P(X) = P(X|S)P(S) + P(X|A)P(A) + P(X|H)P(H) = 0.05 \cdot 0.20 + 0.15 \cdot 0.50 + 0.30 \cdot 0.30 = 0.175 \tag{2.5}$$
and (2.4) applied to the event $X^c$ gives
$$P(S|X^c) = \frac{P(X^c|S)P(S)}{P(X^c|S)P(S) + P(X^c|A)P(A) + P(X^c|H)P(H)} \tag{2.6}$$
$$= \frac{(1 - 0.05) \cdot 0.20}{(1 - 0.05) \cdot 0.20 + (1 - 0.15) \cdot 0.50 + (1 - 0.30) \cdot 0.30} = 0.2303 \tag{2.7}$$
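The two computations in (2.5)-(2.7) can be reproduced with a small helper for the average and Bayes rules. The function names `total_probability` and `bayes` are hypothetical; the numbers are those of the example.

```python
def total_probability(likelihoods, priors):
    """Average rule (2.3): P(A) = sum over i of P(A|B_i) P(B_i)."""
    return sum(l * p for l, p in zip(likelihoods, priors))

def bayes(likelihoods, priors, i):
    """Bayes' rule (2.4): P(B_i | A) for the i-th block of the partition."""
    return likelihoods[i] * priors[i] / total_probability(likelihoods, priors)

# Risk classes from the example, in the order small (S), average (A), high (H).
priors = [0.20, 0.50, 0.30]
p_accident = [0.05, 0.15, 0.30]               # P(X | class)
p_no_accident = [1 - q for q in p_accident]   # P(X^c | class)

p_x = total_probability(p_accident, priors)
p_small_given_none = bayes(p_no_accident, priors, 0)
print(round(p_x, 4), round(p_small_given_none, 4))  # 0.175 0.2303
```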

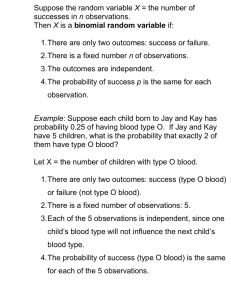

Binomial Distribution

• If $X_i$ is a random variable which is 1 with probability $p$ and 0 otherwise, and if $X_1, \dots, X_n$ are independent, then $X = \sum_{i=1}^n X_i$ is a random variable with the binomial $(n, p)$ distribution
$$P(X = k) = \binom{n}{k} p^k (1-p)^{n-k}, \quad k = 0, 1, \dots, n. \tag{3.1}$$

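• If $n$ is large and $p$ is not too close to 0 or 1 (for example, $p$ close to 0.5), then we can approximate the binomial distribution with the normal distribution. Let $\mu = np$ and $\sigma = \sqrt{np(1-p)}$. If $X$ is a random variable with the binomial $(n, p)$ distribution and $a \le b$ are integers, then
$$P(a \le X \le b) \approx \Phi\left(\frac{b + 1/2 - \mu}{\sigma}\right) - \Phi\left(\frac{a - 1/2 - \mu}{\sigma}\right). \tag{3.2}$$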

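Remark: Without the $\pm 1/2$ terms (the continuity correction), the formula above is just the central limit theorem applied to $X$. The $\pm 1/2$ accounts for approximating the integer-valued $X$ by a continuous variable and makes the approximation more accurate.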
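To see how much the $\pm 1/2$ helps, the sketch below compares the exact binomial probability from (3.1) with (3.2), with and without the correction. The values $n = 100$, $p = 0.5$, $a = 45$, $b = 55$ are illustrative assumptions, and $\Phi$ is computed from `math.erf`.

```python
import math

def phi(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def binom_prob_range(n, p, a, b):
    """Exact P(a <= X <= b) for X ~ binomial(n, p), summing formula (3.1)."""
    return sum(math.comb(n, k) * p**k * (1 - p)**(n - k) for k in range(a, b + 1))

def normal_approx(n, p, a, b, correction=True):
    """Normal approximation (3.2); correction=False drops the +-1/2 terms."""
    mu, sigma = n * p, math.sqrt(n * p * (1 - p))
    h = 0.5 if correction else 0.0
    return phi((b + h - mu) / sigma) - phi((a - h - mu) / sigma)

n, p, a, b = 100, 0.5, 45, 55  # illustrative values, not from the notes
exact = binom_prob_range(n, p, a, b)
with_cc = normal_approx(n, p, a, b)
without_cc = normal_approx(n, p, a, b, correction=False)
print(f"exact={exact:.4f}  with 1/2={with_cc:.4f}  without={without_cc:.4f}")
```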

• If $n$ is large and $p$ is small, then
$$P(X = k) \approx e^{-\mu} \frac{\mu^k}{k!} \tag{3.3}$$
where $\mu = np$ (the Poisson approximation).

Example. In a sample of 80,000 married couples, what is the probability that in at least one couple both partners were born on December 1st? Assuming birthdays are independent and equally likely to fall on any of 365 days, the probability that both partners of a given couple were born on December 1st is $\frac{1}{365} \cdot \frac{1}{365} = 7.51 \cdot 10^{-6}$, which is very small. The mean is then $\mu = 80{,}000 \cdot 7.51 \cdot 10^{-6} \approx 0.6$. Let $X$ be the number of couples in which both partners were born on December 1st. Then, by (3.3),
$$P(X \ge 1) = 1 - P(X = 0) = 1 - e^{-0.6} \frac{(0.6)^0}{0!} = 0.4512. \tag{3.4}$$
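The arithmetic of the example can be checked directly, together with the exact binomial value $1 - (1 - p)^n$ for comparison. Using the unrounded mean $\mu \approx 0.6005$ gives a value slightly above 0.4512, which is what one gets after rounding $\mu$ to 0.6.

```python
import math

n = 80_000
p = (1 / 365) * (1 / 365)  # both partners born on December 1st (independent, uniform birthdays assumed)
mu = n * p                 # Poisson mean, about 0.6

poisson = 1 - math.exp(-mu)   # (3.4): 1 - P(X = 0) under the Poisson approximation
exact = 1 - (1 - p) ** n      # exact binomial P(X >= 1), for comparison
print(f"p={p:.3g}  mu={mu:.4f}  poisson={poisson:.4f}  exact={exact:.4f}")
```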

4 Continuous Distributions

• Given a probability density function $f$ of a random variable $X$, we have
$$P(a < X < b) = \int_a^b f(x)\,dx \tag{4.1}$$

• The cumulative distribution function is
$$F(t) = P(X < t) = \int_{-\infty}^t f(x)\,dx \tag{4.2}$$
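Formulas (4.1) and (4.2) can be compared numerically. The sketch assumes an exponential density with rate 2 (an illustrative choice), approximates the integral in (4.1) with a simple midpoint rule, and checks it against $F(b) - F(a)$ from the closed-form CDF.

```python
import math

LAM = 2.0  # illustrative rate for an exponential density (an assumption)

def f(x):
    """Exponential(LAM) density: LAM * e^(-LAM x) for x > 0, else 0."""
    return LAM * math.exp(-LAM * x) if x > 0 else 0.0

def F(t):
    """Closed-form CDF (4.2) of the exponential: 1 - e^(-LAM t) for t > 0."""
    return 1 - math.exp(-LAM * t) if t > 0 else 0.0

def integrate(g, lo, hi, steps=100_000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(g(lo + (i + 0.5) * h) for i in range(steps))

a, b = 0.5, 1.5
print(integrate(f, a, b), F(b) - F(a))  # (4.1) computed two ways
```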

5 Expectation

• $E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$, where $f$ is the density function

• $E(X^i) = \int_{-\infty}^{\infty} x^i f(x)\,dx$

• $E\left(\sum_i X_i\right) = \sum_i E(X_i)$ (this holds even if the $X_i$ are not independent)

• $E(XY) = E(X)E(Y)$ if $X$ and $Y$ are independent

• $\mathrm{Var}(X) = E(X^2) - (E(X))^2$
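The rules above can be checked exactly on a simple discrete example. The sketch assumes one roll $X$ of a fair die and takes $Y = X^2$, which is clearly not independent of $X$: linearity of expectation still holds, while the product rule $E(XY) = E(X)E(Y)$ fails.

```python
from fractions import Fraction

# Illustrative discrete example (an assumption): X is one roll of a fair die.
outcomes = range(1, 7)
w = Fraction(1, 6)

def E(g):
    """Expectation of g(X) when X is uniform on 1..6."""
    return sum(w * g(k) for k in outcomes)

EX = E(lambda k: k)        # E(X) = 7/2
EX2 = E(lambda k: k * k)   # E(X^2) = 91/6
var = EX2 - EX**2          # Var(X) = E(X^2) - (E(X))^2

# Y = X^2 depends on X, yet E(X + Y) = E(X) + E(Y) still holds.
linear_ok = E(lambda k: k + k * k) == EX + EX2
# Without independence, E(XY) = E(X)E(Y) can fail.
product_rule_fails = E(lambda k: k * (k * k)) != EX * EX2
print(var, linear_ok, product_rule_fails)  # 35/12 True True
```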

6 Central Limit Theorem

The Central Limit Theorem says that if you have $n$ independent, identically distributed random variables with finite variance, then as $n \to \infty$ the standardized sum (or average) of the $n$ variables converges in distribution to the standard normal distribution.

Let $S_n = X_1 + \cdots + X_n$ be the sum of $n$ independent random variables with the same distribution, and let $\mu = E(X_i)$ and $\sigma = SD(X_i)$. Then $E(S_n) = n\mu$ and $SD(S_n) = \sigma\sqrt{n}$, and for large $n$, for all $a \le b$,
$$P\left(a \le \frac{S_n - n\mu}{\sigma\sqrt{n}} \le b\right) \approx \Phi(b) - \Phi(a) \tag{6.1}$$
where $\Phi$ is the standard normal cumulative distribution function. The error of this approximation goes to 0 as $n \to \infty$.
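A quick simulation illustrates (6.1). It assumes uniform(0, 1) summands (mean 1/2, SD $\sqrt{1/12}$), $n = 30$ and $[a, b] = [-1, 1]$; these are illustrative choices, and the simulated frequency should be close to, but not exactly equal to, $\Phi(1) - \Phi(-1)$.

```python
import math
import random

def phi(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def clt_check(n=30, trials=20_000, a=-1.0, b=1.0, seed=0):
    """Simulated P(a <= (S_n - n mu)/(sigma sqrt n) <= b) for uniform(0, 1) summands."""
    rng = random.Random(seed)
    mu, sigma = 0.5, math.sqrt(1 / 12)  # mean and SD of uniform(0, 1)
    hits = 0
    for _ in range(trials):
        s = sum(rng.random() for _ in range(n))      # one draw of S_n
        z = (s - n * mu) / (sigma * math.sqrt(n))    # standardized sum
        hits += a <= z <= b
    return hits / trials, phi(b) - phi(a)

print(clt_check())  # (simulated frequency, normal approximation)
```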

7 Change of Variables

Let $X$ be a random variable with density $f_X(x)$ on $(a, b)$. Let $Y = g(X)$, where $g$ is differentiable and either strictly increasing or strictly decreasing on $(a, b)$. The density of $Y$ on the interval with endpoints $g(a)$ and $g(b)$ is then
$$f_Y(y) = f_X(x) \frac{1}{|dy/dx|} \tag{7.1}$$
where $y = g(x)$. Here we solve $y = g(x)$ for $x$ in terms of $y$ and then substitute this value of $x$ into $f_X(x)$ and $dy/dx$.

From the proof of the above theorem (see p. 304) we can write the above expression in another form:
$$f_Y(y) = f_X(x(y)) \left|\frac{dx}{dy}\right|. \tag{7.2}$$

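Another way to do it, when $g$ is strictly increasing, is to differentiate the cumulative distribution function:
$$f_Y(t) = \frac{dF_Y(t)}{dt} = \frac{dP(g(X) < t)}{dt} = \frac{dP(X < g^{-1}(t))}{dt} = \frac{dF_X(g^{-1}(t))}{dt} = f_X(g^{-1}(t)) \frac{dg^{-1}(t)}{dt} \tag{7.3}$$
If $g$ is strictly decreasing, then $P(g(X) < t) = P(X > g^{-1}(t)) = 1 - F_X(g^{-1}(t))$, and the same computation gives $-f_X(g^{-1}(t))\,dg^{-1}(t)/dt$, which agrees with (7.2) because $dg^{-1}(t)/dt < 0$.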
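Formula (7.2) can be checked on a case where the answer is known: if $X$ is uniform on $(0, 1)$ and $Y = -\ln(X)/\lambda$, then $Y$ should be exponential with parameter $\lambda$. Here $g$ is strictly decreasing, so the absolute value in (7.2) matters. The helper `transformed_density` is a hypothetical name, $\lambda = 1.5$ is illustrative, and $dx/dy$ is approximated by a central difference rather than computed by hand.

```python
import math

def transformed_density(f_x, g_inv, y, h=1e-6):
    """Formula (7.2): f_Y(y) = f_X(x(y)) |dx/dy|, with dx/dy by central difference.

    Assumes g is strictly monotone and g_inv gives x as a function of y.
    """
    dx_dy = (g_inv(y + h) - g_inv(y - h)) / (2 * h)
    return f_x(g_inv(y)) * abs(dx_dy)

LAM = 1.5  # illustrative rate (an assumption)

def f_uniform(x):
    """Density of X ~ uniform(0, 1)."""
    return 1.0 if 0 < x < 1 else 0.0

def g_inv(y):
    """Inverse of the decreasing map g(x) = -ln(x) / LAM on (0, 1)."""
    return math.exp(-LAM * y)

for y in (0.2, 1.0, 3.0):
    # Formula (7.2) next to the exponential(LAM) density it should reproduce.
    print(y, transformed_density(f_uniform, g_inv, y), LAM * math.exp(-LAM * y))
```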

8 Independent Normal Variables

If $X$ and $Y$ are independent with normal $(\lambda, \sigma^2)$ and normal $(\mu, \tau^2)$ distributions, then $X + Y$ has the normal $(\lambda + \mu, \sigma^2 + \tau^2)$ distribution.

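Example. Let $X$ and $Y$ be independent random variables with normal $(0, 9)$ and normal $(3, 16)$ distributions respectively. What is $P(X < Y)$? Since $-Y$ has the normal $(-3, 16)$ distribution and is independent of $X$, the fact above shows that $X - Y$ has the normal $(-3, 25)$ distribution. Thus we have
$$P(X < Y) = P(X - Y < 0) = P\left(\frac{X - Y + 3}{\sqrt{25}} < \frac{0 + 3}{\sqrt{25}}\right) = \Phi(0.6) = 0.7257 \tag{8.1}$$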
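The last step of (8.1) can be computed without tables using the error function, since $\Phi(z) = \frac{1}{2}\left(1 + \operatorname{erf}(z/\sqrt{2})\right)$.

```python
import math

def phi(z):
    """Standard normal CDF, via math.erf instead of a table."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

mean_diff = 0 - 3   # E(X - Y)
var_diff = 9 + 16   # Var(X - Y) = Var(X) + Var(Y) for independent X, Y
z = (0 - mean_diff) / math.sqrt(var_diff)
print(z, round(phi(z), 4))  # 0.6 0.7257
```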

9 Continuous Joint Distributions

If $f$ is the joint density of $X$ and $Y$, then
$$P((X, Y) \in B) = \iint_B f(x, y)\,dx\,dy. \tag{9.1}$$
The marginal density function of $X$ is
$$f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy. \tag{9.2}$$

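Example. Let $X_1$ and $X_2$ be independent exponential random variables with parameters $\lambda_1$ and $\lambda_2$ respectively. Find the distribution of $Z = X_1/X_2$. Also compute $P(X_1 < X_2)$.

First note that $f_{X_1X_2}(x_1, x_2) = \lambda_1 \lambda_2 e^{-\lambda_1 x_1} e^{-\lambda_2 x_2}$ for $x_1, x_2 > 0$. We start with the c.d.f. of $Z = X_1/X_2$ and then take the derivative to get $f_Z$. For $a > 0$ we have
$$F_Z(a) = P\left(\frac{X_1}{X_2} \le a\right) = P(X_1 \le a X_2) = \int_0^\infty \int_0^{a x_2} \lambda_1 \lambda_2 e^{-\lambda_1 x_1} e^{-\lambda_2 x_2}\,dx_1\,dx_2 \tag{9.3}$$
$$= \int_0^\infty \lambda_1 \lambda_2 e^{-\lambda_2 x_2} \left[-\frac{1}{\lambda_1} e^{-\lambda_1 x_1}\right]_0^{a x_2} dx_2 = \lambda_2 \int_0^\infty e^{-\lambda_2 x_2}\left(1 - e^{-\lambda_1 a x_2}\right) dx_2 \tag{9.4}$$
$$= \lambda_2 \int_0^\infty \left(e^{-\lambda_2 x_2} - e^{-x_2(\lambda_2 + \lambda_1 a)}\right) dx_2 = \lambda_2 \left[\frac{e^{-x_2(\lambda_2 + \lambda_1 a)}}{\lambda_2 + \lambda_1 a} - \frac{e^{-\lambda_2 x_2}}{\lambda_2}\right]_0^\infty \tag{9.5}$$
$$= -\lambda_2 \left(\frac{1}{\lambda_2 + \lambda_1 a} - \frac{1}{\lambda_2}\right) = 1 - \frac{\lambda_2}{\lambda_2 + \lambda_1 a} = \frac{\lambda_1 a}{\lambda_2 + \lambda_1 a} \tag{9.6}$$
Then
$$f_Z(a) = F_Z'(a) = \frac{\lambda_1 \lambda_2}{(\lambda_1 a + \lambda_2)^2}, \quad a > 0. \tag{9.7}$$
Finally,
$$P(X_1 < X_2) = P\left(\frac{X_1}{X_2} < 1\right) = F_Z(1) = \frac{\lambda_1}{\lambda_2 + \lambda_1}. \tag{9.8}$$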
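A Monte Carlo check of (9.6) and (9.8): simulate independent exponentials and compare the observed frequencies with the formulas. The rates $\lambda_1 = 1$, $\lambda_2 = 2$ and the point $a = 0.7$ are illustrative assumptions; Python's `random.expovariate` takes the rate $\lambda$ (not the mean) as its argument.

```python
import random

def check_ratio(lam1=1.0, lam2=2.0, a=0.7, trials=200_000, seed=1):
    """Simulated vs. exact values of F_Z(a) = P(X1/X2 <= a) and P(X1 < X2)."""
    rng = random.Random(seed)
    below_a = less = 0
    for _ in range(trials):
        x1 = rng.expovariate(lam1)  # exponential with rate lam1
        x2 = rng.expovariate(lam2)  # exponential with rate lam2
        below_a += x1 <= a * x2     # equivalent to X1/X2 <= a, since X2 > 0 almost surely
        less += x1 < x2
    simulated = (below_a / trials, less / trials)
    exact = (lam1 * a / (lam2 + lam1 * a), lam1 / (lam2 + lam1))  # (9.6) and (9.8)
    return simulated, exact

print(check_ratio())
```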

Example. Suppose that the random variables $X_1, \dots, X_k$ are independent and $X_i$ has an exponential distribution with parameter $\lambda_i$. Let $Y = \min\{X_1, \dots, X_k\}$. Show that $Y$ has an exponential distribution with parameter $\lambda_1 + \cdots + \lambda_k$.

If $X$ has an exponential distribution with parameter $\lambda$, then for every $t \ge 0$,
$$1 - F_X(t) = P(X \ge t) = \int_t^\infty \lambda e^{-\lambda x}\,dx = e^{-\lambda t}. \tag{9.9}$$
Because $Y = \min\{X_1, \dots, X_k\}$ and the $X_i$ are independent, for every $t > 0$
$$P(Y > t) = P(X_1 > t, \dots, X_k > t) = P(X_1 > t)P(X_2 > t) \cdots P(X_k > t) = e^{-\lambda_1 t} \cdots e^{-\lambda_k t} = e^{-t(\lambda_1 + \cdots + \lambda_k)}. \tag{9.10}$$
Therefore $Y$ has an exponential distribution with parameter $\lambda = \lambda_1 + \cdots + \lambda_k$.
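A simulation is consistent with this result: the sample mean of $Y$ should be near $1/(\lambda_1 + \cdots + \lambda_k)$ and the tail frequency near (9.10). The rates below and the helper name `simulate_min` are illustrative assumptions.

```python
import math
import random

def simulate_min(rates, trials=100_000, seed=2):
    """Samples of Y = min(X_1, ..., X_k), X_i independent exponential(rates[i])."""
    rng = random.Random(seed)
    return [min(rng.expovariate(r) for r in rates) for _ in range(trials)]

rates = [0.5, 1.0, 2.5]  # illustrative rates (an assumption)
total = sum(rates)       # the claimed parameter of Y
ys = simulate_min(rates)

mean_y = sum(ys) / len(ys)                 # should be near 1 / total
tail = sum(y > 0.3 for y in ys) / len(ys)  # should be near e^(-0.3 * total), by (9.10)
print(mean_y, 1 / total)
print(tail, math.exp(-0.3 * total))
```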

10 Density Convolution Formula

If $(X, Y)$ has density $f(x, y)$ in the plane, then $X + Y$ has density on the line
$$f_{X+Y}(z) = \int_{-\infty}^{\infty} f(x, z - x)\,dx. \tag{10.1}$$
If $X$ and $Y$ are independent, then
$$f_{X+Y}(z) = \int_{-\infty}^{\infty} f_X(x) f_Y(z - x)\,dx. \tag{10.2}$$
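Formula (10.2) can be evaluated numerically and compared with a hand computation. The sketch assumes $X$ exponential with rate 1 and $Y$ exponential with rate 2 (illustrative choices); for these, (10.2) gives $f_{X+Y}(z) = \int_0^z e^{-x} \cdot 2e^{-2(z-x)}\,dx = 2(e^{-z} - e^{-2z})$ for $z > 0$. The helper `convolve_at` is a hypothetical name using a midpoint rule.

```python
import math

def convolve_at(f_x, f_y, z, lo, hi, steps=20_000):
    """Midpoint-rule value of (10.2) at z: integral of f_X(x) f_Y(z - x) over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h
        total += f_x(x) * f_y(z - x)
    return total * h

def exp_density(rate):
    """Exponential(rate) density as a function of x."""
    return lambda x: rate * math.exp(-rate * x) if x > 0 else 0.0

f_x, f_y = exp_density(1.0), exp_density(2.0)  # illustrative rates (an assumption)

# For z > 0 the integrand vanishes outside 0 < x < z, so we integrate over [0, z].
for z in (0.5, 1.0, 2.0):
    print(z, convolve_at(f_x, f_y, z, 0.0, z), 2 * (math.exp(-z) - math.exp(-2 * z)))
```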

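Example. [Pitman, 5.4.7] Let $X$ and $Y$ have joint density $f(x, y)$. Find formulae for the densities of each of the following random variables: a) $XY$; b) $X - Y$; c) $X + 2Y$.

For the first part, let $Z = XY$. We apply the method used to derive the distribution of ratios on p. 382, so that
$$P(z < Z < z + dz) = \int_x P(x < X < x + dx,\ z < XY < z + dz). \tag{10.3}$$
Instead of the cone on p. 382, we now have the region between the curves $xy = z$ and $xy = z + dz$. For fixed $x$, the thin piece of this region above $(x, x + dx)$ is approximately a parallelogram with area
$$dx \left|\frac{z + dz}{x} - \frac{z}{x}\right| = \frac{dx\,dz}{|x|}. \tag{10.4}$$
We get the density of $Z$ by integrating over $x$. Thus,
$$f_Z(z) = \int_{-\infty}^{\infty} f\left(x, \frac{z}{x}\right) \frac{1}{|x|}\,dx. \tag{10.5}$$
For the second part, write $X - Y = X + (-Y)$. The pair $(X, -Y)$ has density $f(x, -y)$, so by (10.1), $f_{X-Y}(t) = \int_{-\infty}^{\infty} f(x, x - t)\,dx$. In particular, if $X$ and $Y$ are independent, the linear change of variable formula gives $f_{-Y}(t) = f_Y(-t)$, and
$$f_{X-Y}(t) = \int_{-\infty}^{\infty} f_X(x) f_{-Y}(t - x)\,dx = \int_{-\infty}^{\infty} f_X(x) f_Y(x - t)\,dx. \tag{10.6}$$
Finally, for the third part, the pair $(X, 2Y)$ has density $\frac{1}{2} f\left(x, \frac{w}{2}\right)$ (in the independent case this is the statement $f_{2Y}(t) = \frac{1}{2} f_Y\left(\frac{t}{2}\right)$). Applying (10.1), we conclude that
$$f_{X+2Y}(t) = \int_{-\infty}^{\infty} f\left(x, \frac{t - x}{2}\right) \cdot \frac{1}{2}\,dx. \tag{10.7}$$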
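Formula (10.5) can be sanity-checked when $X$ and $Y$ are independent and uniform on $(0, 1)$: then $f(x, z/x) = 1$ exactly when $z < x < 1$, so (10.5) gives $f_Z(z) = \int_z^1 dx/x = -\ln z$ for $0 < z < 1$. The sketch below evaluates (10.5) numerically with a midpoint rule; the uniform example and the helper name `product_density` are illustrative assumptions.

```python
import math

def product_density(f, z, lo, hi, steps=100_000):
    """Formula (10.5) for Z = XY: integral of f(x, z/x) / |x| over [lo, hi] (midpoint rule)."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h  # midpoints never hit x = 0
        total += f(x, z / x) / abs(x)
    return total * h

def f_uniform_square(x, y):
    """Joint density of independent uniform(0, 1) variables X and Y."""
    return 1.0 if 0 < x < 1 and 0 < y < 1 else 0.0

for z in (0.1, 0.5, 0.9):
    print(z, product_density(f_uniform_square, z, 0.0, 1.0), -math.log(z))
```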
