Data Structures and Algorithms

advertisement

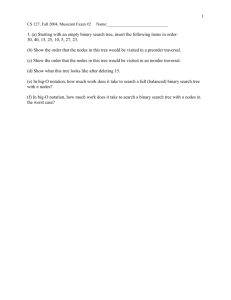

Beginning Algorithms (0-7645-9674-8) Classic Data Structures in Java (0-201-70002-6) Data Structures and Algorithms (0-201-00023-7) Introduction to Algorithms, 2Ed (0-262-03293-7) Data Structures and Algorithms in Java (1-5716-9095-6) Algorithms in Java: Parts 1-4, 3Ed (0-201-36120-5) 1 Table of Contents Data Structures, Growth of Functions ............................................................. 3 Basic Sorting ......................................................................................................... 4 Advanced Sorting ................................................................................................. 5 Linked Lists ........................................................................................................... 6 Stacks, Queues, Deques ...................................................................................... 7 Binary Search and Insertion ............................................................................... 8 Binary Search Trees ............................................................................................. 9 AVL ........................................................................................................... 10 Splay ........................................................................................................... 10 2-3............................................................................................................... 11 B-Tree ........................................................................................................ 11 Red-Black .................................................................................................. 12 Heap ..................................................................................................................... 13 Hashing, Hash tables ......................................................................................... 14 Sets, Maps ............................................................................................................ 16 Matrices................................................................................................................ 17 Graphs ................................................................................................................. 18 Ternary Search Trees ......................................................................................... 20 Trie ....................................................................................................................... 21 String Searching .................................................................................................. 22 Dynamic Programming ..................................................................................... 23 2 Data Structures, Growth of Functions Implementations: o BitSet o Array (sorted, accumulative, sparse, circular) o Linked list (sorted) o SkipList o Binary search tree (self-balancing – AVL, Red-Black, 2-3, etc) o Ternary search tree o Trie o Heap o Hashtable (open-address, buckets) Interfaces: o Stack o Queue o Priority queue o Deque o List o Map o Set o Matrix o Graph Growth of Functions o O(1) – constant o O(logN) – logarithmic Problem size cut by constant fraction at each step o O(N) – linear Small amount of processing is done on each input element o O(N*logN) Problem is solved by breaking up into smaller subproblems + solving them independently + combining the solutions o O(N^2) – quadratic Processing all pairs of data items (double-nested loop) o O(N^3) – cubic Processing triples of data items (triple-nested loop) o O(2^N) – exponential Brute-force solutions 3 Basic Sorting S – Stable algorithm, U – Unstable algorithm Bubble sort – swap from left to right, bubling up the maximum. S o Sorting people by their height o Each pass brings one element into its final position Selection sort – select maximum and put it to the right. U o Sorting books on the shelf o Each pass brings one element into its final position Insertion sort – take next and put at the proper location. S o Sorting cards in the hand o Very effective on nearly sorted data ! Bubble / insertion sort – elements move only one location at a time. Bubble / selection sorts – always O(N^2) comparisons, unlike insertion. Counting sort – sorts N positive numbers from limited range M: o Array – count the number of appearances of each number BitSet – if numbers don’t repeat (count = 0/1) O(N + M) – effective only if M << N*logN o Map (value => count) – store + sort keys n – number of unique elements O(N + n*log(n)) – effective only if n << N Radix (punchcards) sort – sorts N positive numbers, each D digits max: o Orders successively on digit positions, from right to left o j = [0,9], a[ j ] points to the queue (linked list) of numbers o Each pass preserves elements’ relative order from the previous one o O(N) – each of D passes o O(N * D) – effective only if D << logN Bucket sort – sorts N positive numbers distributed uniformly: o Divide the interval into N buckets (each bucket - 1/N of interval) o Distribute all numbers into buckets o Sort numbers in each bucket using insertion sort o Go through the buckets in order and collect the result o O(N) total, insertion sorting of all buckets – O(N) Multiple-pass sort – sorts large inputs by reading them multiple times: o When entire input (stored externally) doesn’t fit into memory o Each pass reads and sorts an interval (0-9999, 10000-19999, etc) 4 Advanced Sorting Sorting algorithm analysis: o Time - best case, worst case, average case (random input) o Number of comparisons between the keys o Number of time elements are moved Shellsort – H-Sorting: 40 -> 13 -> 4 -> 1 (H = 3H + 1) U o Modified insertion sort: elements move H positions instead of just 1 o H = 1: insertion sort is very fast when elements are almost in place Quicksort – each partition places one item in the final sorted position. U o Partioning o Choosing pivot: First / last / middle / random element Midpoint of the range of elements Median of K random elements o O(N*logN) fastest average case if pivot breaks array in half o O(N^2) worst case when input is already sorted or constant Depends on partitioning (one/two-sided) and pivot o Speedups: Choosing pivot carefully (median of K random elements) Small sub-arrays are sorted using insertion sort Keep array of pointers to records and swap them instead o Finds N-th smallest/largest element(s) without sorting all input Apply quicksort on a relevant partition only Mergesort – merge two sorted lists in one, creates new list. S o O(N*logN) guaranteed o Accesses the data primarily sequentially, good for linked lists Heapsort – uses max-heap without creating a new array. U o O(N*logN) worst case and average case Stability can be achieved with compound comparator (compare >1 field) 5 Linked Lists Header: no header / header node Terminator: null / sentinel Links: single / double Pointer: to first / to first and last Sorted lists – fast merge o Self-organizing lists – speed up repeating queries Skip lists – O(logN) search/insert/remove operations (on average) o Search may be faster if element is found on upper levels 6 Stacks, Queues, Deques Linear data structures – elements added at ends and retain insertion order Stack – LIFO: o Usages – recursion, parentheses, Hanoi, postfix calculator o DFS backtracking: Moves deeply into the structure before alternatives One path is analyzed as far as possible before alternatives May find a solution more quickly but not necessarily the shortest one o Implementations: array, linked list o Java Collections – Stack (extends Vector), Collections.asLifoQueue Queue – FIFO: o Usages – buffers, caterpillar, producer/consumer o BFS backtracking: All paths are investigated at the same time What would happen if ink were pour into the structure … More thorough but requires more time than DFS Always finds shortest path – all paths of length N+1 are examined after all paths of length N o Implementations: (circular) array, (circular) linked list, two stacks Circular linked list – “ring buffer” o Java I/O – PipedInputStream, PipedOutputStream (circular array) Deque – combination of stack and queue, double-ended queue: o Implementations: (circular) array, (circular) linked list o Stack + Queue = Scroll: insertions at both ends, removal from one 7 Binary Search and Insertion Recursive binary search Iterative binary search Binary insertion – better than populate + sort (N*logN ~ logN) Performing a sort after every insertion into a list can be very expensive. Performing a sort after all of the data has been added to a list is more expensive than inserting the data in sorted order right from the start. 8 Binary Search Trees H = Height – the longest path from root to leaf Ancestor / descendant Binary search tree: o No node has more than two children o All children to the left have smaller values o All children to the right have larger values. o In-order traversal produces a sorted order of elements o Array implementation: Children of i – (2i + 1), (2i+2) Parent of i – ((i – 1) / 2 ) Full binary tree of height H – N = ((2^(H + 1)) – 1) – number of nodes Complete (balanced) binary tree: o Every node has 2 children except bottommost two levels (l -> r) o Height-balanced – for each node: abs(H(left) – H(right)) <= 1 o Performance characteristics are those of a sorted list o H <= logN: (2^H – 1) <= N <= ((2^( H + 1)) – 1) o Tree with N internal nodes: N + 1 external nodes 2N links: N - 1 links to internal nodes, N + 1 to external nodes o Insert/Delete operations break the tree balance When data inserted/deleted is ordered – shoestring! o Self-balancing: AVL, Red-Black/AA, Splay, 2-3/B-Tree, Scapegoat Minimum – follow the left links (left-most: leaf or with right child) Maximum – follow the right links (right-most: leaf or with left child) Successor – one with the next largest value (smallest of all larger): o Has right child – minimum (leftmost) of that o Otherwise – climb up until first “right-hand turn” Climb up from right child, stop when you did so from left one TreeMap.successor() Predecessor – one with the next smallest value (largest of all smaller): o Has left child – maximum (rightmost) of that o Otherwise – climb up until first “left-hand turn” Climb up from left child, stop when you did so from right one TreeMap.predecessor() Deletion: o No children – remove it o One child (left or right) – replace it with deleted node o Two children – swap with successor/predecessor => above options Traversals: o DFS (stack): in-order, pre-order, post-order o BFS (queue): level-order o Euler tour: beforeLeft(), left, inBetween(), right, afterRight() 9 In-order traversal: o Recursive: left subtree, node, right subtree o Iterative: start with minimum (leftmost) and visit each successor Used by java.util.TreeMap iterators Using stack – (Classic DS in Java – p.344) Pre-order traversal: o Recursive: node, left subtree, right subtree o Iterative: requires explicit use of stack (Classic DS in Java – p.343) o X descendant of Y: pre(y) <= pre(x) <= pre(y) + desc(y) pre(n) – position of node n in preorder listing of nodes desc(n) – number of proper descendants of node n Nodes in the sub-tree with root n numbered consecutively from pre(n) to pre(n) + desc(n) o Amortized analysis: Complete iteration – O(N) Each step – [O(1), O(N)], O(1) amortized cost Post-order traversal: o Recursive: left subtree, right subtree, node o Iterative: requires explicit use of stack (Classic DS in Java – p.341) o X descendant of Y: post(y) – desc(y)<= post(x) <= post(y) post(n) – position of node n in postorder listing of nodes desc(n) – number of proper descendants of node n Nodes in the sub-tree with root n numbered consecutively from post(n) – desc(n) to post(n) o Amortized analysis – same as for pre-order AVL tree: o Height-balanced o Sentinel (empty leaf marker) height is always -1 o Insert/Delete – two passes are necessary: down (insert/delete node) and up (rebalance tree). Not as efficient as red-black trees. o Rebalance: climb up from inserted/deleted node and rotate: Inbalanced Left-heavy Left-heavy Left-heavy Right-heavy Right-heavy Right-heavy => => => => => => => Child Left-heavy Balanced Right-heavy Right-heavy Balanced Left-heavy : : : : : : : action right rotation right rotation child left rot. + right rot. left rotation left rotation child right rot. + left rot. Splay tree (Wikipedia): o Self-optimizing: accessed elements are becoming roots When node is accessed – splay operation moves it to the root o Insertion / lookup / removal – O(logN) amortized o Bad uniform access (accessing all elements in the sorted order) o Works with nodes containing identical keys, preserve their order 10 2-3 tree: o Each interior node has 2 or 3 children o Keeps smallest value from the 2-nd / 3-rd (if exists) child sub-tree o Each path from the root to a leaf has the same length o Insert: add + split all the way up (if required) o Deletion: remove + join all the way up (if required) B-Tree: o 2-3 tree extension o Disk access: Seek time – repositioning of disk heads Latency – disk rotation into position Transfer time – of data o Designed for managing indexes on secondary storage providing efficient insert, delete, and search operations. Tends to be broader and shallower than most other tree structures, so the number of nodes traversed tends to be much smaller. o Balanced – O(logN) search time o Variations – B+Trees, B×Trees, etc. Designed to solve other aspects of searching on external storage. o Each node contains up to N keys (N determined by the size of a disk block) o Non-leaf node with K keys has K+1 children o Each non-root node is always at least half full o Insertion (push up!) – tree grows from the leaves up: Start at the root and search down for a leaf node Insert new value in order If node becomes “full” – split it to two (each containing half of keys) The “middle” key from the original node is pushed up to the parent and inserted in order with a reference to the newly created node Height of a B-Tree only increases when root node becomes full and needs to be split. Whenever the root node is split, a new node is created and becomes the new root o Deletion (pull down!) – involves merging of nodes: To correct the K/K+1 structure – some of the parent keys are redistributed among the children: keys from parent nodes are pulled down and merged with those of child nodes Height of a B-Tree only decreases when root node must be pulled down (or removed). 11 Red-Black tree: o Java Collections – TreeSet / TreeMap implementation o The most efficient balanced tree when data is stored in memory o Searching works the same way as it does in an ordinary binary tree o Insert/Delete – top-down single-pass (rebalance while searching) Bottom-up – two passes (insert/delete + rebalance on the way up) o Nodes are colored – every node is either black or red o Insert/Delete – red-blacks rules keep the tree balanced: Root is always black If a node is red – its children must be black “Black height” (inclusive number of black nodes from between a given node and the root) must be the same for all paths from root to leaf or “null child” (a place where a child could be attached to a non-leaf node) o Correction (rebalancing) actions: Color flips Rotations o Delete is complex – sometimes nodes are just marked as “deleted” 12 Heap Max-heaps, min-heaps – element stored at the root o Min-heaps – priority queue o Max-heaps – heapsort Complete (balanced) binary tree with heap property: o Min-heap : node’s value <= any child’s value o Max-heap : node’s value >= any child’s value Siftup (usually, last element) – swap with parent Siftdown (usually, root) – swap with lesser (smallest) child Priority queue: o Find and remove the smallest value fast o Insert – add last and siftup() o ExtractMin – take root, replace root with last and siftdown() Heapsort with one array – max-heap: o Build array into heap – grow heap and squeeze arbitrary elements: siftup all elements o Use the heap to build sorted sequence – squeeze heap and grow sorted area: swap root (top) with array’s last element, siftdown root. Skew heaps: o Heap implemented as a tree, no fix-size limitations o No attempt to maintain the heap as balanced binary tree o Order of left and right children doesn’t affect heap-order property o Insert/delete operations – O(logN) amortized: Left and right children are swapped Merging two trees into single heap Unbalanced tree affects badly performance of one operation … but subsequent operations are very fast 13 Hashing, Hash tables O(1) data lookup, randomizing vs. ordering Hash table size – prime number (^2 in Java Collections: % -> & / >>) Key + Hash function => Hash value + (% / & / >>) => Hash table index Hash function: o Transforms possibly non-integer key into an integer index Long (64 bits) is required, integer (32 bits) is not enough o Perfect: N elements => [0, N-1] without collisions Not available for an arbitrary set of elements o Good: uniformly distributes all elements over range of hash values o Mapping – int values from key are transformed or mapped o Folding – key is partitioned, int values are combined (+/-/XOR/…) o Shifting – bit shifting, folding with commutative operator (+) o Casting – converting numeric type into an integer o java.lang.String – for i in [0..(length-1)]: H = (31*H + ch[ i ]) “elvis” = ((((e * 31 + l) * 31 + v) * 31 + i) * 31 + s) Load factor – average number of elements in each bucket o N / M, N – number of elements, M – size of the hash table Collisions: o Resizing + recalculating all hashes – expensive (time and space). Required when table is full or when load factor is reached. o Open-address hashing: All elements are stored in the hash table itself Probe for free location: Linear probing by a constant amount n (usually, n=1) Quadratic probing: n+1, n+4, n+9, n+16, etc Double hashing – hashes the original hash value Random probing – reproducible! Load factor – [0, 1] Clustering! Removal – put a tombstone marker instead of just empty cell Degenerates into linked list as load factor increases – O(N) o Buckets – store n items at each bucket (linked list, AVL tree) Load factor > 1 but usually kept < 1 Performs as O(1) regardless of bucket’s list length ! Requires good hashing function (distributes uniformly) Fixed-size bucketing – limit bucket’s list in size, use next bucket’s list when becomes full 14 java.uti.HashMap: o Uses buckets with linked list o Size – number of entries (key/value mappings) o Capacity – number of buckets (array’s length) in the hash table o Load factor – how full the table gets before it’s capacity is increased The definition differs from the classic one above … but in the end, they’re the same due to rehashing policy 0.75 by default o Threshold – ( capacity * load factor ) o Rehashed to twice the capacity when size >= threshold o Initial capacity > ( maximum entries / load factor ) => no rehash 15 Sets, Maps Map: aka dictionary, lookup table, associative array, etc o Sorted array o (Sorted) linked list – O(N), predictable iteration o Skiplist o Binary self-balancing search tree – O(logN), predictable iteration o Hashtable – O(1) , random iteration o Java Collections: HashMap LinkedHashMap – hashtable and predictable iteration NavigableMap extends SortedMap – keys total ordering ConcurrentSkipListMap TreeMap Set: unordered pool of data containing no duplicates o Usually backed by Map o Set operations: Union : Collection.addAll(), bitwise OR in BitSet Intersection : Collection.retainAll(), bitwise AND in BitSet Difference : Collection.removeAll() Subset : Collection.containsAll() Union-find : Divide elements into partitions (merge of sets) Are two values end up in the same partition/set? o Java Collections: HashSet LinkedHashSet – hashtable and predictable iteration NavigableSet extends SortedSet – elements total ordering ConcurrentSkipListSet TreeSet 16 Matrices Two-dimensional array: a[row][column] Binary matrix – BitSets array: row – BitSet, column – bit Sparse array: o Array : empty entries, a[ index ] o Linked list : (index / value), find( index ) o Map : index => value, get( index ) Sparse matrix – two-dimensional sparse array: o Any combination of above (9 options) Map of Maps : Map.get( row ).get( column ) Map of Linked lists : Map.get( row ).find( column ) Array of Linked lists : a[ row ].find( column ) o Orthogonally linked-list – 2 arrays for rows / columns + linked lists Allows matrix traversal by either rows or columns o Clean up the structure when default values are assigned ! 17 Graphs Vertices V and edges E Directed / undirected Weighted / unweighted Directed graphs: o Acyclic (DAG) – good for expression with common sub-expressions o DFS traversal – tree arcs, back arcs, forward arcs, cross arcs Spanning tree – edges subset forming a tree with all vertices Test for DAG acyclity – cycle DFS finds a back arc Strongly connected components: Maximal set of vertices with path from any vertex in the set to any other vertex in the set DFS + number vertices, reverse edges, DFS from high o Topological sort / order: Find vertex without successor, add to the front of the result Builds the result list from the last to the first vertex DFS + print vertex after traversing all it’s adjacent vertices Prints vertices in a reverse topological order Undirected graphs: o Connected graph – every pair of it’s vertices is connected o Cycle – path of length >= 3 that connects a vertex to itself o Free tree – connected + acyclic graph: Every tree with N >= 1 vertices contains exactly N-1 edges Adding an edge to a free tree causes a cycle o Spanning tree – free tree connecting all the vertices o Minimum cost spanning tree: Data Structure and Algorithms (Aho et al.) – p.234 MST property Prim – start with U of one vertex and “grow” it using MST Kruskal – analyze edges in order, connect components Adjacency matrix – stores the vertices (good for dense graphs – E ~ V^2): o Graph vertices are matrix indices o Matrix value = 0/1 or eternity/weight o O(1) – Is there an edge between vertex A and vertex B ? o O (V^2) space regardless of the number of edges … unless sparse matrix is used Edge list – stores only the edges (good for sparse graphs – E << V^2): o Unweighted graph – each vertex holds a Set of adjacent vertices o Weighted graph – Set => Map (vertex => weight) o O (V + E) – Process all the edges in a graph (rather than O(V^2)) o O (V + E) space 18 Single-source reachability – set of vertices reachable from given vertex o Unweighted edge list – DFS (stack), BFS (queue) search O(E), E <= V^2 o Weighted positively edge list – Dijkstra’s “greedy” algorithm: Finds shortest path to all reachable nodes in graph DFS + priority queue is used instead of stack O(E*logE) All-pairs reachability – single-source simultaneously for all vertices o Unweighted adjacency matrix – Warshall’s algorithm: Adjacency matrix => reachability matrix 3 nested loops : k, i, j = [o, V-1] Innermost loop : a[i][j] |= a[i][k] & a[k][j] O(V^3) o Weight adjacency matrix – Floyd’s algorithm: Finds shortest path between all pairs of vertices Modification of Warshall’s algorithm: (|= -> =), (& -> +) Innermost loop : a[i][j] = a[i][k] + a[k][j] (if less than) O(V^3) Recovering the shortest path – keep matrix p[i][j] = k 19 Ternary Search Trees Specialized structures for storing and retrieving strings A node holds: o Reference to the smaller and larger nodes – like binary search tree o Single letter from the world – instead of holding the entire word o Child node – subtree of nodes containing any following letters Performs far fewer individual character comparisons compared with an equivalent binary search tree Words that share a common prefix are compressed – prefix-sharing Not every letter of the alphabet is represented on each level Searching for a word: o Perform a binary search starting with the first letter of the word o On matching node – move down one level to its child and start again with the second letter of the word o Continue until either all the letters are found (match!) or you run out of nodes (no match!) Inserting a word: o Add extra leaf nodes for any letters that don’t already exist Prefix searching (finding all words with a common prefix): o Perform a standard search of the tree using only the prefix o Perform an in-order traversal looking for every end-of-word marker in every subtree of the prefix: Traverse the left subtree of the node Visit the node and its children Traverse the right subtree Pattern matching (finding all words matching a pattern, like “a-r--t-u“): o Similar to a straight word search except that anytime you come across a wildcard, rather than look for a node with a matching letter, you instead visit each node as if it was a match. 20 Trie Retrieval Appropriate when number of distinct prefixes p << length of all words l o l/p >> 1 o Dictionary: l/p < 3 (hash table may be more space-efficient) Each path from the root to a leaf corresponds to one word in the set Insert/delete/search – O(length of the word) Considerably faster then hash tables for dictionaries with string elements 21 String Searching Finding one string within another Worst case – no pattern character occurs in the text Brute-force algorithm: o Start at the leftmost character o Compare each character in the pattern to those in the text Match End of text Move the pattern along one character to the right and repeat o Worst case – every pattern char is compared with every text char: O( length of text * length of pattern ) Boyer-Moore algorithm: o Many of the brute-force algorithm moves were redundant o Large portions of text are skipped – the longer the pattern, the greater the improvement o Shifts the pattern N places to the right when mismatch is found o Pattern is compared backwards – from right-to-left o When mismatch – “bad character” is a text character where text and pattern mismatch (rightmost due to backward comparing) o If this character exists in the pattern – shift it right to align them If you need to shift pattern left – shift it right one position o Otherwise, shift pattern right just beyond the “bad character” o Worst case – length of pattern is skipped each time: O ( length of text / length of pattern ) java.lang.String.indexOf(): o Find location of the pattern’s fist character in the text – i o Try matching the rest of the pattern in the text starting from (i+1) o Repeat until whole pattern is matched or end of text 22 Dynamic Programming Top-Down – save known values o Memoization o Mechanical transformation of a natural problem solution o The order of computing sub-problems takes care of itself o We may not need to compute answers to all sub-problems Bottom-Up – precompute the values 23