bailey

advertisement

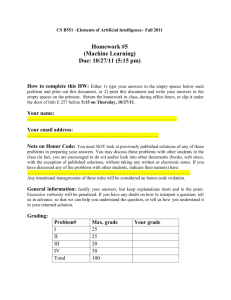

.ls 4

ANSI-C IMPLEMENTATION OF THE BAILEY-MAKEHAM WORKSTATION

William H. Rogers, Ph.D.

April 27, 1991

Work supported by contract 500-90-0048, Health Care Financing Administration, and placed in the public domain.

This report describes a set of computer programs written to estimate the Bailey-Makeham survival model. The programs are designed to operate on workstations delivered to the Professional Review Organizations, alongside and integrated with the STATA statistical package produced by Computing Resource Center. In addition, the programs are designed to work in a standalone environment using ASCII files.

This report comes in three parts:

1. The Bailey-Makeham model and the numerical strategy for estimation.

2. ASCII user's guide for the Bailey-Makeham model.

3. STATA user's guide for the Bailey-Makeham model.

THE BAILEY-MAKEHAM MODEL AND ITS ESTIMATION

@The Bailey-Makeham Model@

The Bailey-Makeham model is designed to estimate failure time, or

"survival" variables in which the time to failure follows a hazard

that decreases exponentially to a baseline rate. This model is

typically used to describe time to rehospitalization or death,

following a hospitalization.

Intuitively, these bad outcomes have two causes: underlying chronic illness or simply the fragility of old age (represented by the baseline hazard rate), and acute illness or risk that is coincident with the hospitalization and from which the patient recovers, unless the patient dies. The initial excess hazard represents the severity of the illness, and the rate of exponential decay describes the recovery time.

Mathematically, the model was described by Bailey(1988):

r(t) = $\alpha$ exp(-$\gamma$t) + $\delta$

where r(t) describes the incremental hazard for failure per unit time.

The parameters $\alpha$, $\gamma$, and $\delta$ are called the structural

parameters, since

they describe the deterministic information about each individual. The

hazard function describes the stochastic behaviour of that individual

subject to the structural parameters.

The parameter $\delta$ represents the long-term baseline risk, the parameter $\alpha$ describes the excess short-term risk, and $\gamma$ is the decay rate (in units of 1/time). Thus, a large value of $\gamma$ means that the process decays quickly, and a small value of $\gamma$ means that it decays very slowly.
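
The roles of the three parameters can be seen by evaluating the hazard directly. The following is a minimal sketch only; the function name and the numerical values are illustrative assumptions and are not taken from the programs described in this report.

```python
import math

def hazard(t, alpha, gamma, delta):
    """Bailey-Makeham hazard r(t) = alpha * exp(-gamma * t) + delta."""
    return alpha * math.exp(-gamma * t) + delta

# Illustrative values only (assumed, not estimates from the report).
alpha, gamma, delta = 0.005, 0.02, 0.001

# The hazard starts at alpha + delta and decays toward delta.
for t in (0, 35, 350):
    print(t, round(hazard(t, alpha, gamma, delta), 6))
```

With these values the hazard starts at alpha + delta and settles toward delta after a few multiples of 1/gamma.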

The structural, or "natural," parameters $\alpha$, $\gamma$, and $\delta$ are functions of covariates:

$\alpha = \exp(\alpha_0 + \alpha_1 x_1 + \dots + \alpha_k x_k)$

$\gamma = \exp(\gamma_0 + \gamma_1 x_1 + \dots + \gamma_k x_k)$

$\delta = \exp(\delta_0 + \delta_1 x_1 + \dots + \delta_k x_k)$

The choice of covariates is formally identical across all three

parameters. However, covariates may be effectively removed from the

model by fixing (constraining) their parameter values at zero. They

may also be fixed at any other value.
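
The mapping from covariates to a structural parameter, and the effect of fixing a coefficient at zero, can be sketched as follows. The helper name and all coefficient values are hypothetical and serve only as illustration.

```python
import math

def structural_parameter(coefs, x):
    """Return exp(c0 + c1*x1 + ... + ck*xk); coefs[0] is the constant term."""
    linear = coefs[0] + sum(c * xi for c, xi in zip(coefs[1:], x))
    return math.exp(linear)

# Hypothetical coefficients for alpha: the second covariate is fixed at zero,
# so it has no influence on alpha whatever its value.
alpha_coefs = [-5.0, 0.2, 0.0]

a1 = structural_parameter(alpha_coefs, [1.5, 0.0])
a2 = structural_parameter(alpha_coefs, [1.5, 7.0])
print(a1, a2)
```

Because each parameter is the exponential of a linear predictor, it is always positive, and a coefficient fixed at zero removes its covariate from that parameter only.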

Let R(t) be the survivor function:

$\log R(t) = -\int_0^t (\alpha \exp(-\gamma s) + \delta)\,ds = -(\alpha/\gamma)(1 - \exp(-\gamma t)) - \delta t$

Censored observations (nonfailures) have log likelihood log R(t).

Observed failures are assumed to occur in some interval D subsequent to the observed survival time T. That is, the individual survived to time T but was known to have failed by T+D. The likelihood of such an observation is R(T) - R(T+D). The log likelihood is log(R(T) - R(T+D)), which for small D is approximately:

log r(T) + log R(T) + log(D)

Thus, the numerical value of the log likelihood is substantially

affected by the choice of the interval length D, as well as the units

for T. The software permits the interval length to vary according to

the subject. For example, if patient A is observed on a daily basis and

patient B on a weekly basis, D would be 1 day for A and one week for B.
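
The likelihood contributions described above can be written out as a short sketch. The function names and parameter values are assumptions chosen for illustration; this is not the code of the programs themselves.

```python
import math

def hazard(t, alpha, gamma, delta):
    return alpha * math.exp(-gamma * t) + delta

def log_survivor(t, alpha, gamma, delta):
    """log R(t) = -(alpha/gamma) * (1 - exp(-gamma*t)) - delta*t."""
    return -(alpha / gamma) * (1.0 - math.exp(-gamma * t)) - delta * t

def loglik_failure_exact(T, D, alpha, gamma, delta):
    """log(R(T) - R(T+D)) for a failure known to occur in (T, T+D)."""
    r_start = math.exp(log_survivor(T, alpha, gamma, delta))
    r_end = math.exp(log_survivor(T + D, alpha, gamma, delta))
    return math.log(r_start - r_end)

def loglik_failure_approx(T, D, alpha, gamma, delta):
    """Small-interval approximation: log r(T) + log R(T) + log(D)."""
    return (math.log(hazard(T, alpha, gamma, delta))
            + log_survivor(T, alpha, gamma, delta) + math.log(D))

params = (0.005, 0.02, 0.001)          # illustrative alpha, gamma, delta
exact = loglik_failure_exact(30.0, 1.0, *params)
approx = loglik_failure_approx(30.0, 1.0, *params)
shift = loglik_failure_approx(30.0, 7.0, *params) - approx
print(exact, approx, shift)            # shift equals log(7)
```

Under the approximation, changing the interval from 1 to 7 time units moves the log likelihood of each failure by log(7), which is why likelihood values depend on the choice of D.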

@Solution Algorithm@

The solution algorithm is based loosely on the modified Marquardt

maximization procedure programmed by Jim Summe. Briefly, this method

produces a compromise between steepest descent methods and Newton-

Raphson methods, depending on how close to quadratic the function

appears to be. The program emphasizes the steepest descent method if it

runs into numerical difficulties, such as non-positive definite second

derivatives.

For more information on this algorithm, see Stewart(1973)

and Bard(1974). The algorithm was modified by Jim Summe and has

been further modified here.

This algorithm has the following parameters that control convergence:

ssexp -- The relative stepsize for parameter values, as a power of 10, default -5. The algorithm is not considered to have converged while changes in parameter values are greater than this amount.

lambda -- The Marquardt parameter. Intuitively, this controls how much to mix a steepest descent step with a Newton step. It is changed by the program from step to step, depending on convergence experience, so that if the likelihood function appears to be quadratic, the Newton solution will be emphasized. The initial default is 1.0.

rhomax -- The maximum stepsize in the parameter values allowed at any one step.

tolerance -- The minimum change in log likelihood that will be accepted as convergence.
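
The general idea of a Marquardt compromise can be illustrated in one dimension. The sketch below is a generic textbook-style damped Newton iteration written for this illustration; it is not the modified procedure used by the programs. The update rule for lambda (divide or multiply by 10), the form of the stepsize test, and the test function are all assumptions.

```python
def marquardt_maximize(f, grad, hess, x, lam=1.0, ssexp=-5, maxiter=100):
    """Maximize a one-parameter function with a damped Newton step."""
    for _ in range(maxiter):
        g, h = grad(x), hess(x)
        curvature = max(-h, 0.0)        # ignore curvature of the wrong sign
        step = g / (curvature + lam)    # small lam: Newton; large lam: gradient step
        if f(x + step) > f(x):
            x += step
            lam = max(lam / 10.0, 1e-12)    # step was profitable: trust Newton more
            if abs(step) < 10.0 ** ssexp * (1.0 + abs(x)):
                break                   # stepsize criterion met
        else:
            lam *= 10.0                 # unprofitable step: damp more heavily
            if lam > 1e12:
                break
    return x

# A smooth test function with its maximum at x = 2 (assumed for illustration).
f = lambda x: -(x - 2.0) ** 2 - 0.1 * (x - 2.0) ** 4
grad = lambda x: -2.0 * (x - 2.0) - 0.4 * (x - 2.0) ** 3
hess = lambda x: -2.0 - 1.2 * (x - 2.0) ** 2

x_hat = marquardt_maximize(f, grad, hess, x=0.0)
print(x_hat)
```

As lambda shrinks after each profitable step, the iteration approaches a pure Newton step, which mirrors the behaviour described for the lambda parameter above.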

Unfortunately, the nicely interpretable Bailey-Makeham model appears to have a nasty likelihood function. A well-behaved problem such as logistic maximum likelihood has a concave log likelihood surface with a single maximum, but that is not the case here. The likelihood function may have flat spots, saddle points, and local maxima that are easily confused with the global maximum, even in seemingly well-defined problems. The local maxima appear to fit individual points or clumps of points with early failure times. A dataset that demonstrates the type of problem that can occur is discussed in Appendix A.

Fortunately, these problems seem to arise only rarely in the kinds of

datasets HCFA analyzes. For example, Jim Summe's substantial experience

has been "I have seen no evidence of this. However, the model is very

richly parameterized. This richness is both a blessing and a curse. A

curse because models can easily be specified which include parameters

that cannot be readily distinguished in the outcome space. Of course,

collinearity among the variables being modelled will cause some

problems but even if the variables are not collinear, parameters

associated with them will not be distinguishable."

As with just about every other kind of likelihood method, there is a

problem in the Bailey-Makeham model with likelihood functions that

maximize at infinite values of the parameters. Provisions are made for

dealing with this situation automatically.

We can also show cases where the Bailey-Makeham model elucidates

structure that was not obvious in simpler survival models. A

success of this kind is worth many failures.

The following modifications were made to the maximization procedure in

order to improve its convergence properties:

1. A reference model is fit first, consisting only of the constant term for each of the three structural parameters. Remaining parameters are released only after the reference model has converged.

2. Starting values are estimated for the reference model from the data.

3. Covariates (other than the constant) are scaled by their standard deviations in certain computations.

4. Additional likelihood evaluations are performed when certain conditions apply, and the step length is reduced if an unprofitable step is contemplated.

5. An option has been added to assess whether parameters are tending to infinity, and to fix them at appropriate values if it appears that they are.

6. An option has been added to quit iterations on the basis of a change in the log likelihood rather than a change in parameter values.

@Ancillary programs@

Programs are also supplied for the following purposes:

1. To evaluate predictions of the survival probability at specific points in time.

2. To estimate values of the structural parameters and their standard errors for individual observations.

3. To perform an evaluation of the surface of the likelihood function at the point of convergence.

4. To test sets of structural parameters for significance, using the estimated covariance matrix. (* NOTE: This is not supplied directly as a part of this contract, but will be provided at a future date)

2. ANSI INTERFACE: ARGUMENTS TO THE PROGRAM

@A. The Bailey-Makeham Model@

The program commands are communicated to the program through a

command file. This section describes the syntax of that file.

Note: if you use the program from STATA, this syntax is not of

direct concern to you because the commands will be given directly

in STATA.

The ANSI syntax of this program is frankly old-fashioned. It is

designed this way because this program is intended to be used as a back

end to STATA. However, it is possible to invoke the command language

directly.

The syntax consists of a series of statements. Each statement consists of a keyword followed by sets of tokens, ending with a semicolon. Consistent with C style conventions, everything is lower case.

There are 5 types of statement:

Type 1: How the data are acquired. There are 2 methods: from STATA datasets and from ASCII datasets. The relevant keywords are: stata, ascii, read, format.

Type 2: Program options. Keywords: options, logfile, output.

Type 3: Independent and dependent variables. Two lists: depend and varlist.

Type 4: Starting values. Three lists: sv_alpha, sv_gamma, and sv_delta.

Type 5: Fixed parameters. Again, three lists: fx_alpha, fx_gamma, and fx_delta.

Using your text editor, create an ASCII file with the necessary commands. The following example conducts an analysis of some data that are described in the October 19, 1990 issue of JAMA on the PPS Medicare intervention:

options trace interval=1 autofix=.005;

logfile "chf3.log";

stata "chf.dta";

depend Survive Dead;

varlist Acute Chronic age NurseHm female Post1984;

output "chf3.out"; /* this is the binary output */

This 6-line command file was stored in a dataset named chf3.cmd. In the same directory was a STATA dataset chf.dta with the 8 variables Acute ... Post1984, Survive, and Dead. The dataset names themselves have no special significance. However, STATA generally recognizes the "dta" suffix as denoting its internal datasets. The variable names can be any combination of letters and numbers, upper or lower case (case matters), starting with a letter.

The option "interval" defines the interval D in which all failures

occur, defined in the introduction. D may vary in the dataset,

if there is uneven followup, for example. In this example, it is

1 unit--the units happen to be days. The trace option requests

more detail on the progress of the maximization. The autofix

option specifies the aggressiveness with which the program will

attempt to solve "infinite parameter" problems.

Two datasets are created. First, chf3.log is an ASCII dataset that will

contain details on the maximization process. Second, chf3.out is a

binary dataset that will contain the values of the covariance matrix. A

utility program is provided to create an ASCII printout of the

covariance matrix from this binary dataset. The purpose of the binary

dataset is to communicate all important information in the problem to

other programs in the set.

Note that comments may be enclosed by "/*" and "*/".
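
The statement structure (keyword, tokens, terminating semicolon, optional comments) can be illustrated with a small reader. This is a simplified sketch written for illustration and is not the parser used by the program; the function name is hypothetical, and semicolons or comment markers inside quoted strings are not handled.

```python
import re

def parse_command_file(text):
    """Split command-file text into (keyword, tokens) statements."""
    text = re.sub(r"/\*.*?\*/", " ", text, flags=re.S)   # drop /* ... */ comments
    statements = []
    for raw in text.split(";"):
        # A token is either a double-quoted string or a run of non-blank characters.
        tokens = re.findall(r'"[^"]*"|\S+', raw)
        if tokens:
            statements.append((tokens[0], tokens[1:]))
    return statements

example = '''
options trace interval=1 autofix=.005;
logfile "chf3.log";
stata "chf.dta";
depend Survive Dead;
varlist Acute Chronic age NurseHm female Post1984;
output "chf3.out"; /* this is the binary output */
'''

statements = parse_command_file(example)
for keyword, tokens in statements:
    print(keyword, tokens)
```

Applied to the example command file, the sketch yields six statements, one per keyword.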

While the program is running, the user sees the following on the screen:

Iteration 0: Log Likelihood = -14666.9614 (1)
Iteration 1: Log Likelihood = -14384.0103 (2)
Iteration 2: Log Likelihood = -14344.7678 (2)
Iteration 3: Log Likelihood = -14337.8540 (2)
Iteration 4: Log Likelihood = -14337.8475 (2)
Iteration 5: Log Likelihood = -14337.8475 (2)
Iteration 0: Log Likelihood = -14337.8475 (1)
Iteration 1: Log Likelihood = -14209.0564 (2)
Iteration 2: Log Likelihood = -14121.4334 (2)
Iteration 3: Log Likelihood = -14029.5159 (2)
Iteration 4: Log Likelihood = -14002.5517 (2)
Iteration 5: Log Likelihood = -14001.2944 (2)
Iteration 6: Log Likelihood = -14001.2860 (2)
Iteration 7: Log Likelihood = -14001.2860 (2)
Stopping with argument 0.

Stopping with argument 0 means that the maximization finished;

the user did not press Ctrl+Break or Command+. on the Macintosh.

(Other values are system specific and refer to the type of kill

signal received by the program. Kills are always acknowledged at

the end of an iteration, and an attempt is made to write an output

file showing progress so far.)

The output from the log consists of details on the above (not shown)

and the following summary:

(convergence achieved)

Bailey-Makeham Survival Model 0.4        Number of observations = 2590
Log Likelihood (C) = -14337.848          Chi2( 18)              = 673.123
Log Likelihood     = -14001.286          Prob>chi2              = 0.0000

Structural        |
parameter    Var. |       Coef.   Std. Err.        t    Sig.       Mean
------------------+----------------------------------------------------
alpha       Acute |    0.131239   0.0195267    6.721  0.0000    32.3816
gamma       Acute |    0.193007    0.028999    6.656  0.0000    32.3816
delta       Acute |  0.00999832   0.0123248    0.811  0.4173    32.3816
alpha     Chronic |  -0.0153143   0.0132135   -1.159  0.2466    38.3352
gamma     Chronic |   -0.112104   0.0219194   -5.114  0.0000    38.3352
delta     Chronic |   0.0208428  0.00886573    2.351  0.0188    38.3352
alpha         age |   0.0145094   0.0100164    1.449  0.1476    78.3613
gamma         age |  0.00547471   0.0151997    0.360  0.7187    78.3613
delta         age |    0.013165  0.00466809    2.820  0.0048    78.3613
alpha     NurseHm |    0.452992    0.217983    2.078  0.0378  0.0976834
gamma     NurseHm |    0.855248    0.297866    2.871  0.0041  0.0976834
delta     NurseHm |    0.107426    0.123731    0.868  0.3854  0.0976834
alpha      female |   -0.196235     0.16327   -1.202  0.2295    0.55251
gamma      female |   -0.427492    0.259656   -1.646  0.0998    0.55251
delta      female |    -0.22033   0.0781749   -2.818  0.0049    0.55251
alpha    Post1984 |   -0.540054    0.158425   -3.409  0.0007   0.528185
gamma    Post1984 |   -0.624134    0.263733   -2.367  0.0180   0.528185
delta    Post1984 |    0.057761   0.0887166    0.651  0.5151   0.528185
alpha       _cons |     -10.233    0.858745  -11.916  0.0000          1
gamma       _cons |    -6.30148     1.29032   -4.884  0.0000          1
delta       _cons |    -9.12453    0.356974  -25.561  0.0000          1
------------------+----------------------------------------------------

The interpretation of this output is, first, that the log likelihood of the full model is -14001.286, and the log likelihood of the reference model (constants only) is -14337.848. The chi-square statistic for the likelihood ratio test, with 18 degrees of freedom, is 673, which is of course highly significant. This means that the variables, taken as a set, make a difference in the fit. Note that the likelihood is probably not comparable to the likelihood from any other kind of model, due to the importance of the interval length.
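
The likelihood ratio statistic can be reproduced from the two reported log likelihoods. The sketch below is illustrative only; the helper name is an assumption, and the tail probability uses the standard closed form for a chi-square distribution with an even number of degrees of freedom. The 18 degrees of freedom correspond to 6 covariates in each of 3 structural parameters.

```python
import math

def chi2_sf_even_df(x, df):
    """Upper tail P(X > x) for chi-square with even df:
    exp(-x/2) * sum over i < df/2 of (x/2)**i / i!."""
    assert df % 2 == 0
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total

ll_reference = -14337.848   # constants only, as reported in the log
ll_full = -14001.286        # full model, as reported in the log

lr = 2.0 * (ll_full - ll_reference)
p_value = chi2_sf_even_df(lr, 18)
print(round(lr, 3), p_value)
```

The statistic agrees with the reported Chi2(18) value up to rounding of the printed log likelihoods, and the tail probability is far below any conventional significance level.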

One might then want to search through the output for the reference model.

These parameters were:

Variable |      alpha       gamma       delta
---------+-----------------------------------
   _cons |   -5.30972    -3.93415     -7.1072

The base hazard is about exp(-7.11), which implies an average survival time of just over 1200 days. The short term hazard is exp(-5.31), which must be added to the base hazard. Together, these suggest an average expected survival of 173 days, if the initial hazard prevailed. However, the initial hazard moves toward the long term hazard with an exponential decay with time constant 1/exp(-3.93), or 51 days. After 51 days, the hazard is about 1/380.
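
The arithmetic behind these figures can be checked directly from the reference-model constants. This is a worked sketch only; the variable names are chosen for illustration.

```python
import math

# Reference-model constants (log scale), as reported above.
log_alpha, log_gamma, log_delta = -5.30972, -3.93415, -7.1072

alpha = math.exp(log_alpha)
gamma = math.exp(log_gamma)
delta = math.exp(log_delta)

base_survival = 1.0 / delta                # mean survival under the base hazard alone
initial_survival = 1.0 / (alpha + delta)   # mean survival if the initial hazard prevailed
time_constant = 1.0 / gamma                # decay time of the excess hazard

print(round(base_survival), round(initial_survival), round(time_constant))
```

Each quantity is the reciprocal of a rate, so all three are expressed in the time units of the data (days in this example).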

Usually, the full model represents a perturbation around the parameters of the reference model. But sometimes the algorithm will find another solution. This can be checked by examining the average values of the predictions of the log parameters. In this case, the means are -5.77, -4.39, and -7.05, which are close to the above. A similar solution was found.

Turning to the effects of the individual variables, one can view the

effect of each parameter as a modification of the overall mean.

For example, if a person's acute illness score were 12.4 instead of 32.4, their log alpha would be -5.77 - 2.62, or -8.39; their log gamma would be -4.39 - 3.86, or -8.25; and their log delta would be -7.05 - 0.20, or -7.25.

So their long term risk would be slightly lower, and their short

term risk would only be about 25% higher. In addition, the decay

period would be much longer.
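
The shifts quoted in this example follow from multiplying each Acute coefficient by the change in the score. A short sketch of that arithmetic, using the coefficients from the summary table and the mean log parameters quoted above (the dictionary names are illustrative):

```python
# Acute coefficients from the summary table and mean predicted log parameters.
acute_coef = {"alpha": 0.131239, "gamma": 0.193007, "delta": 0.00999832}
mean_log = {"alpha": -5.77, "gamma": -4.39, "delta": -7.05}

change = 12.4 - 32.4    # acute score 20 points below the mean

shifted = {name: mean_log[name] + acute_coef[name] * change
           for name in acute_coef}

for name, value in shifted.items():
    print(name, round(value, 2))
```

The same calculation applies to any other covariate: the coefficient times the change in the covariate is added to the log of the structural parameter.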

The effect of Chronic sickness is mostly to push out the time constant,

thereby extending the acute risk, whatever its level. The effect of

Post1984 is negative on the short term risk, but the time constant

is pushed out. This is consistent with the idea that improvements

in care at the time of hospitalization are responsible for mortality

improvements around the time of the PPS intervention.

The specific program statements are:

Type 1: Acquisition of datasets

ascii "dataset_name";

read var1 var2 var3 var4 ... vark;

format "c_scanf format";

or

stata "stata_dataset_name";

If ascii input is specified, then a read list and a format may be included. If a read list is not included, then the option labheads should be specified, and the variable names should be included, white-space delimited, as the first record of the dataset itself. If the format list is not included, the format is considered to be white-space delimited, meaning that the values are separated by tabs or spaces.

Some advice: Don't use formats. For one thing, the Think-C

Macintosh compiler misreads them under certain conditions, giving

plausible looking but incorrect answers.

Variable names may include any keyword or option names (this bug

is fixed).

Type 2: Program options.

All options begin with the options keyword and end in a semicolon.

There are many options:

interval=number. The value of the interval D, applied across

the entire sample. (Interval may also be specified as a

dependent variable.)

weight=identifier; names a variable that will be used as a

weight. Currently, only one interpretation of weights is

supported--frequency weighting.

rescale:

Causes the weights to be rescaled. (unimplemented)

debug: Causes debugging output to be printed.

yydebug: Causes yacc debugging output to be printed.

maxiter=number. Sets the maximum number of iterations allowed.

The default is 100.

trace: Causes maximization details to be printed in the log.

The default is to print only the log likelihoods and the

summary table.

insight: Causes the dataset _checkpt.spm to be written after

each point, to be used by other potential addon programs. For

example, in DESQVIEW a program might look for such a dataset to

indicate recent progress of the maximizer. It is written in

the same format as the output dataset. The default is not to

write this dataset.

onestep: The reference model is not fit. Instead, all parameters are released simultaneously. The default is to fit the reference model first.

autofix=number. The algorithm is given license to fix

(constrain, or "stop") all non-constant parameters that

appear headed to infinite values. This only takes place

when successive changes of the likelihood are less than

(nobs/200) * number. The number is scaled in this way

for numerical accuracy reasons. The default is that this

option is turned off.

rhomax=number. This is the maximum Marquardt rho. The

value of number should be greater than 1. It represents

the aggressiveness with which the program will extrapolate

the parabolic solution, if it appears to be profitable.

The default is 5.

lambda=number. This is the Marquardt mixing parameter. A small positive value says to trust the Gauss-Newton solution. A large positive value says to trust steepest descent. The default is 1; values such as 0.1 or 0.01 are common.

nsf=number. Number of significant figures. This option

affected printing in the mainframe version, but has no effect

in this version due to a different printing design.

ssexp=number. This parameter tells the program how small the absolute and relative stepsize must be for the program to consider the answer as converged. It is interpreted as a power of 10. The default is -5, meaning that the stepsize needs to be less than 10^-5, in both absolute and relative terms.

tolerance=number. The minimum change in log likelihood that will be considered as evidence of convergence. The default is zero, meaning that this criterion is not considered.

altquote: This option changes the string quote character to "#".

It is used to facilitate command file setup by languages where

the literal double quote is problematic.

labheads: This option says that the raw dataset has label

headings in the first row.

missing="string". This option says that missing values in the raw dataset are denoted by the character sequence "string". The default is "M" (a single capital M).

There are also two separate statements that specify program options. logfile "dataset name"; specifies the name of a file which receives program output. output "dataset name"; specifies the file that receives binary output including the covariance matrix and parameter values.
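
Two of the numeric options described above, autofix and ssexp, involve small calculations that can be sketched as follows. Both functions are hypothetical illustrations; in particular, the exact definition of the relative stepsize used by the program is not given in this report and is assumed here.

```python
def autofix_threshold(nobs, number):
    """Likelihood-change threshold for autofix: (nobs/200) * number."""
    return (nobs / 200.0) * number

def step_converged(old, new, ssexp=-5):
    """True if every parameter change is below 10**ssexp, both in absolute
    terms and relative to the previous value (relative form is an assumption)."""
    limit = 10.0 ** ssexp
    for o, n in zip(old, new):
        absolute = abs(n - o)
        relative = absolute / abs(o) if o != 0 else absolute
        if absolute >= limit or relative >= limit:
            return False
    return True

# The example command file used autofix=.005 with 2590 observations.
threshold = autofix_threshold(2590, 0.005)
print(threshold)
print(step_converged([1.0, -9.0], [1.000001, -9.000002]))
```

For the example analysis, autofix would only act once successive likelihood changes fall below roughly 0.065.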

Type 3: Independent and Dependent Variables

varlist: following this keyword is the list of independent

variables to be applied to all three parts of the problem.

Order is unimportant.

depend: following this keyword is a list of all the dependent

variables. This list is ordered. The first variable is the

survival time. The second variable is an indicator of whether

the case is a death at the time specified by dependent variable

1. The third variable is optional. It is the length of the

observation interval in which death would have occurred. If

present a value must be specified. If this variable is not

specified, then the option interval is used for all cases.

If this is not specified, the default is 1 unit.

Type 4: Starting values. Three lists: sv_alpha, sv_gamma, and sv_delta.

Each of these keywords is followed by a series of expressions variable = value with space between the sets. For example:

sv_alpha _cons=4 x=2;

The default starting values are 0, except for the constant

terms, which are estimated from the data.

Type 5: Fixed parameters. Three lists: fx_alpha, fx_gamma, and fx_delta.

Each of these keywords is followed by a list of variables whose

coefficients are to be fixed in the maximization. Note: to

drop a variable from consideration, fix it. Since the starting

value is zero, it effectively drops out of the equation.

fx_alpha zz;

Example of a complete command file:

options autofix=0.001;

stata "small.dta";

depend psurv rd;

varlist x;

This command file tells the program to fit a Bailey-Makeham survival model with one independent variable (x) and a constant term. Each of these variables applies to each of the structural parameters. The input data is a STATA data set. The time to failure or censoring is psurv, and the indicator for failures is rd.

Running the program.

On PCs, type at the command line:

C> bailey commandfile

where commandfile is the name of the ASCII dataset with the commands described immediately above. On Macs, just invoke the program from the finder. It will request the name of the command dataset, which must be in the same directory as the program.

@B. Prediction program@

The predict program enables the user to calculate a vector of

failure probabilities, one for each individual, at a sequence of

times. It also enables the user to calculate parameter values.

The predict program is run by giving the command

C> predict commandfile

where commandfile has a format similar to the command file for

the main Bailey-Makeham model.

The statements for acquisition of raw and stata datasets with the

independent variables for prediction are identical to the main

program:

ascii "dataset_name";

read var1 var2 var3 var4 ... vark;

format "c_scanf format";

or

stata "stata_dataset_name";

2. Option statements:

options outascii altquote debug yydebug labheads missing="value";

Only one of these options is new. outascii

says that the output file is to be ascii instead

of a stata dataset.

parmdsn "dataset_name";

This should be the same as the output statement from

the Bailey-Makeham model. It specifies the binary

dataset which contains the covariance matrix and other

statistics.

output "dataset_name";

This specifies where the output values are to be written.

3. Prediction specifications:

ptime varname1(time1) varname2(time2) ... varnamek(timek);

This specifies the variable names for the failure probability predictions, and the times at which those failure probabilities will be calculated.

pparm varnamea(alpha) varnameg(gamma) varnamed(delta)

varnamaa(var(alpha)) varnamag(cov(alpha,gamma))

varnamgg(var(gamma)) varnamgd(cov(gamma,delta))

varnamdd(var(delta)) varnamad(cov(alpha,delta));

Any or all of these choices may be omitted. The

syntax in the parentheses is fixed, but the variable

names are subject to choice.

Example:

stata "small.dta";

parmdsn "final.mta";

ptime day3(3) day5(5);

pparm a(alpha) g(gamma) d(delta);

output "predict.dta";

will produce predicted failure probabilities for 3 units of time

and 5 units of time as well as values of alpha, gamma, and delta.
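
For orientation, the kind of quantity requested by ptime can be sketched from the survivor function given earlier. The sketch assumes that the predicted failure probability at time t is 1 - R(t); the report does not state the exact definition used by the predict program. The function name and the parameter values are illustrative.

```python
import math

def failure_probability(t, alpha, gamma, delta):
    """1 - R(t), with log R(t) = -(alpha/gamma)*(1 - exp(-gamma*t)) - delta*t."""
    log_r = -(alpha / gamma) * (1.0 - math.exp(-gamma * t)) - delta * t
    return 1.0 - math.exp(log_r)

# Illustrative structural parameters for one individual (assumed values).
alpha, gamma, delta = 0.08, 0.004, 0.007

day3 = failure_probability(3.0, alpha, gamma, delta)
day5 = failure_probability(5.0, alpha, gamma, delta)
print(day3, day5)
```

The probability is zero at time zero and increases with t, so the value at 5 units always exceeds the value at 3 units for the same individual.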

@C. Surface Program@

The surface program is invoked by the command

C> surface parmdsn

Parmdsn is the output dataset produced by the Bailey-Makeham model.

The surface program computes the likelihood at one and two standard

errors out from the likelihood maximum, for each coefficient. The

program prints the decrement in log likelihood and the ratio of

that decrement to the expected value.

The standard error reflects the possible distance of the coefficient

from its true value, holding all of the variables fixed. If two

variables are collinear, the standard error will be large. However,

the likelihood decrement depends only on the 2nd derivative of the

variable in question. Thus, one standard error may be substantial

in likelihood terms.

@D. Covariance Matrix@

A printed version of the covariance matrix may be obtained by typing

C> covar parmdsn

where parmdsn is the output dataset produced by the Bailey-Makeham model.

3. BAILEY-MAKEHAM MODEL STATA INTERFACE

The syntax of the "bailey" command is:

bailey outcome varlist [if exp] [in range] [=exp], [dead(varname)]

interval(# or varname) trace autofix(#) nocons rescale rhomax(#)

lambda(#)

fxalpha(varlist) fxgamma(varlist) fxdelta(varlist)

svalpha(svlist) svgamma(svlist) svdelta(svlist)

See Bailey (1988) for a complete description of this model. The

model is a generalization of the exponential distribution and has

hazard function

$\alpha \exp(-\gamma t) + \delta$

where $\alpha$, $\gamma$, and $\delta$ are all functions of $X$, the vector of covariates described in the varlist. The quantities $\alpha$, $\gamma$, and $\delta$ are termed "structural parameters" since all of the effect of the covariates is represented by them. The functional form of these structural parameters is:

$\alpha = \exp(\sum_i \alpha_i x_i)$

$\gamma = \exp(\sum_i \gamma_i x_i)$

$\delta = \exp(\sum_i \delta_i x_i)$

The data may be actual failures or censored observations. The time from

the start of observation to failure or censoring is given by the

variable outcome. If there are any censored observations, you must

specify the option "dead()" with a variable that is 1 if the case is a

death and 0 if it is censored. If the observation is a death, it is

assumed to fail in the interval starting at time T: (T, T + interval()).

The value of interval() may be a number or a variable name. It

represents the graininess of the observation in the units of the

outcome. The default is 1 unit. For example, if the survival

times are measured in days, the graininess is assumed to be 1 day.

In other words, you know the survival time to one day, but not

closer than that. Although there is little difference between a

fine-grained measurement and a continuous measurement, the

likelihood calculated using the fine-grained approximation is

simpler. If the option is specified as a number, the program

assumes that this number applies to each case. If it is a

variable, then the value of the variable applies to each case.

One important special case is interval(0.00274), which is 1/365

of a year. In other words, this would be saying that the data are

specified in years, but derived from data measured in days. Note

that the value of interval() and the units of measurement affect

the likelihood value, but do not change the nature of the

solution.

Each of the variables in varlist applies to each structural parameter unless otherwise specified. Excluding a variable from a structural parameter is called "fixing." Variables are fixed for a structural parameter by including them on its fixed list. The list of variables specified in fxalpha() is fixed for $\alpha$, the list in fxgamma() is fixed for $\gamma$, and the list in fxdelta() is fixed for $\delta$. If a variable is not explicitly fixed, it is assumed to be free, or available to be maximized.

Starting values are defined by specifying the options svalpha(svlist), svgamma(svlist), or svdelta(svlist). The argument svlist is a series of statements of the form variable=#. If starting values are not specified, default values are used, usually 0.

Other options are as follows:

trace: Includes a trace of the parameter values at each maximization

step.

nocons: Excludes the automatic constant term from the equation.

Useful if there is no constant or if a mutually exclusive set

of dummy variables is employed.

rescale: Rescales the likelihood by the sum of the weights

(specified by the =exp option). This option is not advised,

but is included for compatibility with other vendors' software.

If this option is not used, the weights are treated like

frequency weights. That is, a weight of 2 means there were two

observations like this that were represented by a single line

of the data. A third meaning of weights is that they represent

inverse sampling probabilities. If this interpretation is

used, the standard errors produced by this program will not be

correct. However, it is better to rescale than not.

maxiter: Maximum number of iterations allowed.

autofix(#): If this option is specified, then the program will

automatically fix (stop) a coefficient that is rapidly

changing, but not having an appreciable effect on the

likelihood function. The purpose of this option is to

gracefully deal with collinear and infinite coefficients. This

option will kick in only if the change in the likelihood

function is smaller than the given value. If this option is

not specified, the program will make recommendations but will

not take any action.

rhomax(#): This parameter describes the extent to which we are

willing to extrapolate in the modified Marquardt maximization.

In the Marquardt method, a Newton step (or a ridge-like

approximation thereto) is attempted first. The actual (log)

likelihood gain is computed. This actual gain is combined with

the gradient to calculate an optimal step via a quadratic

approximation. This optimal stepsize is constrained to be at

most rhomax times as large as the original Newton step. The

default is 5; rhomax should always be greater than 1. A larger

value is more aggressive.

lambda(#): This parameter describes the (starting) height

of the ridge that is used if needed in the modified Marquardt

method whenever the 2nd derivative is non-positive definite.

The value is modified during the iterations in light of

experience. The default is 1.0.
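
The quadratic extrapolation described under rhomax() can be sketched as follows. This is one possible reading of that description, written for illustration; it is not the program's code, and the function name, argument names, and the handling of the case with no interior maximum are assumptions.

```python
def extrapolated_step(slope, gain, rhomax=5.0):
    """Multiple rho of the trial (Newton-like) step suggested by a quadratic fit.

    slope: directional derivative of the log likelihood along the trial step
    gain:  actual change in log likelihood after the full trial step (rho = 1)

    The quadratic q(rho) = slope*rho + (gain - slope)*rho**2 matches the slope
    at rho = 0 and the observed gain at rho = 1.
    """
    curvature = gain - slope
    if curvature >= 0.0:
        return rhomax                    # no interior maximum: go as far as allowed
    rho = -slope / (2.0 * curvature)     # maximizer of the fitted quadratic
    return min(rho, rhomax)

print(extrapolated_step(2.0, 1.0))    # exactly quadratic surface: keep the Newton step
print(extrapolated_step(1.0, 0.95))   # nearly linear gain: extrapolate, capped at rhomax
print(extrapolated_step(1.0, -1.0))   # overshoot: shorten the step
```

On an exactly quadratic surface the Newton step gains half of its slope, so the fitted multiple is 1. A larger rhomax permits longer extrapolation when the surface is flatter than the quadratic model implied.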

Example:

In this dataset of 200 hospitalized cancer patients, we measure the

survival

time as a function of the Karnofsky Performance Scale:

. summ survive karnofsk

Variable |     Obs        Mean   Std. Dev.       Min        Max
---------+-----------------------------------------------------
 survive |     200       46.15     61.3805         1        345
karnofsk |     200        49.6    27.79755        10        100

. cox survive karnofsk, dead(dead)

Iteration 0:  Log Likelihood =-865.73173
Iteration 1:  Log Likelihood =-852.31805
Iteration 2:  Log Likelihood =-852.31781

Cox regression                              Number of obs =    200
                                            chi2(1)       =  26.83
                                            Prob > chi2   = 0.0000
Log Likelihood =-852.31781

Variable | Coefficient  Std. Error       t   Prob > |t|       Mean
---------+--------------------------------------------------------
 survive |                                                   46.15
    dead |                                                       1
---------+--------------------------------------------------------
karnofsk |    -.015111    .0029536  -5.116        0.000       49.6
---------+--------------------------------------------------------

. bailey survive karnofsk, dead(dead) autofix(.01)

Iteration 0:  Log Likelihood = -959.1589
Iteration 1:  Log Likelihood = -955.2354
Iteration 2:  Log Likelihood = -954.4966
Iteration 3:  Log Likelihood = -954.4889
Iteration 4:  Log Likelihood = -954.4889

Iteration 0:  Log Likelihood = -954.4889
Iteration 1:  Log Likelihood = -946.3064
Iteration 2:  Log Likelihood = -941.3947
Iteration 3:  Log Likelihood = -937.1823
Iteration 4:  Log Likelihood = -936.9542
Iteration 5:  Log Likelihood = -936.9427
Iteration 6:  Log Likelihood = -936.9426
Iteration 7:  Log Likelihood = -936.9426

(convergence achieved)

Bailey-Makeham Survival Model 0.4

Log Likelihood (C) =  -954.489        Number of observations =      200
Chi2( 3)           =    35.093        Log Likelihood         = -936.943

Structural        |
parameter    Var. |       Coef.   Std. Err.        t    Sig.    Mean
------------------+-------------------------------------------------
alpha    karnofsk |  -0.0287193  0.00898029   -3.198  0.0016    49.6
gamma    karnofsk |   0.0338422   0.0177919    1.902  0.0586    49.6
delta    karnofsk |  0.00799231   0.0107872    0.741  0.4596    49.6
alpha       _cons |    -2.47772     0.25908   -9.564  0.0000       1
gamma       _cons |    -5.52475     1.19964   -4.605  0.0000       1
delta       _cons |    -4.98935    0.893983   -5.581  0.0000       1
------------------+-------------------------------------------------

(Note: the iterations restart because the optimization procedure
first fits a reference model consisting of a constant term for
each of the structural parameters. Only after that model has
converged are the other parameters freed for optimization.)

In the coefficient report, note that the log likelihood of the

reference model and the chi-square of the final model with respect

to the reference model are printed on the left-hand side of the

header.
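The header figures can be checked by hand. The sketch below assumes that the printed Chi2 is the usual likelihood-ratio statistic, twice the gain in log likelihood over the reference model, on 3 degrees of freedom (one karnofsk coefficient added for each structural parameter). The log likelihoods are copied from the iteration log; the helper name chi2_sf_3df is ours and is not part of the bailey command.

```python
import math


def chi2_sf_3df(x):
    """Upper-tail probability of a chi-square variate with 3 degrees of freedom."""
    return (math.erfc(math.sqrt(x / 2.0))
            + math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0))


loglik_reference = -954.4889   # constants-only model, end of the first pass
loglik_final = -936.9426       # karnofsk added to alpha, gamma and delta

chi2 = 2.0 * (loglik_final - loglik_reference)
p_value = chi2_sf_3df(chi2)

print(f"Chi2(3)   = {chi2:.3f}")   # 35.093, matching the header
print(f"Prob>chi2 = {p_value:.2g}")
```

The same arithmetic applied to the cox log above, 2 x (865.73173 - 852.31781), reproduces its chi2(1) of 26.83.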

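We conclude from this analysis that patients with a high Karnofsky
rating are less likely to die quickly (the alpha coefficient is
negative), and that their hazard appears to decay to its long-term
value sooner (the gamma coefficient is positive, although its
significance level of 0.0586 is only marginal). However, patients
with a high Karnofsky rating do not have demonstrably better
long-term survival rates (the delta coefficient is not
significantly different from zero).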

Predictions

There are two types of predictions available for individuals in the
dataset: failure probabilities at specific points in time, and
predictions of the structural parameters and their standard errors.
Unlike most other Stata commands, mpredict takes the names of the
variables that are to receive these quantities as part of its
options. The syntax of mpredict is

mpredict, pparm(parmlist) ptime(faillist)

where parmlist has one or more elements of the form:

varname[alpha] | varname[gamma] | varname[delta] |

varname[var[alpha]] | varname[var[gamma]] | varname[var[delta]] |

varname[cov[alpha,gamma]] | varname[cov[alpha,delta]] |

varname[cov[gamma,delta]]

and faillist has one or more elements of the form varname[time].

Note that what is typed in the square brackets indicates what is

being predicted; what is in front of the square brackets is the

name of a new variable to be created containing that prediction.

Multiple elements are separated by spaces. In the above Karnofsky

example, we might give the command

. mpredict, pparm(alpha1[alpha] gamma1[gamma] delta1[delta]) ptime(p6[180])

to create four new variables, alpha1, gamma1, delta1, and p6.

These are the estimates of the structural parameters for each

observation and the estimated 180-day failure probability.
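To make the meaning of these predictions concrete, the sketch below computes the same kind of quantities by hand for two hypothetical patients. It rests on an assumption that should be checked against the model description: that each structural parameter is the exponential of its linear predictor (a log link), which is suggested by the negative constant terms but is not stated in this section. The failure probability follows from integrating the hazard r(t) = alpha exp(-gamma t) + delta. The names COEF, structural, and failure_probability are illustrative only and do not correspond to anything inside mpredict.

```python
import math

# Coefficients copied from the bailey output: (karnofsk, _cons).
COEF = {
    "alpha": (-0.0287193, -2.47772),
    "gamma": (0.0338422, -5.52475),
    "delta": (0.00799231, -4.98935),
}


def structural(karnofsk):
    """Structural parameters for one patient, ASSUMING a log link."""
    return {name: math.exp(slope * karnofsk + cons)
            for name, (slope, cons) in COEF.items()}


def failure_probability(t, alpha, gamma, delta):
    """P(failure by time t) under r(t) = alpha*exp(-gamma*t) + delta."""
    cumulative_hazard = alpha * (1.0 - math.exp(-gamma * t)) / gamma + delta * t
    return 1.0 - math.exp(-cumulative_hazard)


for k in (20, 80):   # hypothetical Karnofsky scores
    parms = structural(k)
    p6 = failure_probability(180.0, **parms)
    print(k, {n: round(v, 5) for n, v in parms.items()}, round(p6, 3))
```

Under these assumptions the patient with the lower Karnofsky score has the larger initial excess hazard and the larger 180-day failure probability, in line with the interpretation of the coefficient table.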

Surface Analysis

A surface analysis is performed by typing the msurface command,

which takes no arguments. A surface analysis is an examination of

the likelihood surface if each parameter is moved 1 and 2 standard

errors above or below the estimated parameter, holding all other

parameters fixed. The change in log likelihood is printed, along

with a number in parentheses which is the ratio of the actual

change to that predicted by the quadratic approximation, using the

second derivative matrix.

If the numbers in parentheses are larger than 2 or below 0.5, the

quadratic approximation at the maximum is definitely poor. This

points to problems with the estimated standard errors and possible

problems with multiple or infinite solutions.
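The ratio reported in parentheses can be illustrated on a model simple enough to work by hand. The sketch below is not the msurface code and does not use the Bailey-Makeham likelihood: it assumes a one-parameter exponential survival model with log hazard rate b, for which the log likelihood is d*b - exp(b)*T (d deaths, T total follow-up time). The function names and the values of d and T are hypothetical.

```python
import math


def loglik(b, deaths, total_time):
    """Exponential-model log likelihood with log hazard rate b."""
    return deaths * b - math.exp(b) * total_time


def surface_ratio(k, deaths, total_time):
    """Actual and actual/predicted change in log likelihood at k std. errors."""
    b_hat = math.log(deaths / total_time)      # maximum likelihood estimate
    info = float(deaths)                       # minus the 2nd derivative at b_hat
    se = 1.0 / math.sqrt(info)
    actual = (loglik(b_hat + k * se, deaths, total_time)
              - loglik(b_hat, deaths, total_time))
    predicted = -0.5 * info * (k * se) ** 2    # equals -k*k/2 with one parameter
    return actual, actual / predicted


for k in (-2, -1, 1, 2):
    change, ratio = surface_ratio(k, deaths=17, total_time=400.0)
    print(f"{k:+d} s.e.: change = {change:8.4f}  ({ratio:.3f})")
```

In this toy model the ratios stay well inside the 0.5 to 2 range, so the quadratic approximation, and hence the standard error, would be judged adequate. With several parameters the predicted change for parameter i uses the i-th diagonal element of the second-derivative matrix together with that parameter's standard error, so it need not equal -k*k/2.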

References:

Bailey RC (1977): Moments for a modified Makeham Law of mortality.
Naval Medical Research Institute Technical Report, Bethesda MD.

Bailey RC, Homer LD, Summe JP (1977): A proposal for the analysis
of kidney graft survival. Transplantation 24: 309-315.

Bailey RC (1988): The Makeham Model for Analysis of Survival Data.

Bard (1974): Nonlinear Parameter Estimation. Academic Press.

Gould WW (1990): Stata 2.1 Reference Manual and Update. Computing
Resource Center, Santa Monica, CA. 213-393-9893

Krakauer HK, Bailey RC (1991): Epidemiologic Oversight of the Medical
Care Provided to Medicare Beneficiaries. Statistics in Medicine
(forthcoming).

Press WH, Teukolsky SA, Flannery BP, Vetterling WT (1990): Numerical
Recipes in C: The Art of Scientific Computing. Cambridge.

Rogers WH, Draper D, Kahn KL, Keeler EB, Rubenstein LV, Kosecoff J,
Brook RH (1990): Quality of care before and after implementation of
the DRG-based prospective payment system. JAMA 264(15): 1989-1994,
October 17, 1990.

Stewart GW (1973): Introduction to Matrix Computations. Academic Press.

Summe JP (198?): Bailey Makeham Instructions for SAS mainframe. Private
Communication.

.ls 2

.pb

@Appendix A: Bailey-Makeham Estimation Problem with 2 local maxima.@

The Dataset

psurv   rd
 .002    1
 .003    1
 .004    1
  1.5    1
  1.7    1
  2.5    1
    6    1
    1    1
    2    1
    3    1
   20    1
   30    1
   40    1
   50    1
   60    1
   80    1
  125    1

Solution 1:

(convergence achieved)

Bailey-Makeham Survival Model 0.4

Log Likelihood (C) =  -139.766        Number of observations =       17
Chi2( 0)           =     0.000        Log Likelihood         = -139.766
Prob>chi2          =     NANFF

Structural        |
parameter    Var. |       Coef.   Std. Err.        t    Sig.    Mean
------------------+-------------------------------------------------
alpha       _cons |   -0.932204    0.500671   -1.862  0.0823       1
gamma       _cons |   -0.599606    0.482264   -1.243  0.2328       1
delta       _cons |    -3.90168    0.374063  -10.431  0.0000       1
------------------+-------------------------------------------------

Solution 2:

(convergence achieved)

Bailey-Makeham Survival Model 0.4

Number of observations =       17
Log Likelihood         = -136.256

Structural        |
parameter    Var. |       Coef.   Std. Err.        t    Sig.    Mean
------------------+-------------------------------------------------
alpha       _cons |     3.26155     0.84484    3.861  0.0017       1
gamma       _cons |     4.92238    0.649651    7.577  0.0000       1
delta       _cons |    -3.40707    0.267261  -12.748  0.0000       1
------------------+-------------------------------------------------