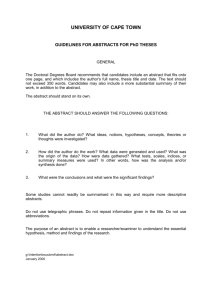

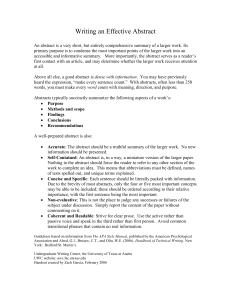

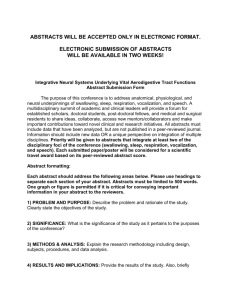

abstracts

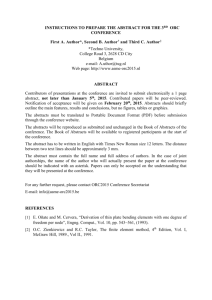

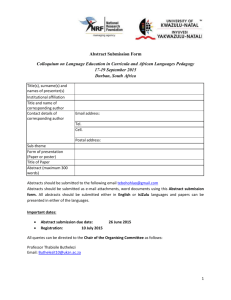
