
Study of Hurricane and Tornado Operating Systems

Abstract

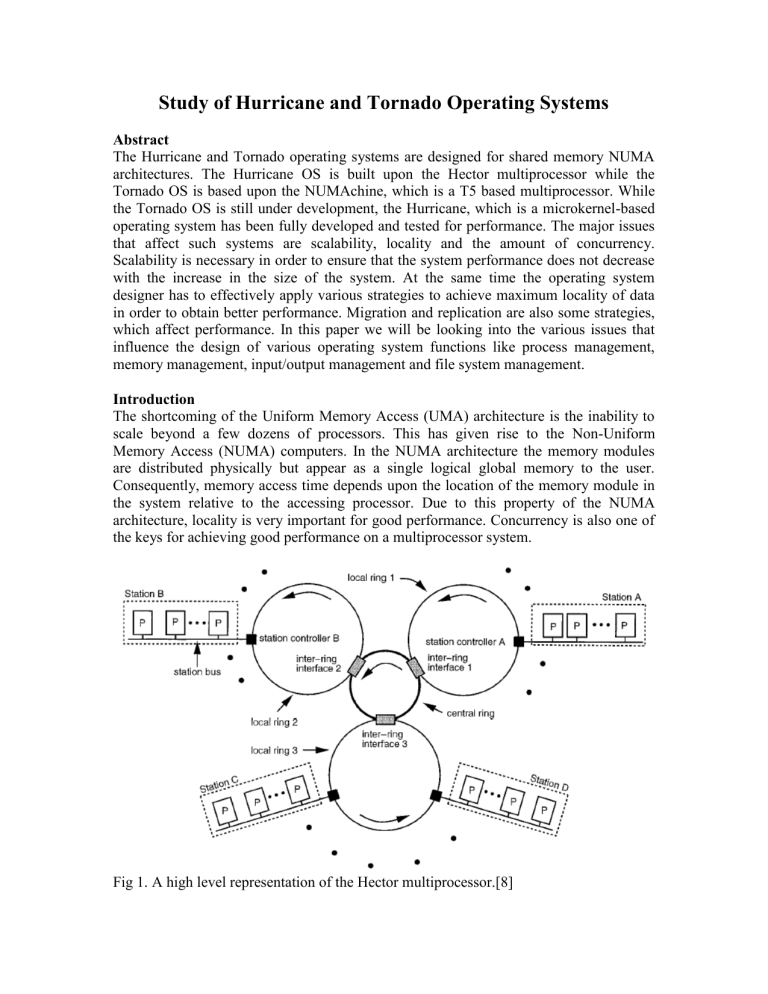

The Hurricane and Tornado operating systems are designed for shared-memory NUMA architectures. Hurricane is built upon the Hector multiprocessor, while Tornado is based upon the NUMAchine, a T5-based multiprocessor. While Tornado is still under development, Hurricane, a microkernel-based operating system, has been fully developed and tested for performance. The major issues that affect such systems are scalability, locality and the amount of concurrency.

Scalability is necessary to ensure that system performance does not degrade as the size of the system increases. At the same time, the operating system designer has to apply various strategies to maximize the locality of data in order to obtain better performance. Migration and replication are two such strategies that affect performance. In this paper we look into the issues that influence the design of operating system functions such as process management, memory management, input/output management and file system management.

Introduction

The shortcoming of the Uniform Memory Access (UMA) architecture is its inability to scale beyond a few dozen processors. This has given rise to Non-Uniform Memory Access (NUMA) computers. In the NUMA architecture the memory modules are physically distributed but appear to the user as a single logical global memory. Consequently, memory access time depends upon the location of the memory module relative to the accessing processor. Because of this property, locality is very important for good performance. Concurrency is also one of the keys to achieving good performance on a multiprocessor system.

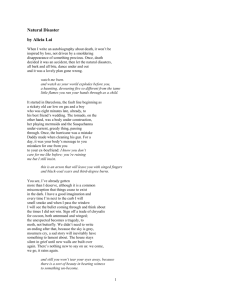

Figure 1: A high-level representation of the Hector multiprocessor. [8]

The Hurricane operating system is a microkernel operating system built for the Hector multiprocessor. The Hector multiprocessor consists of 16 MC88100 processors. Each processor has a 16 KB instruction cache, a 16 KB data cache, and 4 MB of globally addressable memory. Pages are 4 KB. The processors are grouped into stations, each with 4 processor boards. The stations are linked to local rings, which are in turn attached to a central ring.

The Tornado operating system is a microkernel-based, object-oriented operating system designed for the NUMAchine multiprocessor. Each NUMAchine node is made up of 4 processors and up to 512 MB of memory, and the nodes are connected in a hierarchy of rings. The proposed prototype, depicted in Figure 2, is made up of 64 processors (16 nodes) connected in a 2-level hierarchy of rings with a branching factor of 4 at each ring.

Figure 2: The architecture of NUMAchine is made up of a 2-level hierarchy of rings with a branching factor of 4. [6]
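The arithmetic behind the prototype (4 processors per node, 4 nodes per local ring, 4 local rings, 64 processors in all) can be made concrete with a small sketch. The function names and the three access labels are hypothetical and chosen only for illustration; the numbering of processors and nodes is likewise an assumption, not something the NUMAchine documentation is known to prescribe.

```python
# Assumed layout, taken from the prototype description above:
# 4 processors per node, 4 nodes per local ring, 4 local rings.
PROCS_PER_NODE = 4
NODES_PER_RING = 4
LOCAL_RINGS = 4
TOTAL_PROCS = PROCS_PER_NODE * NODES_PER_RING * LOCAL_RINGS  # 64


def locate(cpu):
    """Return (local_ring, node_on_ring) for a processor id in 0..63."""
    if not 0 <= cpu < TOTAL_PROCS:
        raise ValueError("processor id out of range")
    node = cpu // PROCS_PER_NODE          # global node index, 0..15
    return node // NODES_PER_RING, node % NODES_PER_RING


def access_level(cpu, memory_node):
    """Classify an access by how far it travels (hypothetical labels)."""
    ring, node = locate(cpu)
    mem_ring, mem_node = divmod(memory_node, NODES_PER_RING)
    if (ring, node) == (mem_ring, mem_node):
        return "local node"
    if ring == mem_ring:
        return "same local ring"
    return "across central ring"
```

An access that stays on the local node is the cheapest, and each additional ring level adds latency, which is why the operating system works to keep data close to the processors that use it.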

Hurricane and Tornado use a structuring technique called hierarchical clustering in their design. The whole system is divided into clusters, which are the basic unit of structuring. Each cluster performs the functions of a small-scale symmetric multiprocessing operating system. A cluster can consist of one or more processors and memory modules. Kernel data and system structures are shared by all the processors within the cluster.

In Hurricane, the scheduling is still in a very primitive stage. Processes are either allocated around the system in a round-robin fashion or they are explicitly bound on each processor. Migration is very rare. According to requirements, processors can also be explicitly reserved for some programs thereby preventing any interference from other programs.

The kernel does process management in Hurricane and Tornado. Most of the interprocess communication in both operating systems is done using Protected Procedure Calls (PPC). A PPC never crosses processor boundaries; it deals only with communication between address spaces on the same processor. For inter-processor communication, Hurricane and Tornado use an optimized message passing facility.

Memory management in Hurricane supports traditional page-based virtual memory. A process in Hurricane consists of an independent linear virtual address space partitioned into address ranges mapped to files. Each range of addresses is known as a Hurricane region. Each Hurricane region possesses its own attributes that control its allocation and coherence policies. The memory manager handles issues like migration and replication. Various replication and cache coherence protocols are implemented in the memory manager. A similar strategy is used in Tornado, except that most of the policies are implemented using object-oriented concepts.

Hurricane has adopted an object-oriented model for file system design. The Hurricane File System (HFS) has been designed for large-scale multiprocessor systems with disks distributed across the whole system. It has the following features: a) flexibility: HFS can support a wide variety of file structures and file policies; b) customizability: the application can control the file policies and structure invoked by the file system; c) efficiency: these features are obtained with very little I/O and processor overhead. In Tornado, the whole operating system is designed in an object-oriented manner, so there are process objects, memory region objects and file objects to handle client requests.

Input/output in Hurricane is implemented as an application-level library. This provides several advantages. It increases application portability, because the I/O facility can also be ported to other operating systems. It also increases application performance by mapping multiple fine-grained application-level I/O operations onto individual coarse-grained system-level operations. The improvement in performance comes from a reduction in data copying and in the number of system calls.

In this paper we look into the issues that influence the design of the Hurricane and Tornado operating systems. The discussion covers the following topics, in order: hierarchical clustering, which is common to both operating systems, scheduling, process management, memory management, file system management and input/output management. Since the Tornado research is relatively new, not much material is available about this operating system, so most of the discussion focuses on the Hurricane operating system.

Hierarchical Clustering

The main aims of hierarchical clustering are to maximize locality and to provide concurrency that increases linearly with the number of processors. Locality of data is the key to good performance in NUMA systems.

Figure 3 depicts the structure of a cluster in Hurricane. It consists of several layers. The lowermost layer is made up of the processors, above which is the micro-kernel. Above that are the various servers and the kernel itself. All of these services are replicated on each cluster. Above this layer is the user level containing the file system server and scheduler. Application-level programs are provided an integrated, consistent view of the system as a whole. Hierarchical clustering aims at tight coupling within a cluster and loose coupling between clusters. Loose coupling between clusters distributes demand, avoids centralized bottlenecks, and increases concurrency and locality by migrating and replicating data and services. Tight coupling within a cluster is appropriate because interactions occur primarily between components situated within the same cluster.

A system is said to be scalable only if the resources do not get saturated as the system size increases. Hence it is important to handle application requests to independent resources using independent operating system resources. Hence the number of service centers must increase along with the system size.

Figure 3: A single clustered system [8]

Size of Clusters

The size of a cluster in Hurricane can be specified at boot time. Depending upon the cluster size, the system behavior varies from that of a fully distributed system to that of a shared-memory system. The smaller the cluster size, the greater the number of inter-cluster operations performed. These operations are costlier than intra-cluster operations, and they have no locality, which increases their cost further. Memory requirements are also greater because operating system services and data must be replicated, and consistency has to be maintained for the state on each cluster. The overhead on such a system therefore resembles that of a distributed system.

When the cluster size is increased, these overheads are reduced. At the same time, it is quite clear that there is an upper bound on the size of the cluster, because other factors such as contention affect shared-memory systems. As the number of processors within a cluster increases, the contention for shared data structures increases. The trade-off between these two factors is depicted in the graph in Figure 4.

Some of the advantages of hierarchical clustering are: a) system performance can be tuned to a particular architecture; b) locking granularity can be optimized according to the size and concurrency requirements.

Various issues related to hierarchical clustering have to be handled within the different services of the Hurricane operating system in order to provide the application with an integrated view of the system that is oblivious to the clustering present within the system.

For instance, in the kernel, inter-cluster message passing is implemented using remote procedure calls. Another instance is the process migration strategy. In Hurricane a process usually stays on one cluster. If it does migrate, a ghost descriptor recording its new location is maintained at the home cluster. Any operation that requires access to the migrated process looks in the home cluster and uses the ghost descriptor to determine the new location of the process.
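The ghost descriptor lookup can be sketched as follows. This is a minimal illustration under stated assumptions, not Hurricane's kernel code: the `Cluster` class, the `migrate` and `find_process` functions, and the use of dictionaries as process tables are all hypothetical.

```python
class Cluster:
    """One cluster's process table (hypothetical, for illustration)."""

    def __init__(self, cluster_id):
        self.cluster_id = cluster_id
        self.processes = {}   # pid -> state of processes resident here
        self.ghosts = {}      # pid -> id of the cluster the process moved to


def migrate(pid, clusters, home, current, dest):
    """Move pid from `current` to `dest` and keep the ghost at `home` current."""
    state = clusters[current].processes.pop(pid)
    clusters[dest].processes[pid] = state
    if dest == home:
        clusters[home].ghosts.pop(pid, None)   # back home: no ghost needed
    else:
        clusters[home].ghosts[pid] = dest      # record the new location


def find_process(pid, clusters, home):
    """Locate a process by consulting its home cluster first."""
    home_cluster = clusters[home]
    if pid in home_cluster.processes:
        return home
    return home_cluster.ghosts[pid]            # KeyError if pid is unknown
```

Only the home cluster needs to be updated on a migration, so other clusters never hold stale location information; the cost is one extra lookup at the home cluster for every operation on a migrated process.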

Another example is the memory manager. In Hurricane a program can span multiple clusters. The memory manager is responsible for the replication policy that deals with replicating the address space of the program to other clusters. To maintain the consistency of these replicas, all modifications are directed through the home cluster, which is the cluster on which the program was created. A simple directory mechanism is used for maintaining page consistency between clusters. One entry is maintained for each file block resident in memory; it identifies the clusters possessing a copy of the page. The directory spans all the clusters, allowing concurrent search and load balancing. Pages that are write-shared are not replicated, to avoid the overhead of maintaining consistency.
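A directory of this kind might look like the sketch below. It is a simplification under stated assumptions: the real directory is distributed across the clusters, whereas this hypothetical `PageDirectory` class is a single object, and the way a block becomes known as write-shared (`mark_write_shared`) is invented for the example.

```python
class PageDirectory:
    """Tracks which clusters hold a copy of each resident file block."""

    def __init__(self):
        self.copies = {}            # (file_id, block_no) -> set of cluster ids
        self.write_shared = set()   # blocks known to be write-shared

    def mark_write_shared(self, block):
        self.write_shared.add(block)

    def record_copy(self, block, cluster):
        """Register a copy; refuse to replicate a write-shared block."""
        holders = self.copies.setdefault(block, set())
        if block in self.write_shared and holders and cluster not in holders:
            return False            # write-shared pages are not replicated
        holders.add(cluster)
        return True

    def holders(self, block):
        return frozenset(self.copies.get(block, ()))
```

A cluster that is refused a replica would instead access the single existing copy remotely, trading access latency for the absence of consistency traffic.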

Figure 4: Degree of Coupling vs. Cost [8]

A similar strategy is used with the file system. If a file is replicated to multiple disks, hierarchical clustering can be used to determine the nearest replica of the file and direct read requests to that replica. Write requests can be distributed in a similar way to the disks in the cluster near the page frame that holds the dirty page. HFS (the Hurricane File System) maintains long-term and short-term aggregate disk load statistics for all the clusters and super clusters. This information can be used to direct further file creation requests to disks that are lightly loaded.

Scheduling in Hurricane is divided into two levels. Within the cluster the dispatcher handles the scheduling. This reduces the load on the dispatcher. At the second level, the inter-cluster scheduling is managed by higher level scheduling servers. Placement policy for processes also has to be designed in such a way that locality is maximized.

The current implementation has only a single level of clusters. For larger systems, however, more than one level will be needed.

Scheduling

Load balancing is the main function of the scheduler. In Hurricane scheduling is done at two levels. One is within the cluster and the other is between the clusters. Within the cluster the dispatcher handles the scheduling. This way the dispatcher does not have to take care of the load balancing decisions to be taken for the rest of the system and hence reduces its overhead. At the second level, the inter-cluster scheduling is managed by higher level scheduling servers. Placement policy for processes also has to be designed in such a way that locality is maximized. Decisions regarding whether a process is to be migrated or not are taken at this level.
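The division of labour between the two levels can be sketched as follows. The class names, the least-loaded selection rule and the `imbalance_limit` parameter are assumptions made for illustration; the text above does not specify the policies Hurricane actually uses.

```python
class ClusterDispatcher:
    """First level: picks a processor inside one cluster."""

    def __init__(self, cluster_id, n_procs):
        self.cluster_id = cluster_id
        self.load = [0] * n_procs          # runnable processes per processor

    def total_load(self):
        return sum(self.load)

    def dispatch(self):
        # Choose the least-loaded processor in this cluster only.
        cpu = min(range(len(self.load)), key=self.load.__getitem__)
        self.load[cpu] += 1
        return cpu


class SchedulingServer:
    """Second level: chooses the cluster, then delegates to its dispatcher."""

    def __init__(self, dispatchers):
        self.dispatchers = dispatchers     # list indexed by cluster id

    def place(self, preferred_cluster=None, imbalance_limit=2):
        lightest = min(self.dispatchers, key=ClusterDispatcher.total_load)
        target = lightest
        if preferred_cluster is not None:
            preferred = self.dispatchers[preferred_cluster]
            # Favour locality unless the preferred cluster is clearly overloaded.
            if preferred.total_load() - lightest.total_load() <= imbalance_limit:
                target = preferred
        return target.cluster_id, target.dispatch()
```

The dispatcher never looks beyond its own cluster, which keeps its data structures local; only the scheduling server weighs locality against system-wide balance.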

Process Management

One of the important constituents of process management in a shared-memory architecture is the mechanism used for locking and shared memory management. Also of importance is the way in which communication takes place. In Hurricane, a hybrid locking strategy is used in order to achieve low latency as well as good concurrency. In a shared-memory system, four main types of access behavior are found: 1) non-concurrent accesses, 2) concurrent accesses to independent data structures, 3) concurrent read-shared accesses, and 4) concurrent write-shared accesses. The locking mechanism used in Hurricane addresses all of these access behaviors.

Various types of locks are used in order to achieve better performance in all the above access behaviors. These include coarse-grained locks, fine-grained locks and distributed locks. Also, hierarchical clustering is used which helps to increase the lock bandwidth.

A fine-grained lock helps to increase concurrency, whereas a coarse-grained lock reduces the number of atomic operations in the critical path, thereby reducing latency. The policy adopted by Hurricane therefore combines the two: a single coarse-grained lock is held for a short period of time to protect several data structures, while fine-grained locks protect individual data items for longer periods of time. For example, in the hash table of Figure 5, the main (coarse-grained) lock is held only long enough to search the hash table and set the reserve bit in the required element, after which it is released.

Figure 5: A chained Hash table. Dark shading indicates coarse grained lock. Light shaded boxes indicate reserve bits. [7]

Here the reserve bit performs the function of a fine-grained spin lock, with the following differences: a) it requires only one bit of space instead of a whole word and is co-located with other status information; b) multiple reserve bits can be acquired while the main lock is held, without requiring atomic operations; c) it is also used for deadlock handling, which is based upon deadlock avoidance.
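The hash table example can be sketched in a few lines. This is an illustration of the hybrid idea only, using a Python `threading.Lock` as the coarse-grained lock; the class and method names are hypothetical, and clearing the reserve bit under the coarse lock is a simplifying assumption rather than a description of the kernel's actual release path.

```python
import threading


class Element:
    def __init__(self, key, value):
        self.key, self.value = key, value
        self.reserved = False       # the reserve bit, stored with the element


class HybridTable:
    def __init__(self, n_buckets=8):
        self.lock = threading.Lock()                  # coarse-grained lock
        self.buckets = [[] for _ in range(n_buckets)]

    def insert(self, key, value):
        with self.lock:
            self.buckets[hash(key) % len(self.buckets)].append(Element(key, value))

    def reserve(self, key):
        """Search under the coarse lock, set the reserve bit, drop the lock."""
        with self.lock:
            for elem in self.buckets[hash(key) % len(self.buckets)]:
                if elem.key == key:
                    if elem.reserved:
                        return None        # held elsewhere; caller retries
                    elem.reserved = True   # plain store: coarse lock is held
                    return elem
        raise KeyError(key)

    def release(self, elem):
        with self.lock:
            elem.reserved = False
```

A caller would reserve an element, work on it for as long as needed without holding the coarse lock, and then release it, so other elements in the table remain available in the meantime.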

Hierarchical clustering plays an important role in enhancing the performance of this approach. It bounds lock contention and increases lock bandwidth by instantiating per-cluster system data structures, each with its own set of locks, and by replicating read-shared objects, each with its own reserve bit.

Deadlock Avoidance

The initial algorithm used for deadlock avoidance in Hurricane was a pessimistic one: the initiator had to release all locks before initiating the RPC and reacquire them once the remote operation was completed. This strategy added a lot of overhead to the kernel. The new algorithm is optimistic and functions as follows. Before releasing the local locks, a reserve bit is set in any structure that might be needed after the call is completed. This reserve bit acts as a reader/writer lock or an exclusive lock, depending upon the data that it protects. Then the local locks are released and the RPC is initiated. If the RPC fails, it returns a value indicating that a potential deadlock exists. In that case the local reserve bit set earlier is reset and the RPC is retried until it succeeds.

The advantages of this strategy are: a) for non-concurrent requests, latency is reduced by the hybrid approach; b) for concurrent requests to independent data structures, concurrency is maximized by the reserve bits, which provide fine-grained locking; c) for concurrent requests to read-shared data, multiple instances of read-mostly data structures and the efficient replication policy provided by hierarchical clustering increase the access bandwidth; d) concurrent requests to write-shared data are bounded by the number of processors in the cluster, so such requests can be handled efficiently.
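The optimistic algorithm can be sketched as a retry loop. Everything named here is hypothetical: `WOULD_DEADLOCK` stands in for the return value that signals a potential deadlock, `rpc` is any callable representing the remote operation, and the `max_attempts` bound is an added safeguard that the description above does not mention.

```python
import threading

WOULD_DEADLOCK = object()   # hypothetical "potential deadlock" return value


class Structure:
    def __init__(self):
        self.reserved = False   # reserve bit protecting this structure


def call_remote(local_lock, structure, rpc, max_attempts=100):
    """Reserve, drop the local lock, call; back off and retry on conflict."""
    for _ in range(max_attempts):
        with local_lock:
            if structure.reserved:
                continue                   # reserved elsewhere; try again
            structure.reserved = True      # pin the structure across the call
        # The local lock is released here; only the reserve bit is held.
        result = rpc()
        if result is not WOULD_DEADLOCK:
            return result                  # structure stays reserved for caller
        with local_lock:
            structure.reserved = False     # back off so the other side can run
    raise RuntimeError("gave up after repeated potential deadlocks")
```

Because no local lock is held during the remote call, the remote side can always make progress; the reserve bit merely keeps the local structure from changing underneath the caller when the call succeeds.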

Apart from the hybrid approach, Hurricane also uses distributed locks. It can also be noted that hybrid locks cannot be used everywhere. In some places the kernel data structures use coarse-grained locks instead of hybrid or fine-grained locks.

Inter-Process Communication (IPC)

The prime intention of the Hurricane IPC facility is to avoid accessing shared memory and acquiring locks. Both Hurricane and Tornado use protected procedure calls (PPC) for inter-process communication between processes on the same processor. The PPC is based upon the following model. The server is a passive object holding just an address space. It is the client threads that are active and move from address space to address space as they invoke services. This implies that a) client requests are always handled on the local processor; b) the client and the server share the processor in a manner similar to hand-off scheduling, because there is only a single thread of control; c) there are as many threads in the server as there are client requests.

Instead of letting the client enter the server’s address space directly, separate worker processes are used for handling the client calls. Worker processes are created dynamically as needed. This approach has three benefits. First, it simplifies exception handling. Second, it saves context switches from the client to the server. Third, it makes for a cleaner implementation.

Figure 6: Per-process PPC data structures. [2]

In Figure 6, CD stands for call descriptor. It performs two functions: first, it maintains the return information during a call; second, it points to the memory used as the stack by the worker process during the call. This approach has the following benefits: a) no remote memory is accessed; b) there is no sharing of data, hence cache coherence traffic is eliminated; c) no locking is required, because the resources are exclusively owned by the local processor.

Memory Management

The Hurricane memory manager supports traditional page-based virtual memory. Every program is provided with an independent linear virtual address space, partitioned into address ranges called Hurricane regions. The allocation policies supported include first-hit, round-robin and fixed allocation. Options are also provided for page migration and replication, and the degree of replication and migration can be controlled. A per-page counter is currently used to limit the number of migrations.

The concept of shared regions is used for handling concurrency and shared memory. A shared region can be defined as a portion of some shared data object that is accessed as a unit by a task. The shared region approach is helpful in eliminating the false sharing problem. Special calls are provided to the user for using shared regions. The ReadAccess call begins a read-only phase, WriteAccess begins a read/write phase, and ReadDone and WriteDone end the respective phases. These calls allow the system to perform whatever synchronization and coherence operations are needed on the data in order to maintain consistency.
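The bracketing discipline imposed by these calls can be illustrated with a small sketch. The four method names follow the calls described above, but their bodies are placeholders that merely log the call; the `SharedRegion` class and the `reading` and `writing` helpers are hypothetical conveniences, not part of Hurricane's interface.

```python
from contextlib import contextmanager


class SharedRegion:
    """A portion of a shared object that a task accesses as a unit."""

    def __init__(self, data):
        self.data = data
        self.log = []   # illustration only: records the bracketing calls

    # Placeholders for whatever synchronization or coherence work the
    # system would perform at each point.
    def ReadAccess(self):
        self.log.append("ReadAccess")

    def ReadDone(self):
        self.log.append("ReadDone")

    def WriteAccess(self):
        self.log.append("WriteAccess")

    def WriteDone(self):
        self.log.append("WriteDone")


@contextmanager
def reading(region):
    region.ReadAccess()
    try:
        yield region.data
    finally:
        region.ReadDone()       # always end the read-only phase


@contextmanager
def writing(region):
    region.WriteAccess()
    try:
        yield region.data
    finally:
        region.WriteDone()      # always end the read/write phase
```

Wrapping the calls in context managers guarantees that every Access is paired with its Done, which is the property the coherence machinery relies on.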

Tornado provides software cache coherence in addition to hardware cache coherence. Hurricane, in contrast, has no hardware cache coherence facility and has to depend on a software cache coherence policy.

Protocols

The shared region is managed based on two protocols: replication protocols and coherence protocols. The replication protocol is responsible for decisions related to when replicas are to be created. The coherence protocol is more concerned with the consistency of the replicas.

Various policies can be used to achieve cache coherence. The basic idea behind all of them is that the data should travel from the last writer to the next reader/writer. In between there may be many inconsistencies. Coherence can be of three types: a) cache-to-memory coherence ensures that changes made to the cache are copied to memory so that they are available to any subsequent read/write requests; b) memory-to-memory coherence ensures that a replica is updated before another processor accesses the old copy; c) memory-to-cache coherence ensures that the contents of the cache reflect those of memory.

Figure 7: Three cases where cache coherence is needed. [1]

These tasks can be performed at different times and by different entities. For example, the contents of the cache can be copied to memory when WriteDone is called. Alternatively, the transfer can take place just before a ReadAccess or a WriteAccess. Who is given the responsibility for this task is also an interesting issue. For example, it can be left to the next reader/writer to flush the cache of any stale data before reading it. Or the data could be copied to memory at WriteDone and the rest left to the next reader/writer.

Update Protocol

Under this protocol all copies of the data are kept consistent at WriteDone time. This is one of the simplest approaches. A necessary condition is that no dirty data should be left in the cache after WriteDone. So the update protocol uses the following policy. When WriteDone is performed, the contents of the cache are copied back to memory. In addition, the region is invalidated in the cache at both ReadDone and WriteDone. This ensures that there is no stale data in the cache.

This approach is simple: it does not require any co-ordination between the processors, it requires no state information, and it ensures that all data is consistent between Accesses. Figure 8 shows the algorithm used by the update protocol.

Figure 8: The update protocol [1]
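As a rough model of the update protocol, the sketch below keeps one memory replica per module and one cached copy per processor. The class and method names are hypothetical, a region is reduced to a single value, and `None` is used to mean an invalid cache entry; none of this is taken from the Hurricane sources.

```python
class Processor:
    def __init__(self, module):
        self.module = module   # index of the memory module holding its replica
        self.cache = None      # cached region contents, None when invalid


class UpdateProtocolRegion:
    def __init__(self, value, n_modules):
        self.replicas = [value] * n_modules   # one memory copy per module

    def access(self, proc):
        """ReadAccess/WriteAccess: no coordination, just (re)fill the cache."""
        if proc.cache is None:
            proc.cache = self.replicas[proc.module]
        return proc.cache

    def write(self, proc, value):
        proc.cache = value                    # writes land in the local cache

    def read_done(self, proc):
        proc.cache = None                     # invalidate: no stale data kept

    def write_done(self, proc):
        for i in range(len(self.replicas)):   # bring every replica up to date
            self.replicas[i] = proc.cache
        proc.cache = None                     # no dirty or stale data remains
```

Note that `write_done` touches every replica whether or not anyone will read it, which is exactly the cost the protocol pays for needing no state.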

This simplicity comes with some disadvantages. First, consistency is enforced at every Done operation, even if the replica is not going to be accessed before the region is modified again. Second, false sharing can occur if many replicas exist only because some other region on the same page caused the replication. This problem can be attributed to the fact that pages are the unit of replication. In summary, most of the updates are costly and unnecessary.

Validate Protocol

The validate protocol enforces the consistency at the Access time rather than at Done time. When an Access is performed, the shared-region software checks whether the copy about to be accessed is valid or not. This is done by checking whether another processor has written to the region since the last Access was performed by this processor.

Figure 9 shows the validate protocol. Processor A writes to its cache; the other processors are oblivious of it. At WriteDone, processor A updates its main memory but keeps the contents of its cache. Processor B, on performing a ReadAccess, finds that its cache and memory copies are invalid, so it invalidates its cache and obtains the new copy of the data from A.

Figure 9: Validate Protocol [1]

In the validate protocol a status vector is needed to keep track of each replica. Each region descriptor indicates the status of each copy and the processors referencing it. When a WriteDone is performed, the local copy is marked valid and all other copies are marked invalid.
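The role of the status vector can be shown with a minimal sketch. It collapses each processor's cache and memory copy into a single per-processor copy and remembers the last writer so that a fresh copy can be fetched; these simplifications, and all names used, are assumptions made for illustration.

```python
class ValidateRegion:
    def __init__(self, value, n_procs):
        self.copies = [value] * n_procs   # one copy of the region per processor
        self.valid = [True] * n_procs     # the status vector
        self.last_writer = 0

    def access(self, proc):
        """ReadAccess/WriteAccess: validate the local copy before using it."""
        if not self.valid[proc]:
            # Stale copy: fetch the data from the most recent writer.
            self.copies[proc] = self.copies[self.last_writer]
            self.valid[proc] = True
        return self.copies[proc]

    def write(self, proc, value):
        self.copies[proc] = value

    def write_done(self, proc):
        """Mark the local copy valid and every other copy invalid."""
        self.valid = [i == proc for i in range(len(self.valid))]
        self.last_writer = proc
```

Unlike the update protocol, no data moves at WriteDone; a copy is refreshed only when its owner next performs an Access, at the price of maintaining the vector.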

The limitation of this protocol is that it puts a lot of overhead on the system in terms of space and time, which can be attributed to the need to manage the status vector. Since the coherence protocol works in close co-ordination with the replication protocol, it is necessary to know whether the replication protocol to be used is compatible with the coherence protocol. In the case of the validate protocol, very few choices are available for the corresponding replication protocol.

As a result, Hurricane places the following restrictions on the choice of protocols. First, all regions that share a page must use the same replication protocol. Second, the policy chosen for a Hurricane region must be compatible with the replication policy of the shared regions it contains. Finally, it must be possible and efficient to determine the particular copy of a region that will be referenced by any processor just by looking at the processor id.

Replication Protocol

The replication protocol is responsible for deciding when a new copy is to be made and where it is to be placed. Replicas can be created on an Access or Done operation, or on a page fault. The following are two replication policies used by the Hurricane memory manager.

Degree of Replication

Controlling the degree of replication is desirable. This can be done by defining an upper limit on the distance to the nearest replica. If the distance is within that limit then no replication is needed and the existing copy is simply mapped to directly. Otherwise a replica is created. To ensure that no processor has duplicate copies of the replica in its local set, the space of memory modules must be partitioned into sets that are disjoint, yet together cover all the modules.
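The distance-limit rule can be sketched as a single decision function. The `station_distance` metric (0 for the same module, 1 for the same group of four modules, 2 otherwise) and the choice to place a new replica on the requester's own module are assumptions for the example, not details given above.

```python
def station_distance(a, b):
    """Hypothetical metric: 0 same module, 1 same group of four, 2 otherwise."""
    if a == b:
        return 0
    return 1 if a // 4 == b // 4 else 2


def place_mapping(requester, replicas, distance, limit):
    """Return (module, created).

    Map the nearest existing replica if it lies within `limit`;
    otherwise create a new replica on the requester's own module.
    """
    if replicas:
        nearest = min(replicas, key=lambda m: distance(requester, m))
        if distance(requester, nearest) <= limit:
            return nearest, False
    replicas.add(requester)
    return requester, True
```

With a limit of 1, for instance, a request from module 1 maps the copy on module 0, while a request from module 5 triggers a new replica; lowering the limit to 0 would give every module its own copy.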

Replicate on Write/Reference

The decision to replicate can also depend upon the type of access. Commonly, replication is done on read. For workloads in which writes outnumber reads, replicating on write is appealing. However, this protocol places a lot of overhead on the coherence protocol and in the end might turn out to be less efficient. Another disadvantage is that it can be used only with the update protocol.

The Hurricane File System (HFS)

The three main goals of the Hurricane File System are a) flexibility: it should support a large number of file structures and file system policies; b) customizability: it should allow the application to specify the file structure to be used and the policies to be invoked at file access; c) efficiency: the flexibility should come with little CPU and I/O overhead.

The HFS uses an object-oriented building-block approach. Files are built using a combination of simple building blocks called storage objects. Each storage object encapsulates member data and member functions. The block storage object is the most basic object in the HFS; the only functionality it provides is read and write. There are many types of storage objects, each providing a different type of functionality. Different types of policies and file structures can be implemented using the various storage objects available in HFS. The important characteristic of HFS is the ability to mix and match these objects to achieve flexibility.

All the storage objects are divided into two classes viz. transitory storage objects and persistent storage objects. The transitory storage objects are created only when the file is being accessed, while the persistent storage objects are stored on to the disk with the file data. The transitory storage objects are used to implement the file system functionality that is not specific to the file structure and does not have to be fixed at file creation time.

A default set of policies is associated with the transitory storage object at the time of creation but can be changed at run time to conform to the application requirements.

Persistent storage objects are used to define the file structure, to store file data and other information like file system state on the disk. Also policies that are specific to a particular file structure or due to security or performance reasons can be defined using persistent objects. Examples of such objects are the replication storage object and the distribution storage object. Unlike transitory storage objects, the persistent storage objects associated with a file cannot be changed at run time.

HFS is logically divided into three layers: an application-level library, the logical server layer and the physical server layer. The application-level library is responsible for creating and managing transitory storage objects. In addition, it acts as a library for all other I/O servers provided by Hurricane.

The physical server layer directs requests for file data to the disks. Consecutive blocks of a file may be spread over a number of disks. The logical server layer implements all the functionality that does not have to be implemented using the physical server layer but should not be implemented using the application level library. Examples of such functionality are the naming, authentication and locking services.

The physical server layer is responsible for the policies related to disk block placement, load balancing, locality management and cylinder clustering. All physical server layer objects (PSOs) are persistent. They store file data and the data of other persistent storage objects. There are three types of PSO classes: basic PSO classes, composite PSO classes and read/write PSO classes. The basic PSO classes provide a mapping between file blocks and the disk blocks on a particular disk.

A composite PSO services read and write requests by directing them to the appropriate sub-objects. Composite PSOs can be used in a hierarchical fashion, where sub-objects can themselves be composite PSOs.
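The composition of storage objects can be illustrated with a short sketch. The `BasicPSO` class models a disk as a dictionary, and `StripedPSO` is a hypothetical composite that spreads consecutive blocks round-robin over its sub-objects; striping is chosen here only as one plausible routing rule and is not claimed to be how any particular HFS class works.

```python
class BasicPSO:
    """Maps file blocks to blocks on one disk (modelled here as a dict)."""

    def __init__(self, disk_name):
        self.disk_name = disk_name
        self.blocks = {}

    def read(self, block_no):
        return self.blocks.get(block_no)

    def write(self, block_no, data):
        self.blocks[block_no] = data


class StripedPSO:
    """Composite PSO: forwards each request to one of its sub-objects."""

    def __init__(self, sub_objects):
        self.subs = sub_objects

    def _route(self, block_no):
        # Round-robin: pick the sub-object and the block number within it.
        return self.subs[block_no % len(self.subs)], block_no // len(self.subs)

    def read(self, block_no):
        sub, local = self._route(block_no)
        return sub.read(local)

    def write(self, block_no, data):
        sub, local = self._route(block_no)
        sub.write(local, data)
```

Because a composite exposes the same read and write interface as a basic PSO, it can itself serve as a sub-object, for example `StripedPSO([StripedPSO([d0, d1]), d2])`, which gives the hierarchical arrangement described above.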

The read/write PSOs can only be accessed by an application using a read/write interface rather than a mapped-file interface. An example of this type of PSO is the fixed-sized record store PSO.

Figure 10 shows the three layers of the HFS, together with the other system servers with which it must cooperate. Most file I/O occurs through mapped files, so the memory manager is involved in most requests to read or write file data. All explicit inter-layer requests are denoted by solid lines. Page faults that occur when the application layer accesses a mapped-file region are shown as dotted lines.

Figure 10: Hurricane File System Layers [3]

The logical server layer consists of the following types of classes: naming LSO (logical server layer object) classes, access-specific LSO classes, locking LSO classes and open authentication LSO classes.

The application-level library is called the Alloc Stream Facility (ASF). In addition to providing the file system functions, the ASF provides I/O services. The ASOs are transitory and specific to an open instance of a file. The following types of classes are present in the application-level library: service-specific ASO classes and policy ASO classes.

The HFS implementation uses three user-level system servers. The Name Server manages the Hurricane name space. The Open File Server (OFS) maintains the file system state for each open file and is responsible for authenticating application requests to lower-level servers. The Block File Server (BFS) controls the disks by determining which disk an I/O request should target, and directs the request to the corresponding device driver.

Figure 11 depicts the Hurricane File System architecture.

Figure 11: The Hurricane File System [3]

In the figure, the memory manager and Dirty Harry are part of the micro-kernel. Solid lines indicate cross-server requests, while dotted lines indicate page faults.

Alloc Stream Facility

The Alloc Stream Facility is an application-level I/O facility for the Hurricane operating system. Implementing the I/O facility at the application level has several advantages. First, its interface can be made to match the programming language. Second, it increases application portability, because the I/O facility can be ported to run under other operating systems as well. An application-level I/O facility can also improve application performance significantly. These performance improvements are due to a reduction in the amount of data copying and in the number of system calls.
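The effect of batching on the number of system calls can be illustrated with a generic buffered stream. This sketch shows only the batching idea and does not reproduce the ASF interface: `CountingDevice` is a stand-in for the operating system that counts simulated system calls, and `BufferedStream` with its 4096-byte default buffer is hypothetical.

```python
class CountingDevice:
    """Stand-in for the operating system; counts simulated system calls."""

    def __init__(self):
        self.data = bytearray()
        self.syscalls = 0

    def write(self, chunk):
        self.syscalls += 1
        self.data += chunk


class BufferedStream:
    """Collects small writes and hands them to the device in large chunks."""

    def __init__(self, device, buffer_size=4096):
        self.device = device
        self.buffer_size = buffer_size
        self.buffer = bytearray()

    def write(self, chunk):
        self.buffer += chunk
        if len(self.buffer) >= self.buffer_size:
            self.flush()

    def flush(self):
        if self.buffer:
            self.device.write(bytes(self.buffer))   # one coarse-grained call
            self.buffer.clear()
```

Writing a thousand 10-byte records through this stream reaches the device in a handful of calls rather than a thousand, which is the kind of saving an application-level facility can deliver without any change to the operating system.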

The Alloc Stream Facility is divided into three layers. The top layer is the interface layer, which implements the interface modules. The bottom layer is the stream layer, in which stream modules manage all buffering and all interactions with the underlying operating system. The top and bottom layers are separated by a backplane layer, which provides code that would otherwise have been common to both of these layers. Figure 12 shows the structure of the Alloc Stream Facility.

Figure 12: Structure of the Alloc Stream Facility. [5]

Conclusion

The Hurricane and Tornado operating systems are both designed for multiprocessor architectures, but a major difference between them is the size of the target system. While the Hector system on which Hurricane is based is a small system, the NUMAchine is considerably larger, with the prototype having 64 processors. The design decisions for the two systems therefore differ, as latency is not prominent on Hector but is an important factor on the NUMAchine. Also, from the available information about Tornado it can be inferred that the techniques related to hierarchical clustering used in Hurricane are not flexible, because they all have to share the same clustering hierarchy, whereas the object-oriented architecture of Tornado allows these features to be implemented at the object level with a considerable degree of autonomy and flexibility. Decisions can thus be made at a finer level in Tornado than in Hurricane. However, Tornado is still in the development phase, while Hurricane has been tried and tested under various application loads, and the design criteria for the two systems are different. Hence a direct comparison between these systems is inappropriate.

References

[1] Benjamin Gamsa. Region Oriented Main Memory Management in Shared-Memory NUMA Multiprocessors. Ph.D. Thesis, Department of Computer Science, University of Toronto, Toronto, Ontario, Canada, 1992.

[2] B. Gamsa, O. Krieger, and M. Stumm. Optimising IPC performance for shared-memory multiprocessors. In Proceedings of the 1994 International Parallel Processing Symposium, 1994.

[3] Orran Krieger. HFS: A flexible file system for shared-memory multiprocessors. Ph.D. Thesis, Department of Electrical and Computer Engineering, Computer Engineering Group, University of Toronto, Toronto, Ontario, Canada, 1994.

[4] Orran Krieger, Michael Stumm, Ron Unrau, and Jonathan Hanna. A Fair Fast Scalable Reader-Writer Lock. In Proceedings of the 1993 International Conference on Parallel Processing.

[5] Orran Krieger, Michael Stumm, and Ron Unrau. The Alloc Stream Facility: A Redesign of Application-Level Stream I/O.

[6] Eric Parsons, Ben Gamsa, Orran Krieger, and Michael Stumm. (De-)Clustering Objects for Multiprocessor System Software. IWOOOS 1995, pp. 72-81.

[7] Ron Unrau, Orran Krieger, Benjamin Gamsa, and Michael Stumm. Experience with Locking in a NUMA Multiprocessor Operating System Kernel. OSDI 1994.

[8] Ron Unrau, Orran Krieger, Ben Gamsa, and Michael Stumm. Hierarchical clustering: A structure for scalable multiprocessor operating system design. Journal of Supercomputing, 1995.