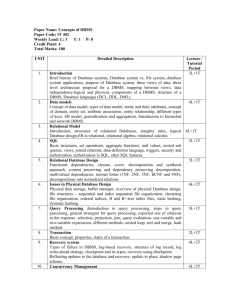

[edit]Some problems in database testing

![[edit]Some problems in database testing](http://s3.studylib.net/store/data/008565783_1-4b0c1437cf9a3dcedbe006865aded92f-768x994.png)

M.TECH

Group I

1.

Database management system

A database management system (DBMS) is a software package whose programs control the creation, maintenance, and use of a database. It allows organizations to conveniently develop databases for various applications. A database is an integrated collection of data records, files, and other objects. A DBMS allows different user application programs to concurrently access the same database. DBMSs may use a variety of database models, such as the relational model or object model, to conveniently describe and support applications. A DBMS typically supports query languages, which are high-level, dedicated database languages that considerably simplify writing database application programs. Database languages also simplify organizing the database as well as retrieving and presenting information from it. A DBMS provides facilities for controlling data access, enforcing data integrity, managing concurrency control, recovering the database after failures and restoring it from backup files, and maintaining database security.

Database servers are dedicated computers that hold the actual databases and run only the DBMS and related software. Database servers are usually multiprocessor computers, with generous memory and RAID disk arrays used for stable storage. Hardware database accelerators, connected to one or more servers via a high-speed channel, are also used in large-volume transaction processing environments. DBMSs are found at the heart of most database applications. DBMSs may be built around a custom multitasking kernel with built-in networking support, but modern DBMSs typically rely on a standard operating system to provide these functions.


2.

Features and capabilities

Alternatively, and especially in connection with the relational model of database management, the relation between attributes drawn from a specified set of domains can be seen as being primary. For instance, the database might indicate that a car that was originally "red" might fade to "pink" in time, provided it was of some particular "make" with an inferior paint job. Such higher arity relationships provide information on all of the underlying domains at the same time, with none of them being privileged above the others.


Simple definition

A database management system is the system in which related data is stored in an efficient or compact manner. "Efficient" means that the data which is stored in the DBMS can be accessed quickly and "compact" means that the data takes up very little space in the computer's memory. The phrase "related data" means that the data stored pertains to a particular topic.

Specialized databases have existed for scientific, imaging, document storage and like uses. Functionality drawn from such applications has begun appearing in mainstream DBMS's as well. However, the main focus, at least when aimed at the commercial data processing market, is still on descriptive attributes on repetitive record structures.

Thus, today's DBMSs roll together frequently needed services and features of attribute management.

By externalizing such functionality to the DBMS, applications effectively share code with each other and are relieved of much internal complexity. Features commonly offered by database management systems include:

Query ability

Querying is the process of requesting attribute information from various perspectives and combinations of factors. Example: "How many 2-door cars in Texas are green?" A database query language and report writer allow users to interactively interrogate the database, analyze its data, and update it according to the user's privileges on the data.
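
The example question above can be sketched as a query. This is only an illustration using Python's built-in sqlite3 module; the cars table, its columns, and the sample rows are assumptions invented for the sketch and do not come from any real data set.

```python
import sqlite3

# Hypothetical schema and sample rows, invented purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE cars (id INTEGER PRIMARY KEY, doors INTEGER, state TEXT, color TEXT)"
)
conn.executemany(
    "INSERT INTO cars (doors, state, color) VALUES (?, ?, ?)",
    [
        (2, "Texas", "green"),
        (4, "Texas", "green"),
        (2, "Texas", "red"),
        (2, "Ohio", "green"),
        (2, "Texas", "green"),
    ],
)


def count_cars(conn, doors, state, color):
    """Count the cars matching all three attribute values."""
    row = conn.execute(
        "SELECT COUNT(*) FROM cars WHERE doors = ? AND state = ? AND color = ?",
        (doors, state, color),
    ).fetchone()
    return row[0]


# "How many 2-door cars in Texas are green?"
green_two_door_in_texas = count_cars(conn, 2, "Texas", "green")
print(green_two_door_in_texas)
```

The application states which rows it wants; how the rows are located is left to the DBMS.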

Backup and replication

Copies of attributes need to be made regularly in case primary disks or other equipment fails. A periodic copy of attributes may also be created for a distant organization that cannot readily access the original. A DBMS usually provides utilities to facilitate the process of extracting and disseminating attribute sets. When data is replicated between database servers, so that the information remains consistent throughout the database system and users cannot tell which server in the DBMS they are using, the system is said to exhibit replication transparency.

3.

A database is an organized collection of data, today typically in digital form. The data are typically organized to model relevant aspects of reality (for example, the availability of rooms in hotels), in a way that supports processes requiring this information (for example, finding a hotel with vacancies).

The term database is correctly applied to the data and their supporting data structures, and not to the database management system (DBMS). The database together with its DBMS is called a database system.

The term database system implies that the data is managed to some level of quality (measured in terms of accuracy, availability, usability, and resilience) and this in turn often implies the use of a general-purpose database management system (DBMS).

[1] A general-purpose DBMS is typically a complex software system that meets many usage requirements, and the databases that it maintains are often large and complex. The utilization of databases is now so widespread that virtually every technology and product relies on databases and DBMSs for its development and commercialization, or even may have such software embedded in it. Also, organizations and companies, from small to large, depend heavily on databases for their operations.

Well-known DBMSs include Oracle, IBM DB2, Microsoft SQL Server, Microsoft Access, PostgreSQL, MySQL, and SQLite. A database is not generally portable across different DBMSs, but different DBMSs can interoperate to some degree by using standards like SQL and ODBC together to support a single application. A DBMS also needs to provide effective run-time execution to properly support (e.g., in terms of performance, availability, and security) as many end users as needed.

A way to classify databases involves the type of their contents, for example: bibliographic, document-text, statistical, or multimedia objects. Another way is by their application area, for example: accounting, music compositions, movies, banking, manufacturing, or insurance.

The term database may be narrowed to specify particular aspects of organized collection of data and may refer to the logical database, to the physical database as data content in computer data storage or to many other database sub-definitions.

Group II

1.

Topics

External, logical and internal view

A DBMS provides the ability for many different users to share data and processing resources. As there can be many different users, there are many different database needs. The question is: How can a single, unified database meet the varying requirements of so many users?

A DBMS minimizes these problems by providing three views of the database data: an external view (or user view), a logical view (or conceptual view), and a physical (or internal) view. The user's view of a database program represents data in a format that is meaningful to a user and to the software programs that process those data.

One strength of a DBMS is that while there is typically only one conceptual (or logical) and physical (or internal) view of the data, there can be an endless number of different external views. This feature allows users to see database information in a more business-related way rather than from a technical, processing viewpoint. Thus the logical view refers to the way the user views the data, and the physical view refers to the way the data are physically stored and processed.
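
As a rough sketch of several external views over one logical schema, the example below defines two SQL views on a single table using Python's built-in sqlite3 module. The employees table, the view names, and the rows are hypothetical, chosen only to illustrate the idea.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# One logical (conceptual) table; names and values are illustrative assumptions.
conn.execute(
    "CREATE TABLE employees ("
    " id INTEGER PRIMARY KEY, name TEXT, department TEXT, salary REAL)"
)
conn.executemany(
    "INSERT INTO employees (name, department, salary) VALUES (?, ?, ?)",
    [("Asha", "sales", 50000.0), ("Ben", "sales", 70000.0), ("Chen", "support", 40000.0)],
)

# External view 1: a staff directory that hides salaries.
conn.execute("CREATE VIEW staff_directory AS SELECT name, department FROM employees")

# External view 2: payroll totals per department, with no individual rows.
conn.execute(
    "CREATE VIEW payroll_by_department AS "
    "SELECT department, SUM(salary) AS total_salary FROM employees GROUP BY department"
)

directory = conn.execute("SELECT * FROM staff_directory ORDER BY name").fetchall()
payroll = conn.execute(
    "SELECT * FROM payroll_by_department ORDER BY department"
).fetchall()
print(directory)
print(payroll)
```

Both views read the same stored rows; only the presentation differs for each group of users.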


Features and capabilities

Alternatively, and especially in connection with the relational model of database management, the relation between attributes drawn from a specified set of domains can be seen as being primary. For instance, the database might indicate that a car that was originally "red" might fade to "pink" in time, provided it was of some particular "make" with an inferior paint job. Such higher arity relationships provide information on all of the underlying domains at the same time, with none of them being privileged above the others.


Simple definition

A database management system is the system in which related data is stored in an efficient or compact manner. "Efficient" means that the data which is stored in the DBMS can be accessed quickly and "compact" means that the data takes up very little space in the computer's memory. The phrase "related data" means that the data stored pertains to a particular topic.

Specialized databases have existed for scientific, imaging, document storage and like uses. Functionality drawn from such applications has begun appearing in mainstream DBMS's as well. However, the main focus, at least when aimed at the commercial data processing market, is still on descriptive attributes on repetitive record structures.

Thus, today's DBMSs roll together frequently needed services and features of attribute management.

By externalizing such functionality to the DBMS, applications effectively share code with each other and are relieved of much internal complexity. Features commonly offered by database management systems include:

Query ability

Querying is the process of requesting attribute information from various perspectives and combinations of factors. Example: "How many 2-door cars in Texas are green?" A database query language and report writer allow users to interactively interrogate the database, analyze its data, and update it according to the user's privileges on the data.

Backup and replication

Copies of attributes need to be made regularly in case primary disks or other equipment fails. A periodic copy of attributes may also be created for a distant organization that cannot readily access the original. A DBMS usually provides utilities to facilitate the process of extracting and disseminating attribute sets. When data is replicated between database servers, so that the information remains consistent throughout the database system and users cannot tell which server in the DBMS they are using, the system is said to exhibit replication transparency.

Rule enforcement

Often one wants to apply rules to attributes so that the attributes are clean and reliable. For example, we may have a rule that says each car can have only one engine associated with it (identified by Engine Number). If somebody tries to associate a second engine with a given car, we want the DBMS to deny such a request and display an error message. However, with changes in the model specification such as, in this example, hybrid gas-electric cars, rules may need to change. Ideally such rules should be able to be added and removed as needed without significant data layout redesign.
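
The one-engine-per-car rule can be sketched as a declarative constraint. The example below uses Python's built-in sqlite3 module; the table layout, column names, and the attach_engine helper are assumptions made for illustration, not a prescribed design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cars (car_id INTEGER PRIMARY KEY)")

# UNIQUE on car_id encodes the rule "each car has at most one engine".
conn.execute(
    "CREATE TABLE engines ("
    " engine_number TEXT PRIMARY KEY,"
    " car_id INTEGER NOT NULL UNIQUE REFERENCES cars(car_id))"
)
conn.execute("INSERT INTO cars (car_id) VALUES (1)")


def attach_engine(conn, engine_number, car_id):
    """Try to associate an engine with a car; report a rule violation as text."""
    try:
        conn.execute(
            "INSERT INTO engines (engine_number, car_id) VALUES (?, ?)",
            (engine_number, car_id),
        )
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


first = attach_engine(conn, "E-100", 1)   # accepted
second = attach_engine(conn, "E-200", 1)  # violates the UNIQUE rule
engine_count = conn.execute("SELECT COUNT(*) FROM engines").fetchone()[0]
print(first, "|", second, "|", engine_count)
```

Because the rule lives in the schema rather than in each application, every program that writes to the table is subject to the same check.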

Security

For security reasons, it is desirable to limit who can see or change specific attributes or groups of attributes. This may be managed directly on an individual basis, or by the assignment of individuals and privileges to groups, or (in the most elaborate models) through the assignment of individuals and groups to roles which are then granted entitlements.

Computation

Common computations requested on attributes are counting, summing, averaging, sorting, grouping, cross-referencing, and so on. Rather than have each computer application implement these from scratch, they can rely on the DBMS to supply such calculations.
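
A minimal sketch of delegating such computations to the DBMS, using Python's built-in sqlite3 module. The sales table and its rows are hypothetical sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")  # illustrative table
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 10.0), ("north", 30.0), ("south", 5.0)],
)

# Counting, summing, averaging, grouping, and sorting in a single request.
summary = conn.execute(
    "SELECT region, COUNT(*), SUM(amount), AVG(amount) "
    "FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(summary)
```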

Change and access logging

This describes who accessed which attributes, what was changed, and when it was changed.

Logging services allow this by keeping a record of access occurrences and changes.
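
One way change logging might look in practice is a trigger that records every update. The sketch below uses Python's built-in sqlite3 module; the table names, the trigger name, and the data are assumptions. It records what changed and when, but not who made the change, since an embedded SQLite database has no user accounts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cars (id INTEGER PRIMARY KEY, color TEXT)")
conn.execute(
    "CREATE TABLE change_log ("
    " car_id INTEGER, old_color TEXT, new_color TEXT,"
    " changed_at TEXT DEFAULT CURRENT_TIMESTAMP)"
)

# The trigger runs inside the DBMS on every color update.
conn.execute(
    """
    CREATE TRIGGER log_color_change
    AFTER UPDATE OF color ON cars
    BEGIN
        INSERT INTO change_log (car_id, old_color, new_color)
        VALUES (OLD.id, OLD.color, NEW.color);
    END
    """
)

conn.execute("INSERT INTO cars (id, color) VALUES (1, 'red')")
conn.execute("UPDATE cars SET color = 'pink' WHERE id = 1")

log_rows = conn.execute(
    "SELECT car_id, old_color, new_color, changed_at FROM change_log"
).fetchall()
print(log_rows)
```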

Automated optimization

For frequently occurring usage patterns or requests, some DBMSs can adjust themselves to improve the speed of those interactions. In some cases the DBMS will merely provide tools to monitor performance, allowing a human expert to make the necessary adjustments after reviewing the statistics collected.


Meta-data repository

Metadata is data describing data. For example, a listing that describes what attributes are allowed to be in data sets is called "meta-information".
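
As an illustration, many DBMSs let applications query their metadata like ordinary data. The sketch below uses SQLite through Python's built-in sqlite3 module, reading the sqlite_master catalog and the table_info pragma; the cars table is a hypothetical example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cars (id INTEGER PRIMARY KEY, color TEXT NOT NULL)")

# sqlite_master is SQLite's catalog: data describing the stored data.
table_names = [
    row[0]
    for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
]

# PRAGMA table_info yields one row per column: (cid, name, type, notnull, default, pk).
columns = [(row[1], row[2]) for row in conn.execute("PRAGMA table_info(cars)")]

print(table_names)
print(columns)
```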


Advanced DBMS

An example of an advanced DBMS is a Distributed Database Management System (DDBMS), which manages a collection of data that logically belong to the same system but are spread out over the sites of a computer network. The two aspects of a distributed database are distribution and logical correlation:

Distribution: The fact that the data are not resident at the same site, so that we can distinguish a distributed database from a single, centralized database.

Logical Correlation: The fact that the data have some properties which tie them together, so that we can distinguish a distributed database from a set of local databases or files which are resident at different sites of a computer network.

2.

Development of DBMS

Databases have been in use since the earliest days of electronic computing. Unlike modern systems, which can be applied to widely different databases and needs, the vast majority of older systems were tightly linked to custom databases in order to gain speed at the expense of flexibility. Originally, DBMSs were found only in large organizations with the computer hardware needed to support large data sets.


1960s Navigational DBMS

As computers grew in speed and capability, a number of general-purpose database systems emerged; by the mid-1960s there were a number of such systems in commercial use. Interest in a standard began to grow, and Charles Bachman, author of one such product, the Integrated Data Store (IDS), founded the "Database Task Group" within CODASYL, the group responsible for the creation and standardization of COBOL. In 1971 they delivered their standard, which generally became known as the "Codasyl approach", and soon a number of commercial products based on this approach were made available.

The Codasyl approach was based on the "manual" navigation of a linked data set which was formed into a large network. When the database was first opened, the program was handed back a link to the first record in the database, which also contained pointers to other pieces of data. To find any particular record the programmer had to step through these pointers one at a time until the required record was returned. Simple queries like "find all the people in India" required the program to walk the entire data set and collect the matching results one by one. There was, essentially, no concept of "find" or "search". This may sound like a serious limitation today, but in an era when most data was stored on magnetic tape such operations were too expensive to contemplate anyway. Solutions were found to many of these problems. Prime Computer created a CODASYL-compliant DBMS based entirely on B-Trees that circumvented the record-by-record problem by providing alternate access paths. They also added a query language that was very straightforward. Further, there is no reason that relational normalization concepts cannot be applied to CODASYL databases; in the final tally, however, CODASYL was very complex and required significant training and effort to produce useful applications.
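
The record-by-record navigation described above can be sketched in a few lines of Python. This is a toy model, not CODASYL's actual interface: the Record class, the helper functions, and the sample people are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    """A toy record holding a pointer to the next record in the chain."""
    name: str
    country: str
    next: Optional["Record"] = None


def build_chain(rows):
    """Link the rows together and return the first record."""
    head = None
    for name, country in reversed(rows):
        head = Record(name, country, head)
    return head


def find_by_country(first, country):
    """Walk every pointer from the first record, collecting matches one by one."""
    matches = []
    current = first
    while current is not None:
        if current.country == country:
            matches.append(current.name)
        current = current.next
    return matches


first_record = build_chain([("Anil", "India"), ("Bob", "USA"), ("Chitra", "India")])
people_in_india = find_by_country(first_record, "India")
print(people_in_india)
```

The program itself has to visit every record; there is no search facility to hand the question to.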

IBM also had its own DBMS in 1968, known as IMS. IMS was a development of software written for the Apollo program on the System/360. IMS was generally similar in concept to Codasyl, but used a strict hierarchy for its model of data navigation instead of Codasyl's network model. Both concepts later became known as navigational databases due to the way data was accessed, and Bachman's 1973 Turing Award presentation was The Programmer as Navigator. IMS is classified as a hierarchical database. IDMS and CINCOM's TOTAL database are classified as network databases.


1970s relational DBMS

Edgar Codd worked at IBM in San Jose, California, in one of their offshoot offices that was primarily involved in the development of hard disk systems. He was unhappy with the navigational model of the Codasyl approach, notably the lack of a "search" facility. In 1970, he wrote a number of papers that outlined a new approach to database construction that eventually culminated in the groundbreaking A Relational Model of Data for Large Shared Data Banks.

[1]

In this paper, he described a new system for storing and working with large databases. Instead of records being stored in some sort of linked list of free-form records as in Codasyl, Codd's idea was to use a "table" of fixed-length records. A linked-list system would be very inefficient when storing "sparse" databases where some of the data for any one record could be left empty. The relational model solved this by splitting the data into a series of normalized tables (or relations), with optional elements being moved out of the main table to where they would take up room only if needed.
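
A small sketch of moving an optional element out of the main table, using Python's built-in sqlite3 module. The users and phones tables and their rows are hypothetical; the point is only that a user without a phone number takes up no space in the phones table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Main table: only the attributes every record has.
conn.execute("CREATE TABLE users (user_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

# Optional element moved out: a row exists only when a phone number exists.
conn.execute(
    "CREATE TABLE phones ("
    " user_id INTEGER REFERENCES users(user_id), phone TEXT NOT NULL)"
)

conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ann"), (2, "Raj"), (3, "Li")])
conn.executemany("INSERT INTO phones VALUES (?, ?)", [(1, "555-0100"), (1, "555-0101")])

# Reassemble the full picture on demand; users without phones show None.
rows = conn.execute(
    "SELECT u.name, p.phone FROM users u "
    "LEFT JOIN phones p ON p.user_id = u.user_id "
    "ORDER BY u.user_id, p.phone"
).fetchall()
phone_row_count = conn.execute("SELECT COUNT(*) FROM phones").fetchone()[0]
print(rows)
```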

Just as the navigational approach would require programs to loop in order to collect records, the relational approach would require loops to collect information about any one record. Codd's solution to the necessary looping was a set-oriented language, a suggestion that would later spawn the ubiquitous SQL.

Using a branch of mathematics known as tuple calculus, he demonstrated that such a system could support all the operations of normal databases (inserting, updating etc.) as well as providing a simple system for finding and returning sets of data in a single operation.

IBM itself did one test implementation of the relational model, PRTV, and a production one, Business System 12, both now discontinued. Honeywell did MRDS for Multics, and now there are two new implementations: Alphora Dataphor and Rel. All other DBMS implementations usually called relational are actually SQL DBMSs.

In 1970, the University of Michigan began development of the MICRO Information Management System [2] based on D.L. Childs' Set-Theoretic Data model. [3][4][5] Micro was used to manage very large data sets by the U.S. Department of Labor, the U.S. Environmental Protection Agency, and researchers from the University of Alberta, the University of Michigan, and Wayne State University. It ran on IBM mainframe computers using the Michigan Terminal System. [6] The system remained in production until 1998.


Late-1970s SQL DBMS

IBM started working on a prototype system loosely based on Codd's concepts as System R in the early 1970s. The first version was ready in 1974/5, and work then started on multi-table systems in which the data could be split so that all of the data for a record (some of which is optional) did not have to be stored in a single large "chunk". Subsequent multi-user versions were tested by customers in 1978 and 1979, by which time a standardized query language, SQL, had been added. Codd's ideas were establishing themselves as both workable and superior to Codasyl, pushing IBM to develop a true production version of System R, known as SQL/DS, and, later, Database 2 (DB2).

Many of the people involved with INGRES became convinced of the future commercial success of such systems, and formed their own companies to commercialize the work but with an SQL interface. Sybase, Informix, NonStop SQL and eventually Ingres itself were all being sold as offshoots to the original INGRES product in the 1980s. Even Microsoft SQL Server is actually a re-built version of Sybase, and thus, INGRES. Only Larry Ellison's Oracle started from a different chain, based on IBM's papers on System R, and beat IBM to market when the first version was released in 1978.


1980s object-oriented databases

The 1980s, along with a rise in object-oriented programming, saw a change in how data in various databases was handled. Programmers and designers began to treat the data in their databases as objects. That is to say that if a person's data were in a database, that person's attributes, such as their address, phone number, and age, were now considered to belong to that person instead of being extraneous data. This allows relations between data to be relations between objects and their attributes rather than between individual fields.

[7]

Another big game changer for databases in the 1980s was the focus on increasing reliability and access


21st century NoSQL databases


In the 21st century a new trend of NoSQL databases started. These non-relational databases are significantly different from the classic relational databases. They often do not require fixed table schemas, avoid join operations by storing denormalized data, and are designed to scale horizontally. Most of them can be classified as either key-value stores or document-oriented databases.

In recent years there has been high demand for massively distributed databases with high partition tolerance, but according to the CAP theorem it is impossible for a distributed system to simultaneously provide consistency, availability, and partition tolerance guarantees. A distributed system can satisfy any two of these guarantees at the same time, but not all three. For that reason many NoSQL databases use what is called eventual consistency to provide both availability and partition tolerance guarantees, accepting a reduced level of data consistency in exchange.

The most popular software in that category includes MongoDB, memcached, Redis, CouchDB, Apache Cassandra, and HBase, all of which are open-source software products.

3.

Data models

A data model is an abstract structure that provides the means to effectively describe the specific data structures needed to model an application. As such, a data model needs sufficient expressive power to capture the needed aspects of applications. These applications are often typical of commercial companies and other organizations (manufacturing, human resources, stock, banking, etc.). For effective utilization and handling, a data model should be relatively simple and intuitive. This may conflict with the high expressive power needed to deal with certain complex applications. Thus any popular general-purpose data model usually strikes a balance between being intuitive and relatively simple and being very complex with high expressive power. The application's semantics are usually not explicitly expressed in the model but are implicit (detailed by documentation external to the model) and hinted at by the names of data item types (e.g., "part-number") and their connections (as expressed by the generic data structure types provided by each specific model).


Early data models

These models were popular in the 1960s and 1970s, but nowadays can be found primarily in old legacy systems. They are characterized primarily by being navigational, with strong connections between their logical and physical representations, and by deficiencies in data independence.

Hierarchical model

In the Hierarchical model different record types (representing real-world entities) are embedded in a predefined hierarchical (tree-like) structure. This hierarchy is used as the physical order of records in storage. Record access is done by navigating through the data structure using pointers combined with sequential accessing.

This model has been supported primarily by the IBM IMS DBMS, one of the earliest DBMSs. Various limitations of the model have been compensated for in later IMS versions by additional logical hierarchies imposed on the base physical hierarchy.

Network model

In this model a hierarchical relationship between two record types (representing real-world entities) is established by the set construct. A set consists of circular linked lists where one record type, the set owner or parent, appears once in each circle, and a second record type, the subordinate or child, may appear multiple times in each circle. In this way a hierarchy may be established between any two record types, e.g., type A is the owner of B. At the same time another set may be defined where B is the owner of A. Thus all the sets comprise a general directed graph (ownership defines a direction), or network construct. Access to records is either sequential (usually in each record type) or by navigation in the circular linked lists.
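
The set construct can be sketched as a ring of linked nodes in which the owner appears once and the members follow it. The Python below is a toy illustration only; the Node class, the helper names, and the labels are assumptions and do not reflect any particular CODASYL product.

```python
class Node:
    """One record in a set's circular linked list."""

    def __init__(self, label):
        self.label = label
        self.next = self  # a ring containing only this node


def make_set(owner_label, member_labels):
    """Build one set occurrence: the owner followed by its members, closed into a ring."""
    owner = Node(owner_label)
    tail = owner
    for label in member_labels:
        node = Node(label)
        tail.next = node   # append after the current tail
        node.next = owner  # the last member always points back to the owner
        tail = node
    return owner


def members(owner):
    """Navigate the ring from the owner until the owner is reached again."""
    found = []
    current = owner.next
    while current is not owner:
        found.append(current.label)
        current = current.next
    return found


dept = make_set("Dept: Sales", ["Emp: Asha", "Emp: Ben"])
sales_staff = members(dept)
print(sales_staff)
```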

This model is more general and powerful than the hierarchical model, and was the most popular before being replaced by the relational model. It was standardized by CODASYL. Popular DBMS products that utilized it were Cincom Systems' Total and Cullinet's IDMS.

Inverted file model

An inverted file or inverted index of a first file, by a field in this file (the inversion field), is a second file in which this field is the key. A record in the second file includes a key and pointers to records in the first file where the inversion field has the value of the key. This is also the logical structure of contemporary database indexes. The related inverted file data model utilizes inverted files of primary database files to directly and efficiently access needed records in these files.
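
A minimal Python sketch of the idea: the "first file" is a list of records, and the inverted file maps each value of the inversion field to the positions of the records that hold it. The sample records and function names are invented for illustration.

```python
from collections import defaultdict

# The "first file": a list of records (hypothetical sample data).
primary_file = [
    {"name": "Anil", "city": "Pune"},
    {"name": "Bob", "city": "Austin"},
    {"name": "Chitra", "city": "Pune"},
]


def build_inverted_file(records, field):
    """Map each value of the inversion field to the positions of matching records."""
    inverted = defaultdict(list)
    for position, record in enumerate(records):
        inverted[record[field]].append(position)
    return dict(inverted)


def lookup(records, inverted, key):
    """Follow the stored positions directly instead of scanning the first file."""
    return [records[position] for position in inverted.get(key, [])]


city_index = build_inverted_file(primary_file, "city")
pune_names = [record["name"] for record in lookup(primary_file, city_index, "Pune")]
print(city_index)
print(pune_names)
```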

Notable for using this data model is the ADABAS DBMS of Software AG, introduced in 1970. ADABAS gained a considerable customer base and is still in use and supported today. In the 1980s it adopted the relational model and SQL in addition to its original tools and languages.


Relational model


The relational model is a simple model that provides flexibility. It organizes data based on two-dimensional arrays known as relations, or tables in database terms. Each relation consists of a heading and a set of zero or more tuples in arbitrary order. The heading is an unordered set of zero or more attributes, the columns of the table. Each tuple maps the attributes to values and corresponds to a row of data in the table. Data can be associated across multiple tables with a key. A key is a single attribute, or a set of attributes, common to both tables. The most common language associated with the relational model is the Structured Query Language (SQL), though SQL departs from the model in some places.
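
To make the definitions concrete, the Python sketch below models a relation as a set of tuples, each tuple being an unordered attribute-to-value mapping, and associates two relations through a shared key. The helper names and sample data are assumptions for illustration; this is not how a real DBMS stores relations.

```python
def relation(rows):
    """Model a relation as a set of tuples; each tuple is a frozen attribute mapping."""
    return {frozenset(row.items()) for row in rows}


def natural_join(left, right):
    """Combine tuples from both relations that agree on all shared attributes."""
    joined = set()
    for left_tuple in left:
        for right_tuple in right:
            l, r = dict(left_tuple), dict(right_tuple)
            shared = l.keys() & r.keys()
            if all(l[attr] == r[attr] for attr in shared):
                joined.add(frozenset({**l, **r}.items()))
    return joined


# The duplicate row collapses, because a relation is a set of tuples.
cars = relation([
    {"car_id": 1, "make": "Ford"},
    {"car_id": 2, "make": "Fiat"},
    {"car_id": 1, "make": "Ford"},
])
owners = relation([{"car_id": 1, "owner": "Ann"}])

# car_id is the key shared by both relations.
joined = natural_join(cars, owners)
print(len(cars), [dict(t) for t in joined])
```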


Object model

In recent years, the object-oriented paradigm has been applied in areas such as engineering and spatial databases, telecommunications and in various scientific domains. The conglomeration of object oriented programming and database technology led to this new kind of database. These databases attempt to bring the database world and the application-programming world closer together, in particular by ensuring that the database uses the same type system as the application program. This aims to avoid the overhead (sometimes referred to as the impedance mismatch ) of converting information between its representation in the database (for example as rows in tables) and its representation in the application program (typically as objects). At the same time, object databases attempt to introduce key ideas of object programming, such as encapsulation and polymorphism, into the world of databases.


Object relational model


XML as a database data model


Other database models

Products offering a more general data model than the relational model are sometimes classified as post-relational. [7] Alternate terms include "hybrid database", "Object-enhanced RDBMS" and others. The data model in such products incorporates relations but is not constrained by E.F. Codd's Information Principle, which requires that all information in the database must be cast explicitly in terms of values in relations and in no other way. [8]

Some of these extensions to the relational model integrate concepts from technologies that pre-date the relational model. For example, they allow representation of a directed graph with trees on the nodes. The German company sones implements this concept in its GraphDB.

Some post-relational products extend relational systems with non-relational features. Others arrived in much the same place by adding relational features to pre-relational systems. Paradoxically, this allows products that are historically pre-relational, such as PICK and MUMPS, to make a plausible claim to be post-relational.

The resource space model (RSM) is a non-relational data model based on multi-dimensional classification.

[9]

SQL for the Relational model


SQL is a major language for the relational model: it is a standard and is supported by all the relational DBMSs.

SQL was one of the first commercial languages for the relational model. Despite not adhering to the relational model as described by Codd, it has become the most widely used database language.

[10][11] Though often described as a declarative language, and to a great extent it is one, SQL also includes procedural elements. SQL became a standard of the American National Standards Institute (ANSI) in 1986, and of the International Organization for Standardization (ISO) in 1987. Since then the standard has been enhanced several times with added features. However, issues of SQL code portability between major RDBMS products still exist due to lack of full compliance with, or different interpretations of, the standard. Among the reasons mentioned are the large size and incomplete specification of the standard, as well as vendor lock-in.


OQL for the Object model


An Object model language standard (by the Object Data Management Group) that has influenced the design of some of the newer query languages like JDOQL and EJB QL, though they cannot be considered as different flavors of OQL.


XQuery for the XML model


XQuery is an XML based database language (also named XQL). SQL/XML combines XQuery and XML with SQL.

[12]

4.

DBMS structure

The hierarchical structure

The hierarchical structure was used in early mainframe DBMSs. Relationships between records form a tree-like model. This structure is simple but inflexible because the relationship is confined to a one-to-many relationship. IBM's IMS system and RDM Mobile are examples of hierarchical database systems with multiple hierarchies over the same data. RDM Mobile is a newly designed embedded database for mobile computer systems. Today the hierarchical structure is used primarily for storing geographic information and file systems.


A hierarchical database model is a data model in which the data is organized into a tree-like structure. The structure allows representing information using parent/child relationships: each parent can have many children, but each child has only one parent (also known as a 1-to-many relationship ). All attributes of a specific record are listed under an entity type.
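
The one-parent rule can be sketched with a simple child-to-parent mapping in Python. The function names and the small organization tree are hypothetical, chosen only to show that a child cannot be attached to a second parent and that every record is reached by a single path from the root.

```python
def add_child(parent_of, parent, child):
    """Attach child under parent; a child may have only one parent."""
    if child in parent_of:
        raise ValueError(f"{child!r} already has parent {parent_of[child]!r}")
    parent_of[child] = parent


def path_from_root(parent_of, node):
    """Follow parent links upward, then report the path from the root down."""
    path = [node]
    while path[-1] in parent_of:
        path.append(parent_of[path[-1]])
    return list(reversed(path))


parent_of = {}
add_child(parent_of, "company", "sales")
add_child(parent_of, "company", "support")  # one parent, many children
add_child(parent_of, "sales", "asha")

path = path_from_root(parent_of, "asha")
print(path)
```

Attaching "asha" under "support" as well would raise ValueError, which is exactly the restriction that the network model later lifted.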

Example of a hierarchical model

In a database an entity type is the equivalent of a table. Each individual record is represented as a row, and each attribute as a column. Entity types are related to each other using 1:N mappings, also known as one-to-many relationships. This model is recognized as the first database model created by IBM in the 1960s.

Currently the most widely used hierarchical databases are IMS, developed by IBM, and the Windows Registry by Microsoft.


The Network Structure

The network structure consists of more complex relationships. Unlike the hierarchical structure, it can relate to many records and accesses them by following one of several paths. In other words, this structure allows for many-to-many relationships.


The network model is a database model conceived as a flexible way of representing objects and their relationships. Its distinguishing feature is that the schema, viewed as a graph in which object types are nodes and relationship types are arcs, is not restricted to being a hierarchy or lattice.

Example of a Network Model.

The network model's original inventor was Charles Bachman, and it was developed into a standard specification published in 1969 by the CODASYL Consortium.


The relational structure

The relational structure is the most commonly used today. It is used by mainframe, midrange and microcomputer systems. It uses two-dimensional rows and columns to store data. The tables of records can be connected by common key values. E.F. Codd designed this structure in 1970 while working for IBM. The model can be hard for end users to query, because a query may require a complex combination of many tables.
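As an illustration of connecting tables by common key values, the following sketch uses Python's built-in `sqlite3` module. The `customer` and `orders` tables and their contents are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(id),
        total REAL
    );
    INSERT INTO customer VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
    """
)

# The common key value customer.id = orders.customer_id connects the tables.
rows = conn.execute(
    "SELECT c.name, SUM(o.total) "
    "FROM customer AS c JOIN orders AS o ON o.customer_id = c.id "
    "GROUP BY c.id, c.name ORDER BY c.id"
).fetchall()
```

Even this two-table query needs a join condition and a grouping clause, which hints at why queries over many tables can become difficult for end users.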


The multidimensional structure

The multidimensional structure is similar to the relational model. The dimensions of the cube-like model have data relating to elements in each cell. This structure gives a spreadsheet-like view of data. It is easy to maintain because records are stored as fundamental attributes, in the same way they are viewed, and the structure is easy to understand. Its high performance has made it the most popular database structure for enabling online analytical processing (OLAP).
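The cube idea can be sketched in plain Python, assuming hypothetical sales facts with three dimensions (product, region, quarter). Each cell is addressed by one value per dimension, and an OLAP-style roll-up aggregates away the dimensions that are not of interest.

```python
from collections import defaultdict

# Hypothetical fact records: (product, region, quarter, units_sold).
facts = [
    ("widget", "north", "Q1", 10),
    ("widget", "south", "Q1", 7),
    ("gadget", "north", "Q1", 3),
    ("widget", "north", "Q2", 12),
]

# Each cell of the cube is addressed by one value per dimension.
cube = defaultdict(int)
for product, region, quarter, units in facts:
    cube[(product, region, quarter)] += units


def roll_up(cells, keep):
    """Aggregate the cube onto the dimensions whose positions are in `keep`."""
    result = defaultdict(int)
    for key, value in cells.items():
        result[tuple(key[i] for i in keep)] += value
    return dict(result)


# Spreadsheet-like view: product by quarter, summed over all regions.
by_product_quarter = roll_up(cube, keep=(0, 2))
```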


The object-oriented structure

The object-oriented structure can handle graphics, pictures, voice and text without difficulty, unlike the other database structures. This structure is popular for multimedia Web-based applications. It was designed to work with object-oriented programming languages such as Java.

The dominant model in use today is the ad hoc one embedded in SQL, despite the objections of purists who believe this model is a corruption of the relational model since it violates several fundamental principles for the sake of practicality and performance. Many DBMSs also support the Open Database Connectivity API, which provides a standard way for programmers to access the DBMS.

Before the database management approach, organizations relied on file processing systems to organize, store, and process data files. End users criticized file processing because the data was stored in many different files, each organized in a different way. Each file was specialized for use with a specific application. File processing was bulky, costly and inflexible when it came to supplying needed data accurately and promptly. Data redundancy was an issue because the independent data files held duplicate data, so when updates were needed each separate file had to be updated. Another issue was the lack of data integration: the data depended on other data to organize and store it. Lastly, there was no consistency or standardization of the data in a file processing system, which made maintenance difficult. For these reasons, the database management approach was developed.

Types of testing and processes

Black box testing in database testing

Black box testing involves testing the interfaces and the integration of the database, which includes:

1. Mapping of data, including metadata.

2. Verification of incoming data from data loads.

3. Verification of outgoing data from query functions.

4. Various techniques such as cause-effect graphing, equivalence partitioning and boundary value analysis are used. With the help of these techniques, the functionality of the database is tested independently.
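Equivalence partitioning and boundary value analysis can be sketched against a database as follows. The example uses Python's built-in `sqlite3` module and assumes a hypothetical `account` table whose `age` column must lie between 18 and 65. The test only observes whether an insert is accepted, without looking at how the rule is implemented.

```python
import sqlite3


def make_db():
    """Create the hypothetical database under test."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE account ("
        " id INTEGER PRIMARY KEY,"
        " age INTEGER NOT NULL CHECK (age BETWEEN 18 AND 65))"
    )
    return conn


def accepts(conn, age):
    """Black-box probe: report only whether the insert is accepted."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO account (age) VALUES (?)", (age,))
        return True
    except sqlite3.IntegrityError:
        return False


# Equivalence partitioning: one representative value per class.
partitions = {"below range": 10, "in range": 40, "above range": 80}
# Boundary value analysis: each edge of the valid range and its neighbour.
boundaries = [17, 18, 65, 66]

conn = make_db()
partition_results = {label: accepts(conn, age) for label, age in partitions.items()}
boundary_results = [accepts(conn, age) for age in boundaries]
```

Three partition values and four boundary values stand in for the whole input domain, which is what keeps black box test cases cheap to write.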

Pros and Cons of black box testing:

Test case generation in black box testing is fairly easy. The test cases are generated completely independently of the program, so they can be stated at an early stage of development. As a result, the programmer knows in advance how to design the database application, which reduces development and debugging effort. The cost of developing black box test cases is lower than that of white box test cases.

The major drawback of black box testing is that we do not know how much of the program is being tested, and it is not applicable to some kinds of faults.

[3]


White box Testing in database testing

White box testing mainly deals with the internal structure of the database. The specification details are hidden from the user. It involves:

1. Testing of database triggers and logical views that are going to support database refactoring.

2. Module testing of database functions, triggers, views, SQL queries, etc.

3. Validation of database tables, data models, database schema, etc.

4. Checking of referential integrity rules.

5. Selection of default table values that maintain database consistency.

6. The techniques used in white box testing are condition coverage, decision coverage, statement coverage and cyclomatic complexity.
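Several of the checks listed above can be sketched with Python's built-in `sqlite3` module. The schema is hypothetical (`department`, `employee`, `audit_log` and the trigger `log_new_employee` are names made up for this example). Unlike a black box test, the checks rely on knowing that the trigger, the column default and the foreign key exist.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite does not enforce foreign keys unless this is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(
    """
    CREATE TABLE department (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE employee (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        status TEXT NOT NULL DEFAULT 'active',
        department_id INTEGER NOT NULL REFERENCES department(id)
    );
    CREATE TABLE audit_log (employee_id INTEGER, action TEXT);
    CREATE TRIGGER log_new_employee AFTER INSERT ON employee
    BEGIN
        INSERT INTO audit_log VALUES (NEW.id, 'insert');
    END;
    INSERT INTO department VALUES (1, 'QA');
    """
)

conn.execute("INSERT INTO employee (id, name, department_id) VALUES (1, 'Ada', 1)")

# Trigger check: the insert above should have fired log_new_employee.
logged = conn.execute("SELECT employee_id, action FROM audit_log").fetchall()

# Default-value check: status was omitted, so the column default applies.
status = conn.execute("SELECT status FROM employee WHERE id = 1").fetchone()[0]

# Referential integrity check: department 99 does not exist.
try:
    conn.execute("INSERT INTO employee (name, department_id) VALUES ('Bob', 99)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
```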

Pros and Cons of white box testing:

The main advantage of white box testing in database testing is that we are aware of the coding details, so we can eliminate internal bugs in the database. The limitation of white box testing is that SQL statements are not traced.


The WHODATE approach for database testing

While generating test cases for database testing, the semantics of the SQL statements need to be reflected in the resulting test cases. For that purpose, a technique called White box database application technique (WHODATE) is used. As shown in the figure (right), SQL statements are independently converted into general-purpose programming language (GPL) statements, followed by traditional white box testing to generate test cases which include SQL semantics.

[4]
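The idea of converting an SQL statement into GPL statements can be illustrated with a hand-written translation. This is only a sketch of the principle, not the output of any WHODATE tool. The query, the `employee` rows and the function `select_names` are hypothetical, and SQL NULL handling is ignored for brevity.

```python
# SQL statement under test (embedded in a hypothetical application):
#   SELECT name FROM employee WHERE salary > 1000 AND dept = 'QA'


def select_names(employee_rows):
    """Hand-written general-purpose-language equivalent of the SELECT above.

    The WHERE clause becomes explicit branches, so coverage criteria such
    as decision and condition coverage can be applied to it.
    """
    result = []
    for row in employee_rows:
        if row["salary"] > 1000:        # first condition of the WHERE clause
            if row["dept"] == "QA":     # second condition of the WHERE clause
                result.append(row["name"])
    return result


# Test rows chosen so that each condition is both true and false at least once.
rows = [
    {"name": "Ada", "salary": 1500, "dept": "QA"},     # both conditions true
    {"name": "Bob", "salary": 1500, "dept": "Sales"},  # second condition false
    {"name": "Cy", "salary": 900, "dept": "QA"},       # first condition false
]
selected = select_names(rows)
```

Once the WHERE clause is visible as branches, a conventional white box technique can derive rows like the three above, and those rows then serve as database test data that reflects the SQL semantics.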


Four Stages of database testing

1. Set fixture.

2. Test the system under test.

3. Outcome verification.

4. Tear down.

A test fixture describes the initial state of the database before testing begins. After setting fixtures, we test the database's behavior under the defined test cases. Depending on the outcome, we either modify the test case or keep it as it is. Tear down either ends the testing or leads on to further test cases.

[5]

For database testing to succeed, each single test should be executed with the following workflow:

1. Clean up the database: If testable data is already present in the database, reset the database to empty.

2. Set up fixture: A tool like PHPUnit then iterates over the fixtures and inserts them into the tables.

3. Run test, verify outcome and then tear down: After resetting the database to empty and loading the fixtures, run the test and verify the output. If the test succeeds, tear down; otherwise test again, repeating the same procedure.
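The workflow above can be sketched with Python's built-in `unittest` and `sqlite3` modules in place of PHPUnit. The `users` table, the `FIXTURE` rows and the helper `run_suite` are hypothetical names chosen for this example.

```python
import sqlite3
import unittest

FIXTURE = [(1, "Ada"), (2, "Grace")]  # hypothetical fixture rows


class UserTableTest(unittest.TestCase):
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, name TEXT)"
        )
        # 1. Clean up the database: reset the table to empty.
        self.conn.execute("DELETE FROM users")
        # 2. Set up fixture: insert the known initial rows.
        self.conn.executemany("INSERT INTO users VALUES (?, ?)", FIXTURE)

    def test_delete_removes_one_row(self):
        # 3. Run the test: exercise the system under test.
        self.conn.execute("DELETE FROM users WHERE id = ?", (1,))
        # Verify outcome: compare the actual state with the expected state.
        remaining = self.conn.execute("SELECT id, name FROM users").fetchall()
        self.assertEqual(remaining, [(2, "Grace")])

    def tearDown(self):
        # Tear down: close the connection so the next test starts clean.
        self.conn.close()


def run_suite():
    """Run the test case above and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(UserTableTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Because `setUp` and `tearDown` run around every test method, each test sees the same initial database state regardless of what earlier tests changed.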


Some problems in database testing

1. The setup for database testing is costly and complex to maintain, because the database state is constantly changing with insert, delete and update operations.

2. Extra overhead is involved in determining the state of database transactions.

3. After cleaning up the database, new test cases need to be designed.

4. An SQL generator is required to transform SQL statements so that SQL semantics are included in the database test cases.