The IUPUI Program Evaluation Website

advertisement

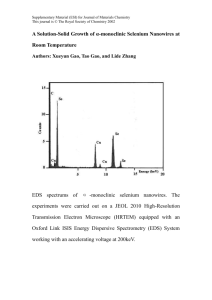

Jacqueline H. Singh, MPP, PhD

The IUPUI program evaluation website is an evolving online point of entry into the expansive landscape of evaluation, intended to help address the evaluation needs of IUPUI faculty and staff. It identifies practical, user-friendly documents and websites that are highly useful for conceptualizing and clarifying the interventions to be evaluated and for designing, planning, implementing, and reporting on evaluations. This website does not promote or endorse any of the links listed below; it offers them only as a resource for the IUPUI community and for anyone with an interest in evaluation.

Insights into “What is” evaluation: Some definitions

What is evaluation?

Evaluation: Evaluation is the systematic acquisition and assessment of information to provide useful feedback about some object. (William Trochim, Research Methods Knowledge Base, 2009)

Evaluation: Evaluation is the systematic process of determining the merit, value, and worth of someone (the evaluee, such as a teacher, student, or employee) or something (the evaluand, such as a product, program, policy, procedure, or process). (Michael Scriven, The Teacher Evaluation Glossary, 1992)

What is program evaluation?

Program evaluation: Program evaluations are individual systematic studies conducted periodically, or on an ad hoc basis, to assess how well a program is working. They are often conducted by experts external to the program, inside or outside the agency, as well as by program managers. A program evaluation typically examines achievement of program objectives in the context of other aspects of program performance or in the context in which it occurs. (Government Accountability Office, 2005)

What is evaluation research?
Evaluation research: Evaluation research is a systematic process for (a) assessing the strengths and weaknesses of programs, policies, organizations, technologies, persons, needs, or activities; (b) identifying ways to improve them; and (c) determining whether desired outcomes are achieved. Evaluation research can be descriptive, formative, process, impact, summative, or outcomes oriented. It differs from more typical program evaluation in that it is more likely to be investigator initiated, theory based, and focused on evaluation as the object of study. (Leonard Bickman, 2005)

Practical Resources for Program Evaluation and Evaluation Research

I. Methods and Design:

http://www.socialresearchmethods.net
William Trochim, The Center for Social Research Methods: Helpful materials, including online courses and tutorials on applied social research methods such as program evaluation and research design.
http://www.socialresearchmethods.net/kb/intreval.php
http://www.socialresearchmethods.net/kb/pecycle.php

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_qual.pdf
Addresses: Observation, interviews, and focus groups.

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_cause.pdf
Addresses: Cause, and how to determine whether a program caused its intended outcome.

Documents that address specific challenges to evaluation:

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_challenge1.pdf
This document focuses on one of the major challenges in evaluation: answering the question of whether the program has an effect.

II. Basic Guides:

http://gsociology.icaap.org/methods
Gene Shackman: Multiple Free Resources for Program Evaluation and Social Research Methods. (A private site compiled by Ya-Lin Liu and Gene Shackman.)

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_WhatIsEvaluation.pdf
Addresses: What is program evaluation?
http://gsociology.icaap.org/methods/Evaluationbeginnersguide_evaluationquestion.pdf
Addresses: What are the evaluation questions? Using logic models to find the questions for the evaluation.

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_methodsoverview.pdf
Addresses: What are the methods of evaluation? Briefly lists the methods.

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_surveys.pdf
Addresses: Surveys.

http://gsociology.icaap.org/methods/Evaluationbeginnersguide_challenge2.pdf
This resource addresses another major challenge in evaluation: whether the evaluation results are useful to the people involved in the program.

This version includes ideas about the entire evaluation process, all in one handout:
http://gsociology.icaap.org/methods/evaluationbeginnersguide.pdf
Addresses: What is evaluation, methods, design, and questions.

http://www.managementhelp.org/evaluatn/fnl_eval.htm
Carter McNamara, Free Management Library: Basic Guide to Program Evaluation. This document provides guidance on planning and implementing an evaluation process for for-profit or nonprofit programs. Many kinds of evaluation can be applied to programs, for example, goals-based, process-based, and outcomes-based evaluations.

III. University Resources

http://www.wkkf.org/
W. K. Kellogg Foundation

http://www.uwex.edu/ces/pdande/evaluation/evaldocs.html
University of Wisconsin: Multiple evaluation resources and support.

http://ww2.wkkf.org/default.aspx?tabid=75&CID=281&NID=61&LanguageID=0
The Evaluation Toolkit: A toolkit designed to provide grantees with guidance as they undertake an evaluation. It is targeted primarily at grantees who will be working with an external evaluator, but anyone seeking to design an effective, useful evaluation can benefit from this material.
http://extension.psu.edu/evaluation/Default.html
Program Evaluation at Penn State University, College of Agricultural Sciences, Cooperative Extension and Outreach: Provides evaluation planning tools, including tip sheets on specific evaluation techniques.
http://www.extension.psu.edu/evaluation

http://www.wmich.edu/evalctr/
The Evaluation Center at Western Michigan University: In addition to a Directory of Evaluators (http://ec.wmich.edu/evaldir/) and glossaries (http://ec.wmich.edu/Glossary/glossaryList.htm), this site advances the theory and practice of program, personnel, and student/constituent evaluation, as applied primarily to education and human services. It contains a number of links, project descriptions, publications, and reports, as well as checklists (http://www.wmich.edu/evalctr/checklists/checklistmenu.htm).

IV. Government / Foundation Resources:

http://www.gao.gov/
Government Accountability Office (GAO): The U.S. Government Accountability Office is known as "the investigative arm of Congress" and "the congressional watchdog." The following GAO link addresses performance measurement and program evaluation:

http://www.gao.gov/new.items/d05739sp.pdf
Performance Measurement and Program Evaluation: Definitions and Relationships (GAO-05-739SP, 2005): This GAO PDF document provides definitions of, and distinctions between, program evaluation and performance measurement.

http://www.nsf.gov/
National Science Foundation:

http://www.nsf.gov/pubs/2002/nsf02057/nsf02057.pdf
The 2002 User-Friendly Handbook for Project Evaluation: This handbook was developed to provide managers working with the National Science Foundation (NSF) with a basic guide for evaluating NSF’s educational programs. It is aimed at people who need to learn more about both what evaluation can do and how to do an evaluation, rather than at those who already have a solid base of experience in the field.
It builds on firmly established principles, blending technical knowledge and common sense to meet the special needs of NSF and its stakeholders. The Handbook discusses quantitative and qualitative evaluation methods, suggesting ways in which they can be used as complements in an evaluation strategy.

V. Relevant Documents in Use Today (Evaluation Methods):

http://www.nsf.gov/pubs/1997/nsf97153/start.htm
User-Friendly Handbook for Mixed Methods Evaluations: This handbook is aimed at users who need practical rather than technically sophisticated advice about evaluation methodology. Its main objective is to make PIs and PDs "evaluation smart" and to provide the knowledge needed for planning and managing useful evaluations.

http://www.gao.gov/
Government Accountability Office (GAO)

http://www.gao.gov/special.pubs/erm.html
Special publications are available at Evaluation Research and Methodology:

http://www.gao.gov/special.pubs/pe1014.pdf
Designing Evaluations (PEMD-10.1.4, May 1991): A resource on program evaluation designs that focuses on (1) the importance of evaluation designs; (2) appropriate program evaluation questions; (3) consideration of evaluation constraints; (4) design assessments; (5) design types; and (6) the use of available data.

http://www.gao.gov/special.pubs/pe1019.pdf
Case Study Evaluations (PEMD-10.1.9, November 1990): Presents information on the use of case study evaluations for GAO audit and evaluation work, focusing on (1) the definition of a case study; (2) conditions under which a case study is an appropriate evaluation method for GAO work; and (3) distinguishing a good case study from a poor one. Also includes information on (1) various case study applications and (2) case study design and strength assessment.
http://www.gao.gov/special.pubs/pe1012.pdf
The Evaluation Synthesis (PEMD-10.1.2, March 1992): This GAO PDF document provides information on evaluation synthesis, a systematic procedure for organizing findings from several disparate evaluation studies, which enables evaluators to gather results from different evaluation reports and to ask questions about the group of reports. The guide presents information on (1) defining evaluation synthesis and the steps in such a synthesis; (2) strengths and limitations of evaluation synthesis; (3) techniques for developing a synthesis; (4) quantitative and nonquantitative approaches for performing the synthesis; (5) how evaluation synthesis can identify important interaction effects that single studies may not identify; and (6) study comparisons and problems.

http://www.gao.gov/special.pubs/pe10110.pdf
Prospective Evaluation Methods: The Prospective Evaluation Synthesis (PEMD-10.1.10, November 1990): This methodology transfer paper on prospective evaluation synthesis focuses on a systematic method for providing the best possible information on, among other things, the likely outcomes of proposed programs, proposed legislation, the adequacy of proposed regulations, or top-priority problems. The paper uses a combination of techniques that best answer prospective questions involving the analysis of alternative proposals and projections of various kinds.

http://www.gao.gov/special.pubs/pe10111.pdf
Quantitative Data Analysis: An Introduction (PEMD-10.1.11, June 1992): This methodology transfer paper on quantitative data analysis deals with information expressed as numbers, as opposed to words, and with statistical analysis in particular.
The paper aims to bridge the communications gap between generalist and specialist, helping the generalist evaluator to be a wiser consumer of technical advice and helping report reviewers to be more sensitive to the potential for methodological errors. It thus offers a brief tour of the statistical terrain: it introduces concepts and issues important to GAO’s work, illustrates the use of a variety of statistical methods, discusses factors that influence the choice of methods, and offers advice on avoiding pitfalls in the analysis of quantitative data. Concepts are presented in a nontechnical way, avoiding computational procedures (except for a few illustrations) and any rigorous discussion of the assumptions that underlie statistical methods.

http://www.gao.gov/special.pubs/pe1015.pdf
Using Structured Interviewing Techniques (PEMD-10.1.5, July 1991): This methodology transfer paper describes techniques for designing a structured interview, pretesting it, training interviewers, and conducting interviews. It is one of a series of papers issued by GAO’s Program Evaluation and Methodology Division (PEMD).

http://archive.gao.gov/t2pbat4/150366.pdf
Developing and Using Questionnaires (PEMD-10.1.7, October 1993): This methodology transfer paper aims to give evaluators enough background to use questionnaires in their evaluations. Specifically, it provides rationales for determining when questionnaires should be used to accomplish assignment objectives, and it describes how to plan, design, and use a questionnaire in conducting a population survey.
http://archive.gao.gov/t2pbat6/146859.pdf
Using Statistical Sampling (PEMD-10.1.6): This methodology transfer paper on statistical sampling gives readers a background in sampling concepts and methods that will enable them to identify jobs that can benefit from statistical sampling, to know when to seek assistance from a statistical sampling specialist, and to work with the specialist to design and execute a sampling plan. It describes sample design, selection and estimation procedures, and the concepts of confidence and sampling precision. Two additional topics, treated more briefly, are special applications of sampling to auditing and evaluation, and some relationships between sampling and data collection problems. Finally, the strengths and limitations of statistical sampling are summarized.

http://archive.gao.gov/f0102/157490.pdf
Content Analysis: A Methodology for Structuring and Analyzing Written Material (PEMD-10.3.1, September 1, 1996): A guide to content analysis that describes how to use this methodology in (1) selecting textual material for analysis; (2) developing an analysis plan; (3) coding textual material; (4) ensuring data reliability; and (5) analyzing the data. It defines content analysis and details how to decide whether it is appropriate and, if so, how to develop an analysis plan. The paper also specifies how to code documents, analyze the data, and avoid pitfalls at each stage.

VI. Resource Library (Samples):

http://oerl.sri.com/
OERL: The Online Evaluation Resource Library is a project funded by the National Science Foundation and developed for professional evaluators and program developers. Although it is targeted at those who work in school environments, it provides extensive evaluation resources and samples (instruments, plans, and reports) that can be modeled, adapted, or used as is. The website is organized by project type and resource type.

VII. Professional Associations:
http://www.eval.org/
The American Evaluation Association (AEA): An international professional association of evaluators devoted to the application and exploration of program evaluation, personnel evaluation, technology, and many other forms of evaluation. Evaluation involves assessing the strengths and weaknesses of programs, policies, personnel, products, and organizations to improve their effectiveness. AEA’s mission is to improve evaluation practices and methods, increase evaluation use, promote evaluation as a profession, and support the contribution of evaluation to the generation of theory and knowledge about effective human action. One AEA goal is to be the pre-eminent source for identifying online resources of interest to evaluators.

Contact Information:

Jacqueline H. Singh, MPP, PhD
Assessment Specialist
317-274-1300
jhsingh@iupui.edu

Testing Center
Indiana University Purdue University Indianapolis
620 Union Drive, UN 0035
Indianapolis, IN 46202

&

Center for Teaching and Learning
Indiana University Purdue University Indianapolis
755 West Michigan Street, UL 1125
Indianapolis, IN 46202