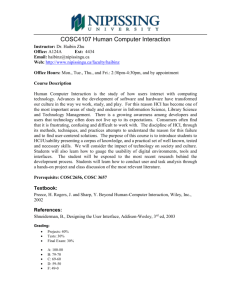

MS-Word version - Igbinedion University Okada
