chapter 2 - Library & Knowledge Center


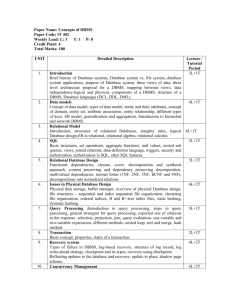

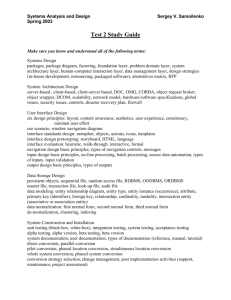

CHAPTER 2
THEORETICAL FOUNDATION

2.1. Theoretical Foundation

2.1.1. System

According to Hoffer et al. (2002, p32), a system is an interrelated set of components with an identifiable boundary, working together for some purpose. Meanwhile, Capron (2000, p450) states that a system is an organized set of related components established to accomplish a certain task.

2.1.2. Information System

According to Whitten (2002, p45), an information system (IS) is an arrangement of people, data, processes, and interfaces that interact to support and improve day-to-day operations in a business as well as support the problem-solving and decision-making needs of management and users.

2.1.3. Office Automation System

Office automation is more than word processing and spreadsheet applications. According to Whitten (2002, p48), office automation (OA) systems support the wide range of business office activities that provide for improved work flow and communications between workers, regardless of whether or not those workers are located in the same office. Office automation systems are concerned with getting all relevant information to those who need it. Office automation functions include word processing, electronic messaging (or electronic mail), work group computing, work group scheduling, facsimile (fax) processing, imaging and electronic documents, and work flow management.

Office automation systems can be designed to support both individuals and work groups. Personal information systems are those designed to meet the needs of a single user. They are designed to boost an individual’s productivity. Meanwhile, work group information systems are those designed to meet the needs of a work group. They are designed to boost the group’s productivity.

2.1.4. System Analysis and Design in Information System

Hoffer et al.
(2002, p4-6) explain that information systems analysis and design is a complex, challenging, and stimulating organizational process that a team of business and systems professionals uses to develop and maintain computer-based information systems. Although advances in information technology continually give us new capabilities, the analysis and design of information systems is driven from an organizational perspective. An organization might consist of a whole enterprise, specific departments, or individual work groups. Organizations can respond to and anticipate problems and opportunities through innovative uses of information technology. Information systems analysis and design is, therefore, an organizational improvement process. Systems are built and rebuilt for organizational benefits. Thus, the analysis and design of information systems is based on your understanding of the organization’s objectives, structure, and processes as well as your knowledge of how to exploit information technology for advantage.

In the early years of computing, analysis and design was considered to be an art. Now that the need for systems and software has become so great, people in industry and academia have developed work methods that make analysis and design a disciplined process. Central to software engineering processes are various methodologies, techniques, and tools that have been developed, tested, and widely used over the years to assist people during system analysis and design.

Methodologies are comprehensive, multiple-step approaches to system development that will guide your work and influence the quality of your final product: the information system. A methodology adopted by an organization will be consistent with its general management cycle. Most methodologies incorporate several development techniques. Techniques are particular processes that an analyst will follow to help ensure that the work is well thought-out, complete, and comprehensible to others.
Techniques provide support for a wide range of tasks, including conducting thorough interviews to determine what the system should do, planning and managing the activities in a system development project, diagramming the system’s logic, and designing the reports the system will generate. Tools are typically computer programs that make it easy to use and benefit from the techniques and to faithfully follow the guidelines of the overall development methodology. These three elements (methodologies, techniques, and tools) work together to form an organizational approach to system analysis and design.

2.1.4.1. Definition of System Analysis

System analysis is the process of studying an existing system to determine how it works and how it meets user needs (Capron, 2000, p451). Whitten (2002, p165) described system analysis as a problem-solving technique that decomposes a system into its component pieces for the purpose of studying how well those component parts work and interact to accomplish their purpose.

System analysis is a term that collectively describes the early phases of systems development. Information system analysis is defined by Whitten (2002, p166) as those development phases in a project that primarily focus on the business problem, independent of any technology that can or will be used to implement a solution to that problem. System analysis is driven by the business concerns of system owners and system users. Hence, it addresses the data, processes, and interface building blocks from the system owners’ and system users’ perspectives. Fundamentally, system analysis is about problem solving.

2.1.4.2. Definition of System Design

System design is the process of developing a plan for an improved system, based on the results of the system analysis (Capron, 2000, p451).
Moreover, according to Whitten (2002, p166), system design (also called system synthesis) is a complementary problem-solving technique (to system analysis) that reassembles a system’s component pieces back into a complete system, hopefully an improved system. This may involve adding, deleting, and changing pieces relative to the original system.

2.1.4.3. System Development Life Cycle

The System Development Life Cycle (SDLC) is a common methodology for systems development in many organizations, featuring several phases that mark the progress of the system analysis and design effort (Hoffer et al., 2002, p8). The SDLC is a phased approach to analysis and design that holds that systems are best developed through the use of a specific cycle of analyst and user activity (Kendall, 2002, p10).

According to Capron (2000, p452-473), the system development life cycle can be described generally in five phases:

Phase 1 – preliminary investigation
This phase, also known as the feasibility study or system survey, is the initial consideration of the problem to determine how, and whether, an analysis and design project should proceed. Aware of the importance of establishing a smooth working relationship, the analyst refers to an organization chart showing the lines of authority within the client organization. After determining the nature of the problem and its scope, the analyst expresses the users’ needs as objectives.

Phase 2 – system analysis
In phase two, system analysis, the analyst gathers and analyzes data from common sources such as written documents, interviews, questionnaires, observation, and sampling. The system analyst may use a variety of charts and diagrams to analyze the data. The analysis phase also includes preparation of system requirements, a detailed list of the things the system must be able to do.
Phase 3 – system design
In phase three, system design, the analyst submits a general preliminary design for the client’s approval before proceeding to the specific detail design. Preliminary design begins with reviewing the system requirements, followed by considering acquisition by purchase.

Phase 4 – system development
System development consists of scheduling, programming, and testing.

Phase 5 – implementation
This last phase includes training to prepare users of the new system.

However, Dennis and Wixom (2003, p3-8) said that the SDLC has a similar set of four fundamental phases: planning, analysis, design, and implementation. Different projects may emphasize different parts of the SDLC or approach the SDLC phases in different ways, but all projects have elements of these four phases. Each phase is itself composed of a series of steps, which rely on techniques that produce deliverables. Those four phases are:

Planning
The planning phase is the fundamental process of understanding why an information system should be built and determining how the project team will go about building it. The first step is to identify the opportunity, during which the system’s business value to the organization is identified.

Analysis
The analysis phase answers the questions of who will use the system, what the system will do, and where and when it will be used. During this phase, the project team investigates any current system(s), identifies improvement opportunities, and develops a concept for the new system.

Design
The design phase decides how the system will operate, in terms of the hardware, software, and network infrastructure; the user interface, forms, and reports that will be used; and the specific programs, databases, and files that will be needed. Although most of the strategic decisions about the system were made in the development of the system concept during the analysis phase, the steps in the design phase determine exactly how the system will operate.
Implementation
The final phase in the SDLC is the implementation phase, during which the system is actually built. This is the phase that usually gets the most attention, because for most systems, it is the longest and most expensive single part of the development process.

2.1.4.4. Conceptual Data Modeling

Data modeling is a technique for organizing and documenting a system’s data. Data modeling is sometimes called database modeling because a data model is eventually implemented as a database. It is sometimes called information modeling.

According to Hoffer et al. (2002, p306), a conceptual data model is a representation of organizational data. The purpose of a conceptual data model is to show as many rules about the meaning and interrelationships among data as possible. It is a detailed model that captures the overall structure of organizational data while being independent of any database management system or other implementation considerations.

2.1.4.4.1. Entity Relationship Diagram

According to Hoffer et al. (2002, p311), an entity-relationship data model (or E-R model) is a detailed, logical representation of the data for an organization or for a business area. The E-R model is expressed in terms of entities in the business environment, the relationships or associations among those entities, and the attributes or properties of both the entities and their relationships. An E-R model is normally expressed as an entity-relationship diagram (or E-R diagram), which is a graphical representation of an E-R model.

System analysts draw entity relationship diagrams (ERDs) to model the system’s raw data before they draw the data flow diagrams that illustrate how the data will be captured, stored, used, and maintained. Whitten (2002, p260) adds that there are several notations for data modeling.
The actual model is frequently called an entity relationship diagram (ERD) because it depicts data in terms of the entities and relationships described by the data.

2.1.4.4.1.1. Entities

An entity is a person, place, object, event, or concept in the user environment about which the organization wishes to maintain data. An entity has its own identity that distinguishes it from every other entity (Hoffer et al., 2002, p313). Moreover, an entity type (sometimes called an entity class) is a collection of entities that share common properties or characteristics.

According to Whitten (2002, p260), an entity is a class of persons, places, objects, events, or concepts about which we need to capture and store data. An entity is something about which the business needs to store data. Formal synonyms include entity type and entity class. Each entity is distinguishable from the other entities.

2.1.4.4.1.2. Attributes

Each entity type has a set of attributes associated with it. An attribute is a property or characteristic of an entity that is of interest to the organization (Hoffer et al., 2002, p314). Whitten (2002, p261) notes that if an entity is something about which we want to store data, then we need to identify what specific pieces of data we want to store about each instance of a given entity. We call these pieces of data attributes. An attribute is a descriptive property or characteristic of an entity. Synonyms include element, property, and field.

2.1.4.4.1.3. Relationships

Relationships are the glue that holds together the various components of an E-R model. A relationship is an association between the instances of one or more entity types that is of interest to the organization. An association usually means that an event has occurred or that there exists some natural linkage between entity instances (Hoffer et al., 2002, p317). Whitten (2002, p264) agrees that, conceptually, entities and attributes do not exist in isolation.
The things they represent interact with and impact one another to support the business mission. Thus, we introduce the concept of a relationship. A relationship is a natural business association that exists between one or more entities. The relationship may represent an event that links the entities or merely a logical affinity that exists between the entities.

2.1.4.5. Process Modeling

A process model is a formal way of representing how a business system operates. It illustrates the processes or activities that are performed and how data moves among them. A process model can be used to document the current system or the new system being developed, whether computerized or not.

2.1.4.5.1. Data Analysis

Data analysis shows how the current system works and helps determine the system requirements. In addition, data analysis material will serve as the basis for documentation of the system. Two common tools are data flow diagrams and decision tables (Capron, 2000, p458).

2.1.4.5.2. Structured Analysis

Whitten (2002, p168) described structured analysis as a model-driven, process-centered technique used to either analyze an existing system, define business requirements for a new system, or both. The models are pictures that illustrate the system’s component pieces: processes and their associated inputs, outputs, and files.

2.1.4.5.3. Data Flow Diagram

Data flow diagramming (Dennis and Wixom, 2003) is a technique that diagrams the business processes and the data that pass among them. A data flow diagram (DFD) is a sort of road map that graphically shows the flow of data through a system. It is a valuable tool for depicting present procedures and data flow (Capron, 2000, p458). Similarly, Whitten (2002, p168, p308) explains that structured analysis draws a series of process models called data flow diagrams (DFDs) that depict the existing and/or proposed processes in a system along with their inputs, outputs, and files.
A data flow diagram (DFD) is a tool that depicts the flow of data through a system and the work or processing performed by that system. An example of a DFD is shown in figure 2.1.1. The elements of data flow diagrams (figure 2.1.2.) are:

Process: A process is an activity or a function that is performed for some specific business reason.

Data flow: A data flow is a single piece of data, or a logical collection of several pieces of information. Data flows are the glue that holds the processes together. Data flows show what inputs go into each process and what outputs each process produces.

Data store: A data store is a collection of data that is stored in some way.

External entity: An external entity is a person, organization, or system that is external to the system but interacts with it.

Figure 2.1.1. Example of Data Flow Diagram (Source: Dennis and Wixom, 2003)

Figure 2.1.2. DFD Elements (Source: Dennis and Wixom, 2003, p171)

2.1.4.6. Object Oriented Analysis and Design

In the object-oriented design phase, you define how the application-oriented analysis model will be realized in the implementation environment. Jacobson et al. (1992) cite three reasons for using object-oriented design. These three reasons are:

The analysis model is not formal enough to be implemented directly in a programming language. To move seamlessly into the source code requires refining the objects by making decisions on what operations an object will provide, what the inter-object communication should look like, what messages are to be passed, and so forth.

The actual system must be adapted to the environment in which the system will actually be implemented. To accomplish that, the analysis model has to be transformed into a design model, considering different factors such as performance requirements, real-time requirements and concurrency, the target hardware and system software, the DBMS and programming language to be adopted, and so forth.
The analysis results can be validated using object-oriented design. At this stage, you can verify whether the results from the analysis are appropriate for building the system and make any necessary changes to the analysis model.

To develop the design model, you must identify and investigate the consequences that the implementation environment will have on the design (Jacobson et al., 1992). Rumbaugh et al. (1991) separate the design activity into two stages: system design and object design.

Whitten (2002, p170) said that object-oriented analysis (OOA) is a model-driven technique that integrates data and process concerns into constructs called objects. OOA models are pictures that illustrate the system’s objects from various perspectives such as structure and behavior. It is a technique used to study existing objects to see if they can be reused or adapted for new uses and to define new or modified objects that will be combined with existing objects into a useful business computing application. There are four general activities when performing object-oriented analysis:

1. Modeling the function of the system
2. Finding and identifying the business objects
3. Organizing the objects and identifying their relationships
4. Modeling the behavior of the objects

2.1.4.7. Unified Modeling Language

The Unified Modeling Language (UML) is “a language for specifying, visualizing, and constructing the artifacts of software systems, as well as for business modeling” (UML Document Set, 1997). It is a culmination of the efforts of three leading experts, Grady Booch, Ivar Jacobson, and James Rumbaugh, who have defined an object-oriented modeling language that is expected to become an industry standard in the near future. The UML builds upon and unifies the semantics and notations of the Booch (Booch, 1994), OOSE (Jacobson et al., 1992), and OMT (Rumbaugh et al., 1991) methods, as well as those of other leading methods. Hoffer et al.
(2002, p660) said that the UML notation is useful for graphically depicting object-oriented analysis and design models. It not only allows you to specify the requirements of a system and capture the design decisions, but it also promotes communication among key persons involved in the development effort.

OOA has become so popular that a modeling standard has evolved around it. The Unified Modeling Language (or UML) provides a graphical syntax for an entire series of object models. The UML defines several different types of diagrams that collectively model an information system or application in terms of objects.

2.1.4.7.1. Use Case Modeling

Jacobson et al. (1992) pioneered the application of use-case modeling for analyzing the functional requirements of a system. Because it focuses on what an existing system does or a new system should do, as opposed to how the system delivers or should deliver those functions, a use-case model is developed in the analysis phase of the object-oriented system development life cycle. Use case modeling is done in the early stages of system development to help developers gain a clear understanding of the functional requirements of the system, without worrying about how those requirements will be implemented. The process is inherently iterative.

Use case modeling is the process of modeling a system’s functions in terms of business events, who initiated the events, and how the system responds to the events. Use case modeling identifies and describes the system functions from the perspective of external users using a tool called use cases. In addition, the use case narrative is used to textually describe the sequence of steps of each interaction (Whitten, 2002, p655).

A use-case model consists of actors and use cases. A use case represents a sequence of related actions initiated by an actor to accomplish a specific goal; it is a specific way of using the system (Jacobson et al., 1992).
A use case is a behaviorally related sequence of steps (a scenario), both automated and manual, for the purpose of completing a single business task. Use cases describe the system functions from the perspective of external users and in the manner and terminology that they understand. Accomplishing this accurately and thoroughly demands a high level of user involvement.

Use cases are the results of decomposing the scope of system functionality into many smaller statements of system functionality. The creation of use cases has proven to be an excellent technique for better understanding and documenting system requirements. A use case itself is not considered a functional requirement, but the story (scenario) the use case tells captures the essence of one or more requirements.

Use cases are initiated or triggered by external users or systems called actors. An actor is an external entity that interacts with the system (similar to an external entity in data flow diagramming). It is someone or something that exchanges information with the system. Note that there is a difference between an actor and a user. A user is anyone who uses the system. An actor, on the other hand, represents a role that a user can play. The actor’s name should indicate that role. An actor is a type or class of user; a user is a specific instance of an actor class playing the actor’s role.

Use case diagrams graphically depict the interactions between the system and external systems and users. In other words, they graphically describe who will use the system and in what ways the user expects to interact with the system.

2.1.4.8. Object Modeling: Class Diagram

A class diagram shows the static structure of an object-oriented model: the object classes, their internal structure, and the relationships in which they participate (Hoffer et al., 2002, p666). Furthermore, Whitten (2002, p655) states that class diagrams depict the system’s object structure.
They show the object classes that the system is composed of as well as the relationships between those object classes.

The class diagram (Dennis and Wixom, 2003, p501) is a static model that supports the static view of the evolving system. It shows the classes and the relationships among classes that remain constant in the system over time. The class diagram is very similar to the entity relationship diagram (ERD); however, the class diagram depicts classes, which include attributes, behaviors, and states, while entities in the ERD only include attributes. The scope of a class diagram, like the ERD, is system-wide.

2.1.4.8.1. Representing Objects in Class Diagram

An object (Hoffer et al., 2002) is an entity that has a well-defined role in the application domain, and has state, behavior, and identity. An object has a state and exhibits behavior through operations that can examine or affect its state. The state of an object encompasses its properties (attributes and relationships) and the values those properties have, and its behavior represents how an object acts and reacts (Booch, 1994). An object’s state is determined by its attribute values and links to other objects. An object’s behavior depends on its state and the operation being performed. An operation is simply an action that one object performs upon another in order to get a response.

Hoffer et al. (2002, p666) said that the term “object” is sometimes used to refer to a group of objects, rather than an individual object. The ambiguity can usually be resolved from the context. In the strict sense of the term, however, “object” refers to an individual object, not to a class of objects. That is the interpretation we follow in this text. If you want to eliminate any possible confusion altogether, you can use “object instance” to refer to an individual object, and object class (or simply class) to refer to a set of objects that share a common structure and a common behavior.
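
The distinction between a class and its object instances, and the notions of state, behavior, and identity, can be illustrated with a small sketch. The `Account` class, its attributes, and the sample values below are hypothetical and chosen only for illustration; they do not come from the cited authors.

```python
class Account:
    """Hypothetical object class: a common structure and a common behavior."""

    def __init__(self, owner: str, balance: float = 0.0) -> None:
        # State: the attribute values held by one object instance.
        self.owner = owner
        self.balance = balance

    def deposit(self, amount: float) -> None:
        # Operation that affects the state.
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount

    def withdraw(self, amount: float) -> bool:
        # Behavior depends on state: the same operation is accepted or
        # refused depending on the current balance.
        if amount > self.balance:
            return False
        self.balance -= amount
        return True


# Two object instances of the same class, created with equal state.
first = Account("Ana", 100.0)
second = Account("Ana", 100.0)

# Equal state does not imply the same identity.
same_state = (first.owner, first.balance) == (second.owner, second.balance)
same_identity = first is second

# The same operation gives different results as the state changes.
refused = first.withdraw(150.0)
first.deposit(100.0)
accepted = first.withdraw(150.0)
```

Here `first` and `second` start with identical state yet remain distinct objects, and changing the state of `first` leaves `second` untouched.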
An object is a concept, abstraction, or thing that makes sense in an application context (Rumbaugh et al., 1991).

2.1.4.8.2. Representing Classes in Class Diagram

The main building block of a class diagram is the class, which stores and manages information in the system. During analysis, classes refer to people, places, events, and things about which the system will capture information. Later, during design and implementation, classes can refer to implementation-specific artifacts. The attributes of a class and their values define the state of each object that is created from the class, and the behavior is represented by the methods. Attributes are properties of the class about which we want to capture information (Dennis and Wixom, 2002).

2.1.4.8.3. Representing Relationships in Class Diagram

According to Dennis and Wixom (2002), a primary purpose of the class diagram is to show the relationships, or associations, that classes have with one another. These are depicted on the diagram by drawing lines between classes. These associations are very similar to the relationships that are found in an ERD. Relationships are maintained by references, which are similar to pointers and maintained internally by the system. When multiple classes share a relationship, a line is drawn and labeled with either the name of the relationship or the roles that the classes play in the relationship.

2.1.5. Data Storage Design

The data storage function manages how data is stored and handled by the programs that run the system.

2.1.5.1. Database

According to Hoffer et al. (2002, p10), a database is a shared collection of logically related data organized in ways that facilitate capture, storage, and retrieval for multiple users in an organization. Databases involve methods of data organization that allow data to be centrally managed, standardized, and consistent.
Moreover, Dennis and Wixom (2003, p356) also said that a database is a collection of groupings of information that are related to each other in some way (e.g., through common fields).

According to Microsoft SQL Server 2000 Database Design and Implementation (2001), a database is similar to a data file in that it is a storage place for data. Like most types of data files, a database does not present information directly to a user; rather, the user runs an application that accesses data from the database and presents it to the user in an understandable format.

Database systems are more powerful than data files because the data is more highly organized. In a well-designed database, there are no duplicate pieces of data that the user or application has to update at the same time. Related pieces of data are grouped together in a single structure or record, and you can define relationships among these structures and records.

When working with data files, an application must be coded to work with the specific structure of each data file. In contrast, a database contains a catalog that applications use to determine how data is organized. Generic database applications can use the catalog to present users with data from different databases dynamically, without being tied to a specific data format.

2.1.5.2. Relational Database Model

The relational database model (Codd, 1970) represents data in the form of related tables, or relations. A relation is a named, two-dimensional table of data. Each relation (or table) consists of a set of named columns and an arbitrary number of unnamed rows. Each column in a relation corresponds to an attribute of that relation. Each row of a relation corresponds to a record that contains data values for an entity.

Microsoft SQL Server 2000 Database Design and Implementation (2001) states that although there are different ways to organize data in a database, a relational database is one of the most effective systems.
A relational database system uses mathematical set theory to effectively organize data. In a relational database, data is collected into tables (called relations in relational database theory).

A table represents some class of objects that are important to an organization. For example, a company might have a database with a table for employees, a table for customers, and another table for stores. Each table is built from columns and rows (attributes and tuples, respectively, in relational database theory). Each column represents an attribute of the object class represented by the table, such that the employees' table might have columns for attributes such as first name, last name, employee ID, department, pay grade, and job title. Each row represents an instance of the object class represented by the table. For example, one row in the employees' table might represent an employee who has employee ID 12345.

You can usually find many different ways to organize data into tables. Relational database theory defines a process called normalization, which ensures that the set of tables you define will organize your data effectively.

Furthermore, a relational database (Ramakrishnan, 2003, p61) is a collection of relations with distinct relation names. The relational database schema is the collection of schemas for the relations in the database.

The relational database is the most popular kind of database for application development today. It is much easier to work with from a development perspective. A relational database is based on collections of tables, each of which has a primary key. The tables are related to each other by placing the primary key from one table into the related table as a foreign key.

2.1.5.3. Database Management System

Ramakrishnan (2003, p8-9) said that a DBMS is a piece of software designed to make the preceding tasks easier.
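
As a concrete illustration of a DBMS managing related tables, the following minimal sketch uses SQLite through Python's standard `sqlite3` module. The choice of SQLite, the table and column names, and the sample rows are assumptions made for illustration only (the cited sources discuss Microsoft SQL Server and relational theory in general). It shows a primary key in each table, a foreign key linking employees to stores, and the DBMS itself rejecting a row that violates the relationship.

```python
import sqlite3

# In-memory database so the sketch leaves no files behind.
conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled.
conn.execute("PRAGMA foreign_keys = ON")

# Each table (relation) has named columns (attributes) and a primary key.
conn.execute(
    "CREATE TABLE stores ("
    " store_id INTEGER PRIMARY KEY,"
    " city TEXT NOT NULL)"
)
# The primary key of stores appears in employees as a foreign key.
conn.execute(
    "CREATE TABLE employees ("
    " employee_id INTEGER PRIMARY KEY,"
    " first_name TEXT NOT NULL,"
    " last_name TEXT NOT NULL,"
    " job_title TEXT,"
    " store_id INTEGER NOT NULL REFERENCES stores(store_id))"
)

# Each row (tuple) is one instance of the object class the table represents.
conn.execute("INSERT INTO stores VALUES (1, 'Jakarta')")
conn.execute("INSERT INTO employees VALUES (12345, 'Ana', 'Putri', 'Clerk', 1)")

# Related tables are combined by matching foreign key to primary key.
rows = conn.execute(
    "SELECT e.employee_id, e.last_name, s.city"
    " FROM employees AS e JOIN stores AS s ON s.store_id = e.store_id"
).fetchall()

# An integrity constraint enforced by the DBMS: store 42 does not exist.
try:
    conn.execute("INSERT INTO employees VALUES (99999, 'No', 'Store', NULL, 42)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

conn.close()
```

The application code never checks the relationship itself; the constraint lives in the schema, which is the kind of integrity enforcement attributed to a DBMS in this section.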
By storing data in a DBMS rather than as a collection of operating system files, we can use the DBMS's features to manage the data in a robust and efficient manner. As the volume of data and the number of users grow (hundreds of gigabytes of data and thousands of users are common in current corporate databases), DBMS support becomes indispensable. Using a DBMS to manage data has many advantages:

Data Independence: Application programs should not, ideally, be exposed to details of data representation and storage. The DBMS provides an abstract view of the data that hides such details.
Efficient Data Access: A DBMS utilizes a variety of sophisticated techniques to store and retrieve data efficiently. This feature is especially important if the data is stored on external storage devices.
Data Integrity and Security: If data is always accessed through the DBMS, the DBMS can enforce integrity constraints. It can also enforce access controls that govern what data is visible to different classes of users.
Data Administration: When several users share the data, centralizing the administration of data can offer significant improvements.
Concurrent Access and Crash Recovery: A DBMS schedules concurrent access to the data in such a manner that users can think of the data as being accessed by only one user at a time. Further, the DBMS protects users from the effects of system failures.
Reduced Application Development Time: The DBMS supports important functions that are common to many applications accessing data in the DBMS. This, in conjunction with the high-level interface to the data, facilitates quick application development. DBMS applications are also likely to be more robust than similar stand-alone applications because many important tasks are handled by the DBMS.

Dennis and Wixom (2003, p356) likewise define a database management system (DBMS) as software that creates and manipulates databases.

2.1.5.4. Denormalization

According to Performance Tuning SQL Server (Cox and Jones, 1997), published at http://www.windowsitlibrary.com/Content/77/16/5.html?Ad=1&, denormalization is the art of introducing database design mechanisms that enhance performance. Successful denormalization depends upon a solid database design normalized to at least the third normal form. Only then can you begin a process called responsible denormalization. The only reason to denormalize a database is to improve performance.

2.1.6. Menu Structure and Hierarchy Diagram

According to Marston (1999), a menu structure is a series of menu pages that are linked together to form a hierarchy. Each menu page contains a series of options, which can be a mixture of other menu pages and application transactions. Meanwhile, by the definitions given in Wikipedia (http://en.wikipedia.org/wiki/Hierarchy_diagram and http://en.wikipedia.org/wiki/Data_Structure_Diagram), a hierarchy diagram is a type of Data Structure Diagram (DSD). DSDs are simple hierarchy diagrams, most useful for documenting complex data entities. They differ from ERDs in that ERDs focus on the relationships between different entities, whereas DSDs focus on the relationships of the elements within an entity and enable users to fully see the links and relationships between each entity.

2.1.7. State Transition Diagram

According to Martin and McClure (1985), a transition diagram pictures an information system's behavior: it shows how the system responds to each action and how those actions change the state of the system. The transition diagram is a very important tool for a system analyst. It is easy to draw and very helpful in situations where the state changes often. Moreover, a transition diagram is used to show the possible states of entity types in a database system.
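One way to read a transition diagram is as a lookup table from (current state, action) to the next state, with one entry per arrow. The Python sketch below is a minimal illustration of that reading. The states and actions, a hypothetical password prompt, are assumptions chosen for the example and are not taken from Martin and McClure.

```python
# Each arrow of the diagram becomes one entry: (state, action) -> next state.
# All state and action names here are hypothetical.
TRANSITIONS = {
    ("idle", "start_login"): "waiting_for_password",
    ("waiting_for_password", "valid_password"): "logged_in",
    ("waiting_for_password", "invalid_password"): "idle",
    ("logged_in", "logout"): "idle",
}


def next_state(state, action):
    """Return the state reached by following the arrow labelled `action`."""
    try:
        return TRANSITIONS[(state, action)]
    except KeyError:
        # No arrow leaves `state` with this label, so the action is not allowed.
        raise ValueError(f"no transition from {state!r} on {action!r}") from None


def run(actions, state="idle"):
    """Apply a sequence of actions and return every state visited, in order."""
    visited = [state]
    for action in actions:
        state = next_state(state, action)
        visited.append(state)
    return visited
```

Keeping the arrows in a table rather than in nested conditionals makes it easy to compare the code against the drawn diagram entry by entry.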
This diagram is also very helpful for showing the behavior of a system that handles several types of messages with complex processing and synchronization needs (Martin and McClure, 1985). The main components of a transition diagram are states and the arrows that represent changes of state. A state is defined as a set of attributes or a condition of a person or thing at a certain time, so the states of a typical system might include (Yourdon, 1989, p260-261):

1. Waiting for user to enter password
2. Heating chemical mixture
3. Waiting for next command
4. Accelerating engine
5. Mixing ingredients
6. Waiting for instrument data
7. Filling tank
8. Idle

2.1.8. System Flow Chart

According to Capron (2000, p466-467), we can use the standard ANSI flowchart symbols shown in Table 2.1.1 to show the flow of data in the system. We use that standard to illustrate what will be done and what files will be used. A system flowchart describes only the big picture. The flow chart uses a distinct shape for each of the following meanings:

Process
Data
Display
Manual input
Stored data
Decision
On-page reference
Off-page reference
Parallel mode

Table 2.1.1. ANSI Flow Chart Shapes (Source: Capron, 2000, p466)

2.1.9. Intranet

Stallings (2004, p581) describes an intranet as an internet used by a single organization that provides the key Internet applications, especially the World Wide Web. An intranet operates within the organization for internal purposes and can exist as an isolated, self-contained internet, or may have links to the Internet. Meanwhile, Comer (2000) said that an intranet is a private corporate network consisting of hosts, routers, and networks that use TCP/IP technology. An intranet may or may not connect to the global Internet.

2.1.9.1. Web Server

In reality, a Web server (Powell, 2003) is just a computer running a piece of software that fulfills HTTP requests made by browsers.
In the simplest sense, a Web server is just a file server, and a very slow one at times. Consider the operation of a Web server resulting from a user requesting a file, as shown in Figure 2.1.3. Basically, a user just requests a file and the server either delivers it back or issues some error message, such as the ubiquitous 404 Not Found message. However, a Web server isn't just a file server because it also can run programs and deliver results. In this sense, Web servers also can be considered application servers, if occasionally simple or slow ones.

Figure 2.1.3. Web Server Operation Overview (Source: Powell, 2003, Figure 16-3)

When it comes to the physical process of publishing documents, the main issues are whether to run your own server or to host elsewhere, together with the choice of Web server software and hardware. However, a deeper understanding of how Web servers do their job is important for understanding potential bottlenecks. Recall that, in general, all that a Web server does is listen for requests from browsers or, as they are called more generically, user agents. Once the server receives a request, typically to deliver a file, it determines whether it should do so. If so, it copies the file from the disk out to the network. In some cases, the user agent might ask the server to execute a program, but the idea is the same. Eventually, some data is transmitted back to the browser for display. This discussion between the user agent, typically a Web browser, and the server takes place using the HTTP protocol.

2.1.9.2. Web Browser

According to http://encyclopedia.laborlawtalk.com, a web browser is a software package that enables a user to display and interact with documents hosted by web servers. Popular browsers include Microsoft Internet Explorer and Mozilla Firefox. A browser is the most commonly used kind of user agent. The largest networked collection of linked documents is known as the World Wide Web.
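The request-and-response exchange between a user agent and a Web server described above can be sketched with Python's standard library. This is a minimal illustration under stated assumptions, not how a production Web server is built: the in-memory DOCUMENTS table stands in for files on disk, and the names DocumentHandler, fetch, and demo are hypothetical. The server answers a known path with the document and an unknown path with a 404 status, while a small client plays the role of the user agent.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for files on disk: request path -> document bytes.
DOCUMENTS = {"/index.html": b"<html><body>Hello</body></html>"}


class DocumentHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = DOCUMENTS.get(self.path)
        if body is None:
            self.send_error(404)  # the "404 Not Found" case
            return
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)  # copy the document out to the network

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch(host, port, path):
    """Act as a minimal user agent: request `path`, return (status, body)."""
    connection = http.client.HTTPConnection(host, port, timeout=5)
    try:
        connection.request("GET", path)
        response = connection.getresponse()
        return response.status, response.read()
    finally:
        connection.close()


def demo():
    """Start the server on a free local port, make two requests, then stop it."""
    server = HTTPServer(("127.0.0.1", 0), DocumentHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    try:
        return fetch(host, port, "/index.html"), fetch(host, port, "/missing.html")
    finally:
        server.shutdown()
        server.server_close()
```

Calling demo() returns one result for the existing document and one for the missing document, which mirrors the "deliver the file or issue an error" behavior in the text.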
Protocols and standards

Web browsers communicate with web servers primarily using the HTTP protocol to fetch web pages identified by their URL (http:). HTTP allows web browsers to submit information to web servers as well as fetch web pages from them. At the time of writing, the most commonly used version of HTTP is HTTP/1.1, which is fully defined in RFC 2616. HTTP/1.1 has its own required standards; Internet Explorer does not fully support them, but most other current-generation web browsers do.

The file format for a web page is usually HTML and is identified in the HTTP protocol using a MIME content type. Most browsers natively support a variety of formats in addition to HTML, such as the JPEG, PNG and GIF image formats, and can be extended to support more through the use of plugins. Many browsers also support a variety of other URL types and their corresponding protocols, such as ftp: for FTP, gopher: for Gopher, and https: for HTTPS (an SSL-encrypted version of HTTP). The combination of HTTP content type and URL protocol specification allows web page designers to embed images, animations, video, sound, and streaming media into a web page, or to make them accessible through the web page.

Early web browsers supported only a very simple version of HTML. The rapid development of proprietary web browsers (see Browser Wars) led to the development of non-standard dialects of HTML, which caused problems with Web interoperability. Modern web browsers (Mozilla, Opera, and Safari) support standards-based HTML and XHTML (starting with HTML 4.01), which should display in the same way across all browsers. Internet Explorer does not yet fully support XHTML 1.0 or 1.1. At present, many sites are designed using WYSIWYG HTML generation programs such as Macromedia Dreamweaver or Microsoft FrontPage. These programs generally produce invalid HTML in the first place, which hinders the work of the W3C in developing standards, specifically XHTML and CSS.
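As a small illustration of the two identifiers mentioned above, the URL scheme and the MIME content type, the following Python sketch splits a URL and guesses a content type from the file extension. Guessing from the extension is an assumption made only for this example; a browser normally relies on the Content-Type header that the server sends. The function name describe and the example.org URLs are hypothetical.

```python
import mimetypes
from urllib.parse import urlsplit


def describe(url):
    """Return (URL scheme, guessed MIME type); the type is None when unknown."""
    parts = urlsplit(url)
    # guess_type maps a file extension such as ".html" to a MIME type.
    mime_type, _encoding = mimetypes.guess_type(parts.path)
    return parts.scheme, mime_type
```

For instance, describe("http://example.org/index.html") yields the scheme "http" together with the content type "text/html".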
Some of the more popular browsers include additional components to support Usenet news, IRC, and e-mail via the NNTP, SMTP, IMAP, and POP protocols.

2.1.9.3. HTTP

According to Stallings (2004, p762), the Hypertext Transfer Protocol (HTTP) is the foundation protocol of the World Wide Web (WWW) and can be used in any client/server application involving hypertext. HTTP is not a protocol for transferring hypertext; rather, it is a protocol for transmitting information with the efficiency necessary for making hypertext jumps. HTTP is a transaction-oriented client/server protocol. The most typical use of HTTP is between a Web browser and a Web server. To provide reliability, HTTP makes use of TCP.

The Hypertext Transfer Protocol (HTTP) is the basic, underlying, application-level protocol used to facilitate the transmission of data to and from a Web server. HTTP provides a simple, fast way to specify the interaction between client and server. The protocol defines how a client must ask for data from the server and how the server returns it. HTTP does not specify how the data actually is transferred; this is up to lower-level network protocols such as TCP (Powell, 2003).

2.1.9.4. TCP/IP

TCP/IP is an Internet-based concept and is the framework for developing a complete range of computer communication standards. Virtually all computer vendors now provide support for this architecture (Stallings, 2002). The TCP/IP protocol architecture is a result of protocol research and development conducted on the experimental packet-switched network, ARPANET, and is generally referred to as the TCP/IP protocol suite. The TCP/IP model organizes the communication task into five relatively independent layers:

Physical layer: The physical layer covers the physical interface between a data transmission device and a transmission medium or network. This layer is concerned with specifying the characteristics of the transmission medium, the nature of the signals, the data rate, and related matters.
Network access layer: The network access layer is concerned with the exchange of data between an end system (server, workstation, etc.) and the network to which it is attached. It is concerned with access to and routing of data across a network for two end systems attached to the same network. In those cases where two devices are attached to different networks, procedures are needed to allow data to traverse multiple interconnected networks.
Internet layer: Providing those procedures is the function of the internet layer. The Internet Protocol (IP) is used at this layer to provide the routing function across multiple networks. This protocol is implemented not only in the end systems but also in routers.
Host-to-host, or transport layer: The mechanisms for providing reliability are essentially independent of the nature of the applications. Thus, it makes sense to collect those mechanisms in a common layer shared by all applications. This is referred to as the host-to-host layer, or transport layer. The Transmission Control Protocol (TCP) is the most commonly used protocol to provide this functionality.
Application layer: The application layer contains the logic needed to support the various user applications. For each different type of application, such as file transfer, a separate module is needed that is peculiar to that application.

2.1.9.5. Client-Server Architecture

Dennis and Wixom (2003, p276-278) state that most organizations today are moving to client-server architectures, which attempt to balance the processing between the client and the server. In these architectures, the client is responsible for the presentation logic, whereas the server is responsible for the data access logic and data storage. The application logic may reside on the client, reside on the server, or be split between both. When the client has most or all of the application logic, it is called a thick client.
If the client contains only the presentation function and most of the application function resides on the server, it is called a thin client.

Client-server architectures (Figure 2.1.4) have four important benefits. First and foremost, they are scalable: it is easy to increase or decrease the storage and processing capabilities of the servers. Second, client-server architectures can support many different types of clients and servers. It is possible to connect computers that use different operating systems so that users can choose which type of computer they prefer. You are not locked into one vendor, as is often the case with server-based networks. Middleware is a type of system software designed to translate between different vendors' software. Middleware is installed on both the client computer and the server computer. The client software communicates with the middleware, which can reformat the message into a standard language that can be understood by the middleware that assists the server software. Third, for thin client-server architectures that use Internet standards, it is simple to clearly separate the presentation logic, the application logic, and the data access logic and design each to be somewhat independent. Likewise, it is possible to change the application logic without changing the presentation logic or the data, which are stored in databases and accessed using SQL commands. Finally, because no single server computer supports all the applications, the network is generally more reliable. There is no central point of failure that will halt the entire network if it fails, as there is with server-based architectures. If any server fails in a client-server environment, the network can continue to function using all the other servers.

Figure 2.1.4. Client-Server Architecture (Source: http://www.dw.com/dev/archives/images/clientserver.gif)

2.1.10. Financial and Budgeting System

The field of finance is closely related to economics and accounting, and financial managers need to understand the relationships between these fields. Economics provides a structure for decision making in such areas as risk analysis, pricing theory through supply and demand relationships, comparative return analysis, and many other important areas. Economics also provides the broad picture of the economic environment in which corporations must continually make decisions. A financial manager must understand the institutional structure of the Federal Reserve System, the commercial banking system, and the interrelationships between the various sectors of the economy. Economic variables, such as gross domestic product, industrial production, disposable income, unemployment, inflation, interest rates, and taxes (to name a few), must fit into the financial manager's decision model and be applied correctly. These terms will be presented throughout the text and integrated into the financial process (Block and Hirt, 2003).

Weygandt et al. (1999, p1034-1035) define the noun "budget" as a formal written summary (or statement) of management's plans for a specified future time period, expressed in financial terms. The primary benefits of budgeting are:

It requires all levels of management to plan ahead and to formalize their future goals on a recurring basis.
It provides definite objectives for evaluating performance at each level of responsibility.
It creates an early warning system for potential problems. With early warning, management has time to solve the problem before things get out of hand. For example, the cash budget may reveal the need for outside financing several months before an actual cash shortage occurs.
It facilitates the coordination of activities within the business by correlating the goals of each segment with overall company objectives.
Thus, production and sales promotion can be integrated with expected sales.
It results in greater management awareness of the company's overall operations and of the impact of external factors, such as economic trends, on those operations.
It contributes to positive behavior patterns throughout the organization by motivating personnel to meet planned objectives.

A budget is an aid to management; it is not a substitute for management. A budget cannot operate or enforce itself. The benefits of budgeting will be realized only when budgets are carefully prepared and properly administered by management.

The development of the budget for the coming year starts several months before the end of the current year. The budgeting process usually begins with the collection of data from each of the organizational units of the company. Past performance is often the starting point in budgeting, from which future budget goals are formulated. The budget is developed within the framework of a sales forecast that shows potential sales for the industry and the company's expected share of such sales. Sales forecasting involves a consideration of such factors as (1) general economic conditions, (2) industry trends, (3) market research studies, (4) anticipated advertising and promotion, (5) previous market share, (6) changes in price, and (7) technological developments. The input of sales personnel and top management is essential in preparing the sales forecast.

In many companies, responsibility for coordinating the preparation of the budget is assigned to a budget committee. The committee, often headed by a budget director, ordinarily includes the president, treasurer, chief accountant (controller), and management personnel from each major area of the company, such as sales, production, and research. The budget committee serves as a review board where managers and supervisors can defend their budget goals and requests. After differences are reviewed, modified if necessary, and reconciled, the budget is prepared by the budget committee, put in its final form, approved, and distributed (Weygandt, et al., 1998, p1036).
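Two of the ideas in this section reduce to simple arithmetic: the sales forecast as industry sales multiplied by the company's expected share, and the cash budget as an early warning of a shortage. The Python sketch below illustrates both under stated assumptions. The function names and every figure passed to them are hypothetical, and real budgeting involves far more inputs than are shown here.

```python
def company_sales_forecast(industry_sales, expected_share):
    """Expected company sales = forecast industry sales x expected market share."""
    if not 0 <= expected_share <= 1:
        raise ValueError("expected_share must be a fraction between 0 and 1")
    return industry_sales * expected_share


def first_cash_shortage(opening_cash, monthly_net_cash_flows):
    """Return the 1-based month in which the projected cash balance first
    drops below zero, or None if no shortage is projected."""
    balance = opening_cash
    for month, net_flow in enumerate(monthly_net_cash_flows, start=1):
        balance += net_flow
        if balance < 0:
            return month
    return None
```

With an opening balance of 100 and projected monthly net flows of -30, -40, -50, and 20, the second function reports month 3, which is the kind of advance notice the cash budget is meant to give.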
2.2. Theoretical Framework

2.2.1. System Development Methodology

A methodology is a formalized approach to implementing the SDLC (i.e., it is a list of steps and deliverables). There are many different system development methodologies, and each one is unique because of its emphasis on processes versus data and the order and focus it places on each SDLC phase (Dennis and Wixom, 2003, p8).

2.2.2. Rapid Application Development

Dennis and Wixom (2003) state that rapid application development (RAD) is a newer approach to systems development that emerged in the 1990s. RAD (Figure 2.2.1) attempts to address both weaknesses of the structured development methodologies: long development times and the difficulty in understanding a system from a paper-based description. RAD methodologies adjust the SDLC phases to get some part of the system developed quickly and into the hands of the users. In this way, the users can better understand the system and suggest revisions that bring the system closer to what is needed.

Most RAD methodologies recommend that analysts use special techniques and computer tools to speed up the analysis, design, and implementation phases, such as CASE (computer-aided software engineering) tools, JAD (joint application design) sessions, fourth-generation/visual programming languages that simplify and speed up programming (e.g., Visual Basic), and code generators that automatically produce programs from design specifications. It is the combination of the changed SDLC phases and the use of these tools and techniques that improves the speed and quality of system development. There are process-centered, data-centered, and object-oriented methodologies that follow the basic approaches of the three RAD categories. Table 2.2.1 shows the advantages and disadvantages of using RAD.

Advantages of RAD
1. Buying may save money compared to building
2. Deliverables sometimes easier to port (because they make greater use of high-level abstractions, scripts, intermediate code)
3. Development conducted at a higher level of abstraction (because RAD tools operate at that level)
4. Early visibility (because of prototyping)
5. Greater flexibility (because developers can redesign almost at will)
6. Greatly reduced manual coding (because of wizards, code generators, code reuse)
7. Increased user involvement (because they are represented on the team at all times)
8. Possibly fewer defects (because CASE tools may generate much of the code)
9. Possibly reduced cost (because time is money, also because of reuse)
10. Shorter development cycles (because development tilts toward schedule and away from economy and quality)
11. Standardized look and feel (because APIs and other reusable components give a consistent appearance)

Disadvantages of RAD
1. Buying may not save money compared to building
2. Cost of integrated toolset and hardware to run it
3. Harder to gauge progress (because there are no classic milestones)
4. Less efficient (because code isn't hand crafted)
5. Loss of scientific precision (because no formal methods are used)
6. May accidentally empower a return to the uncontrolled practices of the early days of software development
7. More defects (because of the "code-like-hell" syndrome)
8. Prototype may not scale up, a B-I-G problem
9. Reduced features (because of timeboxing, software reuse)
10. Reliance on third-party components may ...
    I. sacrifice needed functionality
    II. add unneeded functionality
    III. create legal problems
11. Requirements may not converge (because the interests of customers and developers may diverge from one iteration to the next)
12. Standardized look and feel (undistinguished, lackluster appearance)
13. Successful efforts difficult to repeat (no two projects evolve the same way)
14. Unwanted features (through reuse of existing components)

Table 2.2.1. Evaluation of RAD (Source: csweb.cs.bgsu.edu/maner/domains/RAD.htm)

Figure 2.2.1.
Rapid Application Development Using Iterative Prototyping (Source: csweb.cs.bgsu.edu/maner/domains/RAD.htm)

2.2.2.1. Phased Development

According to Dennis and Wixom (2003, p11-12), the phased development methodology breaks the overall system into a series of versions that are developed sequentially (Figure 2.2.2). The analysis phase identifies the overall system concept, and the project team, users, and system sponsor then categorize the requirements into a series of versions. The most important and fundamental requirements are bundled into the first version of the system. The analysis phase then leads into design and implementation, but only with the set of requirements identified for version 1. Once version 1 is implemented, work begins on version 2. Additional analysis is performed on the basis of the previously identified requirements and combined with new ideas and issues that arose from users' experience with version 1. Version 2 then is designed and implemented, and work immediately begins on the next version. This process continues until the system is complete or is no longer in use.

Phased development has the advantage of quickly getting a useful system into the hands of the users. Although the system does not perform all the functions the users need at first, it begins to provide business value sooner than if the system were delivered after completion, as is the case with waterfall or parallel methodologies. Likewise, because users begin to work with the system sooner, they are more likely to identify important additional requirements sooner than with structured design situations. The major drawback to phased development is that users begin to work with systems that are intentionally incomplete. It is critical to identify the most important and useful features and include them in the first version while managing users' expectations along the way.

Figure 2.2.2. The Phased Development Methodology (Source: Dennis and Wixom, 2003, p12)

2.3. Supported Knowledge

2.3.1. Microsoft SQL Server 2000

According to Powell (2002), SQL Server 2000 is an RDBMS that uses Transact-SQL to send requests between a client computer and a SQL Server 2000 computer. An RDBMS includes databases, the database engine, and the applications that are necessary to manage the data and the components of the RDBMS. The RDBMS organizes data into related rows and columns within the database. The RDBMS is responsible for enforcing the database structure, including the following tasks:

Maintaining the relationships among data in the database
Ensuring that data is stored correctly and that the rules defining data relationships are not violated
Recovering all data to a point of known consistency in case of system failures

The database component of SQL Server 2000 is a Structured Query Language (SQL)-compatible, scalable, relational database with integrated XML support for Internet applications. SQL Server 2000 builds upon the modern, extensible foundation of SQL Server 7.0. The following sections introduce you to the fundamentals of databases, relational databases, SQL, and XML.

Microsoft SQL Server 2000 is a complete database and analysis solution for rapidly delivering the next generation of scalable Web applications. A database is similar to a data file in that it is a storage place for data; however, a database system is more powerful than a data file. The data in a database is more highly organized. A relational database is a type of database that uses mathematical set theory to organize data. In a relational database, data is collected into tables.

SQL Server 2000 includes a number of features that support ease of installation, deployment, and use; scalability; data warehousing; and system integration with other server software.
In addition, SQL Server 2000 is available in different editions to accommodate the unique performance, run-time, and price requirements of different organizations and individuals.

2.3.2. C# Versus Visual Basic .Net

According to Jesse Liberty (2002), the premise of the .NET Framework is that all languages are created equal. To paraphrase George Orwell, however, some languages are more equal than others. C# is an excellent language for .NET development. You will find it is an extremely versatile, robust, and well-designed language. It is also currently the language most often used in articles and tutorials about .NET programming.

It is likely that many VB programmers will choose to learn C#, rather than upgrading their skills to VB.NET. This would not be surprising because the transition from VB6 to VB.NET is, arguably, nearly as difficult as from VB6 to C#, and, whether it's fair or not, historically, C-family programmers have had higher earning potential than VB programmers. As a practical matter, VB programmers have never gotten the respect or compensation they deserve, and C# offers a wonderful chance to make a potentially lucrative transition.

2.3.3. C# and the .Net Framework

2.3.3.1. The .Net Platform

Jesse Liberty (2002, p9) said that when Microsoft announced C# in July 2000, its unveiling was part of a much larger event: the announcement of the .NET platform. The .NET platform is, in essence, a new development framework that provides a fresh application programming interface (API) to the services and APIs of classic Windows operating systems (especially the Windows 2000 family), while bringing together a number of disparate technologies that emerged from Microsoft during the late 1990s. Among the latter are COM+ component services, the ASP web development framework, a commitment to XML and object-oriented design, support for new web services protocols such as SOAP, WSDL, and UDDI, and a focus on the Internet, all integrated within the DNA architecture.
Microsoft says it is devoting 80% of its research and development budget to .NET and its associated technologies. The results of this commitment to date are impressive. For one thing, the scope of .NET is huge. The platform consists of four separate product groups:

A set of languages, including C# and Visual Basic .NET; a set of development tools, including Visual Studio .NET; a comprehensive class library for building web services and web and Windows applications; as well as the Common Language Runtime (CLR) to execute objects built within this framework.
A set of .NET Enterprise Servers, formerly known as SQL Server 2000, Exchange 2000, BizTalk 2000, and so on, that provide specialized functionality for relational data storage, email, B2B commerce, etc.
An offering of commercial web services, called .NET My Services; for a fee, developers can use these services in building applications that require knowledge of user identity, etc.
New .NET-enabled non-PC devices, from cell phones to game boxes.

2.3.3.2. The .Net Framework

Microsoft .NET supports not only language independence (Jesse Liberty, 2002, p10-11), but also language integration. This means that you can inherit from classes, catch exceptions, and take advantage of polymorphism across different languages. The .NET Framework makes this possible with a specification called the Common Type System (CTS) that all .NET components must obey. For example, everything in .NET is an object of a specific class that derives from the root class called System.Object. The CTS supports the general concept of classes, interfaces, delegates (which support callbacks), reference types, and value types.

Additionally, .NET includes a Common Language Specification (CLS), which provides a series of basic rules that are required for language integration. The CLS determines the minimum requirements for being a .NET language. Compilers that conform to the CLS create objects that can interoperate with one another.
The entire Framework Class Library (FCL) can be used by any language that conforms to the CLS.

The .NET Framework sits on top of the operating system, which can be any flavor of Windows, and consists of a number of components. Currently, the .NET Framework consists of:

Four official languages: C#, VB.NET, Managed C++, and JScript .NET
The Common Language Runtime (CLR), an object-oriented platform for Windows and web development that all these languages share
A number of related class libraries, collectively known as the Framework Class Library (FCL)

Figure 2.3.1 breaks down the .NET Framework into its system architectural components.

Figure 2.3.1. .NET Framework Architecture (Source: Liberty, 2002, p10)

The most important component of the .NET Framework is the CLR, which provides the environment in which programs are executed. The CLR includes a virtual machine, analogous in many ways to the Java virtual machine. At a high level, the CLR activates objects, performs security checks on them, lays them out in memory, executes them, and garbage-collects them. (The Common Type System is also part of the CLR.)

In Figure 2.3.1, the layer on top of the CLR is a set of framework base classes, followed by an additional layer of data and XML classes, plus another layer of classes intended for web services, Web Forms, and Windows Forms. Collectively, these classes are known as the Framework Class Library (FCL), one of the largest class libraries in history and one that provides an object-oriented API to all the functionality that the .NET platform encapsulates. With more than 4,000 classes, the FCL facilitates rapid development of desktop, client/server, and other web services and applications.

The set of framework base classes, the lowest level of the FCL, is similar to the set of classes in Java.
These classes support rudimentary input and output, string manipulation, security management, network communication, thread management, text manipulation, reflection and collections functionality, etc.

Above this level is a tier of classes that extend the base classes to support data management and XML manipulation. The data classes support persistent management of data that is maintained on backend databases. These classes include the Structured Query Language (SQL) classes to let you manipulate persistent data stores through a standard SQL interface. Additionally, a set of classes called ADO.NET allows you to manipulate persistent data. The .NET Framework also supports a number of classes to let you manipulate XML data and perform XML searching and translations.

Extending the framework base classes and the data and XML classes is a tier of classes geared toward building applications using three different technologies: Web Services, Web Forms, and Windows Forms. Web services include a number of classes that support the development of lightweight distributed components, which will work even in the face of firewalls and NAT software. Because web services employ standard HTTP and SOAP as underlying communications protocols, these components support plug-and-play across cyberspace.

Web Forms and Windows Forms allow you to apply Rapid Application Development techniques to building web and Windows applications. Simply drag and drop controls onto your form, double-click a control, and write the code to respond to the associated event.

2.3.3.3. The C# Language

The C# language (Jesse Liberty, 2002, p12-13) is disarmingly simple, with only about 80 keywords and a dozen built-in datatypes, but C# is highly expressive when it comes to implementing modern programming concepts. C# includes all the support for structured, component-based, object-oriented programming that one expects of a modern language built on the shoulders of C++ and Java.
The C# language was developed by a small team led by two distinguished Microsoft engineers, Anders Hejlsberg and Scott Wiltamuth. Hejlsberg is also known for creating Turbo Pascal, a popular language for PC programming, and for leading the team that designed Borland Delphi, one of the first successful integrated development environments for client/server programming.

At the heart of any object-oriented language is its support for defining and working with classes. Classes define new types, allowing you to extend the language to better model the problem you are trying to solve. C# contains keywords for declaring new classes and their methods and properties, and for implementing encapsulation, inheritance, and polymorphism, the three pillars of object-oriented programming.

In C# everything pertaining to a class declaration is found in the declaration itself. C# class definitions do not require separate header files or Interface Definition Language (IDL) files. Moreover, C# supports a new XML style of inline documentation that greatly simplifies the creation of online and print reference documentation for an application.

C# also supports interfaces, a means of making a contract with a class for services that the interface stipulates. In C#, a class can inherit from only a single parent, but a class can implement multiple interfaces. When it implements an interface, a C# class in effect promises to provide the functionality the interface specifies.

C# also provides support for structs, a concept whose meaning has changed significantly from C++. In C#, a struct is a restricted, lightweight type that, when instantiated, makes fewer demands on the operating system and on memory than a conventional class does. A struct can't inherit from a class or be inherited from, but a struct can implement an interface.

C# provides component-oriented features, such as properties, events, and declarative constructs (called attributes).
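The class, interface, struct, property and event features described above can be sketched in a few lines. This is an illustrative example only: every type name in it (IStorable, IPrintable, Document, Report, Point, TypesDemo) is hypothetical and is not taken from Liberty (2002).

```csharp
using System;

// Hypothetical contracts, invented for this illustration.
public interface IStorable { string Save(); }
public interface IPrintable { string Print(); }

public class Document
{
    private string title;   // encapsulated state

    public Document(string title) { this.title = title; }

    // Property: controlled access to the private field.
    public string Title
    {
        get { return title; }
        set { title = value; }
    }

    // Event that subscribers can attach handlers to.
    public event EventHandler Saved;

    protected void OnSaved()
    {
        if (Saved != null) Saved(this, EventArgs.Empty);
    }

    public virtual string Describe() { return "Document: " + title; }
}

// A class has exactly one parent class but may implement several interfaces.
public class Report : Document, IStorable, IPrintable
{
    public Report(string title) : base(title) { }

    // Polymorphism: overrides the behaviour defined in the base class.
    public override string Describe() { return "Report: " + Title; }

    public string Save() { OnSaved(); return "saved " + Title; }
    public string Print() { return "printing " + Title; }
}

// A struct cannot inherit from a class, but it can implement an interface.
public struct Point : IPrintable
{
    public int X;
    public int Y;

    public Point(int x, int y) { X = x; Y = y; }

    public string Print() { return "(" + X + ", " + Y + ")"; }
}

public static class TypesDemo
{
    public static void Main()
    {
        Report report = new Report("Q1");
        int savedCount = 0;
        report.Saved += (sender, e) => savedCount++;   // subscribe to the event

        Document asBase = report;                      // treat the report as its base type
        Console.WriteLine(asBase.Describe());          // Report: Q1
        Console.WriteLine(report.Save());              // saved Q1
        Console.WriteLine(savedCount);                 // 1
        Console.WriteLine(new Point(3, 4).Print());    // (3, 4)
    }
}
```

Calling Describe through a Document reference still runs the Report version, which is the polymorphic behaviour the text refers to.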
Component-oriented programming is supported by the CLR's support for storing metadata with the code for the class. The metadata describes the class, including its methods and properties, as well as its security needs and other attributes, such as whether it can be serialized; the code contains the logic necessary to carry out its functions. A compiled class is thus a self-contained unit; therefore, a hosting environment that knows how to read a class's metadata and code needs no other information to make use of it. Using C# and the CLR, it is possible to add custom metadata to a class by creating custom attributes. Likewise, it is possible to read class metadata using CLR types that support reflection.

An assembly is a collection of files that appear to the programmer to be a single dynamic link library (DLL) or executable (EXE). In .NET, an assembly is the basic unit of reuse, versioning, security, and deployment. The CLR provides a number of classes for manipulating assemblies.

A final note about C# is that it also provides support for directly accessing memory using C++-style pointers, together with keywords for bracketing such operations as unsafe and for warning the CLR garbage collector not to collect objects referenced by pointers until they are released.
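The metadata mechanism described above, in which a custom attribute is attached to a class and read back through reflection, can be sketched as follows. This is a minimal illustration only: AuthorAttribute, Invoice and MetadataDemo are hypothetical names invented for the example, and the author string is placeholder data. The reflection calls used (GetCustomAttributes, GetMethod and the Assembly property) are standard members of System.Type.

```csharp
using System;
using System.Reflection;

// Hypothetical custom attribute, invented for this illustration.
[AttributeUsage(AttributeTargets.Class)]
public class AuthorAttribute : Attribute
{
    private readonly string name;

    public AuthorAttribute(string name) { this.name = name; }

    public string Name { get { return name; } }
}

// Custom metadata is stored with the compiled class.
[Author("A. Student")]
public class Invoice
{
    public decimal Total { get; set; }
    public void Post() { }
}

public static class MetadataDemo
{
    // Read the custom attribute back through reflection.
    public static string AuthorOf(Type type)
    {
        object[] attrs = type.GetCustomAttributes(typeof(AuthorAttribute), false);
        return attrs.Length > 0 ? ((AuthorAttribute)attrs[0]).Name : "(unknown)";
    }

    // Query the method metadata that the compiler emitted for the class.
    public static bool HasPublicMethod(Type type, string methodName)
    {
        return type.GetMethod(methodName) != null;
    }

    public static void Main()
    {
        Type t = typeof(Invoice);
        Console.WriteLine(AuthorOf(t));                  // A. Student
        Console.WriteLine(HasPublicMethod(t, "Post"));   // True
        // Every type also knows the assembly it was deployed in.
        Console.WriteLine(t.Assembly.GetName().Name);
    }
}
```

The last line prints the name of the containing assembly, which depends on how the example is compiled.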