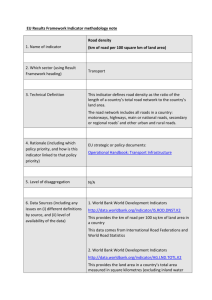

Indicator reference sheet

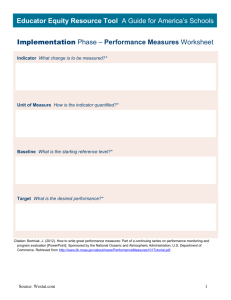

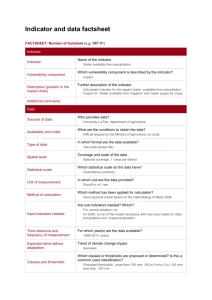

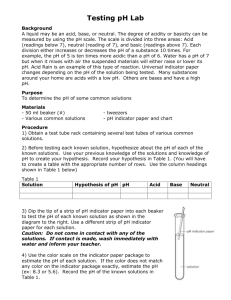

Indicator Reference Sheet – Suggested Concepts/Topics

Strategic Objective: To which of the Program’s Strategic Objectives (SOs) does the result measured by this indicator contribute?

Intermediate Result: To which of the SO’s Intermediate Results (if appropriate) does this indicator measure a contribution?

Lower Level Result: To which lower-level result (if appropriate) does this indicator measure a contribution?

Indicator: Provide the exact wording of the indicator that will measure/track a single impact of one of your activities. Be as precise as possible, providing a description that is clear and points transparently to the particular information which your data and calculations will provide.

Date Established: When did relevant parties agree on the reporting of this indicator?

Date Last Reviewed: When did relevant parties last review/discuss/alter the indicator?

A. DESCRIPTION

Precise Definition: Every significant term from the exact wording of the indicator must be clearly defined in this section. It is not enough merely to restate the indicator, nor is it sufficient to list the particular items you are planning to include or exclude from your data calculations. This section must define the categories so that anyone not familiar with your particular program would nonetheless be able to apply criteria or otherwise know exactly which categories of data should be included in indicator calculations and which should not.

Unit of Measure: Normally, the unit of measure should be either NUMBER (#) or PERCENTAGE (%).

Method of Calculation: This must be a mathematical description of the exact actions (addition, subtraction, division, multiplication) that will be performed on the raw data to arrive at the value of the indicator that will be reported. It must match exactly with the indicator provided in the top section, and its elements must match the items detailed in the precise definition.
Any inconsistency must be resolved before the indicator reference sheet can be considered finalized.

Disaggregated by: List significant subdivisions in the data that will routinely be divided for the normal presentation of data (e.g., by sex, facility type, rural/urban location, etc.), if any.

Justification/Management Utility: What are the activities that show that this specific indicator is an especially appropriate measurement of your project’s impacts and results? Why are these incremental results significant in or for the health sector? In what way will monitoring of these results contribute toward program success? Toward what results at a higher level, or which overarching goals, will these indicators ultimately contribute?

B. PLAN FOR DATA COLLECTION

Data Collection Method: List the source(s) of the raw data, the levels of collection (is a third party aggregating data or calculating some intermediate indicators that may affect your indicator values?), and describe the steps involved in the collection of any/all information needed to construct the indicator’s value for a given reporting period. Too much detail is better than too little detail here.

Data Sources: As specifically as possible, identify the documents, databases, organizations, and/or individuals that will provide raw information or final figures that will be reported through this indicator.

Timing/Frequency of Data Collection: Normally, this should be reported here in terms of the timing or frequency of indicator calculation. If data are collected every month but the indicator will be calculated/reported (i.e., collected by USAID) only annually, the frequency listed here should be Annually.

Estimated Cost of Collection: Unless this is a special survey or other new M&E activity outside of current or ongoing plans, it will often be appropriate to note here that the cost will fall within the contract budget, or other similar language.
This section helps USAID keep track of new budget items or any not previously included in standard or routine obligations.

Responsible Organization/Individual(s): With as much clarity as possible, identify the person and position within each relevant organization who will have responsibility either for providing relevant data or for otherwise contributing to indicator calculation. In most cases, there will be at least one USAID person and position identified here AND at least one Implementing Partner person and position.

Location of Data Storage: In cases where raw data and calculated indicators will be stored by separate organizations, it is a good idea to note each location where portions of the information that would be necessary to reconstruct the indicator value will be stored.

C. PLAN FOR DATA ANALYSIS, REPORTING, AND REVIEW (SCHEDULE, METHODOLOGY, RESPONSIBILITY)

Data Analysis: Monitoring indicators typically should be analyzed at least through comparison to baselines and targets, and considered in terms of their implications for program performance.

Presentation of Data: Most often, indicator values will be presented in tables. Graphical presentation may be more appropriate for some indicators. Qualitative indicators may require more narrative explication.

Review of Data: Most often, indicator values will be reviewed annually, or less frequently for less frequently calculated/reported indicators (see “Timing/Frequency of Data Collection” above).

Reporting of Data: What is the reporting schedule within USAID – SO team only, or will this indicator be reported further up to higher levels of oversight, e.g. in R4 annual report?

D. DATA QUALITY ISSUES: THIS SECTION REPORTS ONLY ON ISSUES RELATED TO DATA QUALITY. ISSUES OF INDICATOR DEFINITION, PERFORMANCE, RELEVANCE OR DATA AVAILABILITY OR ALTERNATIVE STANDARDS SHOULD BE EXPLAINED OR EXPLORED IN OTHER SECTIONS.
Initial Data Quality Assessment (Validity Concerns): Given what you know at this point in time, how do you feel about the potential for problems with the quality of data that you will eventually collect and use to calculate this indicator? Do you think your data validly measure the result targeted by this indicator? Do you think your measurements are valid metrics for the (conceptual) result you are trying to track here? Do you expect institutional or other challenges to arise that may affect the degree of measurement error or other systematic errors in your data set?

Known Data Limitations and Significance (if any) (Reliability Concerns): Even if your indicator is valid, are your data reliable? Do you foresee any gaps or inconsistencies in the data that might affect the soundness of the Indicator’s calculated value, or your ability to interpret/understand the meaning of the Indicator? If limitations arise, do you judge them likely to be highly significant, trivial/unimportant, or somewhere in between?

Actions Taken or Planned to Address Data Limitations: Think of all of the things that could go wrong with your planned indicator when you start trying to gather information about real results of your program activities. How will you try to mitigate or correct for any gaps or measurement errors that may be due to difficulties with the data as noted in the previous two sections?

E. PERFORMANCE DATA TABLE

Key to Table: If items are disaggregated or if subsets are provided in parentheses or any other key to understanding the table at a glance is required, explanatory information should be provided here.

Rationale for Selection of Baselines and Targets: How exactly have you determined your baseline for your indicator value(s)? If no exact baseline was available, what information did you use for a proxy measure and how did you adjust or otherwise interpret the data in order to arrive at what you consider to be a reasonable approximation of a baseline?
How exactly have you determined a target (or targets) for your indicator values? If you have extrapolated from existing partial data or estimated based on data from another geographical area, explain your reasoning.

| Year | TARGET/PLANNED | ACTUAL | COMMENTS |
|---|---|---|---|
| 2003 (Baseline) | | | |
| 2004 | | | |
| 2005 | | | |
| 2006 | | | |
| 2007 | | | |
| 2008 | | | |

Final Comments: After calculation of indicator values for one or more periods, note here any adjustments you may have had to make. Adjustments may be needed, for example, according to information provided in any of the sections above (e.g., data that were expected to be available turned out not to be available (for certain disaggregations, for example); data whose quality was already suspect were in the end judged to be of insufficient validity or reliability; data collection that depended on cooperating government or NGO entities did not occur or was incomplete). In addition, further (unanticipated) issues may have arisen in defining, collecting, calculating, or otherwise arriving at sound and transparently interpretable indicator values. Any such additional information that would be helpful for people interpreting the meaning or significance of the indicator values should be discussed here.

ILLUSTRATIVE COMPLETED INDICATOR REFERENCE SHEET

Performance Indicator Reference Sheet

Objective: Increased use of high impact health services and improved health practices

Intermediate Result (output measures): N/A

Indicator: DTP3 vaccine coverage

Geographic Focus: In four target zones

Is this an Annual Report Indicator? Yes, 2003, 2004, 2005, 2006

Date established: January 2001

Date last reviewed: September 30, 2003

Description

Precise Definition(s): When calculating annual coverage of DTP3 from routine data, the definition is as follows: Percentage of infants 0-11 months of age in a specified calendar year who were immunized with DTP3 in that calendar year. The numerator is the number immunized by 12 months with DTP3 in a specified calendar year.
The denominator is the total number of surviving infants less than 12 months of age in the same year.

Unit of Measure: Percentage

Disaggregated by: Geographic location

Justification/Management Utility: DTP3 is the best measure of the use of services and also serves as a proxy for full immunization coverage. The indicator is an important measure of incremental progress in the country’s immunization program. When calculated from surveys, the indicator is helpful in validating the routine reporting system.

Data Collection, Compilation and Analysis

Data Collection Method: Review of written service records

Data Source(s): Written records of services provided at clinics in target zones

Frequency and Timing of Data Collection: Every six months. October report from partners helpful for compiling Annual Report; April report in a separate report.

Budget Mechanism: Cost included within the contract with implementing partner

Individual(s) Responsible at USAID: J. Perez, Activity Manager

Individual(s) Responsible for Providing Data to USAID: K. Lee, M&E Specialist from implementing partner. Semi-annual reports to USAID.

Location of Data Storage: Electronic and hard copies to be maintained in USAID/SO5 PMP filing cabinet and electronic filing system (C:/USAID/Health/PMP) as well as by each partner at their sites.

Questions for Analyzing Data:

a. Is the trend going up or down? In which regions?

b. Which regions have the highest increase and why?

c. What do we know about the immunization rate in areas where USAID does not implement programs?

d. How do the semi-annual data from partners compare to the annual data from MOH?

Data Quality Issues

Date of Initial Data Quality Assessment: January 2001. Official government data from MOH were compared with project level service statistics to determine data quality.

Known Data Limitations and Significance (if any): The data collection and analysis do not take into account the timeliness of the doses or the time interval between the doses.
Actions Taken or Planned to Address Data Limitations: Conduct small household survey to calculate valid coverage rates based on card information in target zones.

Date(s) of Future Data Quality Assessments: 2005

Procedures for Future Data Quality Assessment: TBD BY MISSION

Other Notes

Notes on Baseline/Targets:

Comments

Performance Indicator Values (Year, Target, Actual)

2000 (Baseline): 15%

2001: 20%

2002: 25%

2003: 30%

2004: 35%

2005: 40%

THIS SHEET LAST UPDATED ON: September 30, 2003

Notes
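
The "Method of Calculation" guidance and the DTP3 coverage definition in the illustrative sheet (numerator: infants immunized with DTP3 by 12 months; denominator: surviving infants under 12 months in the same year) can be sketched in a few lines of Python. This is only a minimal illustration, not a prescribed USAID procedure: the names `ZoneCounts`, `coverage_percent`, and `summarize` are hypothetical, and the zone labels and counts are invented for the example rather than taken from any real data set.

```python
from dataclasses import dataclass


@dataclass
class ZoneCounts:
    """Raw counts for one geographic zone in one calendar year (hypothetical structure)."""
    zone: str
    immunized_dtp3: int     # numerator: infants immunized with DTP3 by 12 months
    surviving_infants: int  # denominator: surviving infants under 12 months


def coverage_percent(immunized: int, surviving: int) -> float:
    """Return coverage as a percentage: 100 * numerator / denominator."""
    if surviving <= 0:
        raise ValueError("denominator (surviving infants) must be positive")
    return 100.0 * immunized / surviving


def summarize(zones: list[ZoneCounts]) -> dict[str, float]:
    """Coverage disaggregated by zone, plus an overall figure.

    The overall figure divides summed numerators by summed denominators,
    rather than averaging the per-zone percentages.
    """
    result = {
        z.zone: coverage_percent(z.immunized_dtp3, z.surviving_infants)
        for z in zones
    }
    total_immunized = sum(z.immunized_dtp3 for z in zones)
    total_surviving = sum(z.surviving_infants for z in zones)
    result["All zones"] = coverage_percent(total_immunized, total_surviving)
    return result


# Invented counts for four target zones, for illustration only.
zones = [
    ZoneCounts("Zone A", 300, 1000),
    ZoneCounts("Zone B", 450, 1500),
    ZoneCounts("Zone C", 200, 800),
    ZoneCounts("Zone D", 250, 700),
]

coverage = summarize(zones)
for name, pct in coverage.items():
    print(f"{name}: {pct:.1f}%")
```

Pooling the counts before dividing keeps the overall value consistent with the precise definition, since a simple average of zone percentages would weight small and large zones equally.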