MS_Project_Proposal_Adipudi(050607)


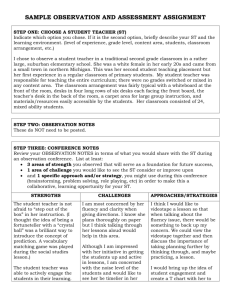

ACCELERATING RANKING-SYSTEM USING WEBGRAPH
MASTER PROJECT PROPOSAL

Padmaja Adipudi
Computer Science Department
University of Colorado at Colorado Springs
6th May 2007

Approved by:

Dr. Jugal Kalita ________________________________ (Advisor)

Dr. Edward Chow ________________________________

Dr. Tim Chamillard ________________________________

1. Introduction

Search engine technology was born at almost the same time as the World Wide Web [9]. The Web is potentially a terrific place to get information on almost any topic. Doing research without leaving your desk sounds like a great idea, but all too often you end up wasting precious time chasing down useless URLs if the search engine is not designed properly.

The basic components of a search engine are a Web Crawler, a Parser, a Page-Rank System, a Repository and a Front-End [4]. In a nutshell, a search engine operates as follows. The Web Crawler fetches pages from the Web. The Parser takes all the downloaded raw results, analyzes them and tries to make sense of them. Finally, the Page-Rank system finds the important pages and lists the results in order of relevance and importance. Designing a search engine is difficult because of the dynamic nature of the World Wide Web and the sheer volume of data on it.

In short, a page's Page-Rank is a "vote" by all the other pages on the Web about how important that page is. The importance of a Web page is an inherently subjective matter. It depends on the reader's interests, knowledge and attitudes. But there is still much that can be said objectively about the relative importance of Web pages. The Page-Rank system provides a method for rating Web pages objectively and mechanically, effectively measuring the human interest and attention devoted to them.
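The "vote" analogy corresponds to the PageRank recurrence of [6], which is commonly written in the normalized form below. The symbols are our notation. N is the number of pages, B(p) is the set of pages that link to page p, L(q) is the number of out-links of page q, and d is a damping factor, often set to 0.85.

```latex
PR(p) = \frac{1 - d}{N} + d \sum_{q \in B(p)} \frac{PR(q)}{L(q)}
```

Each page q splits its own score evenly among the pages it links to. A link from an important page with few out-links therefore counts for more than a link from an obscure page with many. Because PR appears on both sides of the equation, the scores are computed iteratively until they stop changing.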
Yi Zhang [4], a former Master's student at UCCS, implemented a search engine called Needle (http://128.198.144.16/cgi-bin/search.pl). Needle uses a Cluster Ranking algorithm that is similar to Google's PageRank algorithm [6]. The current implementation takes a little over three hours to rank 300,000 URLs. The goal of this project is to accelerate the existing Page-Rank system, and so make the Needle Search Engine [4] more efficient, by using a package called "WebGraph" [1]. This package is written in Java and consists of tools and algorithms that provide simple methods for managing very large graphs by exploiting modern compression techniques. WebGraph provides only a highly efficient representation of large, sparsely connected graphs, so the PageRank calculation must be implemented separately. Using the compressed graph representation, we intend to compare Yi's ClusterRank algorithm [4] with the SourceRank [10] and Truncated PageRank [11] algorithms for Page-Rank calculation.

2. Background Research

The dramatic growth of the World Wide Web is forcing modern search engines to become more efficient, and research is being done to improve the existing technology. Page-Rank is a system of scoring nodes in a directed graph based on the stationary distribution of a random walk on that graph [4]. Conceptually, the score of a node corresponds to the frequency with which the node is visited as an individual strolls randomly through the graph. Much research has emerged on efficiently computing the stationary distributions of Web-scale Markov chains, the mathematical mechanism underlying Page-Rank. This research was motivated largely by the success and scale of Google's PageRank ranking function. The main challenge is that the Web graph is so large that its edges typically exist only in external memory. An explicit representation of its stationary distribution just barely fits into main memory.

In "SimRank: A Measure of Structural-Context Similarity" [3], G. Jeh and J. Widom propose an approach that complements domain-specific measures such as text matching. It applies in any domain with object-to-object relationships and measures the similarity of the structural context in which objects occur, based on their relationships with other objects. Effectively, they compute a measure that says "two objects are similar if they are related to similar objects." This general similarity measure, called SimRank, is based on a simple and intuitive graph-theoretic model.

The main advantage of Google's PageRank measure is that it is independent of the query posed by the user. It can therefore be pre-computed and then used to optimize the layout of the inverted index structure. However, computing PageRank requires an iterative process on a massive graph corresponding to billions of Web pages and hyperlinks. Yen-Yu Chen, Qingqing Gan and Torsten Suel [2] propose efficient techniques for performing this iterative computation. They derive two algorithms for Page-Rank and compare them with two existing algorithms proposed by Haveliwala (as cited in [2]), reporting impressive results.

Most analyses of the World Wide Web have focused on its connectivity or graph structure [5]. However, understanding WWW traffic is even more important for understanding the net effect of the multitude of Web "surfers" and the commercial potential of the Web. Tomlin [5] describes a method for modeling and projecting such traffic when the appropriate data are available. He also proposes measures for ranking the "importance" of Web sites. Such information has considerable bearing on the commercial value of many Web sites.

In [6], Lawrence Page, Sergey Brin, Rajeev Motwani and Terry Winograd take advantage of the link structure of the Web to produce a global "importance" ranking of every Web page.
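The random-walk view of Page-Rank described above can be sketched in a few lines of Java, the language of WebGraph and of the planned Page-Rank modules. This is a minimal power-iteration sketch, not the ClusterRank algorithm of [4], and it rests on several assumptions. The graph is held as a plain in-memory array of successor lists rather than a WebGraph graph object. The score of dangling pages (pages with no out-links) is spread uniformly over all pages. The class and method names are hypothetical.

```java
import java.util.Arrays;

/** Power-iteration PageRank over in-memory successor lists (illustrative sketch, hypothetical names). */
class PowerIterationPageRank {

    /**
     * @param successors successors[u] lists the pages that page u links to (node ids 0..n-1)
     * @param d          damping factor, e.g. 0.85
     * @param tol        stop once the L1 change between two iterations is below this value
     * @param maxIter    upper bound on the number of iterations
     * @return approximate stationary distribution of the random surfer (sums to 1)
     */
    static double[] rank(int[][] successors, double d, double tol, int maxIter) {
        int n = successors.length;
        double[] pr = new double[n];
        Arrays.fill(pr, 1.0 / n);                      // start from the uniform distribution
        for (int iter = 0; iter < maxIter; iter++) {
            double[] next = new double[n];
            double danglingMass = 0.0;
            for (int u = 0; u < n; u++) {
                int outDeg = successors[u].length;
                if (outDeg == 0) {
                    danglingMass += pr[u];             // page without out-links
                    continue;
                }
                double share = pr[u] / outDeg;         // u "votes" equally for each page it links to
                for (int v : successors[u]) {
                    next[v] += share;
                }
            }
            // Random jump with probability 1 - d, plus dangling mass spread uniformly.
            double base = (1.0 - d) / n + d * danglingMass / n;
            double delta = 0.0;
            for (int v = 0; v < n; v++) {
                next[v] = base + d * next[v];
                delta += Math.abs(next[v] - pr[v]);
            }
            pr = next;
            if (delta < tol) {
                break;
            }
        }
        return pr;
    }

    public static void main(String[] args) {
        int[][] graph = { {1, 2}, {2}, {0} };          // hypothetical: 0->1, 0->2, 1->2, 2->0
        System.out.println(Arrays.toString(rank(graph, 0.85, 1e-10, 1000)));
    }
}
```

Each iteration makes one pass over every arc, so its cost is dominated by how quickly the successor lists can be scanned. Keeping a compressed graph in main memory, as WebGraph does, targets exactly this inner loop.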
The ranking proposed in [6], called PageRank, helps search engines and users quickly make sense of the vast heterogeneity of the World Wide Web. A page's rank is based on the importance of its parent pages, that is, the pages that link to it.

In [7], Ricardo Baeza-Yates, Paolo Boldi and Carlos Castillo introduce a family of link-based ranking algorithms that propagate page importance through links. In these algorithms, a damping function decreases with distance, so a direct link implies more endorsement than a link through a long path. PageRank is the most widely known ranking function of this family. Their main objective is to determine whether this family of ranking techniques is of interest in itself. They also study how different choices of damping function affect rank quality and convergence speed.

The need to run different kinds of algorithms over large Web graphs motivates research on compressed graph representations that can be accessed without decompressing them [8]. A few such compression proposals currently exist, and some of them are very efficient in practice.

Studying the Web graph is often difficult due to its large size [1]. It currently contains some 3 billion nodes and more than 50 billion arcs. Several recent proposals describe techniques that store a Web graph in memory in limited space by exploiting the inner redundancies of the Web. The WebGraph framework [1] is a suite of codes, algorithms and tools that aims to make it easy to manipulate large Web graphs. WebGraph can compress the WebBase graph [12] (118 million nodes, 1 billion links) in as little as 3.08 bits per link, and its transposed version in as little as 2.89 bits per link.
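A back-of-the-envelope calculation shows why these figures matter. At 3.08 bits per link, roughly 10^9 links occupy about 3.1 × 10^9 bits, or under 400 MB. Storing each link as a plain 32-bit integer would take about 4 GB. Part of the saving comes from the fact that successor lists are sorted and links tend to be local, so the gaps between consecutive successors are often small and can be written with variable-length codes.

The Java sketch below illustrates only that gap-encoding idea. It uses Elias γ codes and, for readability, a String of '0'/'1' characters. It is not WebGraph's actual format, which additionally uses reference copying, intervals and ζ codes [1]. The class, its method names and the example list are hypothetical.

```java
import java.util.Arrays;

/** Gap + Elias-gamma coding of one sorted successor list (illustration only; not WebGraph's format). */
class GapGammaSketch {

    /** Appends the Elias gamma code of x (x >= 1): (len - 1) zeros, then x in binary. */
    static void gamma(long x, StringBuilder bits) {
        int len = 64 - Long.numberOfLeadingZeros(x);       // number of binary digits of x
        for (int i = 1; i < len; i++) {
            bits.append('0');
        }
        for (int i = len - 1; i >= 0; i--) {
            bits.append(((x >>> i) & 1L) == 1L ? '1' : '0');
        }
    }

    /** Reads one gamma-coded value starting at pos[0] and advances pos[0] past it. */
    static long readGamma(String bits, int[] pos) {
        int zeros = 0;
        while (bits.charAt(pos[0]) == '0') {
            zeros++;
            pos[0]++;
        }
        long x = 0;
        for (int i = 0; i <= zeros; i++) {
            x = (x << 1) | (bits.charAt(pos[0]++) - '0');
        }
        return x;
    }

    /** Encodes the sorted, duplicate-free successor list of `node`. */
    static String encode(int node, int[] succ) {
        StringBuilder bits = new StringBuilder();
        gamma(succ.length + 1L, bits);                      // outdegree, shifted by 1 so 0 is codable
        if (succ.length == 0) {
            return bits.toString();
        }
        long first = succ[0] - (long) node;                 // first successor relative to the node itself
        long zigzag = first >= 0 ? 2 * first : -2 * first - 1;   // map signed value to 0, 1, 2, ...
        gamma(zigzag + 1, bits);
        for (int i = 1; i < succ.length; i++) {
            gamma(succ[i] - succ[i - 1], bits);             // gaps are >= 1 in a sorted, distinct list
        }
        return bits.toString();
    }

    /** Inverts encode(node, succ). */
    static int[] decode(int node, String bits) {
        int[] pos = {0};
        int outDeg = (int) readGamma(bits, pos) - 1;
        int[] succ = new int[outDeg];
        if (outDeg == 0) {
            return succ;
        }
        long z = readGamma(bits, pos) - 1;
        long first = (z % 2 == 0) ? z / 2 : -(z + 1) / 2;
        succ[0] = (int) (node + first);
        for (int i = 1; i < outDeg; i++) {
            succ[i] = succ[i - 1] + (int) readGamma(bits, pos);
        }
        return succ;
    }

    public static void main(String[] args) {
        int node = 15;
        int[] succ = {13, 15, 16, 17, 18, 19, 23, 24, 203, 315, 1034};   // hypothetical successor list
        String bits = encode(node, succ);
        System.out.printf("%d links, %d bits, %.2f bits/link%n",
                succ.length, bits.length(), (double) bits.length() / succ.length);
        System.out.println("round trip ok: " + Arrays.equals(succ, decode(node, bits)));
    }
}
```

For the list in `main`, the encoding takes 72 bits for 11 links (about 6.5 bits per link), compared with 352 bits for plain 32-bit integers. Most of the cost comes from the three large gaps, which is where WebGraph's more elaborate techniques help further.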
The WebGraph framework consists of:
- a set of flat codes suitable for storing Web graphs (or, in general, integers with a power-law distribution in a certain exponent range);
- compression algorithms that provide a high compression ratio;
- algorithms for accessing a compressed graph without actually decompressing it (decompression is delayed until it is actually necessary);
- documentation and data sets.

3. Project Scope

The scope of the project is to accelerate the Page-Ranking system of the Needle Search Engine [4] at UCCS. This will be accomplished by comparing three algorithms, ClusterRank [4], SourceRank [10] and Truncated PageRank [11], and choosing the best one for the Needle Search Engine [4].

The first Page-Rank system is Yi Zhang's existing ClusterRank system [4]. This system has been used to calculate Page-Rank for only 300,000 Web pages in .edu domains, starting with those of UCCS. We want to apply the accelerated Page-Rank calculation to 5 million or more pages and make the resulting rankings available on the Needle Search Engine.

The second is a new ranking system based on SourceRank [10], a Link-Based Ranking of the Web with Source-Centric Collaboration. It uses a better page-ranking algorithm than the one in the existing ranking system.

The third is a new ranking system based on the Truncated PageRank algorithm [11]. In PageRank [6], a Web page can gain a high score from supporters (pages linking to it) that are topologically "close" to the target node. Truncated PageRank is similar to PageRank, except that supporters that are too "close" to a target node do not contribute to its ranking. Such close supporters are usually spammers.

The Page-Rank computation will be accelerated by using the WebGraph package, whose compression techniques represent the Web graph compactly.
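The Java sketch below makes the truncation rule of Truncated PageRank concrete. It follows the path-summation view of PageRank used in [7] and [11], where a page's score is a sum of contributions from supporters at each distance t. Truncated PageRank simply drops the terms with t ≤ T.

Several details are assumptions rather than choices taken from [11]:
- the initial mass (1 - α)/N of standard PageRank;
- cutting the infinite sum off at a fixed maxT;
- discarding the score held by dangling pages, with no renormalization;
- the class and method names, which are hypothetical.

Reference [11] gives the authoritative definition and constants.

```java
import java.util.Arrays;

/** Truncated PageRank by path summation (illustrative sketch, hypothetical names). */
class TruncatedPageRankSketch {

    /**
     * @param successors successors[u] lists the pages that page u links to
     * @param alpha      damping factor, e.g. 0.85
     * @param T          contributions from supporters at distance <= T are discarded
     * @param maxT       largest path length summed; truncates the infinite series
     */
    static double[] rank(int[][] successors, double alpha, int T, int maxT) {
        int n = successors.length;
        double[] level = new double[n];
        Arrays.fill(level, (1.0 - alpha) / n);      // R(0): the same initial mass on every page
        double[] score = new double[n];
        for (int t = 1; t <= maxT; t++) {
            double[] next = new double[n];
            for (int u = 0; u < n; u++) {
                int outDeg = successors[u].length;
                if (outDeg == 0) {
                    continue;                       // simplification: mass at dangling pages is dropped
                }
                double share = alpha * level[u] / outDeg;
                for (int v : successors[u]) {
                    next[v] += share;
                }
            }
            level = next;                           // level now holds R(t) = alpha * R(t-1) * P
            if (t > T) {                            // only supporters farther than T links away count
                for (int v = 0; v < n; v++) {
                    score[v] += level[v];
                }
            }
        }
        return score;
    }

    public static void main(String[] args) {
        // Hypothetical link farm: pages 1, 2 and 3 each link only to page 0.
        int[][] farm = { {}, {0}, {0}, {0} };
        System.out.println(rank(farm, 0.85, 0, 20)[0]);   // T = 0: the three direct supporters count
        System.out.println(rank(farm, 0.85, 1, 20)[0]);   // T = 1: they are ignored, so this prints 0.0
    }
}
```

With T = 0 and no dangling pages, adding back the R(0) term (1 - α)/N recovers ordinary PageRank. This gives a convenient sanity check against a plain PageRank implementation.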
One feature of the WebGraph compression format is that it is designed to compress efficiently not only the Web graph but also its transposed graph, that is, a graph with the same nodes but with the direction of all arcs reversed. A compact representation of the transposed graph is essential in the study of several advanced ranking algorithms.

The criterion for success of this project is to accelerate the ranking system of the Needle Search Engine. The existing ranking system should compute Page-Rank faster once WebGraph is applied. In addition, after the package is applied, the new link-based ranking systems should perform even better than the existing ranking system. This is because they combine a better page-ranking algorithm with the acceleration provided by the WebGraph package.

4. System Design

The basic components of the Needle Search Engine [4] are the crawler, parser, page ranking system, repository system and front-end, as shown in its basic architecture (Figure 1). Flexibility and scalability were the two most important goals in the design of the Needle Search Engine, so all modules are self-contained and operate independently. The existing ranking system of the Needle Search Engine can therefore be replaced with a better ranking system without affecting the other modules. With the faster ranking system, the number of pages covered by the Needle Search Engine will be increased substantially.

Figure 1. Basic Architecture of the Needle Search Engine

5. Milestones

| S.No | Action Item | Target Date of Completion |
|------|-------------|---------------------------|
| 1 | Understand the low-level design and implementation of the existing Needle search engine. | May 15 |
| 2 | Perform a more detailed analysis of the existing ranking system. Run tests to establish the current quality of the existing Page-Rank system, and record the results for comparison with those of the new Page-Rank system at the end of this project. | May 22 |
| 3 | Understand the implementation details of the WebGraph package. | May 25 |
| 4 | Use the WebGraph package to compress the Web graph. | May 28 |
| 5 | Calculate the Page-Rank for the currently existing Web pages using the existing ranking algorithm after compressing the graph (develop a Java module for Page-Rank). | June 10 |
| 6 | Calculate the Page-Rank for the currently existing Web pages using the new algorithms after compressing the graph (develop a Java module for Page-Rank). | July 15 |
| 7 | Compare the new results with the previously recorded results to show how the compression technique of the WebGraph package helped accelerate the Page-Rank system. | July 22 |

6. Schedule

May 2007: Topic scope; completion of milestones 1, 2 and 3.
June 2007: Completion of milestones 4 and 5.
July 2007: Completion of milestones 6 and 7; completion of the project with report.

7. References

[1] Paolo Boldi, Sebastiano Vigna. The WebGraph Framework I: Compression Techniques. In Proceedings of the 13th International World Wide Web Conference (New York), pages 595-602, ACM Press, 2004. http://www2004.org/proceedings/docs/1p595.pdf
[2] Yen-Yu Chen, Qingqing Gan, Torsten Suel. I/O-Efficient Techniques for Computing PageRank. In Proceedings of the Eleventh ACM Conference on Information and Knowledge Management (CIKM), pages 549-557, 2002. http://cis.poly.edu/suel/papers/pagerank.pdf
[3] G. Jeh, J. Widom. SimRank: A Measure of Structural-Context Similarity. In Proceedings of the 8th ACM International Conference on Knowledge Discovery and Data Mining (SIGKDD), pages 538-543, 2002. http://www-cs-students.stanford.edu/~glenj/simrank.pdf
[4] Yi Zhang. Design and Implementation of a Search Engine with the Cluster Rank Algorithm. Master's Thesis, Computer Science, UCCS, 2006.
[5] John A. Tomlin. A New Paradigm for Ranking Pages on the World Wide Web. In Proceedings of WWW 2003, pages 350-355, 2003. http://www2003.org/cdrom/papers/refereed/p042/paper42_html/p42-tomlin.htm
[6] Lawrence Page, Sergey Brin, Rajeev Motwani, Terry Winograd. The PageRank Citation Ranking: Bringing Order to the Web. 1999. http://www.cs.huji.ac.il/~csip/1999-66.pdf
[7] Ricardo Baeza-Yates, Paolo Boldi, Carlos Castillo. Generalizing PageRank: Damping Functions for Link-Based Ranking Algorithms. In Proceedings of the 29th Annual International ACM SIGIR Conference, pages 308-315, ACM Press, 2006. http://www.dcc.uchile.cl/~ccastill/papers/baeza06_general_pagerank_damping_functions_link_ranking.pdf
[8] Gonzalo Navarro. Compressing Web Graphs like Texts. 2007. ftp://ftp.dcc.uchile.cl/pub/users/gnavarro/graphcompression.ps.gz
[9] The Spider's Apprentice. 2004. http://www.monash.com/spidap1.html
[10] James Caverlee, Ling Liu, S. Webb. Spam-Resilient Web Ranking via Influence Throttling. In Proceedings of the 21st IEEE International Parallel and Distributed Processing Symposium (IPDPS), Long Beach, 2007. http://www-static.cc.gatech.edu/~caverlee/pubs/caverlee07ipdps.pdf
[11] L. Becchetti, C. Castillo, D. Donato, S. Leonardi, R. Baeza-Yates. Using Rank Propagation and Probabilistic Counting for Link-Based Spam Detection. Technical report, 2006. http://www.dcc.uchile.cl/~ccastill/papers/becchetti_06_automatic_link_spam_detection_rank_propagation.pdf
[12] Jun Hirai, Sriram Raghavan, Hector Garcia-Molina, Andreas Paepcke. WebBase: A Repository of Web Pages. In Proceedings of WWW9, Amsterdam, The Netherlands, 2000.