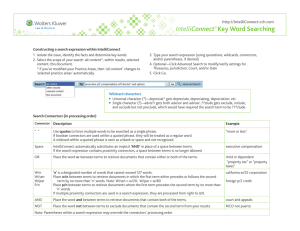

Freie Universität Berlin, Institut für Informatik

WS 2001/02

Construction and use of thesauri for

information retrieval

Author: Manuel Freire Morán

Construction and use of thesauri for information retrieval


Index

1. Introduction

1.1. What is a thesaurus

1.2. Applications of thesauri in IR

2. Basic thesaurus structure

2.1. Coordination: construction of phrases, size, and precision

2.2. Relationships: theoretical classification, routinely ignored in IR

2.3. Normalization: reduction of duplicate forms to “base form”

3. Automated construction

3.1. Manual vs. Automatic thesauri for IR

3.2. Techniques for automated thesauri generation

3.3. Problems of automated thesauri generation

4. PhraseFinder

4.1. Architecture

4.2. Access to the thesaurus and query expansion

4.3. Some results

5. Bibliography

1. Introduction

This document describes the application of thesauri to information retrieval (IR) systems, underlining the differences between manual thesauri and automatically generated ones. The focus is on the creation and later use of the machine-generated sort, including the methods involved and the pitfalls encountered.

I will also explain the structure of an existing system, PhraseFinder, and the decisions involved in its design.

A thesaurus is, according to the Merriam-Webster dictionary:

“a: book of words or of information about a particular field or set of concepts; especially : a book of words and their synonyms

b: a list of subject headings or descriptors usually with a cross-reference system for use in the organization of a collection of documents for reference and retrieval”

[source: http://www.m-w.com]

This definition emphasizes the difference between the “thesaurus” used by a creative author and the one used in conjunction with information retrieval (IR) systems. A writer’s thesaurus contains synonyms and related phrases that allow authors to enrich their vocabulary. For example, Merriam-Webster’s thesaurus offers the following entries for “fish”:


Entry Word: fish

Function: noun

Text: 1

Synonyms FOOL 3, butt, chump, dupe, fall guy, gudgeon, gull, pigeon, sap, sucker

|| 2

Synonyms DOLLAR, bill, ||bone, ||buck, ||frogskin, ||iron man, one, ||skin, ||smacker, ||smackeroo

[source: http://www.m-w.com]

The aims of an IR-oriented thesaurus are completely different. Consider, for example, this excerpt from the INSPEC Thesaurus (built to assist IR in the fields of physics, electrical engineering, electronics, computers and control):

THESAURUS search words: natural languages

UF natural language processing (UF=used for natural language processing)

BT languages (BT=broader term is languages)

TT languages (TT=top term in a hierarchy of terms)

RT artificial intelligence (RT=related term/s)

computational linguistic

formal languages

programming languages

query languages

specification languages

speech recognition

user interfaces

CC C4210L; C6140D; C6180N; C7820(CC=classification code)

DI January 1985(DI=date [1985])

PT high level languages (PT=prior term to natural languages)

[source: http://www.cs.ucla.edu/Leap/Eisa/inspec.htm]

This is still a manually generated thesaurus (more on this later), but the differences are already apparent: its objective is no longer to provide a better, richer vocabulary to a writer. Instead, it aims to:

- Assist indexing by providing a common, precise and controlled vocabulary. For example, libraries commonly use a similar hierarchy to classify their books.

- Assist the user in developing search strategies. The user can browse through the thesaurus in search of the most appropriate terms for a particular query.

- Refine a query, either by

o Query expansion: reformulating it with broader terms, useful when a query has returned too few relevant results.

o Query contraction: reformulating it with narrower terms, useful when a query has returned too many irrelevant results.
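The query refinement described above can be sketched in a few lines of Python. This is only an illustration: the `THESAURUS` dictionary, its entries (loosely modeled on the INSPEC excerpt) and the function names `reformulate`, `expand` and `contract` are assumptions made for this example, not part of any real system.

```python
# Toy thesaurus: each term maps to its broader (BT) and narrower (NT) terms.
# The entries are illustrative only, loosely modeled on the INSPEC excerpt.
THESAURUS = {
    "natural languages": {"BT": ["languages"], "NT": []},
    "programming languages": {"BT": ["languages"], "NT": []},
    "languages": {"BT": [], "NT": ["natural languages", "programming languages"]},
}


def reformulate(query_terms, relation):
    """Replace each query term by its related terms for the given relation.

    Terms that have no related terms for that relation are kept unchanged.
    """
    result = []
    for term in query_terms:
        related = THESAURUS.get(term, {}).get(relation, [])
        for candidate in (related or [term]):
            if candidate not in result:
                result.append(candidate)
    return result


def expand(query_terms):
    """Query expansion: reformulate with broader terms."""
    return reformulate(query_terms, "BT")


def contract(query_terms):
    """Query contraction: reformulate with narrower terms."""
    return reformulate(query_terms, "NT")
```

For instance, `expand(["natural languages"])` yields the broader term `languages`, while `contract(["languages"])` yields its two narrower terms.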


2. Thesaurus structure

A thesaurus, as used for IR, is a collection of terms or phrases and the relationships between them. The basic design decisions are described below.

2.1 Level of coordination

The level of coordination determines what the thesaurus considers to be a term or phrase. High coordination seeks to build longer phrases, which produces a much more specific thesaurus. The problem is that too much specificity is not useful (if we knew “exactly” what we were looking for, we would not need a thesaurus). Too much coordination also burdens the user, who must know the exact rules the system uses to construct the long phrase he or she is looking for. On the practical side, constructing these phrases automatically is difficult.

Minimum coordination means using only single terms as phrases. This is not optimal either: for example, it misses the distinction between “school library” and “library school”, and thus mixes totally separate concepts.

In a nutshell:

| Coordination level | + | - |
| --- | --- | --- |
| High | Greater specificity; can be used for indexing; high-frequency terms can be included into phrases to make them more specific | Hard to build automatically; user must be familiar with phrase-building rules |
| Low | Easy to build; easy to search with (user need not worry about term ordering) | Less specific; only good for retrieval (bad indexing capabilities) |

2.2 Relationships

One of several taxonomies for relationships between thesaural terms is the following:

- Part – Whole: element - set

- Collocation: words that frequently come together

- Paradigmatic: words with similar semantic core (lunar – moon)

- Taxonomy and Synonymy: same meaning, different levels of specificity

- Antonymy: opposite (in some sense) meaning

However, these relationships are not easily found during automatic thesaurus generation, as they require a great deal of “semantic” knowledge that is not easy to capture from the documents alone. Instead, automatic thesauri use a single, general-purpose “associated with” relation.

2.3 Normalization

Manual thesauri use a very complex set of rules (few adjectives, strip some prepositions, noun form, capitalization) to achieve vocabulary “normalization”: only the “base form” of each term is stored, instead of all its variants. Normalization can be critical to reduce the amount of storage space needed. The problem with such complex normalization is that the user must know the normalized form in order to use the thesaurus.

In automatic thesauri, a simpler (but less precise) approach is usually taken:

- Apply a stoplist filter

- Use a standard stemmer on the remaining words (e.g., Porter)
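The two normalization steps above can be sketched as follows. Note the assumptions: `STOPLIST` is a tiny hypothetical stoplist, and `toy_stem` is a crude suffix stripper that merely stands in for a real stemmer; it is not the Porter algorithm.

```python
import re

# Hypothetical, tiny stoplist; real systems use lists with hundreds of entries.
STOPLIST = {"a", "an", "and", "the", "of", "in", "for", "to", "is", "are"}

# Crude suffix stripping, standing in for a real stemmer such as Porter's.
# Longer suffixes are listed first so that they are tried first.
SUFFIXES = ("ations", "ation", "ings", "ing", "ies", "es", "s")


def toy_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def normalize(text):
    """Lowercase, tokenize, drop stoplist words and stem the remainder."""
    words = re.findall(r"[a-z]+", text.lower())
    return [toy_stem(word) for word in words if word not in STOPLIST]
```

With this sketch, `normalize("The indexing of libraries")` keeps only the stems of “indexing” and “libraries”. The stems need not be real words; they only have to be the same for all variants of a term.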


The opposite problem (a single word with multiple meanings) arises with “homographs”. Manual thesauri can handle homographs via parenthetical qualifiers (in INSPEC, the terms “bond(chemical)” and “bond(cohesive)”). This is much harder in automatically generated thesauri, as the meaning can only be inferred from the term’s context.

3. Automated thesauri

3.1 Manual vs. automatic thesauri for IR

This section deals with the differences between manually and automatically generated thesauri for IR. The following comparison covers structure, goal, construction and verification.

Structure

Manual:
- Hierarchy of thesaural terms
- High level of coordination
- Many types of relations between terms
- Complex normalization rules
- Field limits are specified by the creators

Automatic:
- Many different approaches, but not always hierarchical
- Lower level of coordination (phrase selection is not easy to do)
- Simple normalization rules; hard to separate homographs
- Field limits are specified by the collection

Goal

Manual:
- Main goal is to precisely define the vocabulary to be used in a technical field
- Due to this precise definition, useful for indexing documents
- Assistance in developing a search strategy
- Assistance in retrieval through query expansion/contraction

Automatic:
- Depending on the level of coordination, can be used for indexing
- Main use is to assist in retrieval through (possibly automated) query expansion/contraction

Construction

Manual:
1. Define the boundaries of the field and subdivide it into areas
2. Fix characteristics
3. Collect term definitions from a variety of sources (including encyclopaedias, expert advice, …)
4. Analyze the data and set up relationships. From these, a hierarchy should arise
5. Evaluate consistency, incorporate new terms or change relationships [3, 4]
6. Create an inverted form, and release the thesaurus
7. Periodical updates

Automatic:
1. Identify the collection to be used
2. Fix characteristics (fewer degrees of freedom here)
3. Select and normalize terms, construct phrases
4. Statistical analysis to find relationships (only one kind)
5. If desired, organize as a hierarchy

Verification

Manual: Soundness and coverage of concept classification

Automatic: Ability to improve retrieval performance


3.2 Techniques for automatic thesaurus generation

We will now sample some techniques used in automated thesaurus construction. Note that there are other approaches to this problem that do not involve statistical analysis of a document collection, for example:

- Automatically merging existing thesauri to produce a combined one

- Using an expert system in conjunction with a retrieval engine to learn, through user feedback, the necessary thesaural associations.

Some techniques for term selection

Terms can be extracted from the title, the abstract, or the full text (if it is available). The first step is usually to normalize the terms with a stopword filter and a stemmer.

Afterwards, “relevant” terms are determined, for example via one of the following techniques:

- By frequency of occurrence:

o Terms can be classified into high, middle and low frequency:

* High-frequency terms are often too general to be of interest (although they can sometimes be combined with others to build a less general phrase).

* Low-frequency terms will be too specific, and will probably lack the necessary relationships to be of interest.

* Middle-frequency terms are usually the best ones to keep.

o The thresholds must be specified manually.

- By discrimination value:

o DV(k) = (average similarity without term k) - (average similarity with term k)

o The similarity between documents can be any appropriate measure (usually that of the vector-space model).

o The average similarity in a collection can be calculated via the “method of centroids”:

* Calculate the average vector-space vector (the “centroid”) of the whole collection.

* Approximate the average similarity between documents by their average similarity to this centroid.

o If DV(k) > 0, k is a good discriminator: without k the documents look more alike, that is, the whole collection is less specific than before.

- By statistical distribution:

o Trivial words obey a single-Poisson distribution.

o Therefore, term “relevance” can be computed by comparing its distribution to the single-Poisson one.

o This can be done via a chi-square test.
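As an illustration of the discrimination value, here is a minimal Python sketch using the method of centroids. It assumes that documents are represented as dictionaries of term weights, that similarity is the cosine measure, and that DV(k) is the average similarity without term k minus the average similarity with it (so that good discriminators get a positive value). The function names and the toy collection `docs` are made up for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two sparse vectors (dicts of term weights)."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(weight * weight for weight in u.values()))
    norm_v = math.sqrt(sum(weight * weight for weight in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def centroid(docs):
    """Average vector of the whole collection."""
    total = {}
    for doc in docs:
        for term, weight in doc.items():
            total[term] = total.get(term, 0.0) + weight
    return {term: weight / len(docs) for term, weight in total.items()}


def average_similarity(docs):
    """Method of centroids: average similarity of each document to the centroid."""
    center = centroid(docs)
    return sum(cosine(doc, center) for doc in docs) / len(docs)


def discrimination_value(docs, term):
    """Average similarity without the term minus average similarity with it."""
    without = [{t: w for t, w in doc.items() if t != term} for doc in docs]
    return average_similarity(without) - average_similarity(docs)


# Toy collection: "thesaurus" occurs everywhere, "physics" in one document only.
docs = [
    {"thesaurus": 1, "retrieval": 1, "physics": 2},
    {"thesaurus": 1, "retrieval": 1, "chemistry": 2},
    {"thesaurus": 1, "retrieval": 1, "biology": 2},
]
```

In this toy collection, `discrimination_value(docs, "physics")` is positive (removing the term makes the documents look more alike), while `discrimination_value(docs, "thesaurus")` is negative, since a term shared by every document does not help to tell them apart.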

Statistical selection of phrases

A simple approach to phrase construction and selection is based on the following principles:

- Phrase terms must frequently occur together (with fewer than k words in between them)

- Phrase components must be relatively frequent


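The resulting algorithm reads:

o Compute the pair-wise co-occurrence frequency within the constraints above

o If it is greater than a threshold value, compute the cohesion value of the pair:

$$\mathrm{cohesionValue}(t_i, t_j) = \frac{\mathrm{cooccurrenceFreq}(t_i, t_j)}{\mathrm{freq}(t_i)\,\mathrm{freq}(t_j)}$$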
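A minimal Python sketch of this phrase-selection step follows. It assumes that the input is a list of already normalized tokens, that pairs are ordered (so “school library” and “library school” stay separate), and that the cohesion value is the co-occurrence frequency divided by the product of the two term frequencies. The parameter names `max_gap` and `cooccurrence_threshold` are hypothetical.

```python
from collections import Counter


def cohesion_values(tokens, max_gap=1, cooccurrence_threshold=1):
    """Return the cohesion value of each frequently co-occurring ordered pair.

    Two tokens co-occur when fewer than max_gap words lie between them.
    Only pairs whose co-occurrence frequency exceeds the threshold are kept.
    """
    freq = Counter(tokens)
    cooccurrence = Counter()
    for i, first in enumerate(tokens):
        # Followers with fewer than max_gap words in between.
        for second in tokens[i + 1 : i + 1 + max_gap]:
            cooccurrence[(first, second)] += 1
    return {
        pair: count / (freq[pair[0]] * freq[pair[1]])
        for pair, count in cooccurrence.items()
        if count > cooccurrence_threshold
    }


tokens = "information retrieval systems use information retrieval thesauri".split()
```

Here `cohesion_values(tokens)` keeps only the pair (“information”, “retrieval”), which occurs twice; each component also occurs twice, so its cohesion value is 2 / (2 × 2) = 0.5.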

3.3 Problems of automated thesaurus construction

A purely statistical analysis cannot be expected to find the exact type of semantic relationship between terms. This is closely tied to the problem of natural language processing (NLP), a promising field of research that involves both artificial intelligence and linguistics.

A simple modification that can provide non-statistical insight into semantics is to distinguish parts of speech. This implies “tagging” each term with its corresponding type (verb, noun, adjective, …). A program capable of doing this is called a “tagger”.

Although we have seen only the statistical approach, it is possible to either bypass it or complement it with external relevance judgements. For example, given a query that divides all documents into either “relevant” or “irrelevant”, a thesaural term that appears in only one of the classes is better for that specific query than one that appears in both. Many such approaches have been proposed and tested, mostly with good results.

The problem with relevance judgements is their availability: as yet, only humans can produce

them, and therefore they are scarce for most collections. A truly automatically generated

thesaurus should not depend on such judgements.

Another problem in automated thesaurus construction is verification: how can the performance of a thesaurus be tested? Usually, the ability of a thesaurus to retrieve more relevant documents during a search is used as an indicator of its quality.

4. PhraseFinder

PhraseFinder is an automatically generated thesaurus that is integrated within a retrieval

engine, InQuery. InQuery is part of the TIPSTER project in the Information Retrieval

Laboratory of the Computer Science Department, University of Massachusetts, Amherst.

About TIPSTER:

The TIPSTER Text Program was a Defense Advanced Research Projects Agency (DARPA)

led government effort to advance the state of the art in text processing technologies through

the cooperation of researchers and developers in Government, industry and academia. The

resulting capabilities were deployed within the intelligence community to provide analysts with

improved operational tools. Due to lack of funding, this program formally ended in the Fall of

1998.


4.1 Architecture

PhraseFinder takes tagged documents as input (the Church tagger is employed to assign each

word a part-of-speech), selects terms and phrases, and creates associations between these

thesaural terms.

PhraseFinder distinguishes the following hierarchy in a text document:

Text Object → Paragraph → Sentence → Phrase → Word

Here the text object is simply the whole document, a paragraph is defined as either a “natural paragraph” or a fixed number of sentences, and a phrase can be whatever fits a “phrase rule”. Phrase rules are specified by their part-of-speech components and the restriction that a single phrase cannot span more than one sentence. A simple stopword list plus stemming is applied to individual words, but phrases are treated more conservatively.

Paragraph limits (maximum number of sentences in a paragraph) also mark the limits for association finding. Associations are built only within the same paragraph, and have the following structure:

<termId, phraseId, associationFrequency>

where

associationFrequency = termFrequency x phraseFrequency

Since most associations (70%) occur only once in the TIPSTER database, and 90% only once

in the same document, association filtering is performed as follows:

- If an association has a frequency of 1, it is discarded

- If a simple term has too many associations, it is discarded as too general

This has the effect of both reducing the storage size and improving the search capabilities of

the thesaurus.
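The association building and filtering described above might be sketched as follows. This is not PhraseFinder's actual code. It assumes that each paragraph has already been reduced to a list of terms and a list of phrases, that association frequencies are summed over paragraphs, and that “too many associations” is decided by a hypothetical `max_associations_per_term` limit applied after the frequency filter.

```python
from collections import Counter, defaultdict


def build_associations(paragraphs, max_associations_per_term=1000):
    """Build filtered {(term, phrase): associationFrequency} entries.

    paragraphs is a list of (terms, phrases) pairs, one per paragraph.
    """
    association_freq = Counter()
    for terms, phrases in paragraphs:
        term_freq = Counter(terms)
        phrase_freq = Counter(phrases)
        # Within one paragraph: termFrequency x phraseFrequency.
        for term, tf in term_freq.items():
            for phrase, pf in phrase_freq.items():
                association_freq[(term, phrase)] += tf * pf

    # Filter 1: discard associations with a frequency of 1.
    kept = {pair: f for pair, f in association_freq.items() if f > 1}

    # Filter 2: discard terms with too many associations as too general.
    per_term = defaultdict(int)
    for term, _phrase in kept:
        per_term[term] += 1
    return {
        pair: f
        for pair, f in kept.items()
        if per_term[pair[0]] <= max_associations_per_term
    }


paragraphs = [
    (["thesaurus", "query"], ["query expansion"]),
    (["thesaurus"], ["query expansion", "information retrieval"]),
]
```

For the two toy paragraphs, only the association between “thesaurus” and “query expansion” survives, with a frequency of 2; the other associations occur only once and are discarded.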

4.2 Access to the thesaurus and query expansion

Access to the thesaurus is done via InQuery, an IR system based on probabilistic (Bayesian)

inference networks.

The associations for each term are added as “pseudo-documents”, and this “pseudo-database”

is later searched to find the relevant phrases for a given query. The output is ranked by the

search engine.

The original query is then expanded with these results, although the weighting of the added query terms is still done manually (smaller for smaller collections). When deciding which phrases to add to a query, one of the following options has to be chosen:

- duplicates: add only those phrases in which all the words were already present in the original query (this “reweights” the original query)

- nonduplicates: add only those phrases in which at least one word is not present in the original query

- both
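The three options can be illustrated with a short Python sketch. The function name `select_phrases` and the mode strings are assumptions made for this example; words are compared after simple lowercasing and whitespace splitting, whereas a real system would compare normalized (stemmed) forms.

```python
def select_phrases(query, ranked_phrases, mode="both"):
    """Filter the ranked thesaurus phrases according to the expansion mode."""
    if mode not in ("duplicates", "nonduplicates", "both"):
        raise ValueError("unknown mode: " + mode)
    query_words = set(query.lower().split())
    selected = []
    for phrase in ranked_phrases:
        # A duplicate phrase contains only words already present in the query.
        is_duplicate = set(phrase.lower().split()) <= query_words
        if mode == "both":
            selected.append(phrase)
        elif mode == "duplicates" and is_duplicate:
            selected.append(phrase)
        elif mode == "nonduplicates" and not is_duplicate:
            selected.append(phrase)
    return selected


query = "thesaurus construction methods"
ranked = ["thesaurus construction", "automatic thesaurus", "query expansion"]
```

With the sample query, the “duplicates” mode keeps only “thesaurus construction”, the “nonduplicates” mode keeps the other two phrases, and “both” keeps all three in their ranked order.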

4.3 Results

The experimental results from this system are divided into two parts: those used to set up particular parameters for the system (e.g., phrase rules), tested on smaller collections, and those designed to test broader assumptions, done on larger collections.

The “small collection” used is NPL, an 11,429-document collection with titles and abstracts in the area of physics.


The “big one” is TIPSTER, which includes 742,358 full-text documents from various sources (San Jose Mercury News, Associated Press, Federal Register, ...). Generating a thesaurus for the whole TIPSTER database takes about 2 weeks on a 1993 computer.

[Figure: Best type of word. Percent improvement (y axis) for each phrase rule (x axis: {V, G, D}, {J, R}, {N}, All), with one series each for Duplicates, Nodup and Both.]

Verbs are the least useful, and adjectives and adverbs alone perform better than an “anything goes” approach. Clearly, nouns are the most informative (although their effectiveness is most dramatic when used to reweight a query).

[Figure: Best phrase rule. Percent improvement (y axis) for each phrase rule (x axis: {NNN, JNN, JJN, NN, JN, N}, {NNN, NN, N}, {NNN, NN}), with one series each for Duplicates, Nondup and Both.]


There is no advantage in adding adjectives to the phrase rule.

It is also important not to forget individual nouns, as they seem to convey much meaning. The comparison between these last two graphs points strongly in favor of noun phrases as the phrase rule.

[Figure: Sample vs whole. Precision (y axis) against recall (x axis) for the Original, TIPSAMP and TIPFULL runs.]

The difference between a thesaurus constructed with a full database (TIPFULL) and one

constructed by taking 1 of every 5 documents from this same collection (TIPSAMP) is

practically nonexistent. The ideal sample size was not calculated, though. The phrase rule

used is “noun-phrases” (that is, {NNN, NN, N}).

A related result shows that a thesaurus built for one collection can be used successfully to

improve search in a separate but similar one.

[Figure: On-line use of the thesaurus (short queries). Precision (y axis) against recall (x axis) for the Original, Dup, Nodup and Both runs.]

The preceding runs on TIPSTER were done using the full length of the predefined queries. On-line queries are usually much simpler and consist of few words. Online performance was measured by dropping the description fields of the TIPSTER queries. The improvement due to the use of a thesaurus is greater than before and, as expected, “Both”-type query expansion provides the best results.

5. Bibliography

Srinivasan, Padmini. 1992. "Thesaurus Construction." In Information Retrieval, edited by W. B. Frakes and R. Baeza-Yates. Englewood Cliffs, NJ: Prentice Hall: 161-218

[no online version]

Yufeng Jing and W. Bruce Croft. An association thesaurus for information retrieval. In Proc. of Intelligent Multimedia Retrieval Systems and Management Conference (RIAO), pages 146-160, 1994.

http://www.cs.umass.edu/Dienst/UI/2.0/Describe/ncstrl.umassa_cs/UM-CS-1994-017?abstract=thesaur

TIPSTER project

http://www.itl.nist.gov/iaui/894.02/related_projects/tipster/

INQUERY information retrieval system

http://ciir.cs.umass.edu/demonstrations/InQueryRetrievalEngine.html

Merriam-Webster online dictionary and thesaurus

http://www.m-w.com

More information…

http://www.nrc.ca/irc/thesaurus/roofing/report_b.html

And of course,

http://www.google.com